May the Speed Be with You: 20K QPS on Rockset

May 31, 2023

Scalability, performance and efficiency are the key considerations behind Rockset’s design and architecture. Today, we are thrilled to share a remarkable milestone in one of these dimensions. A customer workload achieved 20K queries per second (QPS) with a query latency (p95) of under 200ms, while continuously ingesting streaming data, marking a significant demonstration of the scalability of our systems. This technical blog highlights the architecture that paved the way for this accomplishment.

Understanding real-time workloads

High QPS is often crucial for organizations that require real-time or near-real-time processing of a significant volume of queries. These can range from online marketplaces that need to handle a large number of customer queries and product searches to retail platforms that need high QPS to serve personalized recommendations in real time. In most of these real-time use cases, new data never stops arriving and queries never stop either. A database that serves real-time analytical queries has to process reads and writes concurrently. Doing so at high QPS raises several challenges:

- Scalability: To serve a high volume of incoming queries, the system must be able to distribute the workload across multiple nodes and scale horizontally as needed.

- Workload Isolation: When real-time data ingestion and query workloads run on the same compute units, they directly compete for resources. When data ingestion has a flash flood moment, your queries slow down or time out, making your application flaky. When you have a sudden, unexpected burst of queries, your data lags, making your application not so real time anymore.

- Query Optimization: When data sizes are large, you cannot afford to scan large portions of your data to respond to queries, especially when the QPS is high as well. Queries need to lean heavily on the underlying indexes to reduce the amount of compute needed per query.

- Concurrency: High query rates can lead to contention for locks, causing performance bottlenecks or deadlocks. Implementing effective concurrency control mechanisms is necessary to maintain data consistency and prevent performance degradation.

- Data Sharding and Distribution: Efficiently sharding and distributing data across multiple nodes is essential for parallel processing and load balancing.

Let's discuss each of the above points in more detail and analyze how the Rockset architecture helps.

How Rockset architecture enables QPS scaling

Scale: Rockset separates compute from storage. A Rockset Virtual Instance (VI) is a cluster of compute and cache resources. It is completely separate from the hot storage tier, an SSD-based distributed storage system that stores the user’s dataset and serves requests for data blocks from the software running on the Virtual Instance. The critical requirement is that multiple Virtual Instances can update and read the same dataset residing on hot storage. A data update made from one Virtual Instance is visible on the other Virtual Instances within a few milliseconds.

Now, you can well imagine how easy it is to scale the system up or down. When the query volume is low, just use one Virtual Instance to serve queries. When the query volume increases, spin up a new Virtual Instance and distribute the query load across all the Virtual Instances. These Virtual Instances do not need a new copy of the data; instead, they all fetch data from the hot storage tier. Because no data replicas need to be made, scale-up is fast.
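To make the scale-out step concrete, here is a minimal sketch of spreading queries across a growing pool of Virtual Instances. It assumes simple round-robin routing on the client side; the `RoundRobinRouter` class and the instance names are hypothetical and are not part of any Rockset API.

```python
import itertools
from collections import Counter


class RoundRobinRouter:
    """Spread queries evenly across a changeable pool of Virtual Instances."""

    def __init__(self, instances):
        self._instances = list(instances)
        self._cycle = itertools.cycle(self._instances)

    def add_instance(self, name):
        # Scaling out only changes the routing pool. No data is copied,
        # because every instance reads from the same shared storage tier.
        self._instances.append(name)
        self._cycle = itertools.cycle(self._instances)

    def route(self, query):
        # Return the name of the instance that should run this query.
        return next(self._cycle)


# Low query volume: a single instance takes everything.
router = RoundRobinRouter(["vi-1"])
low_load = Counter(router.route(f"q{i}") for i in range(4))

# Query volume rises: add instances and the load spreads out.
for name in ["vi-2", "vi-3", "vi-4"]:
    router.add_instance(name)
high_load = Counter(router.route(f"q{i}") for i in range(8))

print(low_load)
print(high_load)
```

In practice a load balancer would usually play this role; the point of the sketch is only that adding an instance changes routing, not data placement.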

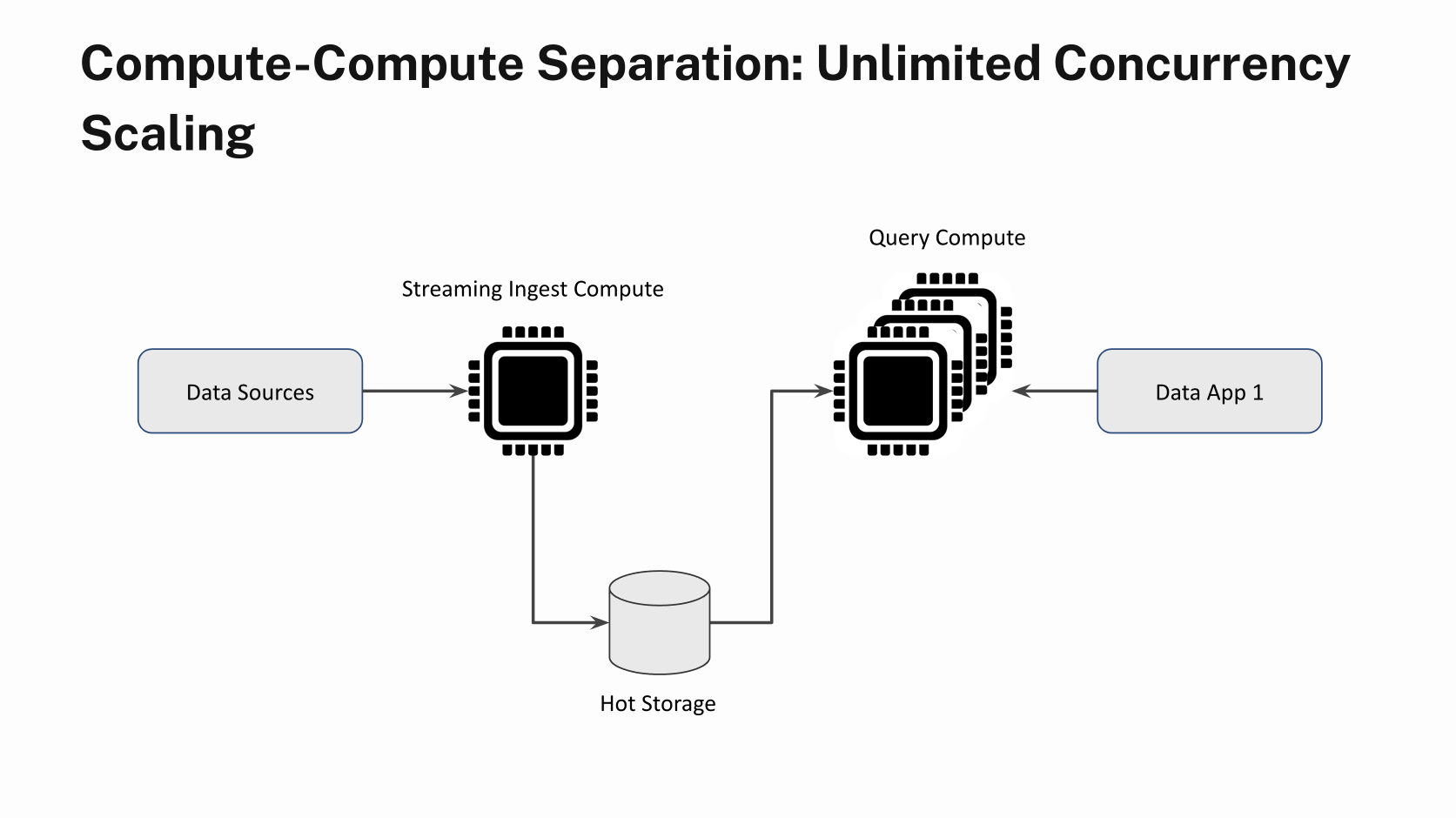

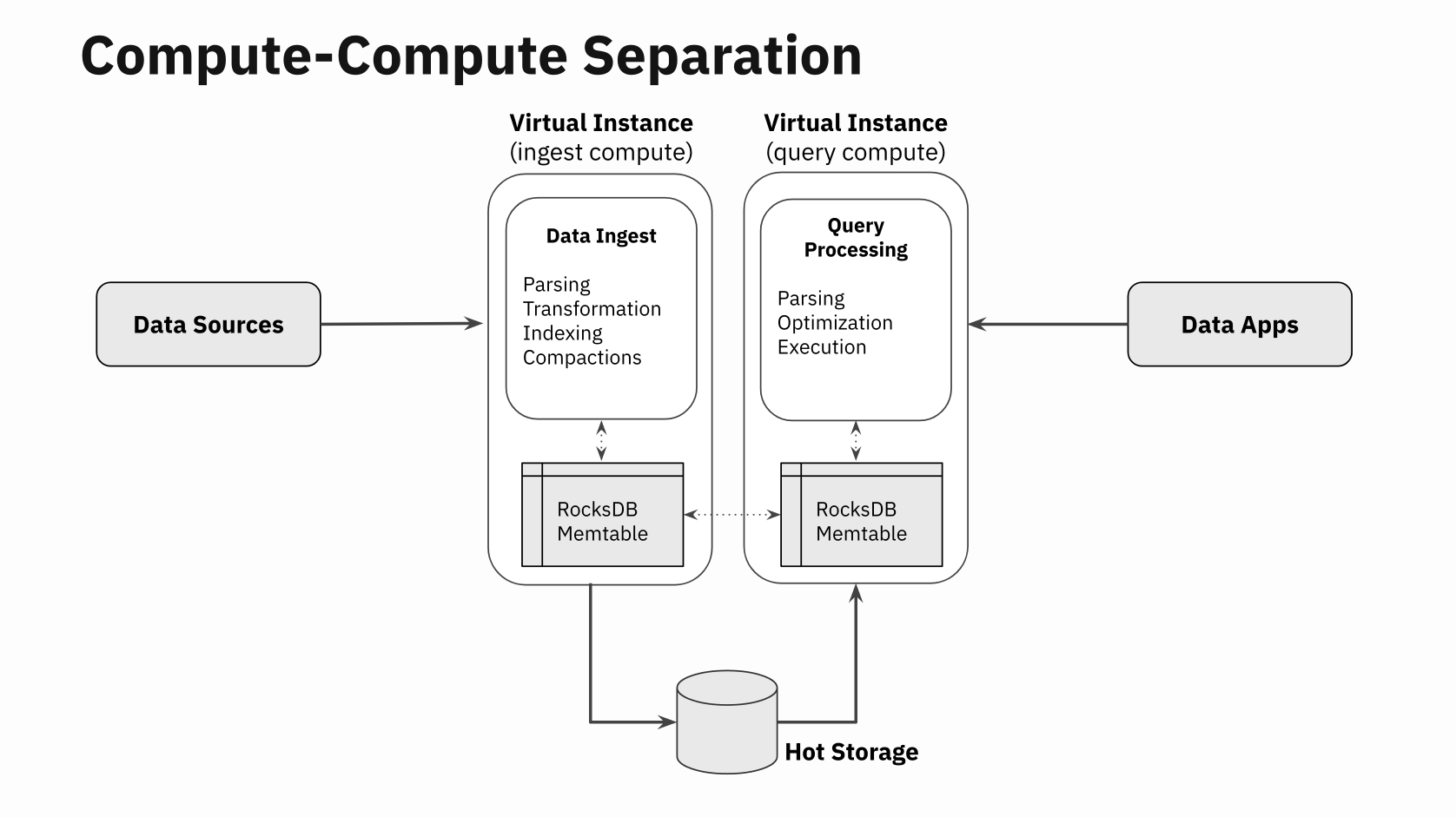

Workload Isolation: Every Virtual Instance in Rockset is completely isolated from any other Virtual Instance. You can have one Virtual Instance processing new writes and updating the hot storage, while a different Virtual Instance can be processing all the queries. The benefit of this is that a bursty write system does not impact query latencies. This is one reason why p95 query latencies are kept low. This design pattern is called Compute-Compute Separation.

Query Optimization: Rockset uses a Converged Index to narrow down the query to process the smallest sliver of data needed for that query. This reduces the amount of compute needed per query, thus improving QPS. It uses the open-source storage engine called RocksDB to store and access the Converged Index.
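As a rough illustration of why an index lookup needs less compute than a scan, the sketch below compares a full scan with a lookup in a plain in-memory inverted index. This is not Rockset's Converged Index or RocksDB; the documents, field names, and helper functions are hypothetical.

```python
from collections import defaultdict

# Hypothetical documents, used only for illustration.
docs = {
    1: {"processBy": "team-a", "emails": ["ann@example.com", "bob@example.com"]},
    2: {"processBy": "team-b", "emails": ["cat@example.com"]},
    3: {"processBy": "team-a", "emails": ["bob@example.com"]},
}


def as_values(value):
    # Treat scalars and arrays uniformly as a list of values.
    return value if isinstance(value, list) else [value]


def build_inverted_index(documents):
    """Map (field, value) to the set of document ids containing that value."""
    index = defaultdict(set)
    for doc_id, doc in documents.items():
        for field, value in doc.items():
            for v in as_values(value):
                index[(field, v)].add(doc_id)
    return index


def scan(documents, field, wanted):
    """Baseline: touch every document to answer the query."""
    return {
        doc_id
        for doc_id, doc in documents.items()
        if wanted in as_values(doc[field])
    }


index = build_inverted_index(docs)

# One dictionary lookup, regardless of how many documents exist.
by_index = index.get(("emails", "bob@example.com"), set())
# Work proportional to the total number of documents.
by_scan = scan(docs, "emails", "bob@example.com")

print(by_index == by_scan)
```

Both paths return the same documents, but the indexed path does work proportional to the number of matches rather than the size of the collection.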

Concurrency: Rockset employs query admission control to maintain stability under heavy load, so that the system does not try to run too many things concurrently and get worse at all of them. It enforces this through two settings: the Concurrent Query Execution Limit, which specifies the total number of queries allowed to be processed concurrently, and the Concurrent Query Limit, which decides how many queries that overflow from the execution limit can be queued for execution.

This is especially important when the QPS is in the thousands; if we process all incoming queries concurrently, context switches and other overhead cause all the queries to take longer. A better approach is to concurrently process only as many queries as needed to keep all the CPUs at full throttle, and queue any remaining queries until there is available CPU. Rockset’s Concurrent Query Execution Limit and Concurrent Query Limit settings allow you to tune these queues based on your workload.
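The interplay of the two limits can be sketched as a small admission controller. This is a simplified model, not Rockset's implementation: `execution_limit` stands in for the Concurrent Query Execution Limit, `queue_limit` for the number of overflow queries that may wait, and rejecting queries once the queue is full is an assumption made for the example.

```python
from collections import deque


class AdmissionController:
    """Run at most `execution_limit` queries; queue up to `queue_limit` more."""

    def __init__(self, execution_limit, queue_limit):
        self.execution_limit = execution_limit
        self.queue_limit = queue_limit
        self.running = set()
        self.queued = deque()

    def submit(self, query_id):
        if len(self.running) < self.execution_limit:
            self.running.add(query_id)
            return "running"
        if len(self.queued) < self.queue_limit:
            self.queued.append(query_id)
            return "queued"
        # Assumption: anything beyond both limits is turned away.
        return "rejected"

    def finish(self, query_id):
        self.running.discard(query_id)
        # A slot opened up, so promote the oldest waiting query.
        if self.queued and len(self.running) < self.execution_limit:
            self.running.add(self.queued.popleft())


ctl = AdmissionController(execution_limit=2, queue_limit=1)
outcomes = [ctl.submit(q) for q in ["q1", "q2", "q3", "q4"]]
print(outcomes)

ctl.finish("q1")
print(sorted(ctl.running), list(ctl.queued))
```

Capping the running set keeps the CPUs busy without oversubscribing them, while the bounded queue absorbs short bursts instead of letting every query slow down.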

Data Sharding: Rockset uses document sharding to spread its data across multiple nodes in a Virtual Instance. A single query can leverage compute from all the nodes in a Virtual Instance. This helps with simplified load balancing, data locality and improved query performance.
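A minimal sketch of document sharding with a scatter-gather query follows. It assumes hash-based placement by document id and uses threads to stand in for nodes; the shard count, the `_id` values, and the helper functions are hypothetical, and Rockset's actual placement scheme may differ.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

NUM_SHARDS = 4  # hypothetical number of nodes in one Virtual Instance


def shard_for(doc_id):
    # A stable hash, so the same document always lands on the same shard.
    return zlib.crc32(doc_id.encode("utf-8")) % NUM_SHARDS


# Distribute 20 hypothetical documents across the shards.
shards = [[] for _ in range(NUM_SHARDS)]
for i in range(20):
    doc = {"_id": f"doc-{i}", "processBy": "team-a" if i % 5 == 0 else "team-b"}
    shards[shard_for(doc["_id"])].append(doc)


def search_shard(shard, field, value):
    # Each node filters only its own slice of the data.
    return [d["_id"] for d in shard if d.get(field) == value]


def scatter_gather(field, value):
    # Fan the query out to every shard in parallel, then merge the results.
    with ThreadPoolExecutor(max_workers=NUM_SHARDS) as pool:
        partials = list(pool.map(lambda s: search_shard(s, field, value), shards))
    return sorted(doc_id for part in partials for doc_id in part)


result = scatter_gather("processBy", "team-a")
print(result)
```

Because every shard holds whole documents, each node can evaluate the predicate locally and the coordinator only has to merge small partial results.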

A peek into the customer workload

Data and queries: The dataset for this customer was 4.5TB in size with a total of 750M rows. Average document size was ~9KB with mixed types and some deeply nested fields. The workload consists of two types of queries:

select * from collection_name where processBy = :processBy

select * from collection_name where array_contains(emails, :email)

The predicate in each query is parameterized so that each run picks a different parameter value at query time.
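As an illustration of how a load generator might vary the parameter on every run, here is a small sketch that pairs each query text with a randomly chosen value. The parameter pools and the shape of the request dictionary are hypothetical; they are not the customer's data or Rockset's request format.

```python
import random

# The two query shapes from this workload, with the name of their parameter.
QUERIES = [
    ("select * from collection_name where processBy = :processBy", "processBy"),
    ("select * from collection_name where array_contains(emails, :email)", "email"),
]

# Hypothetical value pools to draw parameters from.
PARAM_VALUES = {
    "processBy": ["team-a", "team-b", "team-c"],
    "email": ["ann@example.com", "bob@example.com"],
}


def next_request(rng):
    """Pick a query shape and bind a fresh value to its parameter."""
    sql, param = rng.choice(QUERIES)
    return {"sql": sql, "parameters": {param: rng.choice(PARAM_VALUES[param])}}


rng = random.Random(42)  # seeded so runs are repeatable
requests = [next_request(rng) for _ in range(5)]
for request in requests:
    print(request)
```

Keeping the SQL text fixed and varying only the bound value means each request exercises the same query plan against different data, so the results are not skewed by repeatedly hitting the same cached rows.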

A Rockset Virtual Instance is a cluster of compute and cache and comes in T-shirt sizes. In this case, the workload uses multiple 8XL Virtual Instances for queries and a single XL Virtual Instance to process concurrent updates. An 8XL has 256 vCPUs while an XL has 32 vCPUs.

Here is a sample document. Note the deep levels of nesting in these documents. Unlike with other OLAP databases, you do not need to flatten these documents when you store them in Rockset, and a query can access any field in the nested document without impacting QPS.

Updates: A continuous stream of updates to existing records flows in at about 10 MB/sec. This update stream is continuously processed by an XL Virtual Instance. The updates are visible to all Virtual Instances in this setup within a few milliseconds. A separate set of Virtual Instances is used to process the query load, as described below.

Demonstrating QPS scaling linearly with compute resources

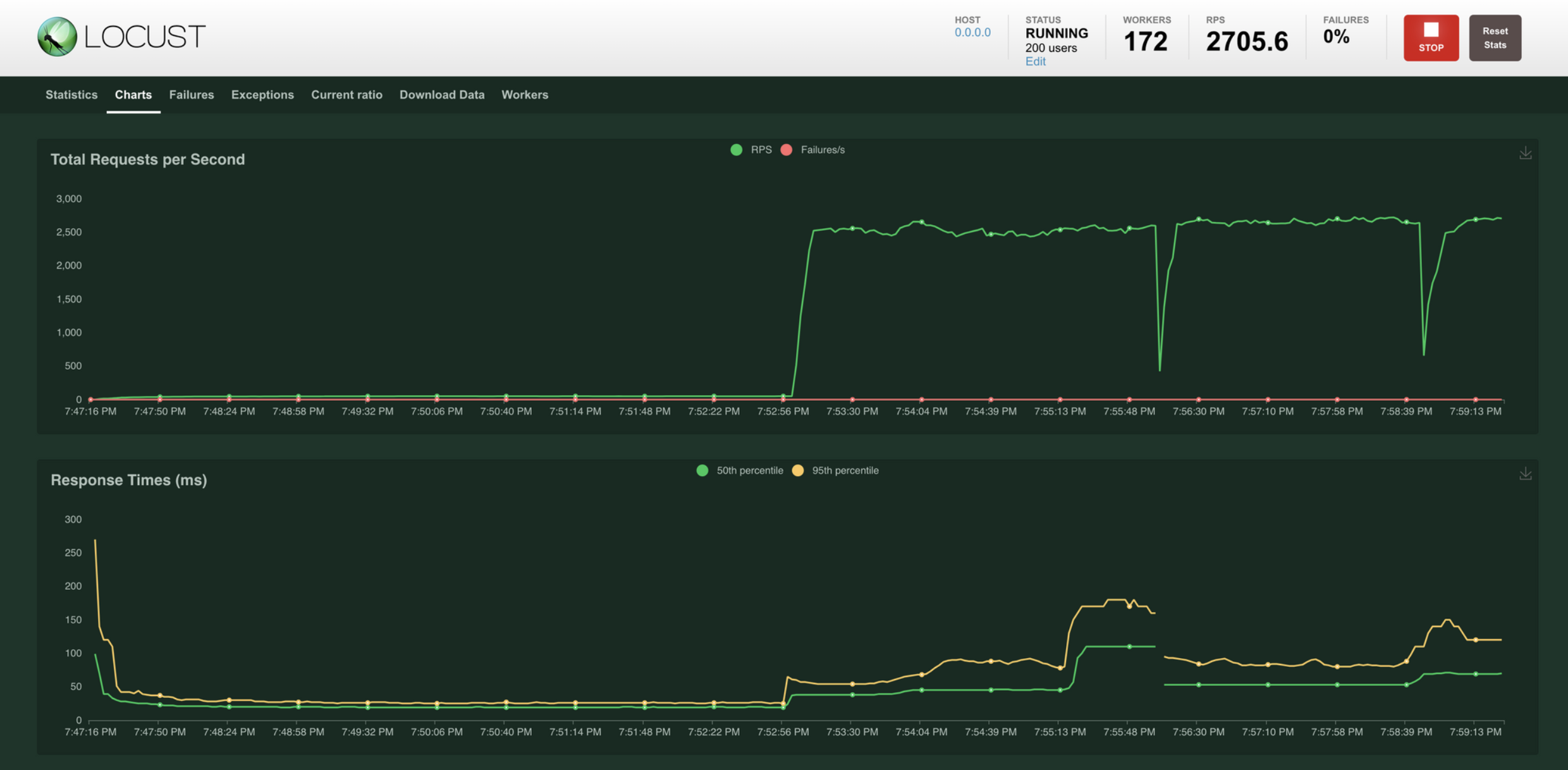

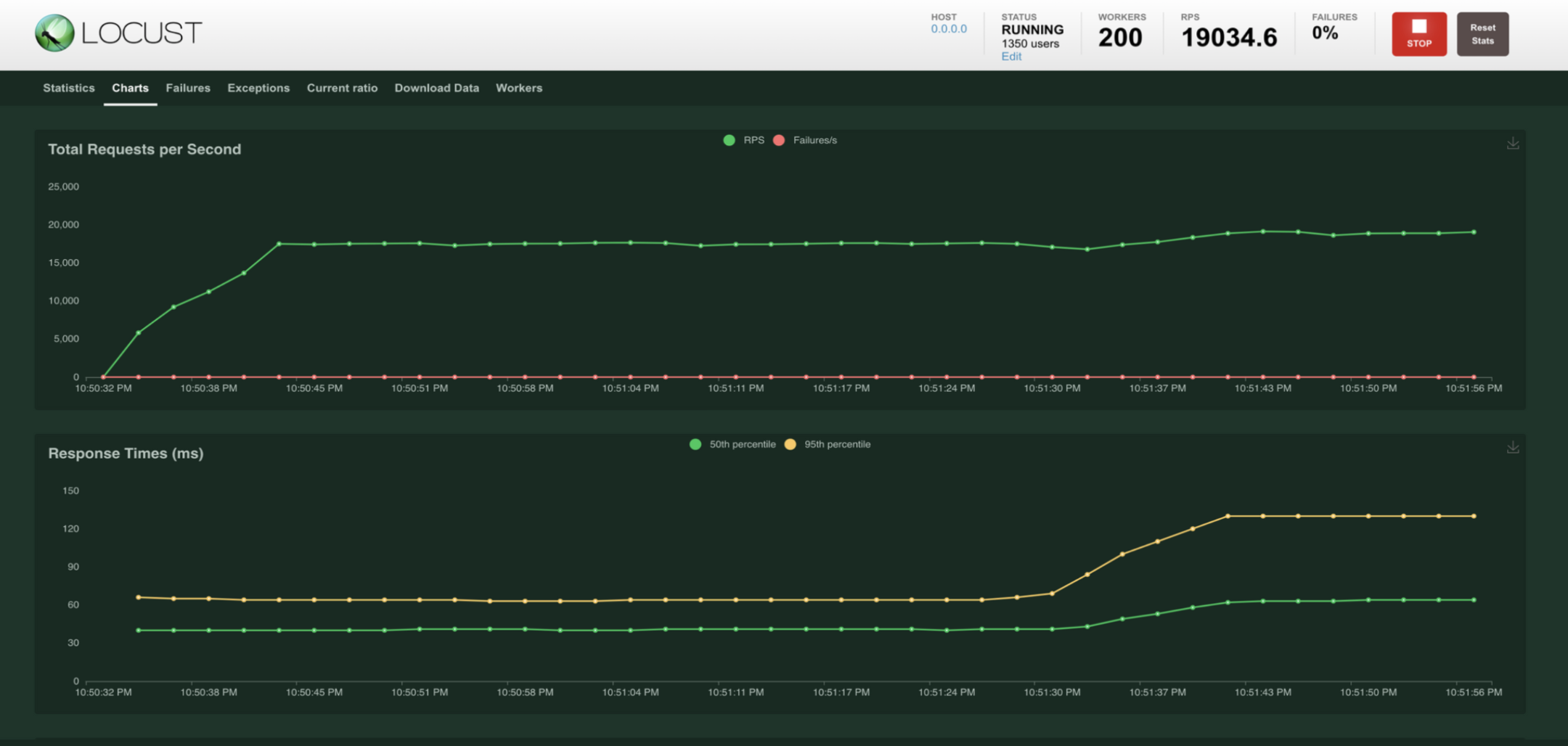

A distributed query generator based on Locust was used to drive up to 20K QPS on the customer dataset. Starting with a single 8XL Virtual Instance, we observed that it sustained around 2700 QPS at sub-200ms p95 query latency.

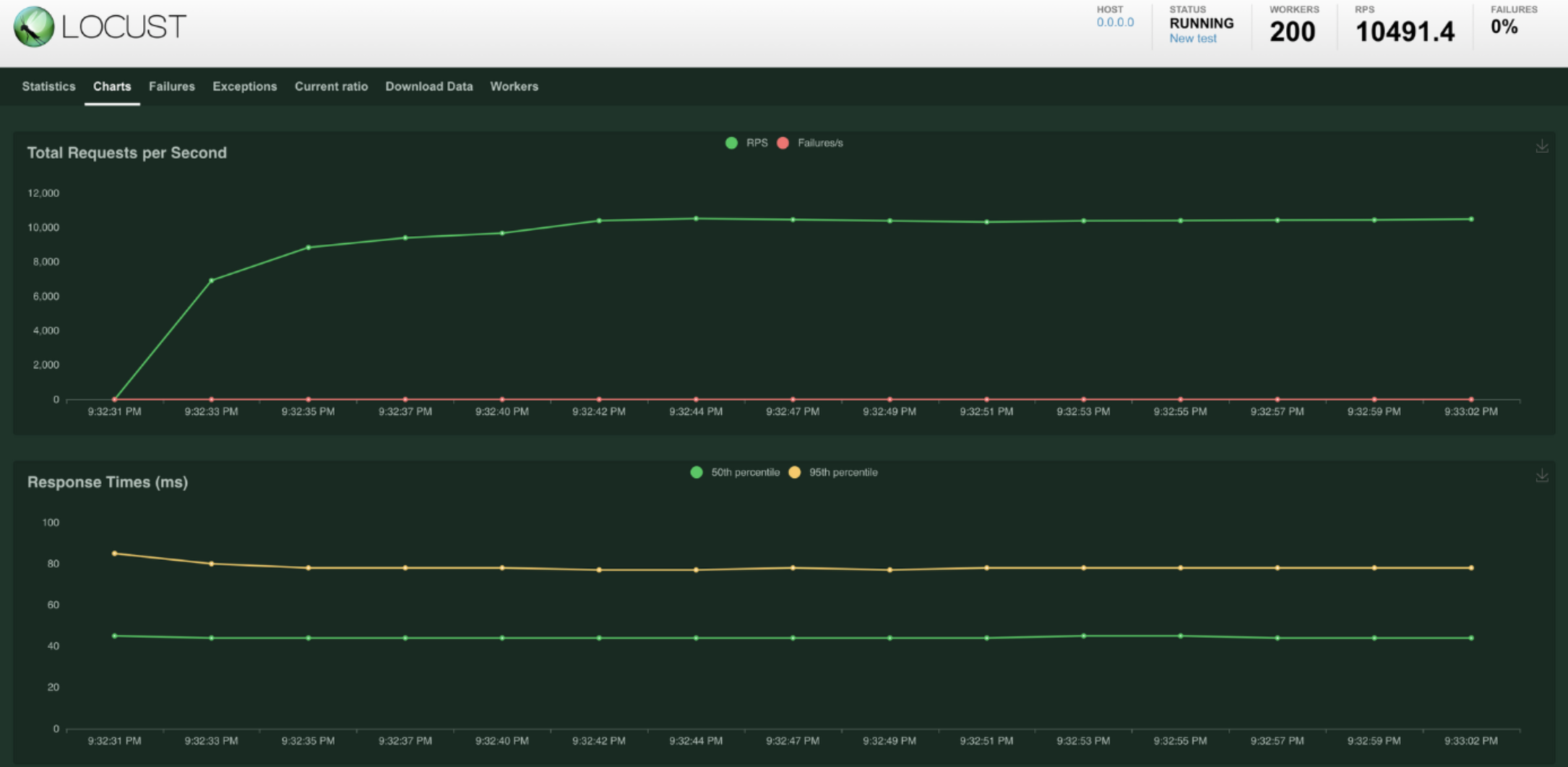

After scaling out to four 8XL Virtual Instances, we observed that it sustained around 10K QPS at sub-200ms p95 query latency.

And after scaling to eight 8XL Virtual Instances, we observed that it continued to scale near-linearly and sustained around 19K QPS at sub-200ms p95 query latency!
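To quantify how close to linear these results are, the sketch below computes per-instance throughput and scaling efficiency relative to the single-instance baseline. The inputs are the approximate ("around") figures quoted above, so the outputs are rough as well; the `scaling_efficiency` helper is ours.

```python
# Approximate sustained QPS by number of 8XL Virtual Instances (from the text).
measurements = {1: 2700, 4: 10_000, 8: 19_000}


def scaling_efficiency(results):
    """Return {instances: (qps_per_instance, fraction_of_ideal_linear_qps)}."""
    baseline = results[1]
    return {
        n: (qps / n, qps / (n * baseline))
        for n, qps in results.items()
    }


efficiency = scaling_efficiency(measurements)
for n, (per_instance, fraction) in efficiency.items():
    print(f"{n} x 8XL: {per_instance:.0f} QPS per instance, {fraction:.1%} of linear")
```

With these rounded figures, four instances deliver roughly 93% of perfectly linear throughput and eight deliver roughly 88%.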

Data freshness

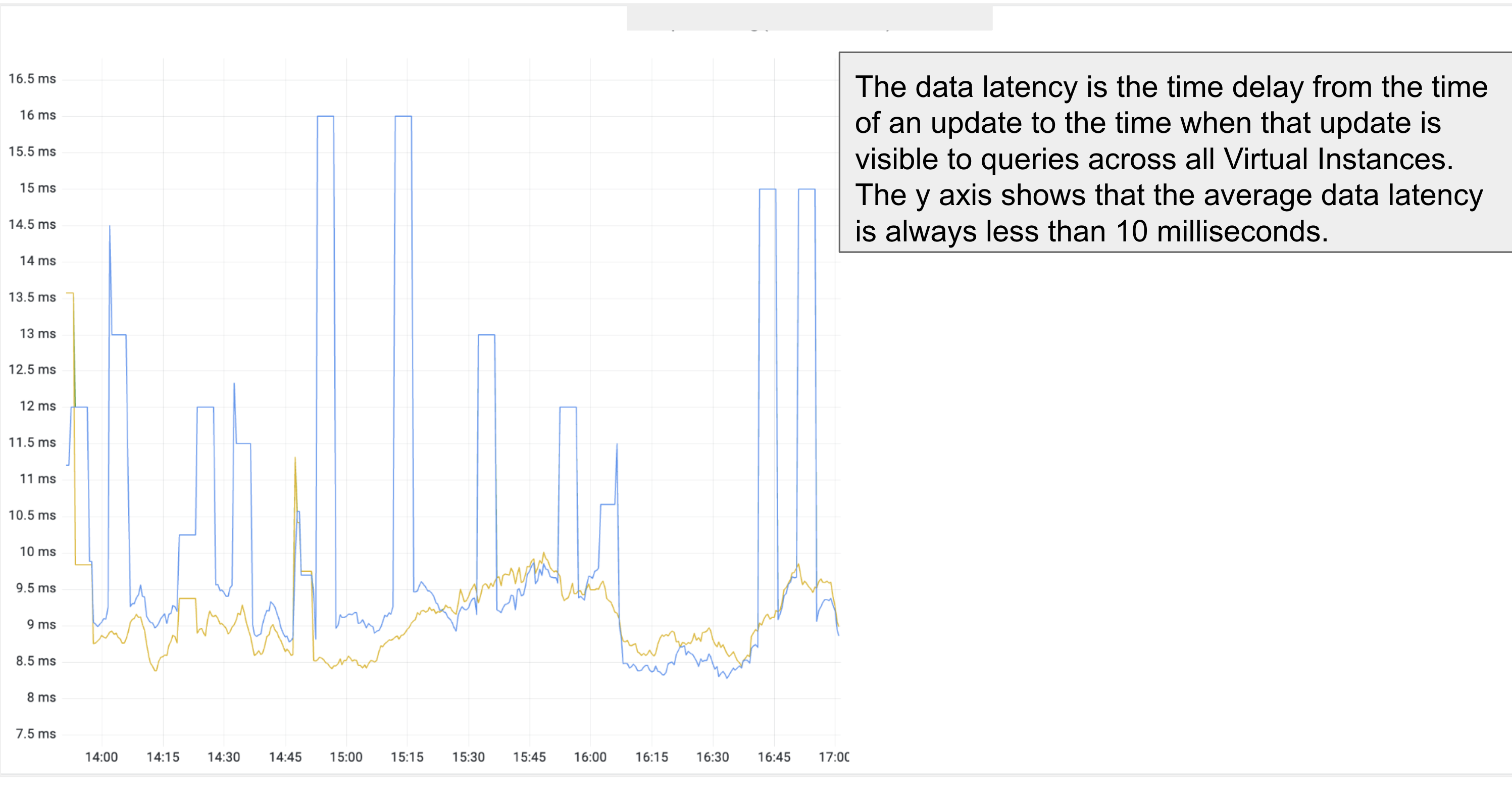

The data updates are occurring on one Virtual Instance and the queries are occurring on eight different Virtual Instances. So, the natural question that arises is, “Are the updates visible on all Virtual Instances, and if so, how long does it take for the updates to be visible in queries?”

The data freshness metric, also called data latency, is in single-digit milliseconds across all the Virtual Instances, as shown in the graph above. This is a true measure of the real-time nature of Rockset under high write rates and high QPS!

Takeaways

The results show that Rockset can attain near-linear QPS scale-up: it is as easy as creating new Virtual Instances and spreading out the query load to all the Virtual Instances. There is no need to make replicas of data. And at the same time, Rockset continues to process updates concurrently. We are excited about the possibilities that lie ahead as we continue to push the boundaries of what is possible with high QPS.