A Real-Time Rockset Intern Experience

May 5, 2022

I spent the spring of my junior year interning at Rockset, and it couldn’t have been a better decision. When I first arrived at the office on a sunny day in San Mateo, I had no idea that I was about to meet so many systems engineering gurus, or that I was about to consume immensely good food from the festive neighboring streets. Working with my talented and resourceful mentor, Ben (Software Engineer, Systems), I’ve been able to learn more than I ever thought I could in three months! I now see myself as pretty well seasoned at C++ development, more understanding of different database architectures, and slightly better at Super Smash. Only slightly.

One thing I really appreciated was that even on the first day of the internship, I was able to push meaningful code by implementing the SUFFIXES SQL function, something that was desired by and directly impactful to Rockset's customers.

Over the course of my internship at Rockset, I got to dive deeper into many aspects of our systems backend, two of which I’ll go into more detail for. I got myself into way more segfaults and long hours spent debugging in GDB than I bargained for, which I can now say I came out the better end of. :D.

Query Sort Optimization

One of my favorite projects over this internship was to optimize our sort process for queries with the ORDER BY keyword in SQL. For example, queries like:

SELECT a FROM b ORDER BY c OFFSET 1000

would be able to run up to 45% faster with the offset-based optimization added, which is a huge performance improvement, especially for queries with large amounts of data.

We use operators in Rockset to separate responsibilities in the execution of a query, based on different processes such as scans, sorts and joins. One such operator is the SortOperator, which facilitates ordered queries and handles sorting. The SortOperator uses a standard library sort to power ordered queries, which is not receptive to timeouts during query execution since there is no framework for interrupt handling. This means that when using standard sorts, the query deadline is not enforced, and CPU is wasted on queries that should have already timed out.

Current sorting algorithms used by standard libraries are a strategic combination of the quicksort, heapsort and insertion sort, called introsort. Using a strategic loop and tail recursion, we can reduce the number of recursive calls made in the sort, thereby shaving a significant amount of time off the sort. Recursion also halts at a specific depth, after which either heapsort or insertion sort is used, depending on the number of elements in the interval. The number of comparisons and recursive calls made in a sort are very critical in terms of performance, and my mission was to reduce both in order to optimize larger sorts.

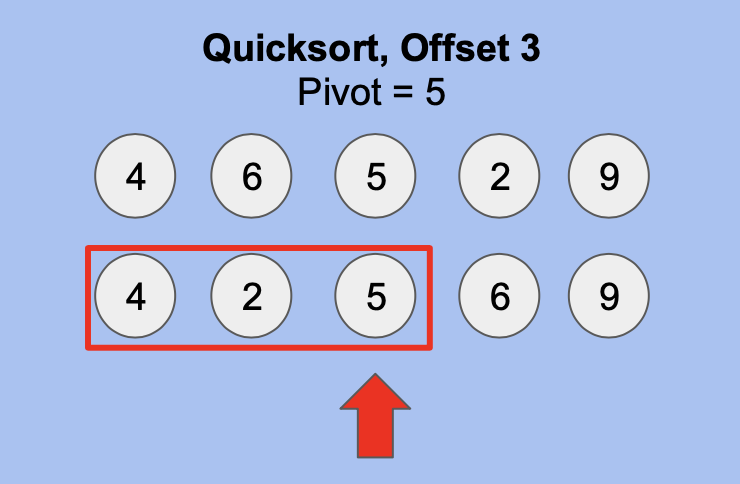

For the offset optimization, I was able to cut recursive calls by an amount proportional to the offset by keeping track of pivots used by previous recursive calls. Based on my modifications to introsort, we know that after a single partitioning, the pivot is in its correct position. Using this previous position, we can eliminate recursive calls before the pivot if its position is less than or equal to the offset requested.

For example, in the above image, we are able to halt recursion on the values before and including the pivot, 5, since its position is <= offset.

In order to serve cancellation requests, I had to make sure that these checks were both timely and done at regular intervals in a way that didn’t increase the latency of sorts. This meant that having cancellation checks correlated 1:1 with the number of comparisons or recursive calls directly would be very damaging to latency. The solution to this was to correlate cancellation checks with recursion depth instead, which through subsequent benchmarking I discovered that a recursion depth of >28 overall corresponded to one second of execution time between levels. For example, between a recursion depth of 29 & 28, there is ~1 second of execution. Similar benchmarks were used to determine when to check for cancellations in the heapsort.

This portion of my internship was heavily related to performance and involved meticulous benchmarking of query execution times, which helped me understand how to view tradeoffs in engineering. Performance time is critical since it is most likely a deciding factor in whether to use Rockset, since it determines how fast we can process data.

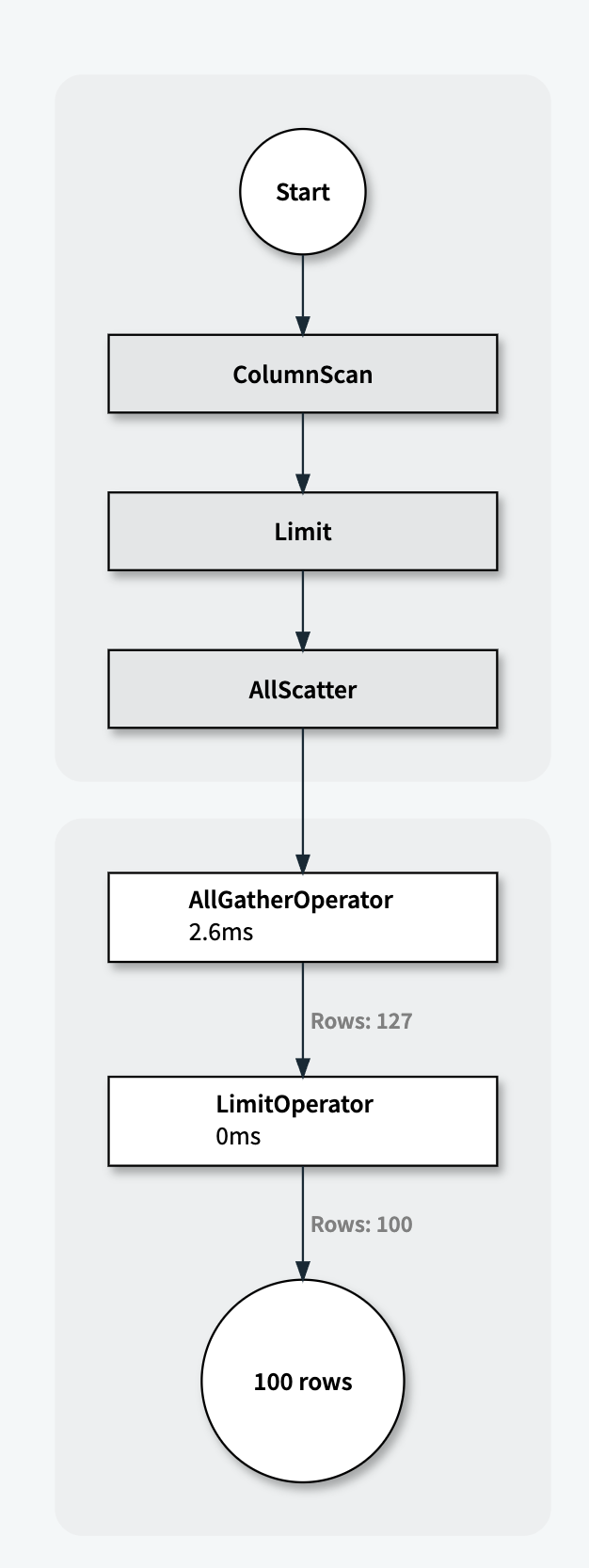

Batching QueryStats to Redis

Another interesting issue I worked on was decreasing the latency of Rockset’s Query Stats publisher after a query is run. Query Stats are important because they provide visibility into where the resources like CPU time and memory are used in query execution. These stats help our backend team to improve query execution performance. There are many different kinds of stats, especially for different operators, which explain how long their processes are taking and the amount of CPU they are using. In the future, we plan to share a visual representation of these stats with our users so they better understand resource utilization in Rockset's distributed query engine.

We currently send the stats from operators used in the execution of queries to intermediately store them in Redis, from where our API server is able to pull them into an internal tool. In the execution of complicated or larger queries, these stats are slow to populate, mostly due to the latency caused by tens of thousands of round trips to Redis.

My job was to decrease the number of trips to Redis by batching them by queryID, and ensure that query stats are populated while preventing spikes in the number of query stats waiting to be pushed. This efficiency improvement would aid us in scaling our query stats system to execute larger, more complex queries. This problem was particularly interesting to me since it deals with the exchange of data between two different systems in a batched and ordered fashion.

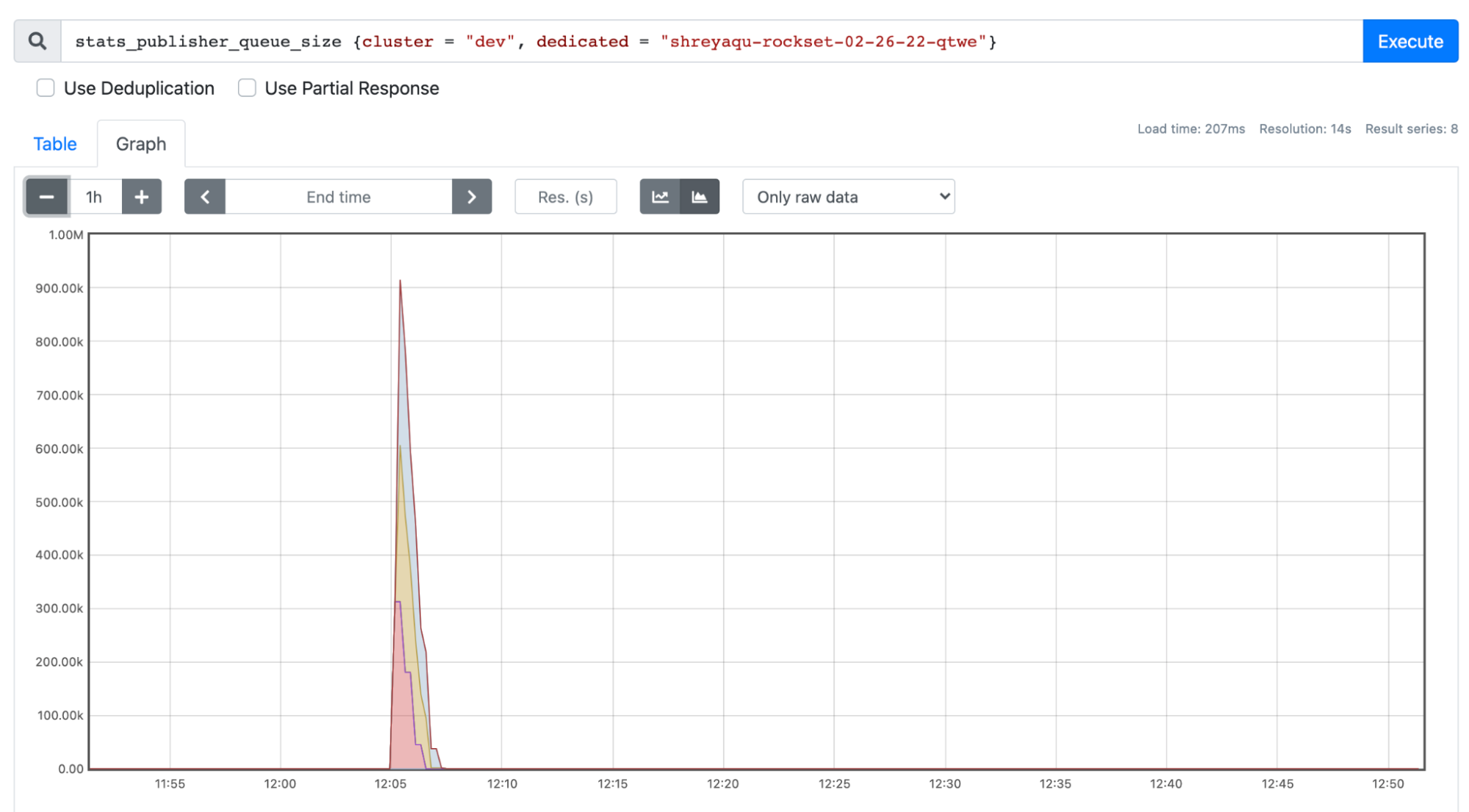

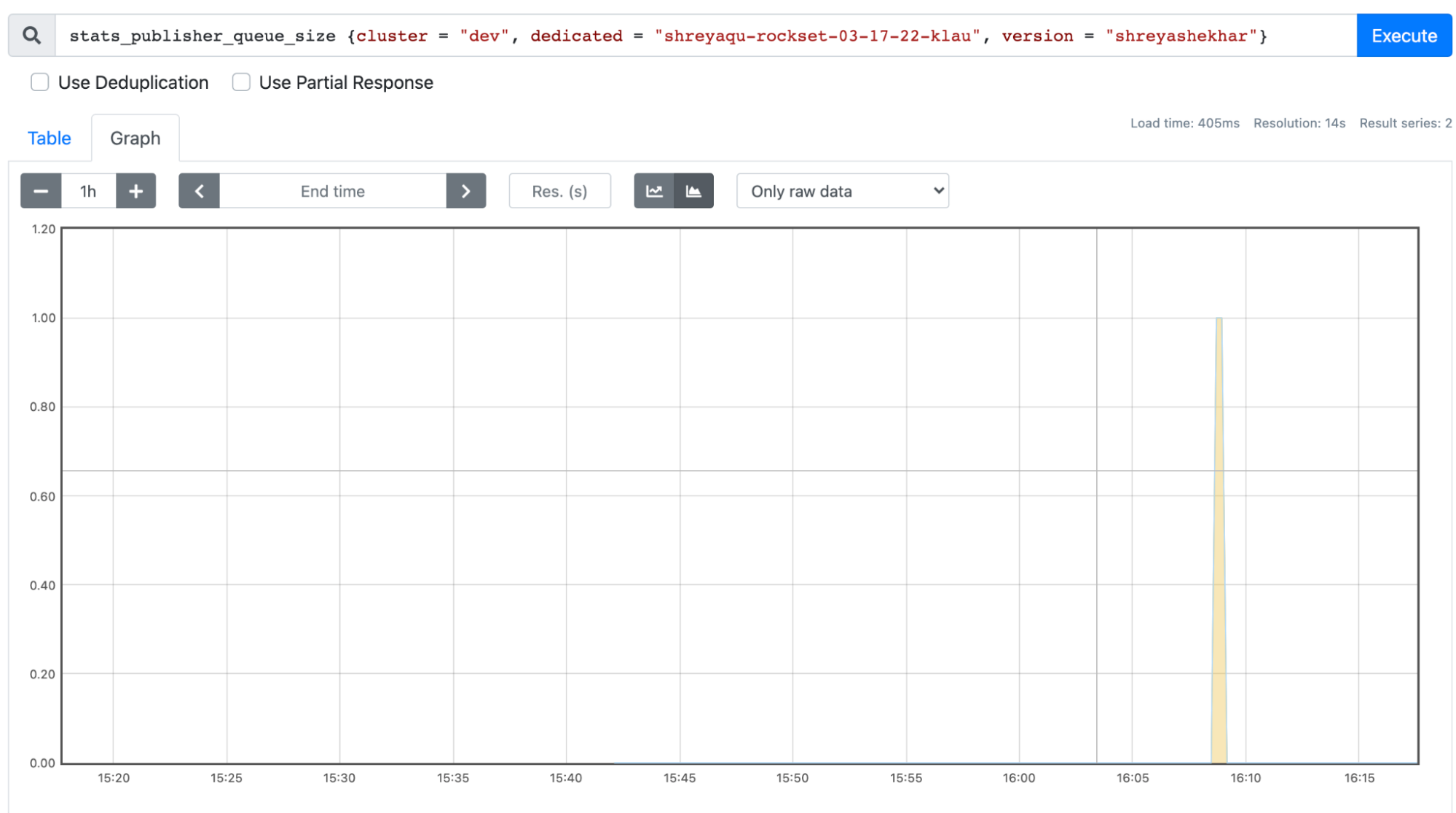

The solution to this issue involved the use of a thread safe map structure of queryID ->queue, which was used to store and unload querystats specific to a queryId. These stats were sent to Redis in as few trips as possible by eagerly unloading a queryID’s queue each time it has been populated, and pushing the entirety of the stats present to Redis. I also refactored the Redis API code we were using to send query stats, creating a function where multiple stats could be sent over instead of just one at a time. As shown in the images below, this dramatically decreased the spikes in query stats waiting to be sent to Redis, never letting multiple query stats from the same queryID fill up the queue.

As shown in the screenshots above, stats publisher queue size was drastically reduced from over 900k to a maximum of 1!

More About the Culture & The Experience

What I really appreciated about my internship experience at Rockset was the amount of autonomy I had over the work I was doing, and the high quality mentorship I received. My daily work felt similar to that of a full-time engineer on the systems team, since I was able to choose and work on tasks I felt were interesting to me while connecting with different engineers to learn more about the code I was working on. I was even able to reach out to other teams such as Sales and Marketing to learn more about their work and help out with aspects I found interesting.

Another aspect I loved was the close-knit community of engineers at Rockset, something I got a lot of exposure to at Hack Week, a week-long company hackathon that was held in Lake Tahoe earlier this year. This was an invaluable experience for me to meet other engineers at the company, and for all of us to hack away at features we felt should be integrated into Rockset’s product without the presence of normal daily tasks or obligations. I felt that this was an amazing idea, since it incentivized the engineers to work on ideas they were personally invested in related to the product and increased ownership for everyone as well. Not to mention, everyone from engineers to executives were present and working together in this hackathon, which made for an open and endearing company environment. We also had innumerable opportunities for bonding within the engineering teams on this trip, one of which was a huge loss for me in poker. And of course, the high stakes games of Super Smash.

Overall, my experience working as as an intern at Rockset was truly everything I had hoped for, and more.

Shreya Shekhar is studying Electrical Engineering & Computer Science and Business Administration at U.C. Berkeley.

Rockset is the leading Real-time Analytics Platform Built for the Cloud, delivering fast analytics on real-time data with surprising efficiency. Learn more at rockset.com.