Best Practices for Analyzing Kafka Event Streams

March 5, 2020

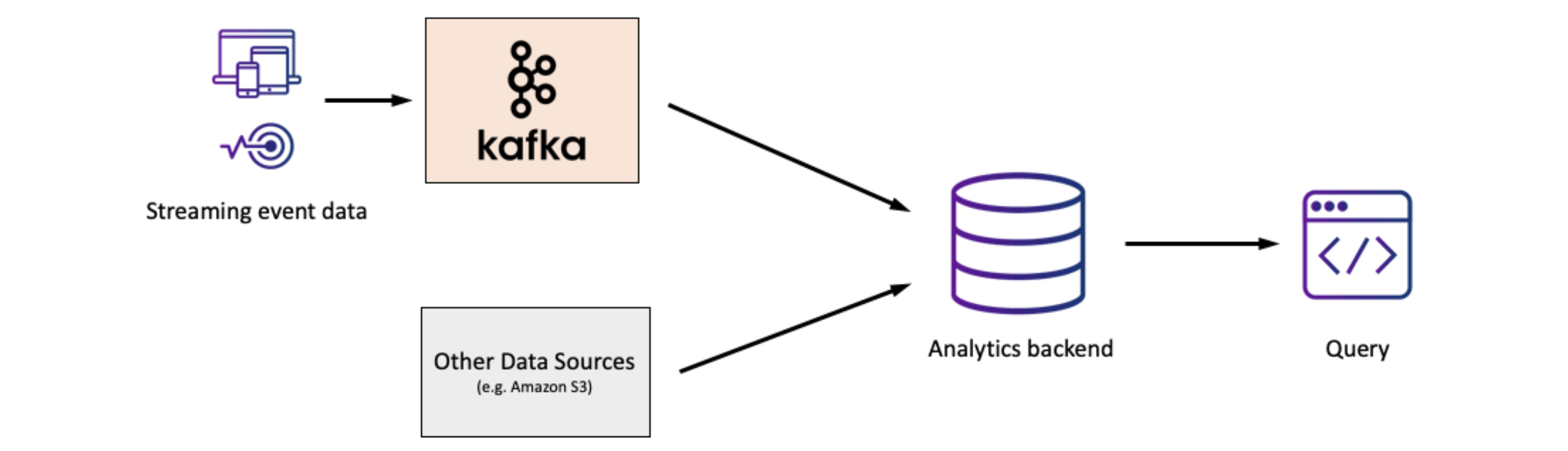

Apache Kafka has seen broad adoption as the streaming platform of choice for building applications that react to streams of data in real time. In many organizations, Kafka is the foundational platform for real-time event analytics, acting as a central location for collecting event data and making it available in real time.

While Kafka has become the standard for event streaming, we often need to analyze and build useful applications on Kafka data to unlock the most value from event streams. In one e-commerce example, Fynd analyzes clickstream data in Kafka to understand what’s happening in the business over the last few minutes. In the virtual reality space, a provider of on-demand VR experiences decides what content to offer based on large volumes of user behavior data generated in real time and processed through Kafka. So how should organizations think about implementing analytics on data from Kafka?

Considerations for Real-Time Event Analytics with Kafka

When selecting an analytics stack for Kafka data, we can break down key considerations along several dimensions:

- Data Latency

- Query Complexity

- Columns with Mixed Types

- Query Latency

- Query Volume

- Operations

Data Latency

How up to date is the data being queried? Keep in mind that complex ETL processes can add minutes to hours before the data is available to query. If the use case does not require the freshest data, then it may be sufficient to use a data warehouse or data lake to store Kafka data for analysis.

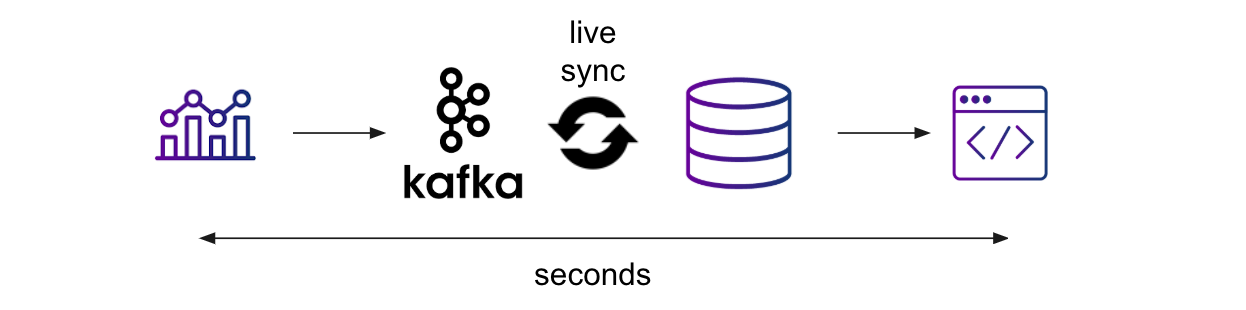

However, Kafka is a real-time streaming platform, so business requirements often necessitate a real-time database, which can provide fast ingestion and a continuous sync of new data, to be able to query the latest data. Ideally, data should be available for query within seconds of the event occurring in order to support real-time applications on event streams.
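To make the idea of data latency concrete, here is a minimal sketch that treats it as the gap between when an event occurred and when it first became queryable. The function names, the 5-second target, and the sample timestamps are illustrative assumptions, not values from any particular system.

```python
from datetime import datetime, timezone


def data_latency_seconds(event_time: datetime, queryable_time: datetime) -> float:
    """Seconds between an event occurring and it becoming available to query."""
    return (queryable_time - event_time).total_seconds()


def meets_freshness_target(event_time: datetime, queryable_time: datetime,
                           target_seconds: float = 5.0) -> bool:
    """True if the event was queryable within the (assumed) freshness target."""
    return data_latency_seconds(event_time, queryable_time) <= target_seconds


# Hypothetical timestamps: the same event reaching two different stacks.
event = datetime(2020, 3, 5, 12, 0, 0, tzinfo=timezone.utc)
via_continuous_sync = datetime(2020, 3, 5, 12, 0, 2, tzinfo=timezone.utc)
via_hourly_etl = datetime(2020, 3, 5, 13, 0, 0, tzinfo=timezone.utc)

print(meets_freshness_target(event, via_continuous_sync))
print(meets_freshness_target(event, via_hourly_etl))
```

Tracking this number per pipeline is one way to check whether a warehouse or data lake is fresh enough for the use case, or whether a real-time database is needed.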

Query Complexity

Does the application require complex queries, like joins, aggregations, sorting, and filtering? If the application requires complex analytic queries, then support for a more expressive query language, like SQL, would be desirable.

Note that in many instances, streams are most useful when joined with other data, so do consider whether the ability to do joins in a performant manner would be important for the use case.
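As an illustration of the kind of query this implies, the sketch below joins a small table of click events with a product dimension table and aggregates the result. It uses Python's built-in sqlite3 module purely as a stand-in for whichever SQL engine sits downstream of Kafka; the table names, columns, and rows are made up for the example.

```python
import sqlite3

# In-memory database standing in for the analytics store (an assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE clicks (user_id TEXT, product_id INTEGER);
    CREATE TABLE products (product_id INTEGER PRIMARY KEY, category TEXT);
""")

# Hypothetical click events, as they might arrive from a Kafka topic.
conn.executemany(
    "INSERT INTO clicks VALUES (?, ?)",
    [("u1", 1), ("u2", 1), ("u1", 2), ("u3", 3)],
)
# Hypothetical dimension data that lives outside the stream.
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [(1, "shoes"), (2, "shoes"), (3, "hats")],
)


def clicks_per_category(connection):
    """Join the stream with dimension data, then aggregate and sort."""
    return connection.execute("""
        SELECT p.category, COUNT(*) AS clicks
        FROM clicks AS c
        JOIN products AS p ON p.product_id = c.product_id
        GROUP BY p.category
        ORDER BY clicks DESC, p.category
    """).fetchall()


print(clicks_per_category(conn))
```

The click events alone only carry a product ID; the category only becomes available through the join, which is why join performance can matter as much as ingest speed.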

Columns with Mixed Types

Does the data conform to a well-defined schema or is the data inherently messy? If the data fits a schema that doesn’t change over time, it may be possible to maintain a data pipeline that loads it into a relational database, with the caveat mentioned above that data pipelines will add data latency.

If the data is messier, with values of different types in the same column for instance, then it may be preferable to select a Kafka sink that can ingest the data as is, without requiring data cleaning at write time, while still allowing the data to be queried.
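The sketch below shows what mixed types in one column can look like and how a query might tolerate them at read time instead of rejecting rows at write time. The event payloads and field names are invented for illustration, and the coercion rule (skip values that cannot be read as numbers) is just one possible policy.

```python
import json
from collections import Counter

# Hypothetical raw events: "price" arrives as a number, a string, and null.
raw_events = [
    '{"user_id": 42, "price": 19.99}',
    '{"user_id": "42", "price": "19.99"}',
    '{"user_id": 7, "price": null}',
]


def ingest(lines):
    """Store events as-is, with no cleaning or schema enforcement."""
    return [json.loads(line) for line in lines]


def type_profile(docs, field):
    """Count which Python types appear under one field."""
    return Counter(type(doc.get(field)).__name__ for doc in docs)


def total_price(docs):
    """Sum prices at query time, skipping values that are not numeric."""
    total = 0.0
    for doc in docs:
        value = doc.get("price")
        if value is None or isinstance(value, bool):
            continue
        try:
            total += float(value)
        except (TypeError, ValueError):
            continue
    return total


docs = ingest(raw_events)
print(type_profile(docs, "price"))
print(total_price(docs))
```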

Query Latency

While data latency is a question of how fresh the data is, query latency refers to the speed of individual queries. Are fast queries required to power real-time applications and live dashboards? Or is query latency less critical because offline reporting is sufficient for the use case?

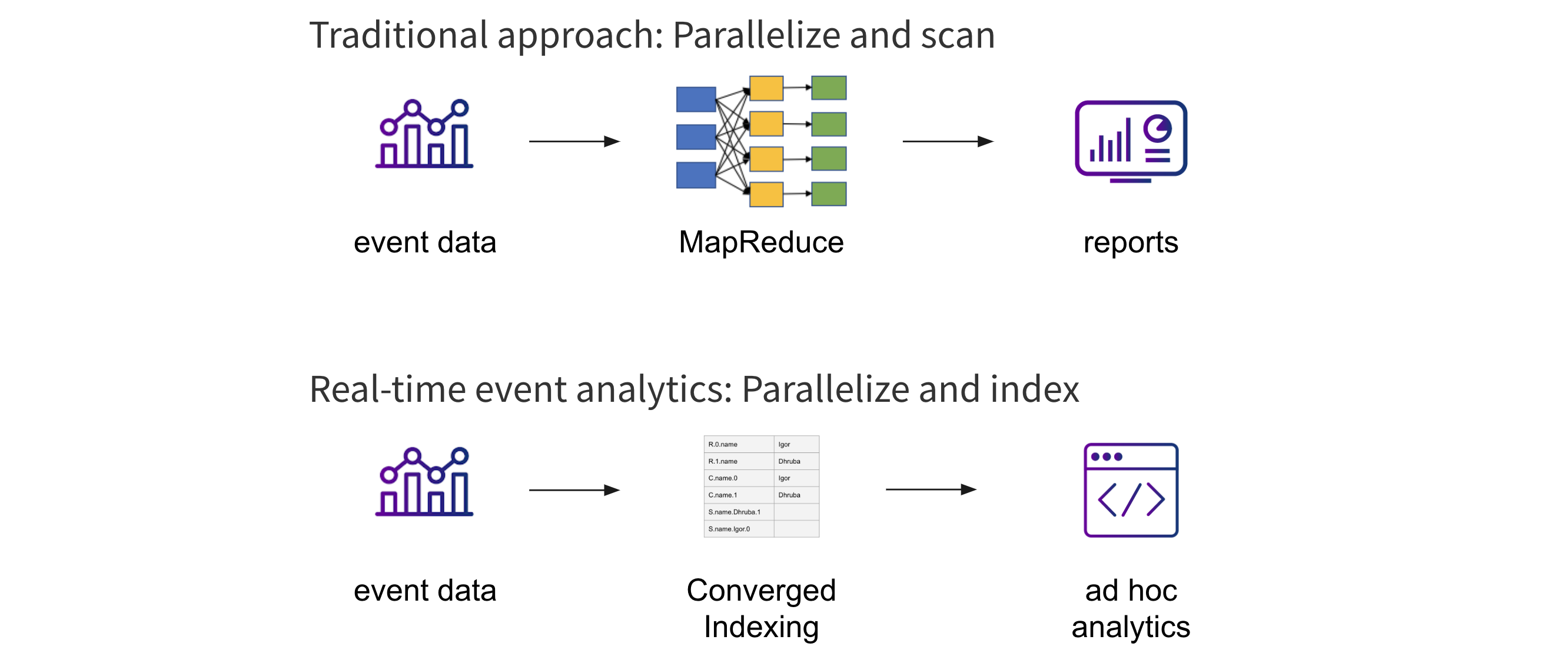

The traditional approach to analytics on large data sets involves parallelizing and scanning the data, which will suffice for less latency-sensitive use cases. However, to meet the performance requirements of real-time applications, it is better to consider approaches that parallelize and index the data instead, to enable low-latency ad hoc queries and drilldowns.
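The difference between scanning and indexing can be sketched in a few lines. This is a toy, single-process illustration with made-up event data, not how any particular database implements its indexes: the scan touches every event on each query, while the index is built once and then answers a lookup by key.

```python
from collections import defaultdict

# Hypothetical events: 100,000 clicks spread across 1,000 users.
events = [{"event_id": i, "user_id": f"u{i % 1000}"} for i in range(100_000)]


def scan(all_events, user_id):
    """Scan approach: examine every event for each query."""
    return [e["event_id"] for e in all_events if e["user_id"] == user_id]


def build_index(all_events, field):
    """Index approach: map each field value to matching event IDs, once."""
    index = defaultdict(list)
    for e in all_events:
        index[e[field]].append(e["event_id"])
    return index


def lookup(index, user_id):
    """Answer a query from the index without touching the other events."""
    return index.get(user_id, [])


user_index = build_index(events, "user_id")
# Both approaches return the same rows; only the work per query differs.
print(scan(events, "u7") == lookup(user_index, "u7"))
```

The trade-off is that the index costs extra work and storage at ingest time, which is paid back when many selective queries hit the same data.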

Query Volume

Does the architecture need to support large numbers of concurrent queries? If the use case requires on the order of 10-50 concurrent queries, as is common with reporting and BI, it may suffice to ETL the Kafka data into a data warehouse to handle these queries.

There are many modern data applications that need much higher query concurrency. If we are presenting product recommendations in an e-commerce scenario or making decisions on what content to serve as a streaming service, then we can imagine thousands of concurrent queries, or more, on the system. In these cases, a real-time analytics database would be the better choice.
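A rough way to estimate the concurrency a system must sustain is Little's law: the average number of queries in flight equals the arrival rate multiplied by the average query latency. The sketch below applies it to two workloads; the rates and latencies are illustrative assumptions, not measurements.

```python
def queries_in_flight(queries_per_second: float, avg_latency_ms: float) -> float:
    """Little's law: average concurrency = arrival rate x time in system."""
    return queries_per_second * avg_latency_ms / 1000


# Hypothetical BI workload: few queries, each running for several seconds.
bi_reporting = queries_in_flight(queries_per_second=2, avg_latency_ms=10_000)

# Hypothetical user-facing workload: many short queries.
recommendations = queries_in_flight(queries_per_second=20_000, avg_latency_ms=100)

print(bi_reporting, recommendations)
```

Under these assumed numbers, the reporting workload sits within the 10-50 range, while the user-facing workload needs thousands of queries in flight even though each one is fast.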

Operations

Is the analytics stack going to be painful to manage? Unless it is being run as a managed service, Kafka already represents one distributed system that has to be managed. Adding yet another system for analytics adds to the operational burden.

This is where fully managed cloud services can help make real-time analytics on Kafka much more manageable, especially for smaller data teams. Look for solutions that do not require server or database management and that scale seamlessly to handle variable query or ingest demands. Using a managed Kafka service can also help simplify operations.

Conclusion

Building real-time analytics on Kafka event streams involves careful consideration of each of these aspects to ensure the capabilities of the analytics stack meet the requirements of your application and engineering team. Elasticsearch, Druid, Postgres, and Rockset are commonly used as real-time databases to serve analytics on data from Kafka, and you should weigh your requirements, across the axes above, against what each solution provides.

For more information on this topic, do check out this related tech talk where we go through these considerations in greater detail: Best Practices for Analyzing Kafka Event Streams.