How to Build a Recommender System using Rockset and OpenAI Embedding Models

April 22, 2024

Overview

In this guide, you will:

- Gain a high-level understanding of vectors, embeddings, vector search, and vector databases, which will clarify the concepts we will build upon.

- Learn how to use the Rockset console with OpenAI embeddings to perform vector-similarity searches, forming the backbone of our recommender engine.

- Build a dynamic web application using vanilla CSS, HTML, JavaScript, and Flask, seamlessly integrating with the Rockset API and the OpenAI API.

- Find an end-to-end Colab notebook that you can run without any dependencies on your local operating system: Recsys_workshop.

Introduction

A real-time personalized recommender system can add tremendous value to an organization by enhancing the level user engagement and ultimately increasing user satisfaction.

Building such a recommendation system that deals efficiently with high-dimensional data to find accurate, relevant, and similar items in a large dataset requires effective and efficient vectorization, vector indexing, vector search, and retrieval which in turn demands robust databases with optimal vector capabilities. For this post, we will use Rockset as the database and OpenAI embedding models to vectorize the dataset.

Vector and Embedding

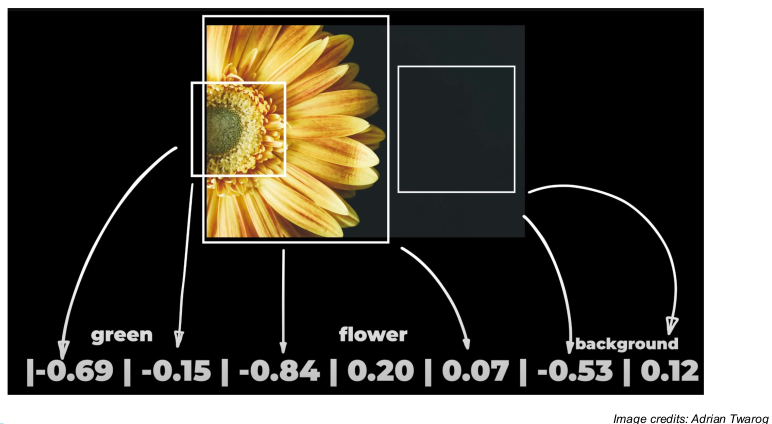

Vectors are structured and meaningful projections of data in a continuous space. They condense important attributes of an item into a numerical format while ensuring grouping similar data closely together in a multidimensional area. For example, in a vector space, the distance between the words "dog" and "puppy" would be relatively small, reflecting their semantic similarity despite the difference in their spelling and length.

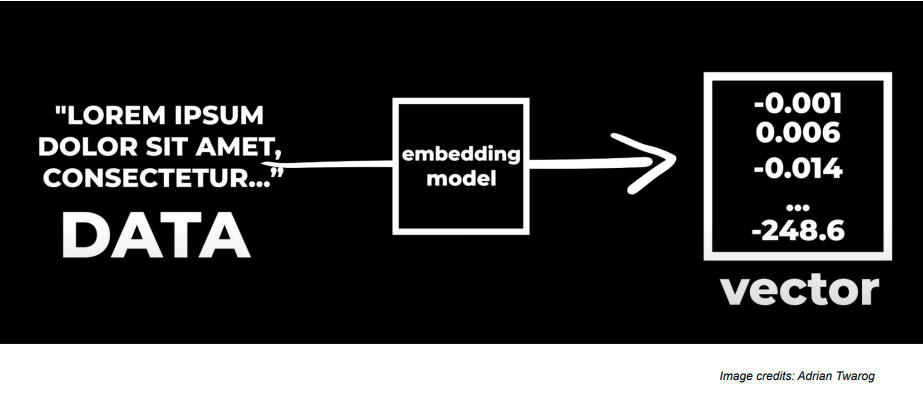

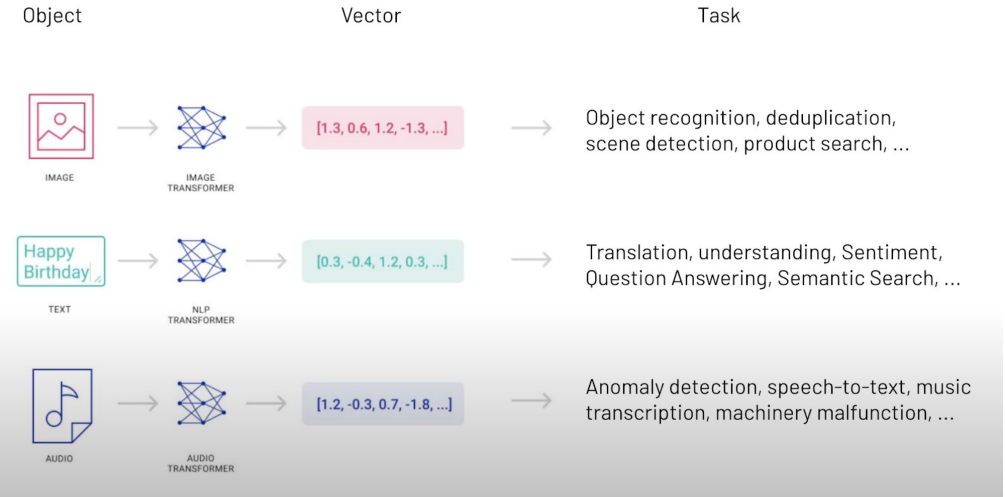

Embeddings are numerical representations of words, phrases, and other data forms.Now, any kind of raw data can be processed through an AI-powered embedding model into embeddings as shown in the picture below. These embeddings can be then used to make various applications and implement a variety of use cases.

Multiple AI models and techniques can be used to create these embeddings. For instance, Word2Vec, GLoVE, and transformers like BERT and GPT can be used to create embeddings. In this tutorial, we’ll be using OpenAI’s embeddings with the “text-embedding-ada-002” model.

Applications such as Google Lens, Netflix, Amazon, Google Speech-to-Text, and OpenAI Whisper, use embeddings of images, text, or even audio and video clips created by an embedding model to generate equivalent vector representations. These vector embeddings very well preserve the semantic information, complex patterns, and all other higher-dimensional relationships in the data.

Vector Search?

It’s a technique that uses vectors to conduct searches and identify relevance among a pool of data. Unlike traditional keyword searches that make use of exact keyword matches, vector search captures semantic contextual meaning as well.

Due to this attribute, vector search is capable of uncovering relationships and similarities that traditional search methods might miss. It does so by converting data into vector representations, storing them in vector databases, and using algorithms to find the most similar vectors to a query vector.

Vector Database

Vector databases are specialized databases where data is stored in the form of vector embeddings. To cater to the complex nature of vectorized data, a specialized and optimized database is designed to handle the embeddings in an efficient manner. To ensure that vector databases provide the most relevant and accurate results, they make use of the vector search.

A production-ready vector database will solve many, many more “database” problems than “vector” problems. By no means is vector search, itself, an “easy” problem, but the mountain of traditional database problems that a vector database needs to solve certainly remains the “hard part.” Databases solve a host of very real and very well-studied problems from atomicity and transactions, consistency, performance and query optimization, durability, backups, access control, multi-tenancy, scaling and sharding and much more. Vector databases will require answers in all of these dimensions for any product, business or enterprise. Read more on challenges related to Scaling Vector Search here.

Overview of the Recommendation WebApp

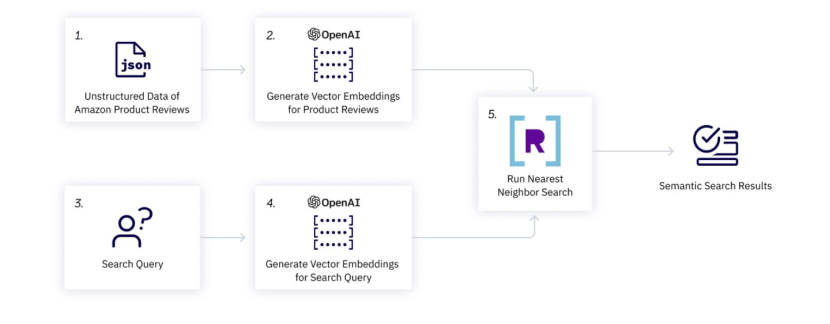

The picture below shows the workflow of the application we’ll be building. We have unstructured data i.e., game reviews in our case. We’ll generate vector embeddings for all of these reviews through OpenAI model and store them in the database. Then we’ll use the same OpenAI model to generate vector embeddings for our search query and match it with the review vector embeddings using a similarity function such as the nearest neighbor search, dot product or approximate neighbor search. Finally, we will have our top 10 recommendations ready to be displayed.

Steps to build the Recommender System using Rockset and OpenAI Embedding

Let’s begin with signing up for Rockset and OpenAI and then dive into all the steps involved within the Google Colab notebook to build our recommendation webapp:

Step 1: Sign-up on Rockset

Sign-up and create an API key to use in the backend code. Save it in the environment variable with the following code:

import os

os.environ["ROCKSET_API_KEY"] = "XveaN8L9mUFgaOkffpv6tX6VSPHz####"

Step 2: Create a new Collection and Upload Data

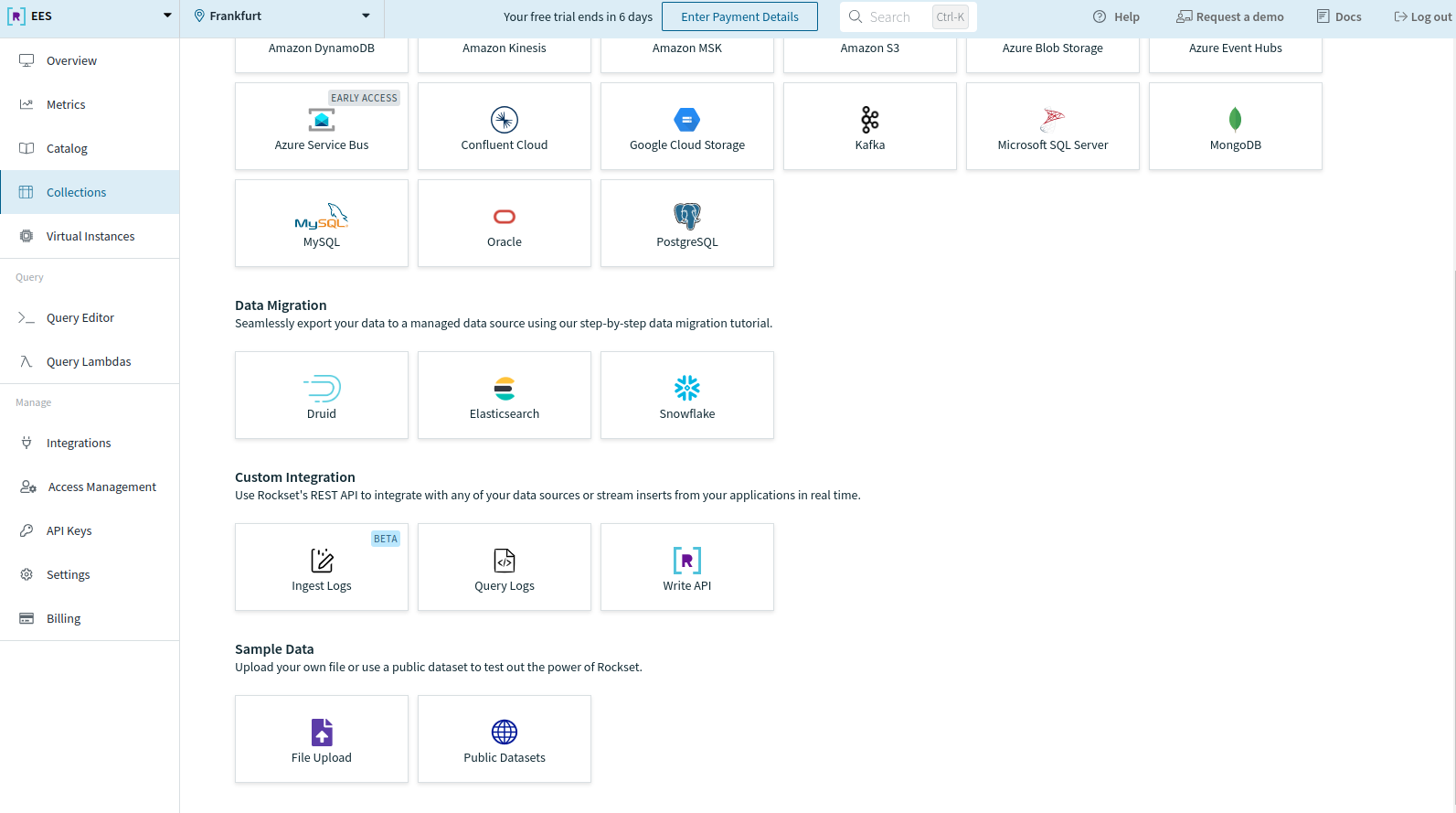

After making an account, create a new collection from your Rockset console. Scroll to the bottom and choose File Upload under Sample Data to upload your data.

For this tutorial, we’ll be using Amazon product review data. The vectorized form of the data is available to download here. Download this on your local machine so it can be uploaded to your collection.

You’ll be directed to the following page. Click on Start.

You can use JSON, CSV, XML, Parquet, XLS, or PDF file formats to upload the data.

Click on the Choose file button and navigate to the file you want to upload. This will take some time. After the file is uploaded successfully, you’ll be able to review it under Source Preview.

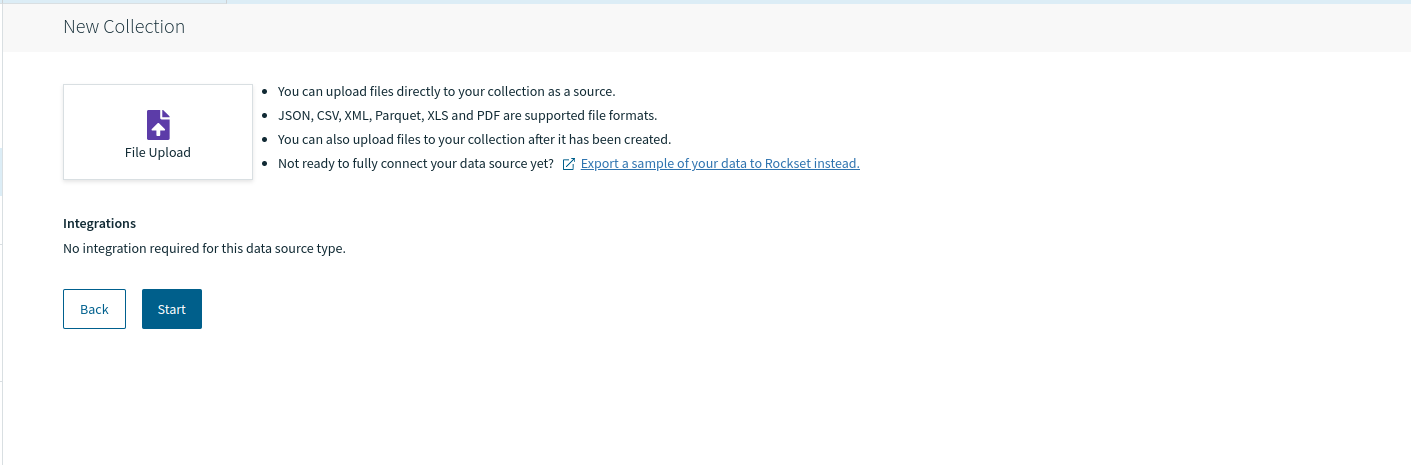

We’ll be uploading the sample_data.json file and then clicking on Next. You’ll be directed to the SQL transformation screen to perform transformations or feature engineering as per your needs.

As we don’t want to apply any transformation now, we’ll move on to the next step by clicking Next.

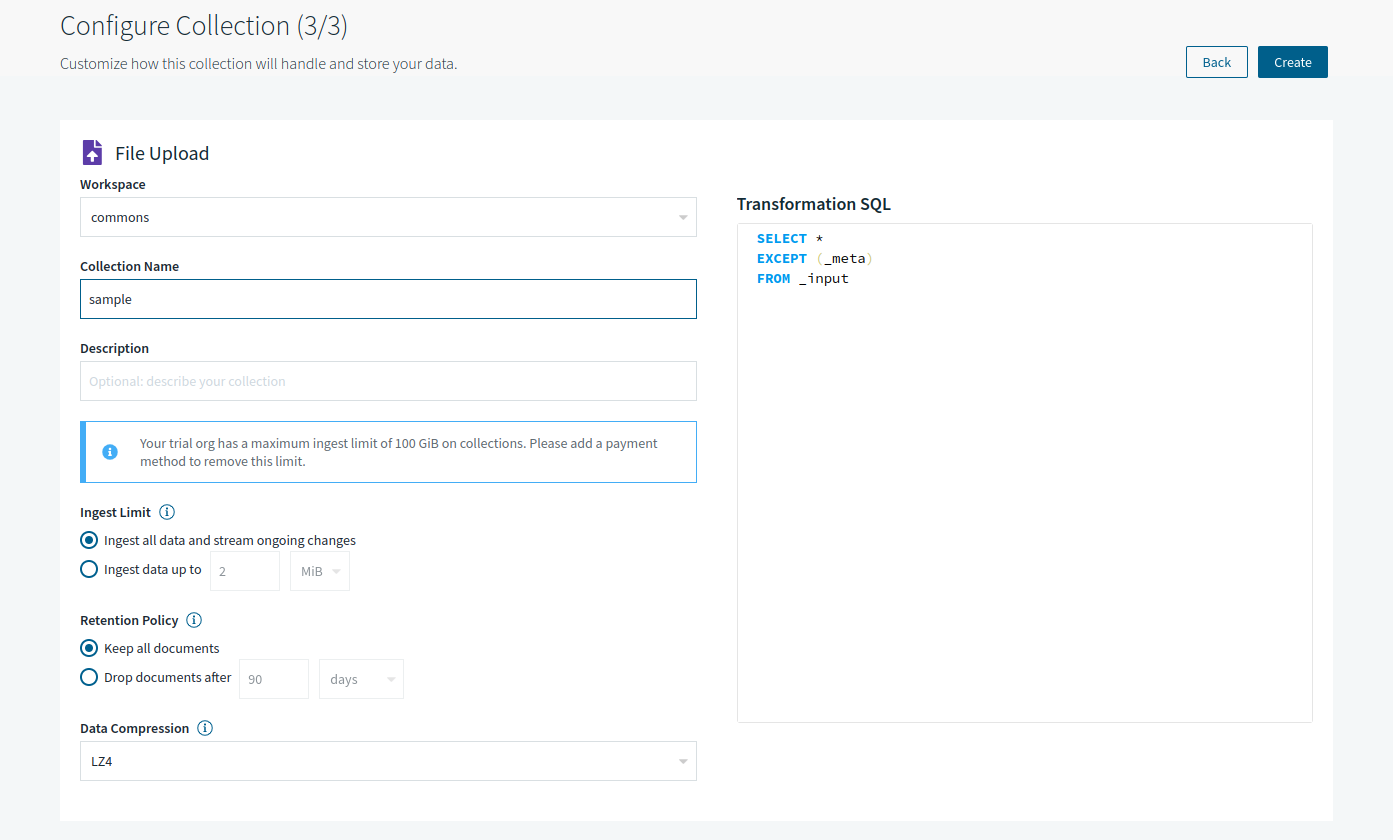

Now, the configuration screen will prompt you to choose your workspace (‘commons’ selected by default) along with Collection Name and several other collection settings.

We’ll name our collection “sample” and move forward with default configurations by clicking Create.

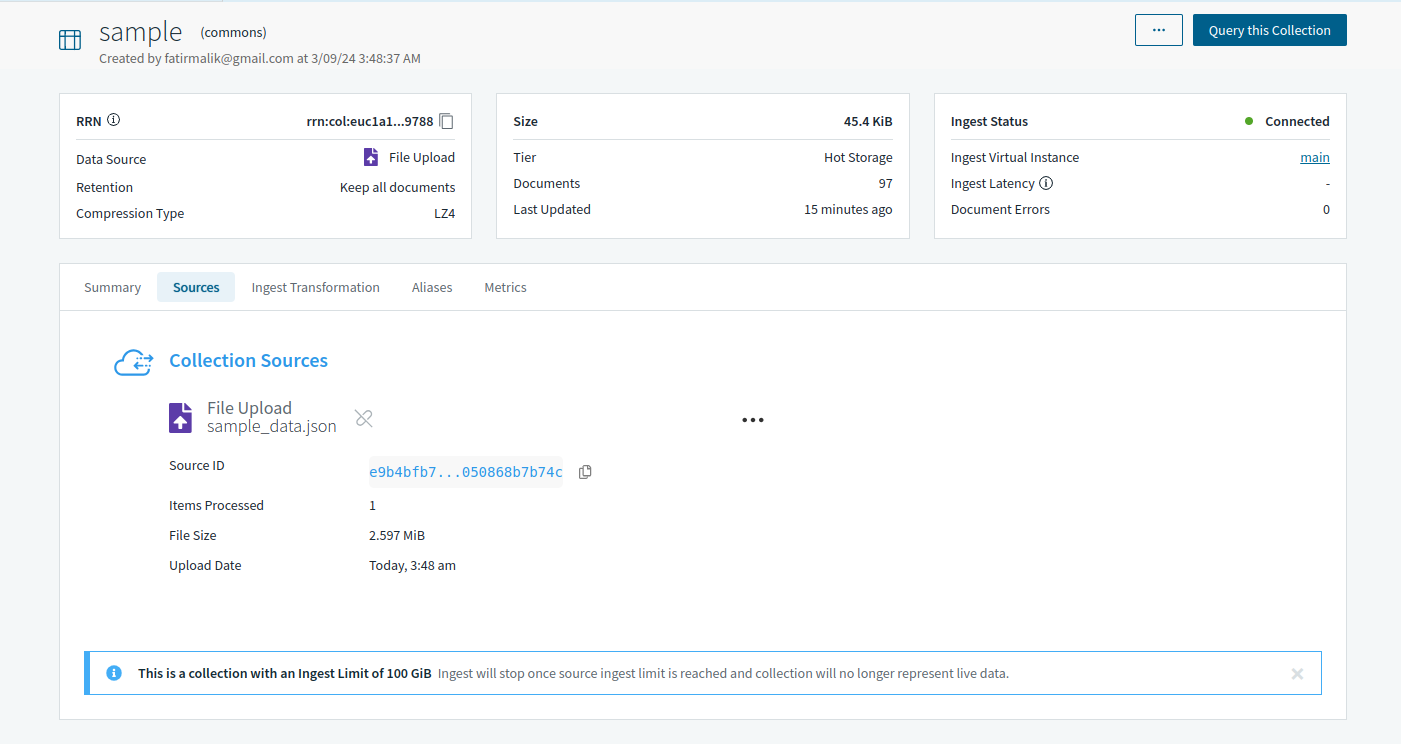

Finally, your collection will be created. However, it might take some time before the Ingest Status changes from Initializing to Connected.

Once the status is updated, Rockset’s query tool can query the collection via the Query this Collection button on the right-top corner in the picture below.

Step 3: Create OpenAI API Key

To convert data into embeddings, we’ll use an OpenAI embedding model. Sign-up for OpenAI and then create an API key.

After signing up, go to API Keys and create a secret key. Don’t forget to copy and save your key. Similar to Rockset’s API key, save your OpenAI key in your environment so it can easily be used throughout the notebook:

import os

os.environ["OPENAI_API_KEY"] = "sk-####"

Step 4: Create a Query Lambda on Rockset

Rockset allows its users to utilize the flexibility and comfort of a managed database platform to the fullest through Query Lambdas. These parameterized SQL queries can be stored in Rocket as a separate resource and then executed on the run with the help of dedicated REST endpoints.

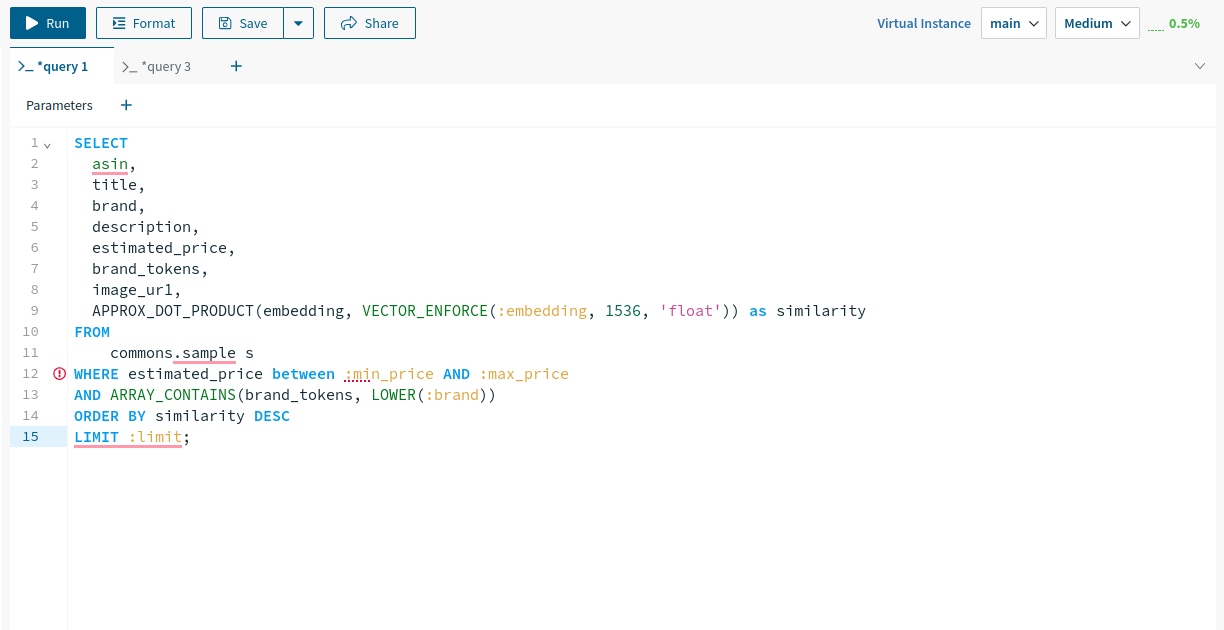

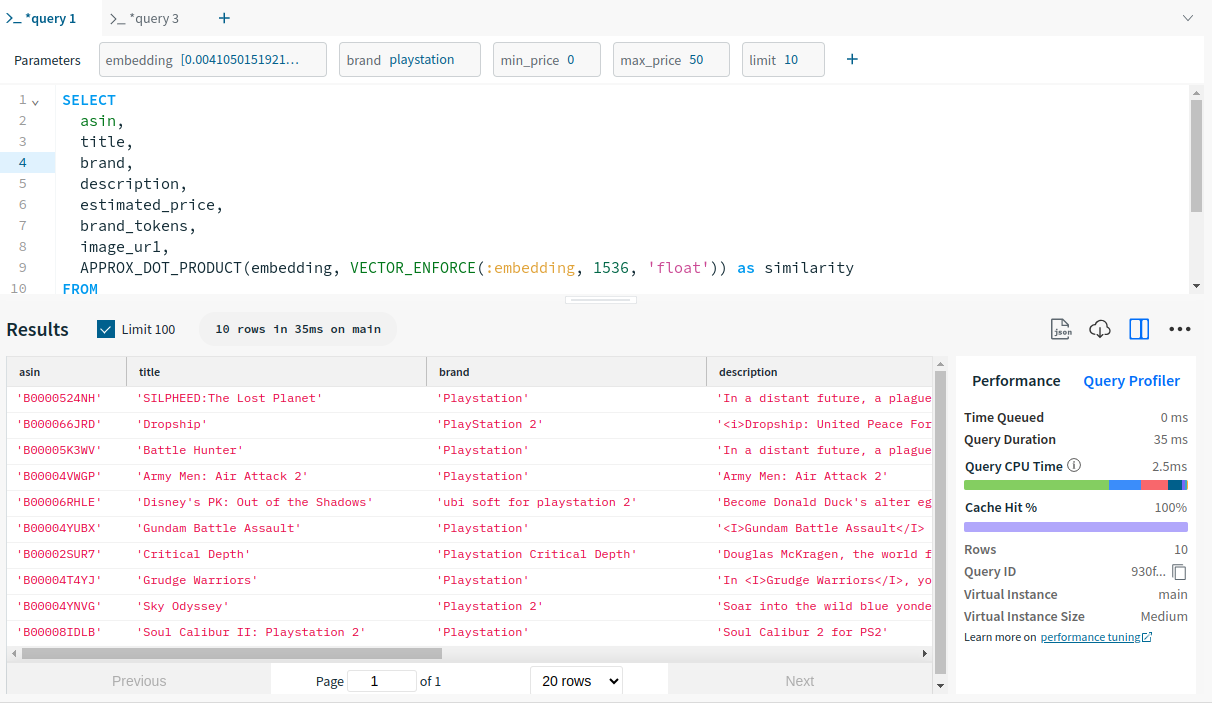

Let’s create one for our tutorial. We’ll be using the following Query Lambda with parameters: embedding, brand, min_price, max_price and limit.

SELECT

asin,

title,

brand,

description,

estimated_price,

brand_tokens,

image_ur1,

APPROX_DOT_PRODUCT(embedding, VECTOR_ENFORCE(:embedding, 1536, 'float')) as similarity

FROM

commons.sample s

WHERE estimated_price between :min_price AND :max_price

AND ARRAY_CONTAINS(brand_tokens, LOWER(:brand))

ORDER BY similarity DESC

LIMIT :limit;

This parameterized query does the following:

- retrieves data from the "sample" table in the "commons" schema. And selects specific columns like ASIN, title, brand, description, estimated_price, brand_tokens, and image_ur1.

- computes the similarity between the provided embedding and the embedding stored in the database using the APPROX_DOT_PRODUCT function.

- filters results based on the estimated_price falling within the provided range and the brand containing the specified value. Next, the results are sorted based on similarity in descending order.

- Finally, the number of returned rows are limited based on the provided 'limit' parameter.

To build this Query Lambda, query the collection made in step 2 by clicking on Query this collection and pasting the parameterized query above into the query editor.

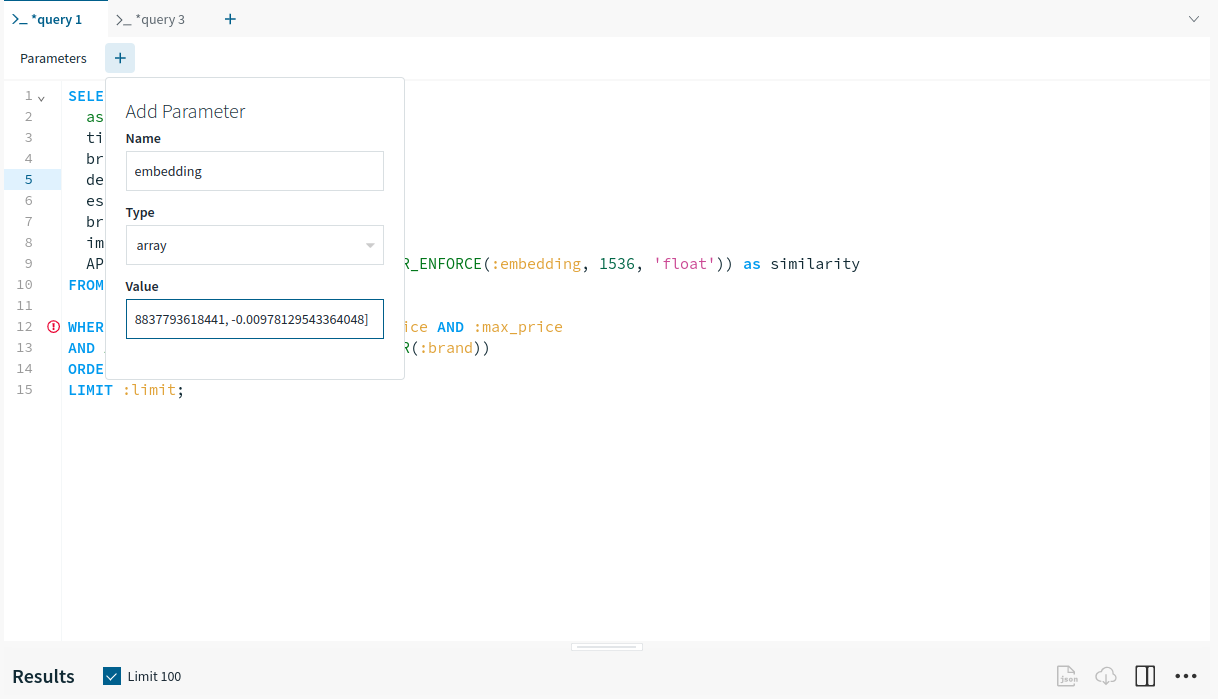

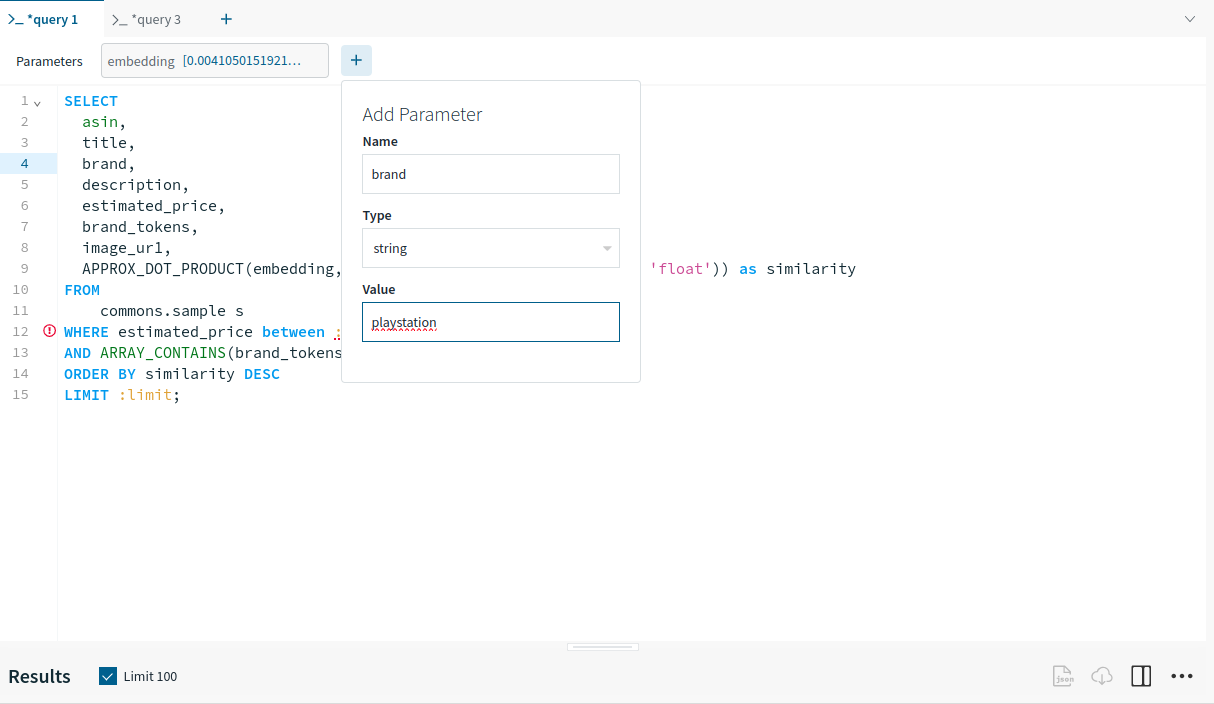

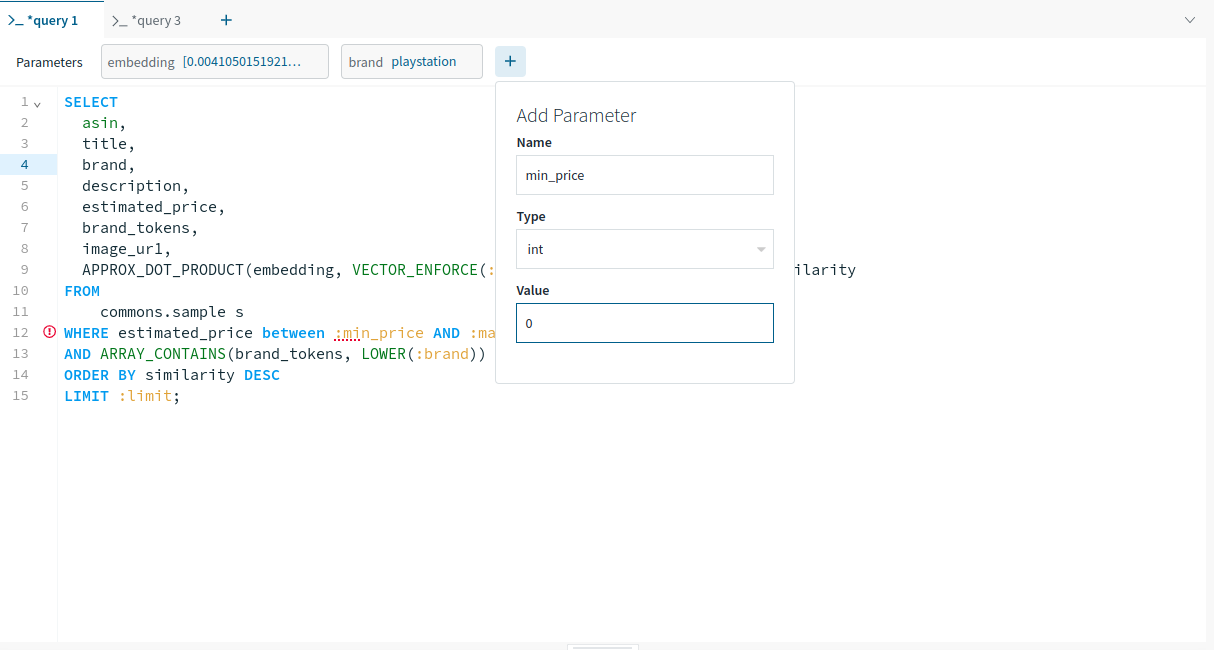

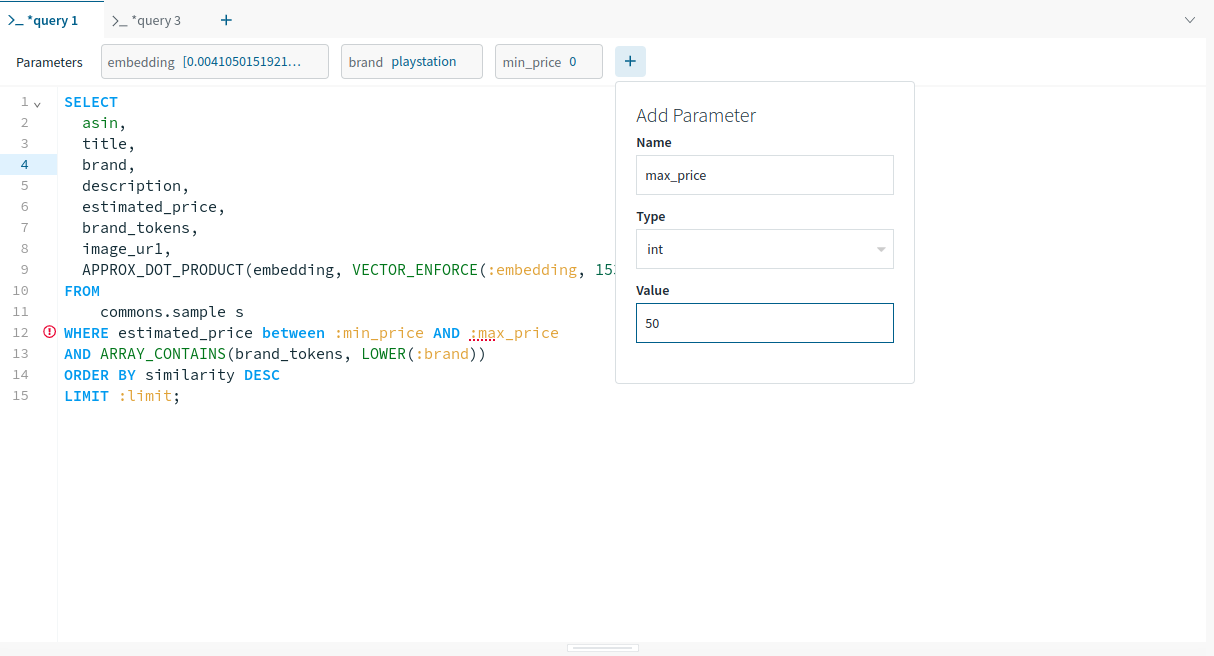

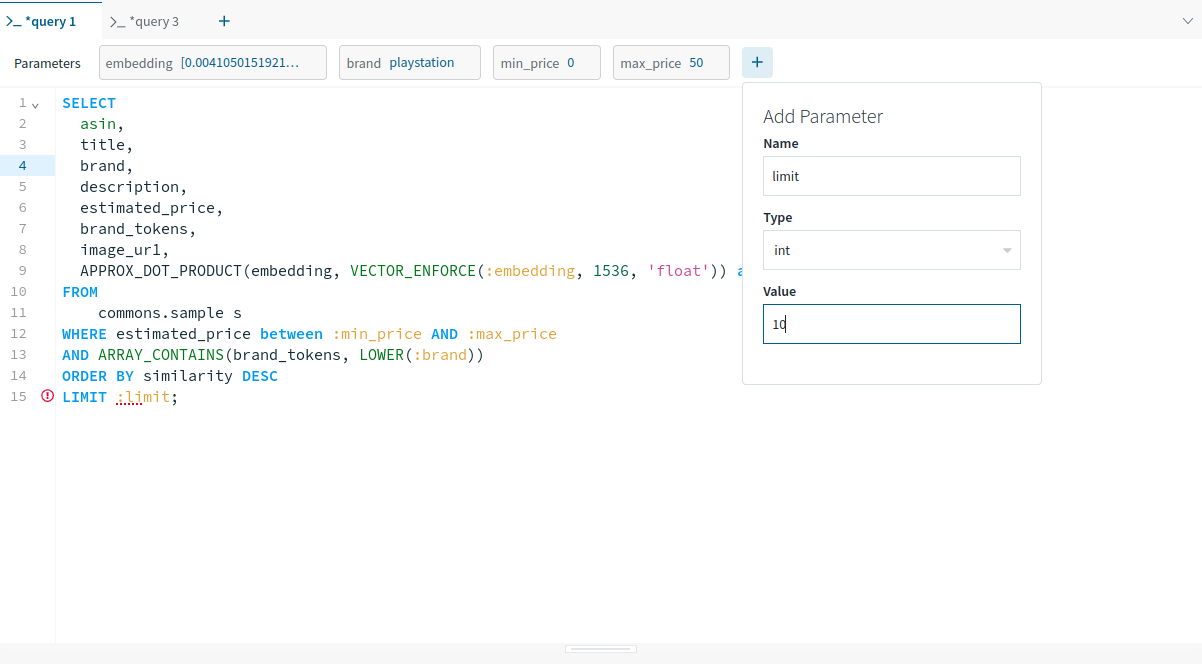

Next, add the parameters one by one to run the query before saving it as a query lambda.

You can use the default embedding value from here. It’s a vectorized embedding for ‘Star Wars’. For the remaining default values, consult the pictures below.

Note: Running the query with a parameter before saving it as Query Lambda is not mandatory. However, it’s a good practice to ensure that the query executes error-free before its usage on the production.

After setting up the default parameters, the query gets executed successfully.

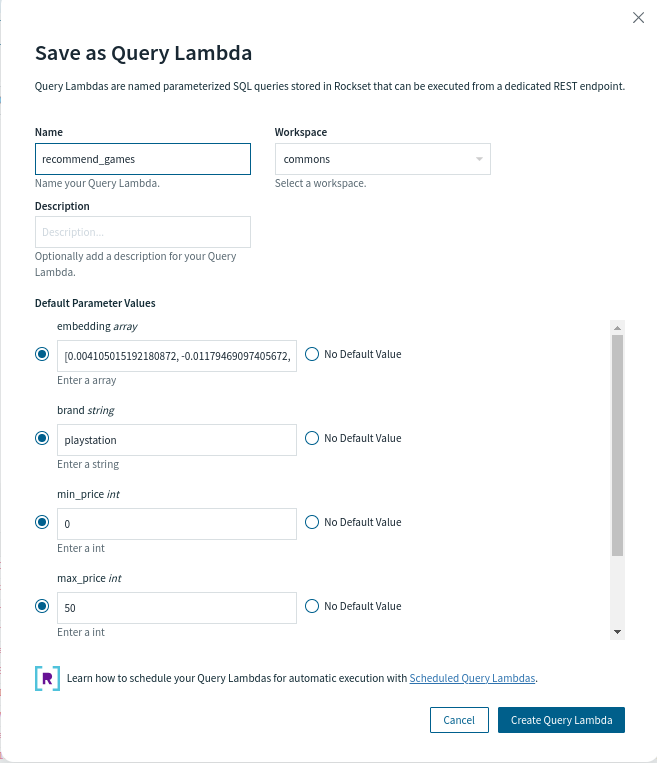

Let’s save this query lambda now. Click on Save in the query editor and name your query lambda which is “recommend_games” in our case.

Frontend Overview

The final step in creating a web application involves implementing a frontend design using vanilla HTML, CSS, and JavaScript, along with backend implementation using Flask, a lightweight, Pythonic web framework.

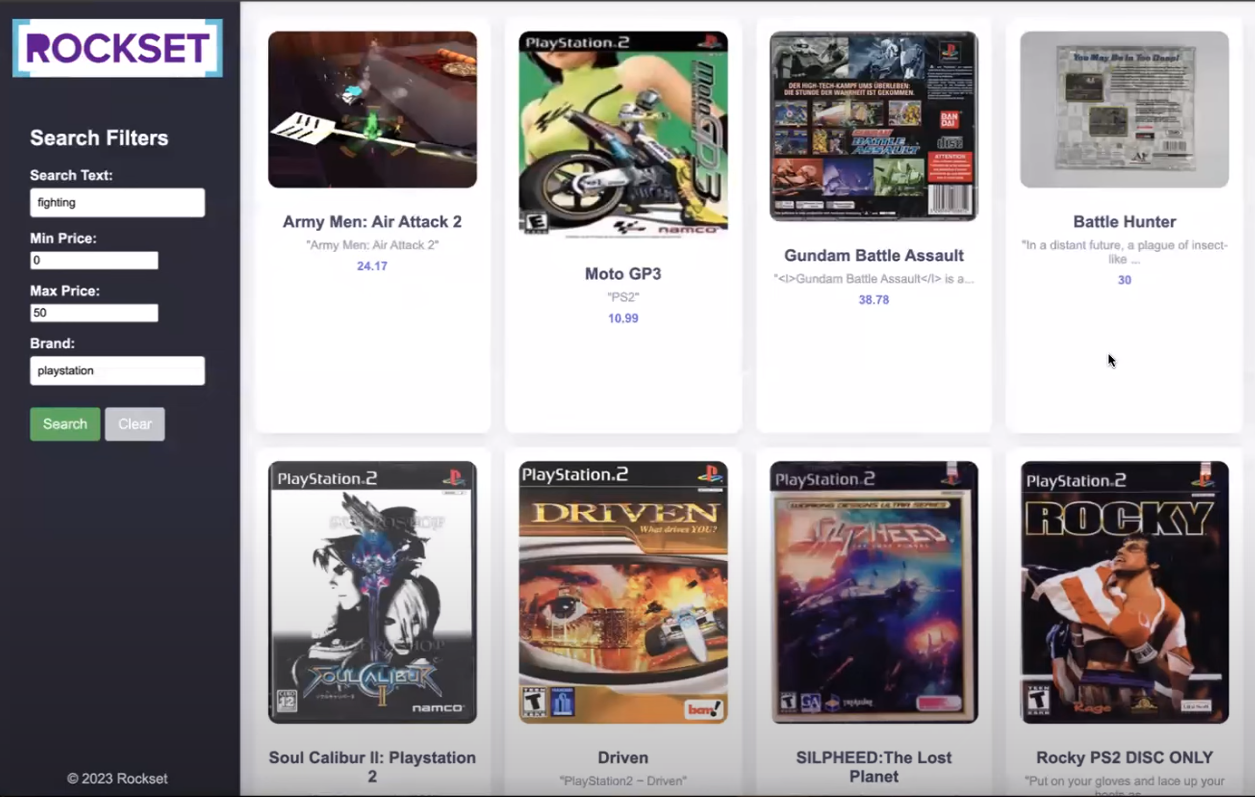

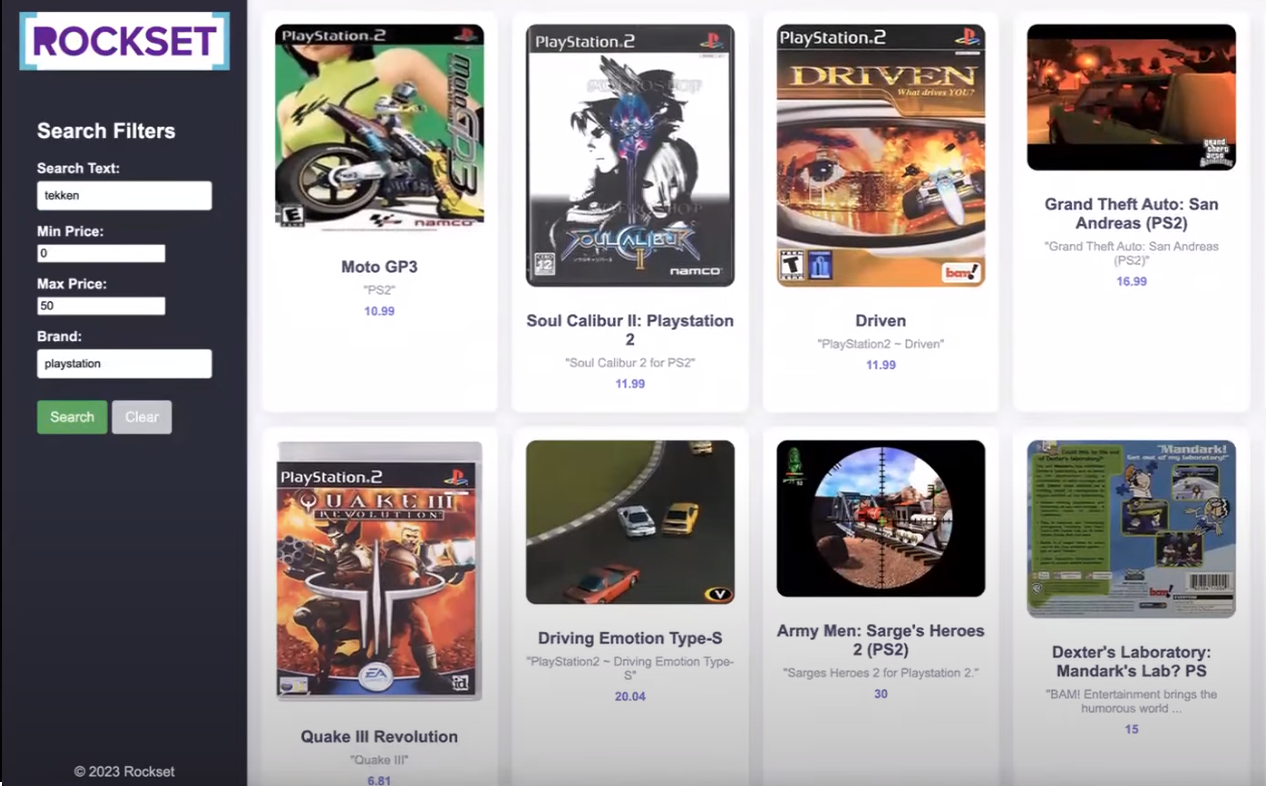

The frontend page looks as shown below:

-

HTML Structure:

- The basic structure of the webpage includes a sidebar, header, and product grid container.

-

Sidebar:

- The sidebar contains search filters such as brands, min and max price, etc., and buttons for user interaction.

-

Product Grid Container:

- The container populates product cards dynamically using JavaScript to display product information i.e. image, title, description, and price.

-

JavaScript Functionality:

- It is needed to handle interactions such as toggling full descriptions, populating the recommendations, and clearing search form inputs.

-

CSS Styling:

- Implemented for responsive design to ensure optimal viewing on various devices and improve aesthetics.

Check out the full code behind this front-end here.

Backend Overview

Flask makes creating web applications in Python easier by rendering the HTML and CSS files via single-line commands. The backend code for the remaining tutorial has been already completed for you.

Initially, the Get method will be called and the HTML file will be rendered. As there will be no recommendation at this time, the basic structure of the page will be displayed on the browser. After this is executed, we can fill the form and submit it thereby utilizing the POST method to get some recommendations.

Let’s dive into the main components of the code as we did for the frontend:

-

Flask App Setup:

- A Flask application named app is defined along with a route for both GET and POST requests at the root URL ("/").

-

Index function:

- Function built to primarily handle both GET and POST requests.

-

If it’s a POST request:

- Extracts form data from the frontend.

- Calls functions to process the data and fetch results from Rockset.

- Modifies the image URLs to point to local files. These images are already saved in a directory.

- Renders the index.html template with the results.

-

If it’s a GET request:

- Renders the index.html template with the search form.

@app.route('/', methods=['GET', 'POST'])

def index():

if request.method == 'POST':

# Extract data from form fields

inputs = get_inputs()

search_query_embedding = get_openai_embedding(inputs, client)

rockset_key = os.environ.get('ROCKSET_API_KEY')

region = Regions.usw2a1

records_list = get_rs_results(inputs, region, rockset_key, search_query_embedding)

folder_path = 'static'

for record in records_list:

# Extract the identifier from the URL

identifier = record["image_url"].split('/')[-1].split('_')[0]

file_found = None

for file in os.listdir(folder_path):

if file.startswith(identifier):

file_found = file

break

if file_found:

# Overwrite the record["image_url"] with the path to the local file

record["image_url"] = file_found

record["description"] = json.dumps(record["description"])

# print(f"Matched file: {file_found}")

else:

print("No matching file found.")

# Render index.html with results

return render_template('index.html', records_list=records_list, request=request)

# If method is GET, just render the form

return render_template('index.html', request=request)

-

Data Processing Functions:

- get_inputs(): Extracts form data from the request.

def get_inputs():

search_query = request.form.get('search_query')

min_price = request.form.get('min_price')

max_price = request.form.get('max_price')

brand = request.form.get('brand')

# limit = request.form.get('limit')

return {

"search_query": search_query,

"min_price": min_price,

"max_price": max_price,

"brand": brand,

# "limit": limit

}

- get_openai_embedding(): Uses OpenAI to get embeddings for search queries.

def get_openai_embedding(inputs, client):

# openai.organization = org

# openai.api_key = api_key

openai_start = (datetime.now())

response = client.embeddings.create(

input=inputs["search_query"],

model="text-embedding-ada-002"

)

search_query_embedding = response.data[0].embedding

openai_end = (datetime.now())

elapsed_time = openai_end - openai_start

return search_query_embedding

- get_rs_results(): Utilizes Query Lambda created earlier in Rockset and returns recommendations based on user inputs and embeddings.

def get_rs_results(inputs, region, rockset_key, search_query_embedding):

print("\nRunning Rockset Queries...")

# Create an instance of the Rockset client

rs = RocksetClient(api_key=rockset_key, host=region)

rockset_start = (datetime.now())

# Execute Query Lambda By Version

rockset_start = (datetime.now())

api_response = rs.QueryLambdas.execute_query_lambda_by_tag(

workspace="commons",

query_lambda="recommend_games",

tag="latest",

parameters=[

{

"name": "embedding",

"type": "array",

"value": str(search_query_embedding)

},

{

"name": "min_price",

"type": "int",

"value": inputs["min_price"]

},

{

"name": "max_price",

"type": "int",

"value": inputs["max_price"]

},

{

"name": "brand",

"type": "string",

"value": inputs["brand"]

}

# {

# "name": "limit",

# "type": "int",

# "value": inputs["limit"]

# }

]

)

rockset_end = (datetime.now())

elapsed_time = rockset_end - rockset_start

records_list = []

for record in api_response["results"]:

record_data = {

"title": record['title'],

"image_url": record['image_ur1'],

"brand": record['brand'],

"estimated_price": record['estimated_price'],

"description": record['description']

}

records_list.append(record_data)

return records_list

Overall, the Flask backend processes user input and interacts with external services (OpenAI and Rockset) via APIs to provide dynamic content to the frontend. It extracts form data from the frontend, generates OpenAI embeddings for text queries, and utilizes Query Lambda at Rockset to find recommendations.

Now, you are ready to run the flask server and access it through your internet browser. Our application is up and running. Let’s add some parameters and fetch some recommendations. The results will be displayed on an HTML template as shown below.

Note: The tutorial's entire code is available on GitHub. For a quick-start online implementation, a end-to-end runnable Colab notebook is also configured.

The methodology outlined in this tutorial can serve as a foundation for various other applications beyond recommendation systems. By leveraging the same set of concepts and using embedding models and a vector database, you are now equipped to build applications such as semantic search engines, customer support chatbots, and real-time data analytics dashboards.

Stay tuned for more tutorials!

Cheers!!!