Cloud Native: What It Means in the Data World

October 30, 2018

Prior to Rockset, I spent eight years at Facebook building out its big data and online data infrastructure. All the software we wrote was deployed in Facebook's private data centers, so it was not until I started building on the public cloud that I fully appreciated its true potential.

Facebook may be the very definition of a web-scale company, but getting hardware still required huge lead times and extensive capacity planning. The public cloud, in contrast, provides hardware through the simplicity of API-based provisioning. It offers, for all intents and purposes, infinite compute and storage, requested on demand and relinquished when no longer needed.

An Epiphany on Cloud Elasticity

I came to a simple realization about the power of cloud economics. In the cloud, the price of using 1 CPU for 100 minutes is the same as that of using 100 CPUs for 1 minute. If a data processing task that takes 100 minutes on a single CPU could be reconfigured to run in parallel on 100 CPUs in 1 minute, then the price of computing this task would remain the same, but the speedup would be tremendous!
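To make the arithmetic concrete, here is a minimal Python sketch. It assumes per-minute billing with no minimum charge and perfectly linear speedup, neither of which holds exactly in practice. The function name `task_cost` and the price of 0.01 per CPU-minute are hypothetical, chosen only for illustration.

```python
def task_cost(cpu_minutes_of_work, num_cpus, price_per_cpu_minute):
    """Return (wall_clock_minutes, total_cost) for a perfectly parallel task.

    Assumes ideal linear speedup and per-minute billing (both simplifications).
    """
    wall_clock_minutes = cpu_minutes_of_work / num_cpus
    total_cost = num_cpus * wall_clock_minutes * price_per_cpu_minute
    return wall_clock_minutes, total_cost


PRICE = 0.01  # hypothetical price per CPU-minute

serial_minutes, serial_cost = task_cost(100, 1, PRICE)
parallel_minutes, parallel_cost = task_cost(100, 100, PRICE)

# Same bill, but the parallel run finishes 100 times sooner.
print(serial_minutes, serial_cost)
print(parallel_minutes, parallel_cost)
```

Real workloads rarely parallelize perfectly, so the cost of the parallel run is usually somewhat higher; the point is that the cloud pricing model itself does not penalize going wide.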

The Evolution to the Cloud

Recent advances in the data processing state of the art have each sought to exploit prevailing hardware trends. Hadoop and RocksDB are two examples I’ve had the privilege of working on personally. The falling price of SATA disks in the early 2000s was one major factor in the popularity of Hadoop, because it was the only software that could cobble together petabytes of these disks to provide a large-scale storage system. Similarly, RocksDB blossomed because it leveraged the price-performance sweet spot of SSD storage. Today, the hardware platform is in flux once more, with many applications moving to the cloud. This trend toward the cloud will again herald a new breed of software solutions.

The next iteration of data processing software will exploit the fluid nature of hardware in the cloud. Data workloads will grab and release compute, memory, and storage resources, as needed and when needed, to meet performance and cost requirements. But data processing software has to be reimagined and rewritten for this to become a reality.

How to Build for the Cloud

Cloud-native data platforms should scale dynamically to make use of available cloud resources. That means a data request needs to be parallelized and the hardware required to run it instantly acquired. Once the necessary tasks are scheduled and the results returned, the platform should promptly shed the hardware resources used for that request.
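The acquire, run, release lifecycle described above can be sketched in a few lines of Python. This is only an analogy: a local thread pool stands in for cloud hardware, and `run_request` is a hypothetical name, not any platform's API.

```python
from concurrent.futures import ThreadPoolExecutor


def run_request(chunks, work, max_workers):
    """Process one request in parallel, holding workers only for its duration.

    The pool stands in for cloud hardware: it is acquired on entry to the
    `with` block and released as soon as the results are collected.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves input order, so results line up with chunks.
        return list(pool.map(work, chunks))


def square(x):
    return x * x


results = run_request([1, 2, 3, 4], square, max_workers=4)
```

In a real platform the "pool" would be machines or containers requested through a provisioning API, and acquisition latency would matter far more than it does for threads.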

Simply processing in parallel does not make a system cloud friendly. Hadoop was a parallel-processing system, but its focus was on optimizing throughput of data processed within a fixed set of pre-acquired resources. Likewise, many other pre-cloud systems, including MongoDB and Elasticsearch, were designed for a world in which the underlying hardware on which they run was fixed.

The industry has recently made inroads designing data platforms for the cloud, however. Qubole morphed Hadoop to be cloud friendly, while Amazon Aurora and Snowflake built cloud-optimized relational databases. Here are some architectural patterns that are common in cloud-native data processing:

Use of shared storage rather than shared-nothing storage

The previous wave of distributed data processing frameworks was built for non-cloud infrastructure and utilized shared-nothing architectures. Dr. Stonebraker has written about the advantages of shared-nothing architectures since 1986 (The Case for Shared Nothing), and the advent of HDFS in 2005 made shared-nothing architectures a widespread reality. At about the same time, other distributed software, like Cassandra, HBase, and MongoDB, which used shared-nothing storage, appeared on the market. Storage was typically JBOD, locally attached to individual machines, resulting in tightly coupled compute and storage.

But in the cloud era, object stores have become the dominant storage. Cloud services such as Amazon S3 provide shared storage that can be simultaneously accessed from multiple nodes using well-defined APIs. Shared storage enables us to decouple compute and storage and scale each independently. This ability results in cloud-native systems that are orders of magnitude more efficient. Dr. DeWitt, who taught my database classes at the University of Wisconsin-Madison, postulated in his 2017 position paper that shared storage is back in fashion!

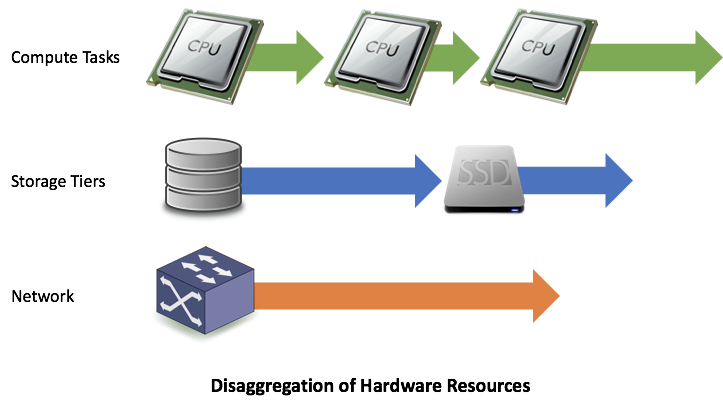

Disaggregated architecture

A cloud-native system is designed in such a way that it uses only as much hardware as is truly needed for the workload it is serving. The cloud offers us the ability to utilize storage, compute, and network independently of each other. We can only benefit from this if we design our service to use more (or less) of one hardware resource without altering its consumption of any other hardware resource.

Enter microservices. A software service can be composed from a set of microservices, with each microservice limited by only one type of resource. This is a disaggregated architecture. If more compute is needed, add more CPUs to the compute microservice. If more storage is needed, increase the storage capacity of the storage microservice. Refer to this HotCloud '18 paper by Prof. Remzi, Andrea, and our very own Venkat for a more thorough articulation of cloud-native design principles.
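As a toy illustration of what disaggregation means in practice, the sketch below models a service whose compute and storage are sized separately. The `Deployment` class and its field names are hypothetical; the only point is that changing one resource leaves the other untouched.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Deployment:
    """Hypothetical sizing of a service split into two microservices."""
    compute_cpus: int   # capacity of the compute microservice
    storage_tb: int     # capacity of the storage microservice


def scale_compute(deployment, cpus):
    # Only the compute microservice changes; storage is left as-is.
    return replace(deployment, compute_cpus=cpus)


def scale_storage(deployment, terabytes):
    # Only the storage microservice changes; compute is left as-is.
    return replace(deployment, storage_tb=terabytes)


base = Deployment(compute_cpus=8, storage_tb=10)
busier = scale_compute(base, 64)    # query load went up
bigger = scale_storage(base, 100)   # data volume went up
```

In a tightly coupled, shared-nothing design, the equivalent operation would be "add a node", which grows both resources at once whether or not both are needed.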

Cloud-native scheduling to manage both supply and demand

To manage adding and removing hardware resources to and from microservices, we need a new kind of resource scheduler. Traditional task schedulers typically manage only demand, i.e., they schedule task requests among the available hardware resources. In contrast, a cloud-native scheduler can manage both supply and demand. Depending on workload and configured policies, a cloud-native scheduler can request new hardware resources to be provisioned and simultaneously schedule new task requests on provisioned hardware.

Traditional data management software schedulers are not built to shed hardware. But in the cloud, it is imperative that a scheduler shed hardware when not in use. The quicker a system can remove excess hardware, the better its price-performance characteristics.
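A minimal sketch of the supply side of such a scheduler follows. It is an assumption-laden illustration, not a description of any particular system: the policy (a fixed number of tasks per node, bounded by `min_nodes` and `max_nodes`) and the names `desired_nodes` and `reconcile` are all hypothetical.

```python
import math


def desired_nodes(pending_tasks, tasks_per_node, min_nodes=0, max_nodes=100):
    """Node count the policy wants for the current demand (hypothetical policy)."""
    needed = math.ceil(pending_tasks / tasks_per_node)
    return max(min_nodes, min(max_nodes, needed))


def reconcile(current_nodes, pending_tasks, tasks_per_node):
    """Decide whether to provision, release, or hold hardware.

    Returns an (action, node_count) pair. A demand-only scheduler would
    never produce the "provision" or "release" actions.
    """
    target = desired_nodes(pending_tasks, tasks_per_node)
    if target > current_nodes:
        return ("provision", target - current_nodes)
    if target < current_nodes:
        return ("release", current_nodes - target)
    return ("hold", 0)


scale_up = reconcile(current_nodes=2, pending_tasks=45, tasks_per_node=10)
scale_down = reconcile(current_nodes=5, pending_tasks=0, tasks_per_node=10)
steady = reconcile(current_nodes=3, pending_tasks=30, tasks_per_node=10)
```

A production scheduler would also account for provisioning latency, in-flight tasks on nodes being released, and cooldown periods to avoid thrashing; those are omitted here to keep the supply-and-demand idea visible.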

Separation of durability and performance

Maintaining multiple replicas of user data to provide durability in the event of node failure was a common strategy with pre-cloud systems, such as Hadoop, MongoDB, and Elasticsearch. The downside of this approach was that it cost server capacity. Having two or three replicas effectively doubled or tripled the hardware requirement. A better approach for a cloud-native data platform is to use a cloud object store to ensure durability, without the need for replicas.

Replicas have a role to play in aiding system performance, but in the age of cloud, we can bring replicas online only when there is a need. If there are no requests for a particular piece of data, it can reside purely in cloud object storage. As requests for data increase, one or more replicas can be created to serve them. By using cheaper cloud object storage for durability and only spinning up compute and fast storage for replicas when needed for performance, cloud-native data platforms can provide better price-performance.
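The idea of replicas that exist only for performance can be sketched as a small sizing function. The capacity figures and the cap of three replicas are hypothetical, as is the name `serving_replicas`; durability is assumed to be handled entirely by the object store.

```python
import math


def serving_replicas(requests_per_second, capacity_per_replica, max_replicas=3):
    """How many in-compute replicas to keep for a piece of data.

    Durability is assumed to come from the object store, so zero replicas
    is a valid answer when nobody is asking for the data.
    """
    if requests_per_second <= 0:
        return 0
    needed = math.ceil(requests_per_second / capacity_per_replica)
    return min(max_replicas, needed)


idle = serving_replicas(0, capacity_per_replica=100)
light = serving_replicas(50, capacity_per_replica=100)
heavy = serving_replicas(250, capacity_per_replica=100)
```

Contrast this with a pre-cloud system, where the replica count is fixed by the durability requirement and is paid for even when the data is cold.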

Ability to leverage storage hierarchy

The cloud not only allows us to independently scale storage when needed, but also opens up many more shared storage options, such as remote SSD, remote spinning disks, object stores, and long-term cold storage. These storage tiers each provide different cost-latency characteristics, so we can place data on different storage tiers depending on how frequently it is accessed.

Cloud-native data platforms are commonly designed to take advantage of the storage hierarchy readily available in the cloud. In contrast, exploiting the storage hierarchy was never a design goal for many existing systems because it was difficult to implement multiple physical storage tiers in the pre-cloud world. One had to assemble hardware from multiple vendors to set up a hierarchical storage system. This was cumbersome and time consuming, and only very sophisticated users could afford it.
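Placement by access frequency can be sketched as a simple threshold policy over the four tiers mentioned above. The thresholds are invented for illustration, and `choose_tier` is a hypothetical name; a real platform would tune these from measured cost and latency.

```python
# Tiers ordered from fastest and most expensive to slowest and cheapest.
# Each entry: (minimum accesses per day to qualify, tier name).
# The threshold values are hypothetical.
TIER_POLICY = [
    (1000, "remote_ssd"),
    (10, "remote_spinning_disk"),
    (1, "object_store"),
]
COLDEST_TIER = "cold_storage"


def choose_tier(accesses_per_day):
    """Pick the fastest tier whose access threshold the data meets."""
    for min_accesses, tier in TIER_POLICY:
        if accesses_per_day >= min_accesses:
            return tier
    return COLDEST_TIER


hot = choose_tier(5000)
warm = choose_tier(25)
cool = choose_tier(1)
frozen = choose_tier(0)
```

Because every tier is available behind an API, moving data between tiers becomes a policy decision like this one rather than a hardware procurement project.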

Takeaways

A cloud-only software stack has properties that were never under consideration for traditional systems. Disaggregation is key. Fluid resource management, where hardware supply can closely hug the demand curve, will become the norm, even for stateful systems. Embarrassingly parallel algorithms need to be employed at every opportunity until systems are hardware-resource bound; if a system is not, that is a limitation of your software. You don’t get these advantages by deploying traditional software onto cloud nodes; you have to build for the cloud from the ground up.