On the Pursuit of Happiness (aka Squashing 502/504 Errors)

March 23, 2021

Introduction

502 and 504 errors can be a nuisance for Rockset and our users. For many users running customer-facing applications on Rockset, availability and uptime are very important, so even a single 5xx error is cause for concern. As a cloud service, Rockset deploys code to our production clusters multiple times a week, which means that any component of our distributed system has to stop and restart with new code in an error-free way.

Recently, we embarked on a product quality push to diagnose and remedy many of the causes of 502 and 504 errors that users may encounter. It was not immediately obvious what these issues were since our logging seemed to indicate these error-producing queries did not reach our HTTP endpoints. However, as product quality and user experience are always top of mind for us, we decided to investigate this issue thoroughly to eradicate these errors.

Cluster setup

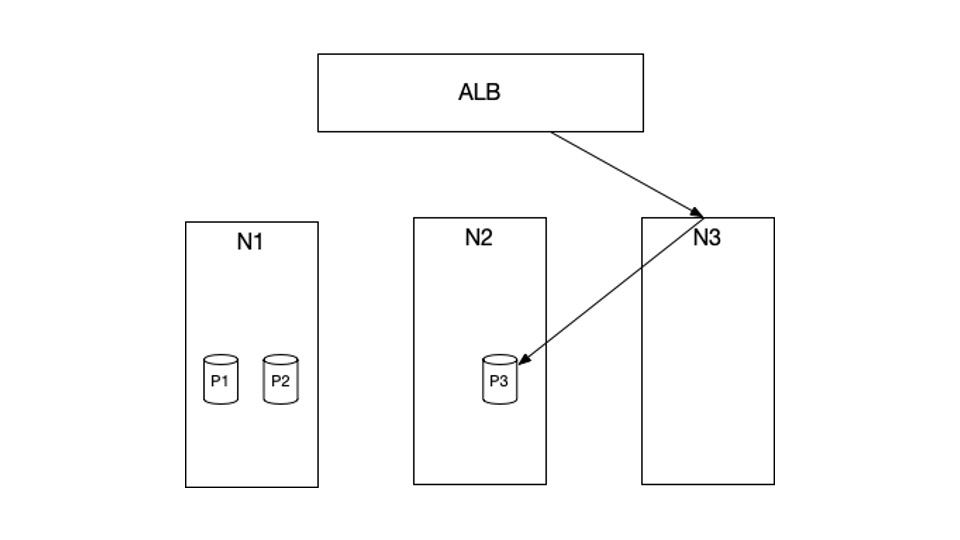

To diagnose the problem, we first need to understand the setup of our cluster. In the example above, N1, N2, and N3 are the EC2 instances currently in our cluster. P1, P2 and P3 are Kubernetes pods that are scheduled in these instances. Specifically, P1 and P2 are scheduled in N1, and P3 is scheduled in N2. There are no pods that are scheduled in N3.

HTTP requests from the web first hit our AWS Application Load Balancer (ALB), which forwards them to our cluster. The services that receive those requests from the ALB are of type NodePort. This means that when a request hits the ALB, it gets routed to a random node, for example N3. N3 then routes the request to a pod such as P3, which could live on a totally different node.

We run kube-proxy in iptables mode. This means the routing described above is done by iptables rules in the kernel, which is pretty efficient. Our HTTP servers are written in Java using Jetty.

With this setup, there are multiple issues we uncovered as we investigated the random 502/504 errors.

Connection idle timeout misconfiguration

Connection idle timeout, or keep-alive timeout, is the amount of time after which a connection is terminated if no data is sent over it. Either end of the connection can initiate the termination. In the diagram above, both the ALB and P3 can terminate the connection, depending on their idle timeout settings. Whichever end has the smaller timeout is the one that terminates the connection.

The issue was that our HTTP server (P3) had a smaller connection idle timeout than the ALB. That meant P3 could terminate a connection just as the ALB was sending a new request over it. The request would then arrive on a TCP connection that had already been closed, and the ALB would return a 502 error.

The solution is that the side that sends the request must be the side with the smaller idle timeout. In this case, that is the ALB. We increased the idle timeout in Jetty with ServerConnector::setIdleTimeout to fix this issue.
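As a minimal sketch of this rule, a check like the one below could run at server startup to catch the misconfiguration early. The class name, method names, and the safety margin are illustrative assumptions, not our production code; the ALB idle timeout has to be supplied from your own load balancer settings (the ALB default is 60 seconds).

```java
import java.time.Duration;

/** Hypothetical startup check: the backend must keep idle connections open longer than the load balancer. */
final class IdleTimeoutCheck {
    private IdleTimeoutCheck() {}

    /**
     * Returns true when the server idle timeout is at least the load balancer
     * idle timeout plus the margin, so that the load balancer (the side that
     * sends requests) is always the one to close an idle connection first.
     */
    static boolean isSafe(Duration lbIdleTimeout, Duration serverIdleTimeout, Duration margin) {
        return serverIdleTimeout.compareTo(lbIdleTimeout.plus(margin)) >= 0;
    }

    /** Fails fast with a descriptive message when the timeouts are misconfigured. */
    static void requireSafe(Duration lbIdleTimeout, Duration serverIdleTimeout, Duration margin) {
        if (!isSafe(lbIdleTimeout, serverIdleTimeout, margin)) {
            throw new IllegalStateException(
                "Server idle timeout " + serverIdleTimeout
                    + " must be at least " + lbIdleTimeout.plus(margin)
                    + " (load balancer idle timeout plus margin)");
        }
    }
}
```

With Jetty, the validated value would then be passed to the connector in milliseconds, for example via `serverIdleTimeout.toMillis()`.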

Draining is not set up properly

The ALB uses persistent HTTP connections when sending requests to our servers. That is, the ALB reuses the TCP connection established for previous HTTP requests in order to avoid the cost of another TCP handshake.

The trouble comes when the server (P3 in this case) wants to drain, possibly due to our periodic code-push, and it does not want to accept any more requests. However, the ALB is unaware of this fact because of the NodePort type of service. Recall that ALB is only aware of the node N3, not the pod P3. That means the ALB would still send requests to N3. Since the TCP connection is reused, these requests would get routed to the draining pod P3. Once the draining period completes, 502s will occur.

One way to fix this is to have every response from P3 include a special header, called Connection: close, when P3 is draining. This header will instruct the ALB not to reuse the old TCP connections and create new ones instead. In this situation, the new connections won't be routed to the draining pods.
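The idea can be sketched with the JDK's built-in `com.sun.net.httpserver` package, used here only as a stand-in for Jetty so that the example has no external dependencies. The class name `DrainAwareHandler` and the `startDraining` hook are hypothetical; in a real service the flag would be flipped by whatever signals shutdown, such as a SIGTERM handler.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpHandler;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical handler that asks clients to stop reusing connections once draining starts. */
final class DrainAwareHandler implements HttpHandler {
    private final AtomicBoolean draining = new AtomicBoolean(false);

    /** Called when the pod is asked to shut down (assumption: wired to your shutdown signal). */
    void startDraining() {
        draining.set(true);
    }

    /** Value for the Connection response header, or null when keep-alive is fine. */
    String connectionHeader() {
        return draining.get() ? "close" : null;
    }

    @Override
    public void handle(HttpExchange exchange) throws IOException {
        byte[] body = "ok\n".getBytes(StandardCharsets.UTF_8);
        String connection = connectionHeader();
        if (connection != null) {
            // Tell the load balancer to open a new connection for its next request.
            exchange.getResponseHeaders().set("Connection", connection);
        }
        exchange.sendResponseHeaders(200, body.length);
        try (OutputStream out = exchange.getResponseBody()) {
            out.write(body);
        }
    }
}
```

The pod keeps serving requests normally while draining; only the extra header changes, so requests already in flight are not affected.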

One tricky part is that the draining period must be longer than your service’s readiness probe period, so that kube-proxy on the other nodes (including N3) becomes aware that P3 is draining and updates its iptables rules accordingly.
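One rough way to size the draining period is sketched below. It assumes the pod is marked unready only after `failureThreshold` consecutive failed probes spaced `periodSeconds` apart (both are standard Kubernetes readiness probe fields), plus a buffer for the change to reach kube-proxy on every node. The buffer and the class name are assumptions to tune for your own cluster, not measured values.

```java
/** Hypothetical helper for choosing a drain period from readiness probe settings. */
final class DrainBudget {
    private DrainBudget() {}

    /**
     * Lower bound for the drain period in seconds: the time for the readiness
     * probe to fail failureThreshold times, plus a buffer for the endpoint
     * change to propagate to the iptables rules on other nodes.
     */
    static long minDrainSeconds(long probePeriodSeconds, int failureThreshold, long propagationBufferSeconds) {
        if (probePeriodSeconds <= 0 || failureThreshold <= 0 || propagationBufferSeconds < 0) {
            throw new IllegalArgumentException("probe period and threshold must be positive, buffer non-negative");
        }
        long detectionSeconds = Math.multiplyExact(probePeriodSeconds, (long) failureThreshold);
        return Math.addExact(detectionSeconds, propagationBufferSeconds);
    }
}
```

For example, a probe with a 10-second period and a failure threshold of 3, with a 5-second buffer, gives a lower bound of 35 seconds.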

Node abruptly removed from cluster

When a new collection is created, Rockset employs a mode called bulk-ingest in order to conduct an initial dump of the source data into the collection. This mode is often very CPU intensive, so we need a special type of EC2 machine that is compute-optimized. Internally, we call these bulk nodes. Since these machines are more expensive, we only spin them up when necessary and terminate when we no longer need them. This turned out to cause 502s as well.

Recall from the previous sections that requests are routed to a random node in the cluster, which in this case includes the bulk nodes. So when bulk nodes join the cluster, they are available for receiving and forwarding requests as well. When the bulk-ingest is completed, we terminate these nodes in order to save on costs. The problem was that we terminated these nodes too abruptly, closing the connection between the node and the ALB, which caused the inflight requests to fail and produced 502 errors. We diagnosed this problem by noticing that the 502 graph aligned with the graph of the number of bulk nodes currently in the system. Every time the number of bulk nodes decreased, 502s occurred.

The fix for us was to use AWS lifecycle hooks to gracefully terminate these nodes, by waiting for the inflight requests to finish before actually terminating them.
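The "wait for inflight requests" part of this can be sketched as a small counter that a termination handler blocks on. This is an illustrative example with hypothetical names, not our actual node-termination code; the call that tells AWS the lifecycle action is complete is left out and would happen once `awaitIdle` returns.

```java
import java.util.concurrent.TimeUnit;

/** Hypothetical tracker that lets a shutdown routine wait until no requests are in flight. */
final class InFlightTracker {
    private int inFlight = 0;

    /** Call when a request starts being forwarded or served. */
    synchronized void begin() {
        inFlight++;
    }

    /** Call when that request has finished, successfully or not. */
    synchronized void end() {
        if (inFlight == 0) {
            throw new IllegalStateException("end() called without a matching begin()");
        }
        inFlight--;
        if (inFlight == 0) {
            notifyAll(); // wake up anyone blocked in awaitIdle
        }
    }

    synchronized int inFlight() {
        return inFlight;
    }

    /**
     * Blocks until no requests are in flight or the timeout elapses.
     * Returns true if idle was reached, false on timeout or interruption.
     */
    synchronized boolean awaitIdle(long timeoutMillis) {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMillis);
        try {
            while (inFlight > 0) {
                long remainingNanos = deadline - System.nanoTime();
                if (remainingNanos <= 0) {
                    return false;
                }
                TimeUnit.NANOSECONDS.timedWait(this, remainingNanos);
            }
            return true;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return false;
        }
    }
}
```

The timeout matters because a lifecycle hook only holds the instance for a bounded time, so the wait should give up before the hook itself expires.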

Node is not ready to route requests

Similar to the previous problem, this issue involves a node that has just joined the cluster and is not yet ready to forward requests. When a node joins the cluster, multiple set-up steps need to happen before traffic can flow between this new node and the other nodes. For example, kube-proxy on the new node needs to set up iptables rules, and the other nodes need to update their firewalls to accept traffic from the new node. These steps are done asynchronously.

That means the ALB would often time out while trying to establish a connection through the new node, because the node could not yet forward the TCP handshake to pods on other nodes. This would cause 504 errors.

We diagnosed this problem by looking at the ALB access logs from AWS. By inspecting the target node (the node the ALB decided to forward the request to), we could see that the 504 errors happened when the target node had just joined the cluster.
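This kind of correlation can be sketched in a few lines. The example below assumes the standard space-separated ALB access log layout, in which the timestamp is the second field, `target:port` the fifth, and `elb_status_code` the ninth. The map of node IPs to join times is a hypothetical input you would have to build from your own cluster events, and all class and method names are illustrative.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Map;
import java.util.Optional;

/** Hypothetical helper for spotting 504s whose target node had only just joined the cluster. */
final class AlbLogCheck {
    private AlbLogCheck() {}

    /** The few fields of an ALB access log entry needed for this check. */
    static final class Entry {
        final Instant time;
        final String targetIp;
        final int elbStatus;

        Entry(Instant time, String targetIp, int elbStatus) {
            this.time = time;
            this.targetIp = targetIp;
            this.elbStatus = elbStatus;
        }
    }

    /** Parses one log line; returns empty if the line does not have the expected shape. */
    static Optional<Entry> parse(String line) {
        String[] fields = line.split(" ", 11);
        if (fields.length < 9) {
            return Optional.empty();
        }
        try {
            Instant time = Instant.parse(fields[1]);
            String target = fields[4]; // "ip:port", or "-" if no target was chosen
            int colon = target.lastIndexOf(':');
            String ip = colon > 0 ? target.substring(0, colon) : target;
            return Optional.of(new Entry(time, ip, Integer.parseInt(fields[8])));
        } catch (RuntimeException e) {
            return Optional.empty(); // malformed timestamp or status code
        }
    }

    /** True if the entry is a 504 whose target joined the cluster at most `window` before the request. */
    static boolean isRecentJoin504(Entry entry, Map<String, Instant> nodeJoinTimes, Duration window) {
        if (entry.elbStatus != 504) {
            return false;
        }
        Instant joined = nodeJoinTimes.get(entry.targetIp);
        if (joined == null) {
            return false;
        }
        Duration sinceJoin = Duration.between(joined, entry.time);
        return !sinceJoin.isNegative() && sinceJoin.compareTo(window) <= 0;
    }
}
```

Counting how many 504 entries pass `isRecentJoin504` for a window of a few minutes gives a quick signal of whether newly joined nodes are the culprit.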

There are two ways to fix this: delay the node joining the ALB target group by a few minutes, or have a static set of nodes for routing. We didn't want to introduce unnecessary delays, so we went with the latter approach. We do this by applying the label alpha.service-controller.kubernetes.io/exclude-balancer=true to the nodes we don’t want the ALB to route to.

Kubernetes bug

Even after we resolved the issues above, a few 502s would still occur. Understandably, a k8s bug was the last thing we would think of. Luckily, a colleague of mine pointed out this article on a k8s bug causing intermittent connection resets, which matched our problem. It is a k8s bug present in versions before 1.15. The workaround is to set ip_conntrack_tcp_be_liberal so that conntrack does not mark these packets as INVALID.

echo 1 > /proc/sys/net/ipv4/netfilter/ip_conntrack_tcp_be_liberal

Conclusion

502 and 504 errors can happen for many reasons. Some are unavoidable, such as AWS removing nodes abruptly or your service running out of memory or crashing. In this post, I described the issues that can be fixed through proper configuration.

Personally, this has been a great learning experience. I learned a lot about k8s networking and k8s in general. At Rockset, there are many similar challenges, and we're aggressively hiring. If you're interested, please drop me a message!