How to Use Terraform with Rockset

January 26, 2023

This blog post shows best practices for using Terraform to configure Rockset: ingesting data into two collections, setting up a view and the query lambdas an application uses, and later updating those query lambdas. This mirrors how we use Terraform at Rockset to manage our own Rockset resources.

Terraform is one of the most widely used DevOps tools for infrastructure management. It lets you define your infrastructure as code. It then takes that configuration and computes the steps needed to get from the current state to the desired state.

Finally, we’ll look at how to use GitHub Actions to automatically run terraform plan for pull requests and, once a pull request is approved and merged, run terraform apply to make the required changes.

The full Terraform configuration used in this blog post is available here.

Terraform

To follow along on your own, you will need:

You also need to install Terraform on your computer. On macOS this is as simple as:

```shell
$ brew tap hashicorp/tap
$ brew install hashicorp/tap/terraform
```

(Instructions for other operating systems are available in the link above.)

Provider setup

The first step is to configure the providers we will use: Rockset and AWS. Create a file called _provider.tf with the following contents:

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }
    rockset = {
      source  = "rockset/rockset"
      version = "~> 0.6.2"
    }
    # used by the time_sleep resource in the S3 integration section
    time = {
      source  = "hashicorp/time"
      version = "~> 0.9"
    }
  }
}

provider "rockset" {}

provider "aws" {
  region = "us-west-2"
}
```

Both providers read the credentials they need to access their respective services from environment variables:

- Rockset: ROCKSET_APIKEY and ROCKSET_APISERVER
- AWS: AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, or AWS_PROFILE

Backend configuration

Terraform saves information about the managed infrastructure and its configuration in a state file. To share this state between local runs on your computer and automated runs from GitHub Actions, we use a backend configuration that stores the state in an AWS S3 bucket, so every invocation of Terraform can use it. The backend block must be nested inside a terraform block, either the one in _provider.tf or a separate one like this:

```hcl
terraform {
  backend "s3" {
    bucket = "rockset-community-terraform"
    key    = "blog/state"
    region = "us-west-2"
  }
}
```

⚠️ For a production deployment, make sure to configure state locking too.
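A minimal sketch of state locking, assuming the S3 backend's DynamoDB-based locking (the dynamodb_table argument) and a hypothetical table named terraform-locks. The table needs a string partition key called LockID, and it has to exist before the backend uses it, so it is best created from a separate bootstrap configuration. Recent Terraform releases (1.10 and later) can alternatively lock with a lock file in the bucket itself (use_lockfile = true).

```hcl
# Hypothetical lock table; "terraform-locks" is an assumed name.
# Create it before enabling locking, e.g. from a separate bootstrap configuration.
resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID" # the S3 backend expects exactly this key name

  attribute {
    name = "LockID"
    type = "S"
  }
}

# Replace the backend block above with this version to enable locking.
terraform {
  backend "s3" {
    bucket         = "rockset-community-terraform"
    key            = "blog/state"
    region         = "us-west-2"
    dynamodb_table = "terraform-locks" # must match the table above
    encrypt        = true              # server-side encryption of the state object
  }
}
```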

AWS IAM Role

To allow Rockset to ingest the contents of an S3 bucket, we first create an AWS IAM role that Rockset assumes to access the bucket. The configuration uses the rockset_account data source to read your Rockset organization's AWS account ID and external ID, which the role's trust policy needs.

```hcl
data "rockset_account" "current" {}

resource "aws_iam_policy" "rockset-s3-integration" {
  name = var.rockset_role_name
  policy = templatefile("${path.module}/data/policy.json", {
    bucket = var.bucket
    prefix = var.bucket_prefix
  })
}

resource "aws_iam_role" "rockset" {
  name               = var.rockset_role_name
  assume_role_policy = data.aws_iam_policy_document.rockset-trust-policy.json
}

# Trust policy: only Rockset's AWS account, presenting your external ID, may assume the role
data "aws_iam_policy_document" "rockset-trust-policy" {
  statement {
    sid     = ""
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      identifiers = [
        "arn:aws:iam::${data.rockset_account.current.account_id}:root"
      ]
      type = "AWS"
    }

    condition {
      test     = "StringEquals"
      values   = [data.rockset_account.current.external_id]
      variable = "sts:ExternalId"
    }
  }
}

resource "aws_iam_role_policy_attachment" "rockset_s3_integration" {
  role       = aws_iam_role.rockset.name
  policy_arn = aws_iam_policy.rockset-s3-integration.arn
}
```

This creates a cross-account IAM role that Rockset is allowed to assume to ingest data.
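The contents of data/policy.json aren't shown, but the plan output later in this post shows what it renders to. As a sketch, the same policy could be built inline with an aws_iam_policy_document data source instead of a template file. The data source name rockset-s3-read is hypothetical, and the object ARN assumes var.bucket_prefix is an empty string, which reproduces the bucket/* resource seen in the plan.

```hcl
# Sketch: an inline alternative to the data/policy.json template.
# Assumes var.bucket_prefix is "" so the ARNs match the plan output.
data "aws_iam_policy_document" "rockset-s3-read" {
  policy_id = "RocksetS3IntegrationPolicy"

  # Rockset needs to list the bucket...
  statement {
    sid       = "BucketActions"
    effect    = "Allow"
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::${var.bucket}"]
  }

  # ...and read the objects under the prefix
  statement {
    sid       = "ObjectActions"
    effect    = "Allow"
    actions   = ["s3:GetObject"]
    resources = ["arn:aws:s3:::${var.bucket}/${var.bucket_prefix}*"]
  }
}

# aws_iam_policy.rockset-s3-integration would then use:
#   policy = data.aws_iam_policy_document.rockset-s3-read.json
```

One advantage of this form is that Terraform validates the structure of the document, whereas a JSON template is only checked by AWS when the policy is created.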

Rockset S3 integration

Now we can create the integration that enables Rockset to ingest data from S3, using the IAM role above.

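```hcl
# Give the newly created IAM role time to propagate in AWS.
# Note: despite its name, this resource waits 15 seconds.
resource "time_sleep" "wait_30s" {
  depends_on      = [aws_iam_role.rockset]
  create_duration = "15s"
}

resource "rockset_s3_integration" "integration" {
  name         = var.bucket
  aws_role_arn = aws_iam_role.rockset.arn
  depends_on   = [time_sleep.wait_30s]
}
```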

⚠️ If you skip the time_sleep resource, you may get an AWS cross-account role error, because it takes a few seconds for a newly created IAM role to propagate. The delay saves you from having to rerun terraform apply.

Rockset collection

With the integration in place, we can create a workspace to hold all the resources we will add, and then set up a collection that ingests data using the S3 integration above.

```hcl
resource "rockset_workspace" "blog" {
  name = "blog"
}

resource "rockset_s3_collection" "movies" {
  name           = var.collection
  workspace      = rockset_workspace.blog.name
  retention_secs = var.retention_secs

  source {
    format           = "json"
    integration_name = rockset_s3_integration.integration.name
    bucket           = var.bucket
    pattern          = "public/movies/*.json"
  }
}
```
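The configuration so far references several input variables that the post doesn't declare. A sketch of the matching entries in variables.tf: the defaults for rockset_role_name, bucket, and collection are taken from the plan and apply output later in this post, while the bucket_prefix and retention_secs defaults are assumptions.

```hcl
variable "rockset_role_name" {
  type        = string
  default     = "rockset-s3-integration" # from the plan output
  description = "Name of the IAM role and policy that Rockset uses to read the bucket."
}

variable "bucket" {
  type        = string
  default     = "rockset-community-datasets" # from the plan output
  description = "S3 bucket to ingest from; also used as the integration name."
}

variable "bucket_prefix" {
  type        = string
  default     = "" # assumption
  description = "Key prefix passed to the IAM policy template."
}

variable "collection" {
  type        = string
  default     = "movies-s3" # from the apply output (id blog.movies-s3)
  description = "Name of the S3-backed collection."
}

variable "retention_secs" {
  type        = number
  default     = 604800 # assumption: 7 days
  description = "How long documents are retained in the collections, in seconds."
}
```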

Kafka Collection

Next, we’ll set up a collection from a Confluent Cloud source and add an ingest transformation that summarizes the data.

```hcl
resource "rockset_kafka_integration" "confluent" {
  name              = "confluent-cloud-blog"
  use_v3            = true
  bootstrap_servers = var.KAFKA_REST_ENDPOINT
  security_config = {
    api_key = var.KAFKA_API_KEY
    secret  = var.KAFKA_API_SECRET
  }
}

resource "rockset_kafka_collection" "orders" {
  name           = "orders"
  workspace      = rockset_workspace.blog.name
  retention_secs = var.retention_secs

  source {
    integration_name = rockset_kafka_integration.confluent.name
  }

  # the ingest transformation SQL lives in its own file
  field_mapping_query = file("data/transformation.sql")
}
```

The SQL for the ingest transformation is stored in a separate file, data/transformation.sql, which Terraform reads into the configuration with the file() function.

```sql
SELECT
    COUNT(i.orderid) AS orders,
    SUM(i.orderunits) AS units,
    i.address.zipcode,
    i.address.state,
    -- bucket data in five minute buckets
    TIME_BUCKET(MINUTES(5), TIMESTAMP_MILLIS(i.ordertime)) AS _event_time
FROM
    _input AS i
WHERE
    -- drop all records with an incorrect state
    i.address.state != 'State_'
GROUP BY
    _event_time,
    i.address.zipcode,
    i.address.state
```
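The Kafka settings are also input variables. A sketch of how they might be declared: marking the credentials as sensitive keeps Terraform from printing them in plan and apply output, although they are still stored in the state file, which is one more reason to keep the state bucket private. Leaving out defaults means the values must be supplied at run time, for example through the TF_VAR_KAFKA_API_KEY and TF_VAR_KAFKA_API_SECRET environment variables.

```hcl
variable "KAFKA_REST_ENDPOINT" {
  type        = string
  description = "Confluent Cloud endpoint passed to bootstrap_servers."
}

variable "KAFKA_API_KEY" {
  type      = string
  sensitive = true # redacted in plan/apply output, but still present in state
}

variable "KAFKA_API_SECRET" {
  type      = string
  sensitive = true
}
```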

View

With the data ingested into a collection, we can create a view, which restricts the documents that can be accessed through it.

```hcl
resource "rockset_view" "english-movies" {
  name      = "english-movies"
  query     = file("data/view.sql")
  workspace = rockset_workspace.blog.name
  # Terraform can't see the alias reference inside the SQL file
  depends_on = [rockset_alias.movies]
}
```

The view needs an explicit depends_on meta-argument because Terraform doesn’t parse the view's SQL, which lives in a separate file, so it can't tell that the view must be created after the movies alias.
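An alternative to depends_on is to render the SQL with templatefile() and pass in the alias name. Referencing rockset_alias.movies in an expression gives Terraform an implicit dependency, so the ordering no longer relies on remembering the meta-argument. This is a sketch: the template file data/view.sql.tftpl is hypothetical and would contain the view's SQL with ${movies} in place of the hard-coded alias name.

```hcl
# Variant of the view above. The alias reference in templatefile()'s
# arguments creates an implicit dependency, so depends_on is not needed.
resource "rockset_view" "english-movies" {
  name      = "english-movies"
  workspace = rockset_workspace.blog.name
  query = templatefile("${path.module}/data/view.sql.tftpl", {
    # renders as "blog.movies"
    movies = "${rockset_workspace.blog.name}.${rockset_alias.movies.name}"
  })
}
```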

Alias

An alias is a way to refer to an existing collection by a different name. It is a convenient way to change which collection a set of queries uses, without having to update the SQL of every query.

```hcl
resource "rockset_alias" "movies" {
  collections = ["${rockset_workspace.blog.name}.${rockset_s3_collection.movies.name}"]
  name        = "movies"
  workspace   = rockset_workspace.blog.name
}
```

For instance, if we started ingesting movies from a Kafka stream, we could update the alias to reference the new collection, and every query that uses the alias would start reading from it.
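A sketch of that change, assuming a hypothetical Kafka-backed collection resource named rockset_kafka_collection.movies-stream (it is not part of this post's configuration). Only the collections list of the alias changes; the view and the query lambda keep referring to blog.movies.

```hcl
# Sketch: repoint the alias at a hypothetical Kafka-backed movies collection.
# rockset_kafka_collection.movies-stream does not exist in this configuration.
resource "rockset_alias" "movies" {
  name      = "movies"
  workspace = rockset_workspace.blog.name
  collections = [
    "${rockset_workspace.blog.name}.${rockset_kafka_collection.movies-stream.name}",
  ]
}
```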

Role

We create a role that can only execute query lambdas in the blog workspace, create an API key for that role, and save the key in the AWS Systems Manager Parameter Store, where the code that executes the lambda can retrieve it later. This way the credentials never have to be exposed to a human.

```hcl
resource "rockset_role" "read-only" {
  name = "blog-read-only"

  privilege {
    action        = "EXECUTE_QUERY_LAMBDA_WS"
    cluster       = "*ALL*"
    resource_name = rockset_workspace.blog.name
  }
}

resource "rockset_api_key" "ql-only" {
  name = "blog-ql-only"
  role = rockset_role.read-only.name
}

# Store the key as an encrypted SecureString parameter
resource "aws_ssm_parameter" "api-key" {
  name  = "/rockset/blog/apikey"
  type  = "SecureString"
  value = rockset_api_key.ql-only.key
}
```
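The code that executes the query lambda needs permission to read this parameter. A minimal sketch of a least-privilege IAM policy for it, assuming the application runs under an AWS role of its own (attaching the policy to that role is not shown) and using a hypothetical policy name. A parameter encrypted with a customer-managed KMS key would additionally need kms:Decrypt on that key.

```hcl
# Sketch: allow reading only the Rockset API key parameter.
data "aws_iam_policy_document" "read-rockset-api-key" {
  statement {
    sid    = "ReadRocksetApiKey"
    effect = "Allow"
    # GetParameters is what "aws ssm get-parameters" calls
    actions   = ["ssm:GetParameter", "ssm:GetParameters"]
    resources = [aws_ssm_parameter.api-key.arn]
  }
}

resource "aws_iam_policy" "read-rockset-api-key" {
  name   = "read-rockset-blog-api-key" # hypothetical name
  policy = data.aws_iam_policy_document.read-rockset-api-key.json
}
```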

Query Lambda

The query lambda keeps its SQL in a separate file, data/top-rated.sql. It also has a stable tag controlled by the Terraform variable stable_version: when the variable is set, the tag is pinned to that version of the query lambda; when it is empty, the tag points to the latest version.

Placing the SQL in a separate file isn’t required, but it makes the SQL easier to read, and you can copy and paste it into the Rockset console to try changes manually. It also makes changes to the SQL easier to review, because they aren’t intermingled with other changes, as they would be if the SQL were placed inline in the Terraform configuration.

```sql
SELECT
    title,
    TIME_BUCKET(
        YEARS(1),
        PARSE_TIMESTAMP('%Y-%m-%d', release_date)
    ) as year,
    popularity
FROM
    blog.movies AS m
where
    release_date != ''
    AND popularity > 10
GROUP BY
    year,
    title,
    popularity
order by
    popularity desc
```

```hcl
resource "rockset_query_lambda" "top-rated" {
  name      = "top-rated-movies"
  workspace = rockset_workspace.blog.name

  sql {
    query = file("data/top-rated.sql")
  }
}

resource "rockset_query_lambda_tag" "stable" {
  name         = "stable"
  query_lambda = rockset_query_lambda.top-rated.name
  # an empty stable_version means "follow the latest version"
  version   = var.stable_version == "" ? rockset_query_lambda.top-rated.version : var.stable_version
  workspace = rockset_workspace.blog.name
}
```
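The apply output later in this post prints a latest-version output, but its definition isn't shown. A plausible outputs.tf entry, assuming it simply exposes the query lambda's version attribute:

```hcl
# Prints the newest query lambda version after every apply, which is the
# value to copy into stable_version once that version has been tested.
output "latest-version" {
  value       = rockset_query_lambda.top-rated.version
  description = "Most recent version of the top-rated-movies query lambda."
}
```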

Applying the configuration

With all configuration files in place, it is time to “apply” the changes: Terraform reads the configuration files, queries Rockset and AWS for the current configuration, and then calculates the steps it needs to take to reach the desired end state.

The first step is to run terraform init, which downloads all required Terraform providers and configures the S3 backend.

```text
$ terraform init

Initializing the backend...

Successfully configured the backend "s3"! Terraform will automatically
use this backend unless the backend configuration changes.

Initializing provider plugins...
- Finding hashicorp/aws versions matching "~> 4.0"...
- Finding rockset/rockset versions matching "~> 0.6.2"...
- Installing hashicorp/aws v4.39.0...
- Installed hashicorp/aws v4.39.0 (signed by HashiCorp)
- Installing hashicorp/time v0.9.1...
- Installed hashicorp/time v0.9.1 (signed by HashiCorp)
- Installing rockset/rockset v0.6.2...
- Installed rockset/rockset v0.6.2 (signed by a HashiCorp partner, key ID DB47D0C3DF97C936)

Partner and community providers are signed by their developers.
If you'd like to know more about provider signing, you can read about it here:
https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider
selections it made above. Include this file in your version control repository
so that Terraform can guarantee to make the same selections by default when
you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Next, we run terraform plan to see which resources Terraform is going to create.

```text
$ terraform plan
data.rockset_account.current: Reading...
data.rockset_account.current: Read complete after 0s [id=318212636800]
data.aws_iam_policy_document.rockset-trust-policy: Reading...
data.aws_iam_policy_document.rockset-trust-policy: Read complete after 0s [id=2982727827]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # aws_iam_policy.rockset-s3-integration will be created
  + resource "aws_iam_policy" "rockset-s3-integration" {
      + arn       = (known after apply)
      + id        = (known after apply)
      + name      = "rockset-s3-integration"
      + path      = "/"
      + policy    = jsonencode(
            {
              + Id        = "RocksetS3IntegrationPolicy"
              + Statement = [
                  + {
                      + Action   = [
                          + "s3:ListBucket",
                        ]
                      + Effect   = "Allow"
                      + Resource = [
                          + "arn:aws:s3:::rockset-community-datasets",
                        ]
                      + Sid      = "BucketActions"
                    },
                  + {
                      + Action   = [
                          + "s3:GetObject",
                        ]
                      + Effect   = "Allow"
                      + Resource = [
                          + "arn:aws:s3:::rockset-community-datasets/*",
                        ]
                      + Sid      = "ObjectActions"
                    },
                ]
              + Version   = "2012-10-17"
            }
        )
      + policy_id = (known after apply)
      + tags_all  = (known after apply)
    }

...

  # rockset_workspace.blog will be created
  + resource "rockset_workspace" "blog" {
      + created_by  = (known after apply)
      + description = "created by Rockset terraform provider"
      + id          = (known after apply)
      + name        = "blog"
    }

Plan: 16 to add, 0 to change, 0 to destroy.

─────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.
```

Review the output and verify that Terraform is going to do what you expect. Then apply the changes with terraform apply, which repeats the plan output and asks you to confirm before it makes any changes.

```text
$ terraform apply
data.rockset_account.current: Reading...
data.rockset_account.current: Read complete after 0s [id=318212636800]
data.aws_iam_policy_document.rockset-trust-policy: Reading...
data.aws_iam_policy_document.rockset-trust-policy: Read complete after 0s [id=2982727827]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

...

  # time_sleep.wait_30s will be created
  + resource "time_sleep" "wait_30s" {
      + create_duration = "15s"
      + id              = (known after apply)
    }

Plan: 16 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

rockset_workspace.blog: Creating...
rockset_kafka_integration.confluent: Creating...
rockset_workspace.blog: Creation complete after 0s [id=blog]
rockset_role.read-only: Creating...
rockset_query_lambda.top-rated: Creating...
rockset_role.read-only: Creation complete after 1s [id=blog-read-only]
rockset_api_key.ql-only: Creating...
rockset_api_key.ql-only: Creation complete after 0s [id=blog-ql-only]
rockset_query_lambda.top-rated: Creation complete after 1s [id=blog.top-rated-movies]
rockset_query_lambda_tag.stable: Creating...
rockset_query_lambda_tag.stable: Creation complete after 0s [id=blog.top-rated-movies.stable]
rockset_kafka_integration.confluent: Creation complete after 1s [id=confluent-cloud-blog]
rockset_kafka_collection.orders: Creating...
aws_ssm_parameter.api-key: Creating...
aws_iam_role.rockset: Creating...
aws_iam_policy.rockset-s3-integration: Creating...
aws_ssm_parameter.api-key: Creation complete after 1s [id=/rockset/blog/apikey]
aws_iam_policy.rockset-s3-integration: Creation complete after 1s [id=arn:aws:iam::459021908517:policy/rockset-s3-integration]
aws_iam_role.rockset: Creation complete after 2s [id=rockset-s3-integration]
aws_iam_role_policy_attachment.rockset_s3_integration: Creating...
time_sleep.wait_30s: Creating...
aws_iam_role_policy_attachment.rockset_s3_integration: Creation complete after 0s [id=rockset-s3-integration-20221114233744029000000001]
rockset_kafka_collection.orders: Still creating... [10s elapsed]
time_sleep.wait_30s: Still creating... [10s elapsed]
time_sleep.wait_30s: Creation complete after 15s [id=2022-11-14T23:37:58Z]
rockset_s3_integration.integration: Creating...
rockset_s3_integration.integration: Creation complete after 0s [id=rockset-community-datasets]
rockset_s3_collection.movies: Creating...
rockset_kafka_collection.orders: Still creating... [20s elapsed]
rockset_s3_collection.movies: Still creating... [10s elapsed]
rockset_kafka_collection.orders: Still creating... [30s elapsed]
rockset_kafka_collection.orders: Creation complete after 34s [id=blog.orders]
rockset_s3_collection.movies: Still creating... [20s elapsed]
rockset_s3_collection.movies: Still creating... [30s elapsed]
rockset_s3_collection.movies: Still creating... [40s elapsed]
rockset_s3_collection.movies: Creation complete after 43s [id=blog.movies-s3]
rockset_alias.movies: Creating...
rockset_alias.movies: Creation complete after 1s [id=blog.movies]
rockset_view.english-movies: Creating...
rockset_view.english-movies: Creation complete after 1s [id=blog.english-movies]

Apply complete! Resources: 16 added, 0 changed, 0 destroyed.

Outputs:

latest-version = "0eb04bfed335946d"
```

So in about one minute Terraform created all the required resources, including 15 seconds spent waiting for the AWS IAM role to propagate.

Updating resources

Once the initial configuration has been applied, we may need to modify one or more resources, for example to update the SQL of a query lambda. Terraform helps us plan these changes and applies only what has changed. Here we raise the popularity threshold in data/top-rated.sql from 10 to 11:

```sql
SELECT
    title,
    TIME_BUCKET(
        YEARS(1),
        PARSE_TIMESTAMP('%Y-%m-%d', release_date)
    ) as year,
    popularity
FROM
    blog.movies AS m
where
    release_date != ''
    AND popularity > 11
GROUP BY
    year,
    title,
    popularity
order by
    popularity desc
```

We’ll also update the variables.tf file to pin the stable tag to the current version, so that the stable tag doesn’t change until we have properly tested the new version.

```hcl
variable "stable_version" {
  type        = string
  default     = "0eb04bfed335946d"
  description = "Query Lambda version for the stable tag. If empty, the latest version is used."
}
```
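Because a mistyped version only surfaces when Rockset rejects it during apply, you could also add a validation rule to the variable. A sketch of that variant; the 16-character lowercase hexadecimal format is an assumption based on the version IDs shown in this post.

```hcl
variable "stable_version" {
  type        = string
  default     = "0eb04bfed335946d"
  description = "Query Lambda version for the stable tag. If empty, the latest version is used."

  validation {
    # can() turns a failed regex match into false instead of an error
    condition     = var.stable_version == "" || can(regex("^[0-9a-f]{16}$", var.stable_version))
    error_message = "The stable_version value must be empty or a 16-character hex query lambda version."
  }
}
```

With this rule, terraform plan fails early with the error message above instead of sending an invalid version to Rockset.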

Now we can go ahead and apply the changes.

```text
$ terraform apply
data.rockset_account.current: Reading...
rockset_workspace.blog: Refreshing state... [id=blog]
rockset_kafka_integration.confluent: Refreshing state... [id=confluent-cloud-blog]
rockset_role.read-only: Refreshing state... [id=blog-read-only]
rockset_query_lambda.top-rated: Refreshing state... [id=blog.top-rated-movies]
rockset_kafka_collection.orders: Refreshing state... [id=blog.orders]
rockset_api_key.ql-only: Refreshing state... [id=blog-ql-only]
rockset_query_lambda_tag.stable: Refreshing state... [id=blog.top-rated-movies.stable]
data.rockset_account.current: Read complete after 1s [id=318212636800]
data.aws_iam_policy_document.rockset-trust-policy: Reading...
aws_iam_policy.rockset-s3-integration: Refreshing state... [id=arn:aws:iam::459021908517:policy/rockset-s3-integration]
aws_ssm_parameter.api-key: Refreshing state... [id=/rockset/blog/apikey]
data.aws_iam_policy_document.rockset-trust-policy: Read complete after 0s [id=2982727827]
aws_iam_role.rockset: Refreshing state... [id=rockset-s3-integration]
aws_iam_role_policy_attachment.rockset_s3_integration: Refreshing state... [id=rockset-s3-integration-20221114233744029000000001]
time_sleep.wait_30s: Refreshing state... [id=2022-11-14T23:37:58Z]
rockset_s3_integration.integration: Refreshing state... [id=rockset-community-datasets]
rockset_s3_collection.movies: Refreshing state... [id=blog.movies-s3]
rockset_alias.movies: Refreshing state... [id=blog.movies]
rockset_view.english-movies: Refreshing state... [id=blog.english-movies]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  ~ update in-place

Terraform will perform the following actions:

  # rockset_query_lambda.top-rated will be updated in-place
  ~ resource "rockset_query_lambda" "top-rated" {
        id          = "blog.top-rated-movies"
        name        = "top-rated-movies"
      ~ version     = "0eb04bfed335946d" -> (known after apply)
        # (3 unchanged attributes hidden)

      - sql {
          - query = <<-EOT
                SELECT
                    title,
                    TIME_BUCKET(
                        YEARS(1),
                        PARSE_TIMESTAMP('%Y-%m-%d', release_date)
                    ) as year,
                    popularity
                FROM
                    blog.movies AS m
                where
                    release_date != ''
                    AND popularity > 10
                GROUP BY
                    year,
                    title,
                    popularity
                order by
                    popularity desc
            EOT -> null
        }
      + sql {
          + query = <<-EOT
                SELECT
                    title,
                    TIME_BUCKET(
                        YEARS(1),
                        PARSE_TIMESTAMP('%Y-%m-%d', release_date)
                    ) as year,
                    popularity
                FROM
                    blog.movies AS m
                where
                    release_date != ''
                    AND popularity > 11
                GROUP BY
                    year,
                    title,
                    popularity
                ORDER BY
                    popularity desc
            EOT
        }
    }

Plan: 0 to add, 1 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

rockset_query_lambda.top-rated: Modifying... [id=blog.top-rated-movies]
rockset_query_lambda.top-rated: Modifications complete after 0s [id=blog.top-rated-movies]

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Outputs:

latest-version = "2e268a64224ce9b2"
```

As you can see, Terraform created a new query lambda version because the SQL changed, while the stable tag stayed pinned to the previous version.

Executing the Query Lambda

You can execute the query lambda from the command line using curl. The command below reads the API key from the AWS SSM Parameter Store and then executes the lambda using the latest tag.

```shell
$ curl --request POST \
    --url https://api.usw2a1.rockset.com/v1/orgs/self/ws/blog/lambdas/top-rated-movies/tags/latest \
    -H "Authorization: ApiKey $(aws ssm get-parameters --with-decryption --query 'Parameters[*].{Value:Value}' --output=text --names /rockset/blog/apikey)" \
    -H 'Content-Type: application/json'
```

When we have verified that the query lambda returns the correct results, we can update the stable tag by setting stable_version to the latest-version output of the last terraform apply command.

```hcl
variable "stable_version" {
  type        = string
  default     = "2e268a64224ce9b2"
  description = "Query Lambda version for the stable tag. If empty, the latest version is used."
}
```
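Instead of editing the default in variables.tf for every promotion, you could set the value in a terraform.tfvars file. Terraform loads that file automatically and its values override variable defaults, so each promotion becomes a one-line change that is easy to review in a pull request. A minimal sketch:

```hcl
# terraform.tfvars: loaded automatically by terraform plan and terraform apply;
# this value overrides the default declared in variables.tf.
stable_version = "2e268a64224ce9b2"
```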

Finally, apply the changes again to update the tag.

```text
$ terraform apply
rockset_workspace.blog: Refreshing state... [id=blog]
data.rockset_account.current: Reading...
rockset_kafka_integration.confluent: Refreshing state... [id=confluent-cloud-blog]
rockset_query_lambda.top-rated: Refreshing state... [id=blog.top-rated-movies]
rockset_role.read-only: Refreshing state... [id=blog-read-only]
rockset_kafka_collection.orders: Refreshing state... [id=blog.orders]
rockset_api_key.ql-only: Refreshing state... [id=blog-ql-only]
rockset_query_lambda_tag.stable: Refreshing state... [id=blog.top-rated-movies.stable]
data.rockset_account.current: Read complete after 1s [id=318212636800]
aws_iam_policy.rockset-s3-integration: Refreshing state... [id=arn:aws:iam::459021908517:policy/rockset-s3-integration]
data.aws_iam_policy_document.rockset-trust-policy: Reading...
aws_ssm_parameter.api-key: Refreshing state... [id=/rockset/blog/apikey]
data.aws_iam_policy_document.rockset-trust-policy: Read complete after 0s [id=2982727827]
aws_iam_role.rockset: Refreshing state... [id=rockset-s3-integration]
aws_iam_role_policy_attachment.rockset_s3_integration: Refreshing state... [id=rockset-s3-integration-20221114233744029000000001]
time_sleep.wait_30s: Refreshing state... [id=2022-11-14T23:37:58Z]
rockset_s3_integration.integration: Refreshing state... [id=rockset-community-datasets]
rockset_s3_collection.movies: Refreshing state... [id=blog.movies-s3]
rockset_alias.movies: Refreshing state... [id=blog.movies]
rockset_view.english-movies: Refreshing state... [id=blog.english-movies]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  ~ update in-place

Terraform will perform the following actions:

  # rockset_query_lambda_tag.stable will be updated in-place
  ~ resource "rockset_query_lambda_tag" "stable" {
        id      = "blog.top-rated-movies.stable"
        name    = "stable"
      ~ version = "0eb04bfed335946d" -> "2e268a64224ce9b2"
        # (2 unchanged attributes hidden)
    }

Plan: 0 to add, 1 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

rockset_query_lambda_tag.stable: Modifying... [id=blog.top-rated-movies.stable]
rockset_query_lambda_tag.stable: Modifications complete after 1s [id=blog.top-rated-movies.stable]

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Outputs:

latest-version = "2e268a64224ce9b2"
```

Now the stable tag refers to the latest query lambda version.

GitHub Action

To get the full benefit of Infrastructure as Code, we’re going to put all Terraform configuration in a Git repository hosted on GitHub and use the pull request workflow for Terraform changes.

We will set up a GitHub Actions workflow that automatically runs terraform plan for each pull request and posts a comment on the PR showing the planned changes.

Once the pull request is approved and merged, the workflow runs terraform apply to apply the changes from your pull request to Rockset.

Setup

This section is a shortened version of Automate Terraform with GitHub Actions, which walks you through every step in much greater detail.

Save the following file as .github/workflows/terraform.yml:

```yaml
name: "Terraform"

on:
  push:
    branches:
      - master
  pull_request:

jobs:
  terraform:
    name: "Terraform"
    runs-on: ubuntu-latest
    # Credentials for the S3 backend and both providers. The secret names are
    # assumptions; store them as repository secrets under names of your choice.
    env:
      AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
      ROCKSET_APIKEY: ${{ secrets.ROCKSET_APIKEY }}
      ROCKSET_APISERVER: ${{ secrets.ROCKSET_APISERVER }}
      # Terraform reads TF_VAR_<name> environment variables as input variables
      TF_VAR_KAFKA_REST_ENDPOINT: ${{ secrets.KAFKA_REST_ENDPOINT }}
      TF_VAR_KAFKA_API_KEY: ${{ secrets.KAFKA_API_KEY }}
      TF_VAR_KAFKA_API_SECRET: ${{ secrets.KAFKA_API_SECRET }}
    steps:
      - name: Checkout
        uses: actions/checkout@v3

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v1
        with:
          # optionally pin a version, e.g. terraform_version: 0.13.0
          # the token is only needed when the state is stored in Terraform Cloud
          cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

      - name: Terraform Format
        id: fmt
        run: terraform fmt -check
        working-directory: terraform/blog

      - name: Terraform Init
        id: init
        run: terraform init
        working-directory: terraform/blog

      - name: Terraform Validate
        id: validate
        run: terraform validate -no-color
        working-directory: terraform/blog

      - name: Terraform Plan
        id: plan
        if: github.event_name == 'pull_request'
        run: terraform plan -no-color -input=false
        working-directory: terraform/blog
        continue-on-error: true

      # Post the plan as a PR comment (working-directory only applies to run steps)
      - uses: actions/github-script@v6
        if: github.event_name == 'pull_request'
        env:
          PLAN: "terraform\n${{ steps.plan.outputs.stdout }}"
        with:
          github-token: ${{ secrets.GITHUB_TOKEN }}
          script: |
            const output = `#### Terraform Format and Style 🖌\`${{ steps.fmt.outcome }}\`
            #### Terraform Initialization ⚙️\`${{ steps.init.outcome }}\`
            #### Terraform Validation 🤖\`${{ steps.validate.outcome }}\`
            #### Terraform Plan 📖\`${{ steps.plan.outcome }}\`

            <details><summary>Show Plan</summary>

            \`\`\`\n
            ${process.env.PLAN}
            \`\`\`

            </details>

            *Pushed by: @${{ github.actor }}, Action: \`${{ github.event_name }}\`*`;

            github.rest.issues.createComment({
              issue_number: context.issue.number,
              owner: context.repo.owner,
              repo: context.repo.repo,
              body: output
            })

      - name: Terraform Plan Status
        if: steps.plan.outcome == 'failure'
        run: exit 1

      - name: Terraform Apply
        if: github.ref == 'refs/heads/master' && github.event_name == 'push'
        run: terraform apply -auto-approve -input=false
        working-directory: terraform/blog
```

⚠️ Note that this is a simplified setup. For a production-grade configuration, run terraform plan -out FILE and save the plan file, so that it can be passed to terraform apply FILE and only the exact changes approved in the pull request are applied. More information can be found here.

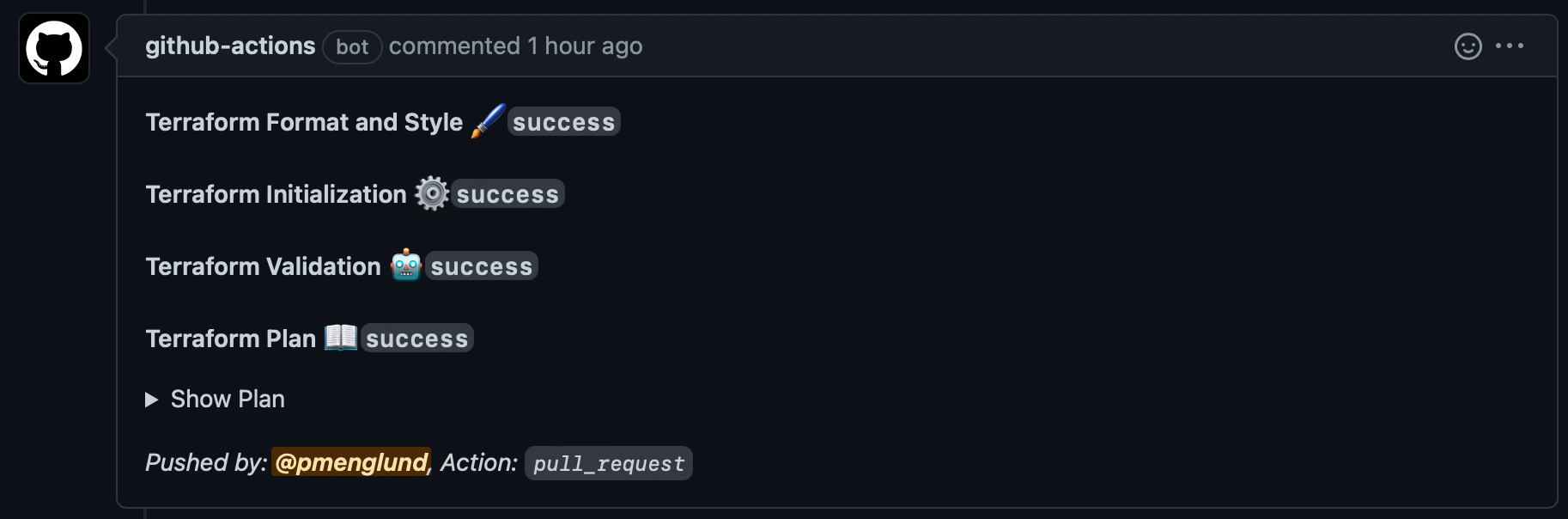

Pull Request

When you create a pull request that changes the terraform config, the workflow will run terraform plan and comment on the PR, which contains the plan output.

This lets the reviewer see that the change can be applied, and by clicking on “Show Plan” they can see exactly what changes are going to be made.

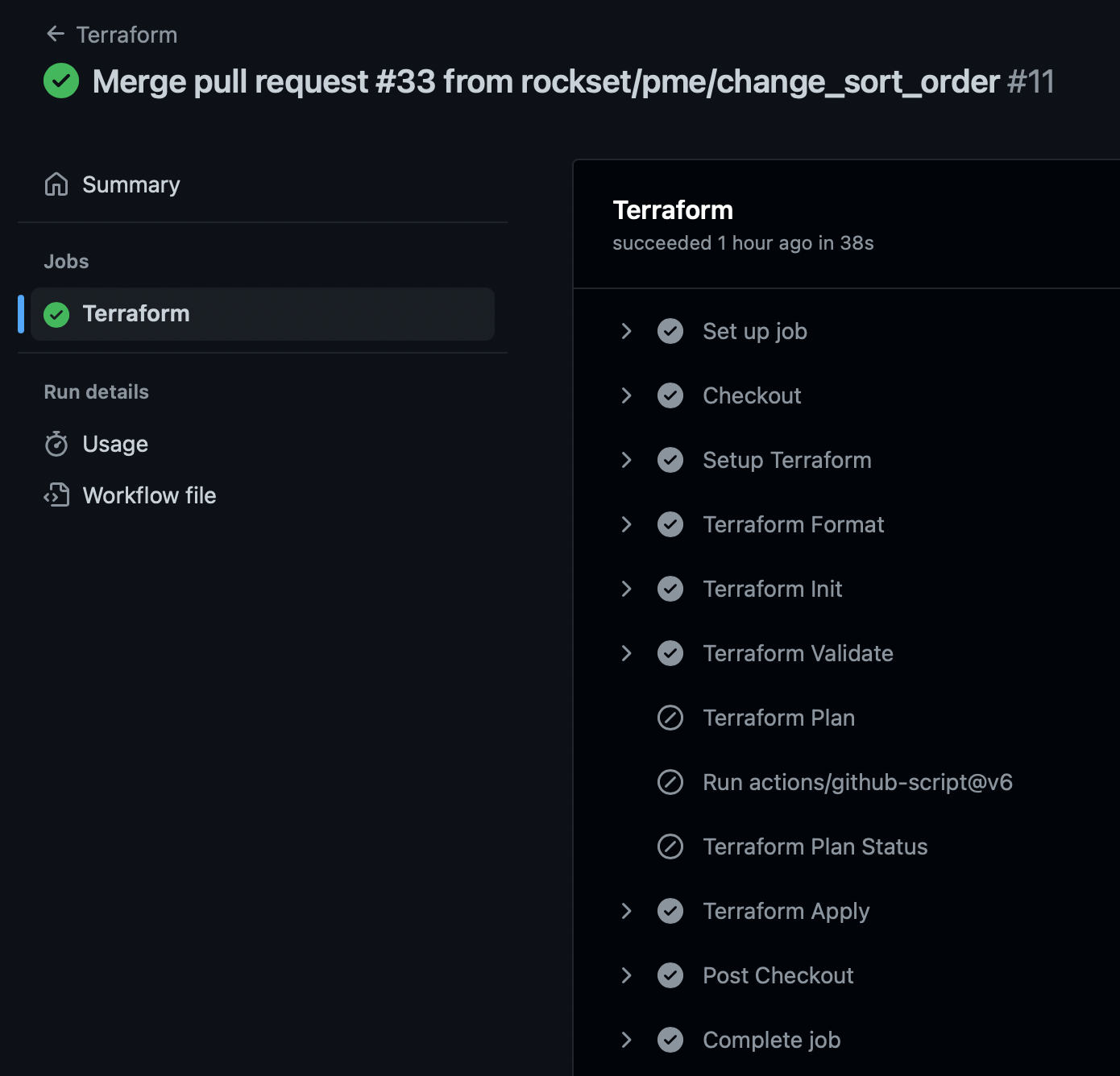

When the PR is approved and merged into the main branch, it will trigger another GitHub action workflow run which applies the change.

Final words

Now we have a fully functional Infrastructure as Code setup that will deploy changes to your Rockset configuration automatically after peer review.