Remote Compactions in RocksDB-Cloud

June 4, 2020

Introduction

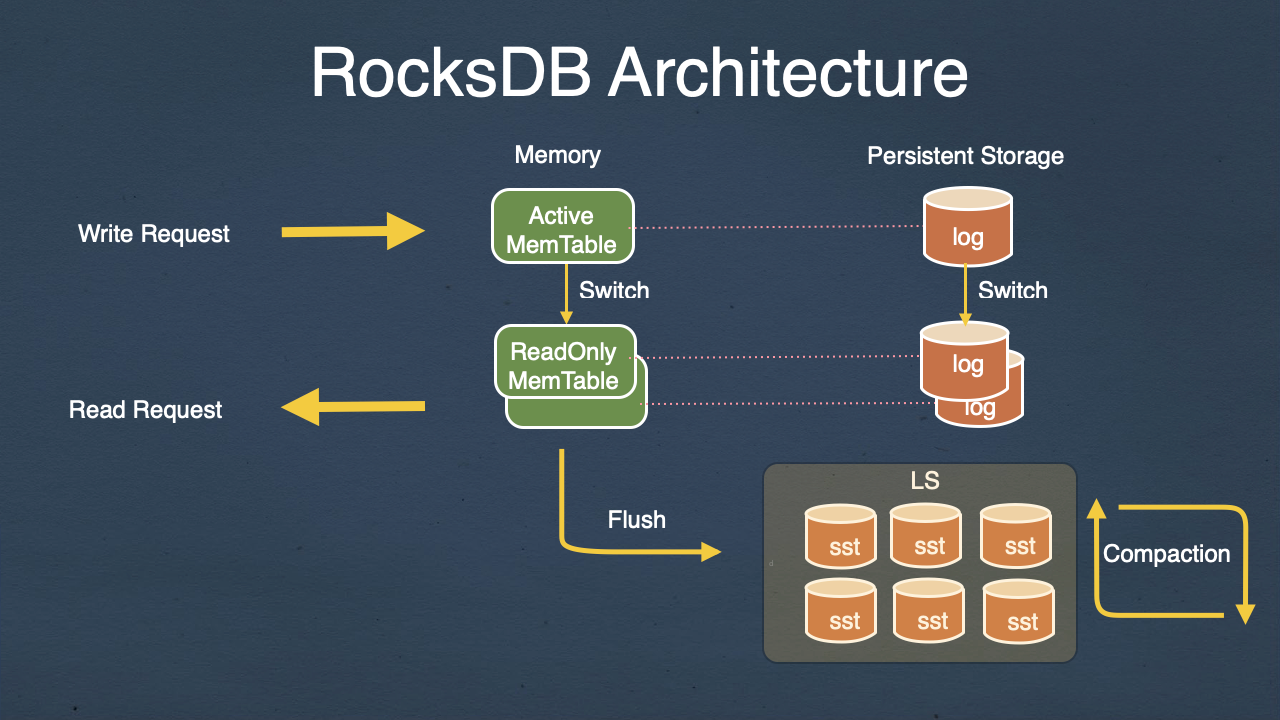

RocksDB is an LSM storage engine whose adoption has grown tremendously in the last few years. RocksDB-Cloud is open-source and fully compatible with RocksDB, with the additional feature that all data is made durable by automatically storing it in cloud storage (e.g. Amazon S3).

We, at Rockset, use RocksDB-Cloud as one of the building blocks of Rockset’s distributed Converged Index. Rockset is designed with cloud-native principles, and one of the primary design principles of a cloud-native database is the separation of compute from storage. In this post, we discuss how we extended RocksDB-Cloud to cleanly separate its storage needs from its compute needs.

RocksDB’s LSM engine

RocksDB-Cloud stores the data it serves on locally attached SSDs or spinning disks. New writes to RocksDB-Cloud go to an in-memory memtable; when the memtable is full, it is flushed to a new SST file on that storage.

Because it is an LSM storage engine, RocksDB-Cloud uses a set of background threads for compaction. Compaction is the process of combining a set of SST files and generating new SST files, with overwritten keys and deleted keys purged from the output files. Compaction needs a lot of compute resources. The higher the write rate into the database, the more compute resources are needed for compaction, because the system is stable only if compaction is able to keep up with new writes to your database.
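To make the merge step concrete, here is a minimal sketch of what a compaction does logically. It is not RocksDB code: `SstRun` and `CompactRuns` are hypothetical names, each SST file is modeled as an in-memory sorted map, and a missing value stands in for a deletion marker. The sketch also assumes the output is the bottommost level, which is what makes it safe to drop deletion markers.

```cpp
#include <map>
#include <optional>
#include <string>
#include <vector>

// Hypothetical stand-in for one SST file: a sorted run of key -> value,
// where std::nullopt marks a deleted key (a tombstone).
using SstRun = std::map<std::string, std::optional<std::string>>;

// Merge runs, given oldest first, into a single output run.
// A newer entry overwrites an older one for the same key. Tombstones are
// purged at the end, assuming the output is the bottommost level.
SstRun CompactRuns(const std::vector<SstRun>& oldest_to_newest) {
  SstRun merged;
  for (const SstRun& run : oldest_to_newest) {
    for (const auto& [key, value] : run) {
      merged[key] = value;  // the later (newer) run wins
    }
  }
  for (auto it = merged.begin(); it != merged.end();) {
    if (!it->second.has_value()) {
      it = merged.erase(it);  // drop the deleted key from the output
    } else {
      ++it;
    }
  }
  return merged;
}
```

Every input key has to be read and rewritten, so the work grows with the amount of data written, which is why a higher write rate needs more compaction compute.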

The problem when compute and storage are not disaggregated

In a typical RocksDB-based system, compaction runs on the CPUs of the same server that hosts the storage. In this case, compute and storage are not disaggregated. This means that if your write rate increases but the total size of your database remains the same, you would have to dynamically provision more servers, spread your data across all those servers, and then leverage the additional compute on these servers to keep up with the compaction load.

This has two problems:

- Spreading your data across more servers is not instantaneous because you have to copy a lot of data to do so. This means you cannot react quickly to a fast-changing workload.

- The storage capacity utilization on each of your servers becomes very low because you are spreading out your data to more servers. You lose out on the price-to-performance ratio because of all the unused storage on your servers.

Our solution

RocksDB-Cloud is well suited to separating compaction compute from storage primarily because it is an LSM storage engine. Unlike a B-Tree database, RocksDB-Cloud never updates an SST file once it is created. This means that all the SST files in the entire system are read-only; the only mutable data is the minuscule portion in your active memtable. RocksDB-Cloud persists all SST files in a cloud object store like S3, and these cloud objects are safely accessible from all your servers because they are read-only.

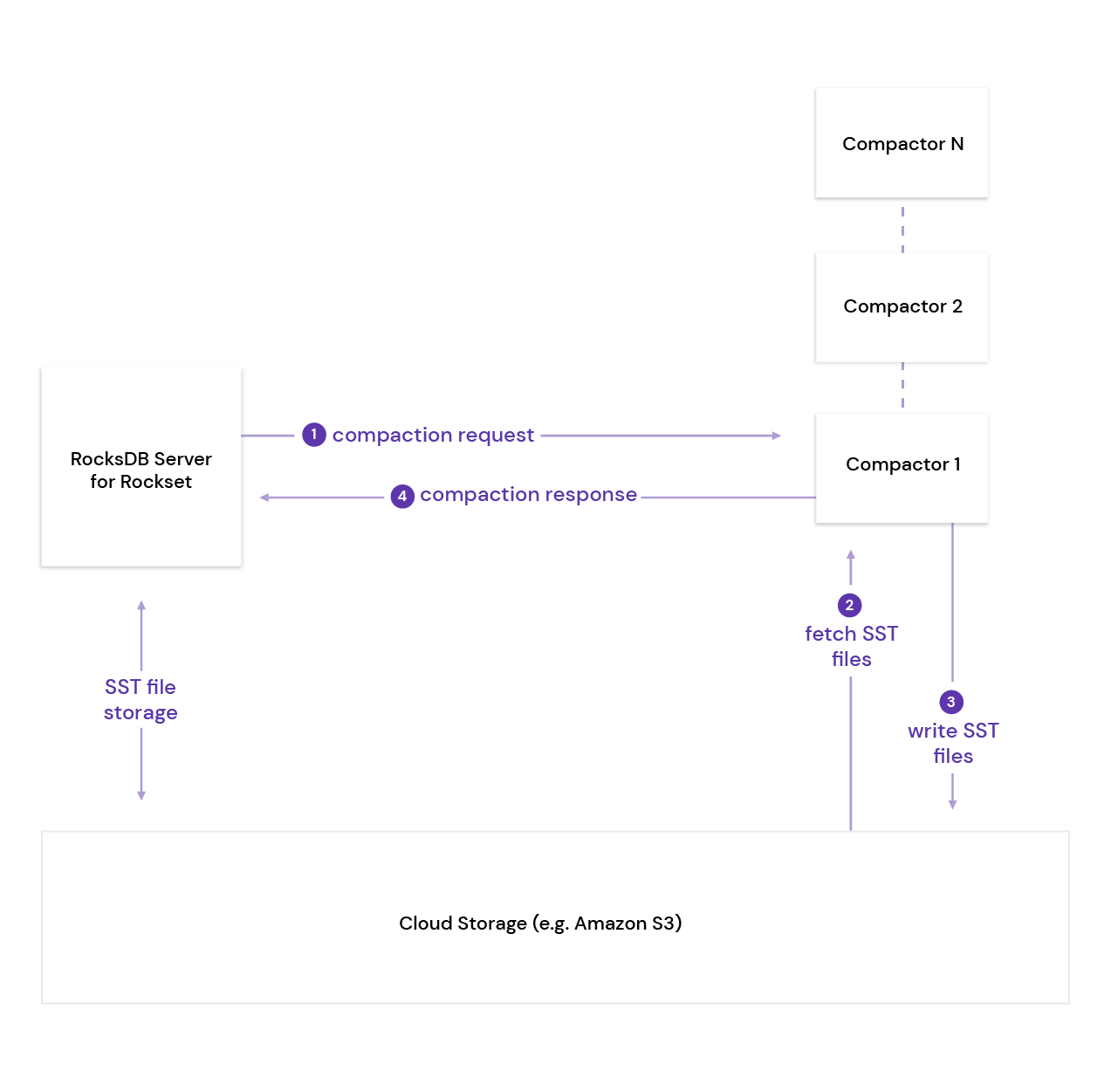

So, our idea is this: a RocksDB-Cloud server A encapsulates a compaction job with its set of cloud objects and sends the request to a remote stateless server B. Server B fetches the relevant objects from the cloud store, does the compaction, writes the output SST files back to the cloud object store, and then communicates that information back to server A. With this, we have essentially separated the storage (which resides in server A) from the compaction compute (which resides in server B). Server A has the storage, while server B has no permanent storage, only the compute needed for compaction. Voila!

RocksDB pluggable compaction API

We extended the base RocksDB API with two new methods that make the compaction engine in RocksDB externally pluggable. In db.h, we introduce a new API to register a compaction service.

Status RegisterPluggableCompactionService(std::unique_ptr<PluggableCompactionService>);

This API registers the plugin that RocksDB uses to execute compaction jobs. Remote compaction happens in two steps: Run and InstallFiles. Hence the plugin, PluggableCompactionService, has two APIs:

Status Run(const PluggableCompactionParam& job, PluggableCompactionResult* result);

std::vector<Status> InstallFiles(
    const std::vector<std::string>& remote_paths,
    const std::vector<std::string>& local_paths,
    const EnvOptions& env_options, Env* local_env);

Run is where the compaction execution happens. In our remote compaction architecture, Run would send an RPC to a remote compaction tier, and receive a compaction result which has, among other things, the list of newly compacted SST files.

InstallFiles is where RocksDB installs the newly compacted SST files from the cloud (remote_paths) to its local database (local_paths).
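The two-step flow can be sketched end to end with in-memory stand-ins. Everything in the `sketch` namespace below is hypothetical and only mirrors the shape described above: it does not use the real `PluggableCompactionService` types, the object store is a plain map instead of S3, and the RPC between server A and the compactor is replaced by a direct function call.

```cpp
#include <cstddef>
#include <map>
#include <string>
#include <vector>

namespace sketch {

// All types here are illustrative stand-ins, not RocksDB-Cloud classes.
using SstData = std::map<std::string, std::string>;  // one immutable SST file
using ObjectStore = std::map<std::string, SstData>;  // path -> cloud object
using LocalDir = std::map<std::string, SstData>;     // path -> file on server A

struct CompactionJob {
  std::vector<std::string> input_paths;  // cloud objects, oldest first
  std::string output_path;               // temporary location for the output
};

struct CompactionResult {
  std::vector<std::string> output_paths;
};

// Step 1, on the stateless compactor (server B): fetch the inputs from the
// object store, merge them, and write the output back to the object store.
bool RunCompaction(ObjectStore& store, const CompactionJob& job,
                   CompactionResult* result) {
  SstData merged;
  for (const std::string& path : job.input_paths) {
    auto it = store.find(path);
    if (it == store.end()) return false;  // an input object is missing
    for (const auto& [key, value] : it->second) merged[key] = value;
  }
  store[job.output_path] = merged;
  result->output_paths = {job.output_path};
  return true;
}

// Step 2, on server A: copy each compacted object (remote_paths) into the
// local database directory (local_paths). One status per remote path.
std::vector<bool> InstallFiles(const ObjectStore& store,
                               const std::vector<std::string>& remote_paths,
                               const std::vector<std::string>& local_paths,
                               LocalDir* local_dir) {
  std::vector<bool> statuses;
  for (std::size_t i = 0; i < remote_paths.size(); ++i) {
    auto it = store.find(remote_paths[i]);
    const bool ok = i < local_paths.size() && it != store.end();
    if (ok) (*local_dir)[local_paths[i]] = it->second;
    statuses.push_back(ok);
  }
  return statuses;
}

}  // namespace sketch
```

Note that the compactor in this sketch touches only the object store. It never needs server A's local files, which is what lets it stay stateless.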

Rockset’s compaction tier

Now we will show how we used the pluggable compaction service described above in Rockset’s compaction service. As mentioned above, the first step, Run, sends an RPC to a remote compaction tier with compaction information such as input SST file names and compression information. We call the host that executes this compaction job a compactor.

The compactor, upon receiving the compaction request, opens a RocksDB-Cloud instance in ghost mode. This means that RocksDB-Cloud opens the local database with only the necessary metadata, without fetching all SST files from cloud storage. Once the instance is open in ghost mode, the compactor executes the compaction job: it fetches the required SST files, compacts them, and uploads the newly compacted SST files to temporary storage in the cloud.

Here are the options to open RocksDB-Cloud in the compactor:

rocksdb::CloudOptions cloud_options;
cloud_options.ephemeral_resync_on_open = false;
cloud_options.constant_sst_file_size_in_sst_file_manager = 1024;
cloud_options.skip_cloud_files_in_getchildren = true;

rocksdb::Options rocksdb_options;
rocksdb_options.max_open_files = 0;
rocksdb_options.disable_auto_compactions = true;
rocksdb_options.skip_stats_update_on_db_open = true;
rocksdb_options.paranoid_checks = false;
rocksdb_options.compaction_readahead_size = 10 * 1024 * 1024;

We faced multiple challenges while developing the compaction tier. Here are two of them, along with our solutions:

Improve the speed of opening RocksDB-Cloud in ghost mode

During the opening of a RocksDB instance, in addition to fetching all the SST files from the cloud (which we have disabled with ghost mode), there are multiple other operations that can slow down the opening process, notably getting the list of SST files and getting the size of each SST file. Ordinarily, if all the SST files reside in local storage, the latency of these get-file-size operations is small. However, when the compactor opens RocksDB-Cloud, each of these operations results in a remote request to cloud storage, and the combined latency becomes prohibitively expensive. In our experience, opening a RocksDB-Cloud instance with thousands of SST files could take up to a minute because of the thousands of get-file-size requests to S3. To get around this limitation, we introduced various options in RocksDB-Cloud to disable these RPCs during opening. As a result, the average opening time went from 7 seconds to 700 milliseconds.
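The effect of skipping the per-file size lookup can be illustrated with a small sketch. It assumes, based only on the option name, that a positive `constant_sst_file_size_in_sst_file_manager` substitutes a fixed size for each file instead of asking cloud storage. `FakeCloudStorage` and `CollectSstSizes` are hypothetical names, and the 64 MB size returned by the fake is made up.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for cloud storage that counts metadata requests.
struct FakeCloudStorage {
  int get_size_requests = 0;

  std::uint64_t GetFileSize(const std::string& /*path*/) {
    ++get_size_requests;      // each call would be one round trip to S3
    return 64 * 1024 * 1024;  // made-up size, for illustration only
  }
};

// Collect a size for every SST file while opening the database.
// When constant_size is positive it is used for every file, so no remote
// request is issued; otherwise each file costs one remote request.
std::vector<std::uint64_t> CollectSstSizes(
    FakeCloudStorage& cloud, const std::vector<std::string>& sst_paths,
    std::uint64_t constant_size) {
  std::vector<std::uint64_t> sizes;
  sizes.reserve(sst_paths.size());
  for (const std::string& path : sst_paths) {
    sizes.push_back(constant_size > 0 ? constant_size
                                      : cloud.GetFileSize(path));
  }
  return sizes;
}
```

With thousands of SST files, the first mode issues thousands of sequential remote requests during open, while the second issues none.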

Disable L0 -> L0 compaction

Remote compaction is a tradeoff between the speed of a single compaction and the ability to run more compaction jobs in parallel. This is because each remote compaction job is naturally slower than the same compaction executed locally, due to the cost of data transfer in the cloud. Therefore, we would like to minimize, as much as possible, the bottlenecks in the compaction process that RocksDB-Cloud can’t parallelize.

In the LSM architecture, L0->L1 compactions are usually not parallelizable because L0 files have overlapping key ranges. Hence, while an L0->L1 compaction is occurring, RocksDB-Cloud can also execute an L0->L0 compaction, with the goal of reducing the number of L0 files and preventing write stalls caused by RocksDB-Cloud hitting the L0 file limit. However, the trade-off is that L0 files grow bigger after every L0->L0 compaction.
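The overlap constraint is easy to see in a sketch. The code below is illustrative rather than RocksDB's own logic: `KeyRange`, `Overlaps`, and `AnyOverlap` are hypothetical names, and each SST file is summarized only by its smallest and largest key.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical summary of an SST file: its smallest and largest key.
struct KeyRange {
  std::string smallest;
  std::string largest;
};

// Two closed key ranges overlap when neither one ends before the other begins.
bool Overlaps(const KeyRange& a, const KeyRange& b) {
  return a.smallest <= b.largest && b.smallest <= a.largest;
}

// Returns true if any two files in the level share part of the key space.
// Files that overlap cannot be split across independent compaction jobs.
bool AnyOverlap(const std::vector<KeyRange>& files) {
  for (std::size_t i = 0; i < files.size(); ++i) {
    for (std::size_t j = i + 1; j < files.size(); ++j) {
      if (Overlaps(files[i], files[j])) return true;
    }
  }
  return false;
}
```

Each L0 file is a flushed memtable and can span most of the key space, for example `[a, m]`, `[c, z]`, and `[b, k]`, so `AnyOverlap` is true and the files must be compacted together. Files in deeper levels cover disjoint ranges such as `[a, f]`, `[g, p]`, and `[q, z]`, which is what allows compactions there to proceed in parallel.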

In our experience, this option causes more trouble than it is worth, because larger L0 files result in a much longer L0->L1 compaction, worsening the bottleneck of RocksDB-Cloud. Hence, we disable L0->L0 compaction and live with the rare problem of write stalls instead. In our experiments, RocksDB-Cloud compaction catches up with incoming writes much better this way.

You can use it now

RocksDB-Cloud is an open-source project, so our work can be leveraged by any other RocksDB developer who wants to derive benefits by separating out their compaction compute from their storage needs. We are running the remote compaction service in production now. It is available with the 6.7.3 release of RocksDB-Cloud. We discuss all things about RocksDB-Cloud in our community.

Learn more about how Rockset uses RocksDB:

- Technical Overview of Compute-Compute Separation: A new cloud architecture for real-time analytics that leverages RocksDB

- Optimizing Bulk Load in RocksDB: An effective technique for quickly loading data into RocksDB

- How We Use RocksDB at Rockset: How we tuned RocksDB for optimal performance

Authors:

Hieu Pham – Software Engineer, Rockset

Dhruba Borthakur – CTO, Rockset