Case Study: How Rockset's Real-Time Analytics Platform Propels the Growth of Our NFT Marketplace

October 26, 2022

At Own the Moment, our mission is to drive the next generation of sports fandom – NFTs (non-fungible tokens) of pro athletes. Player NFTs are much more than the equivalent of digital baseball cards; they are the future of the sports collectibles market.

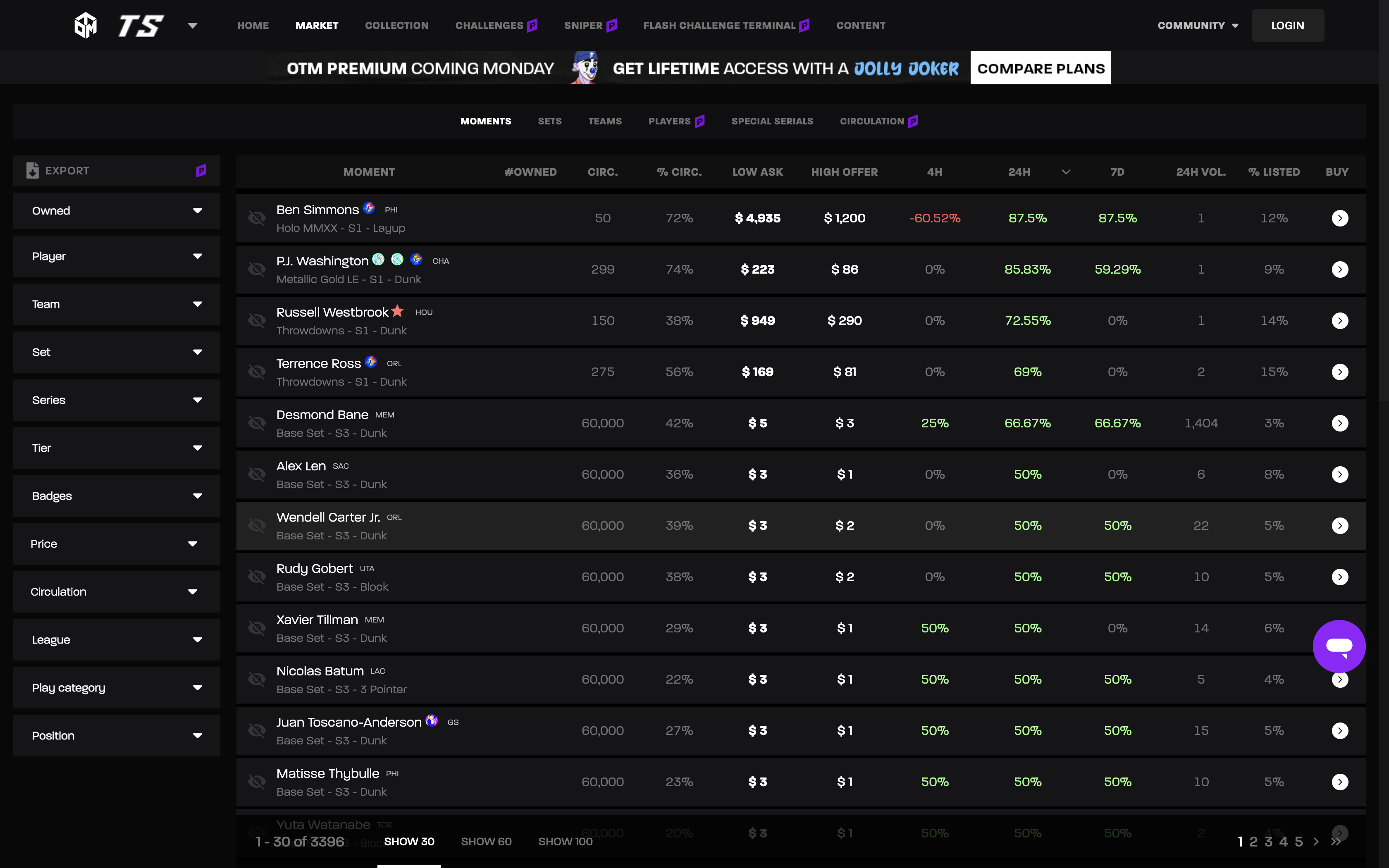

We are helping to lead the way. Fans and investors can track real-time market values for NFL and NBA player NFTs through our service. We also provide an online market for buying and selling NFTs. Think of us like Ameritrade or Coinbase, but for your sports NFT assets.

Most importantly, we have also created a fantasy sports platform called The Owners Club that debuted with a fantasy football league for the 2021-22 season. We gave out $1.5 million in prizes to competitors based on their NFL fantasy teams, composed of the player NFTs they owned.

While owning other types of NFTs has become like collecting art, we’re gamifying sports NFTs in order to create a lot more utility and excitement around them. This also creates greater opportunities for savvy traders to make money buying and selling player NFTs.

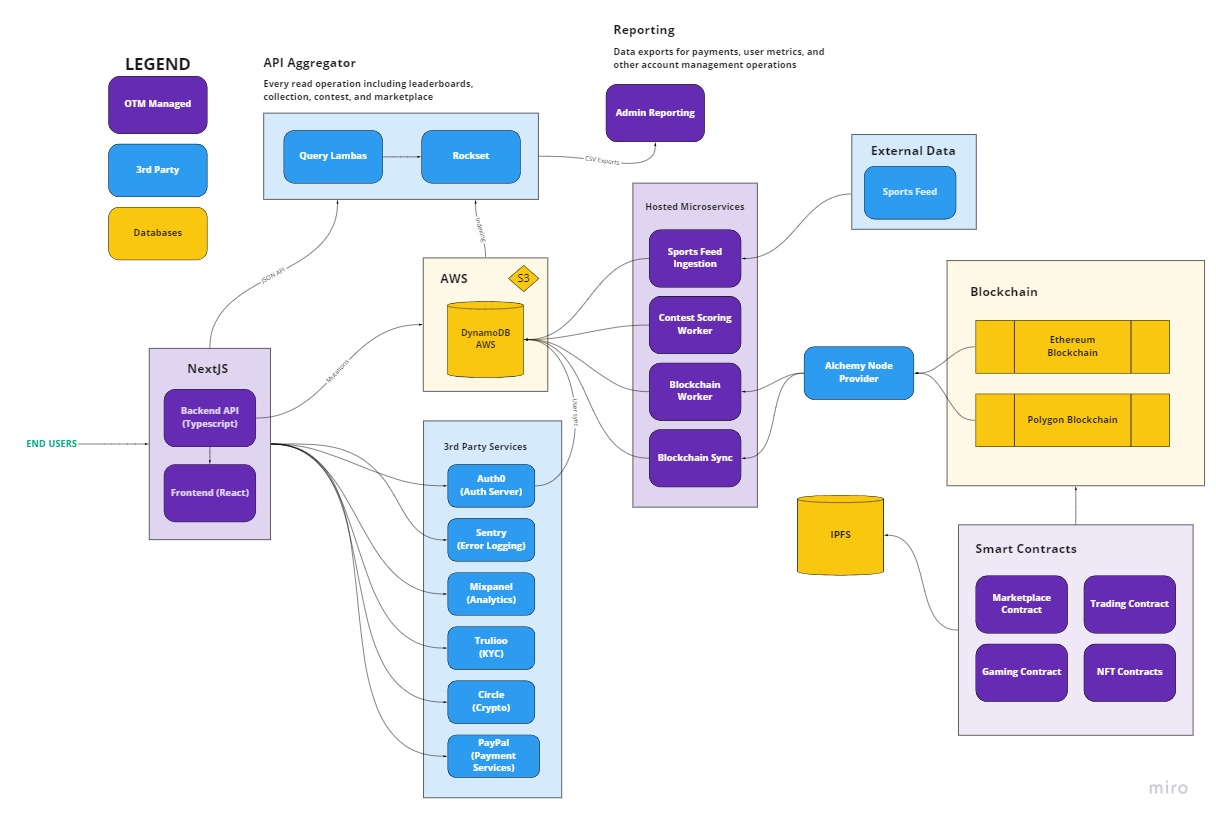

This makes my job as CTO of a small startup extremely interesting, as I had to oversee building a data infrastructure that supported:

- A fantasy sports league where both data ingestion and concurrent usage spike during game days

- A player leaderboard with real-time results

- Secure, efficient and fast data exchange with the Ethereum blockchain where player NFT data is stored

- More conventional use cases such as internal financial reporting

It’s a tall order. Considering how quickly the Web 3.0 space has been evolving, it’s no surprise that the first version of our data infrastructure didn’t support all of these demands. Fortunately, we were able to pivot quickly after we discovered a real-time analytics database tailor-made for our fast-evolving needs.

DynamoDB: Analytics Limitations Revealed

I joined Own the Moment in 2021 while we were still in stealth mode. I quickly discovered that to build our fantasy sports league and NFT marketplace, we would need two main sources of data:

- Real-time game scores and player statistics, both supplied by an external data provider

- Blockchain nodes such as Alchemy that allow us to both read and write information about NFTs and users’ crypto wallets to the blockchain

I built the first version of our data infrastructure wholly around Amazon’s DynamoDB database in the cloud. As our database of record, DynamoDB was great at ingesting external data, which we stored within a single table in DynamoDB. We also had smaller DynamoDB tables storing our user info and the mechanics of our fantasy sports contests. Besides our regular weekly top team contests and cumulative player leaderboards, we ran contests such as worst team, so that users with bad NFT cards still had a chance to win.

To run these contests, we needed to run complex, large-scale queries using the DynamoDB data tables. And because of the diversity of contests, we had a lot of different queries. That’s where DynamoDB’s analytical limitations reared their ugly heads.
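To make the variety of contest queries concrete, here is a minimal Python sketch of how a top-team and a worst-team ranking can come from the same data with only the sort order changed. The roster layout, the player names, and the rule that a team's score is the sum of its players' points are all assumptions for illustration; they are not our actual schema or scoring rules.

```python
def rank_teams(team_rosters, player_points, worst_first=False):
    """Rank fantasy teams by total points (hypothetical scoring rule).

    team_rosters: dict mapping team name -> list of player ids
    player_points: dict mapping player id -> points scored
    worst_first: when True, the lowest-scoring team ranks first
    """
    totals = {
        team: sum(player_points.get(player, 0.0) for player in roster)
        for team, roster in team_rosters.items()
    }
    # Sort by score (descending unless worst_first), then by name for stable ties.
    return sorted(
        totals.items(),
        key=lambda item: (item[1] if worst_first else -item[1], item[0]),
    )


# Illustrative data only.
rosters = {"team_a": ["p1", "p2"], "team_b": ["p2", "p3"], "team_c": ["p3"]}
points = {"p1": 12.5, "p2": 20.0, "p3": 3.0}

top_teams = rank_teams(rosters, points)
worst_teams = rank_teams(rosters, points, worst_first=True)
```

Every additional contest type is another variation on this pattern, which is why the number of distinct queries grew so quickly.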

For instance, to make any DynamoDB query run reasonably fast, we first needed to create a secondary index with a sort key tailored for that query. Also, DynamoDB, as a NoSQL database, doesn’t support SQL operations such as JOINs across multiple tables. Instead, we had to denormalize our main DynamoDB table by importing all of the user information that was stored in separate DynamoDB tables. This had major downsides, such as difficulty keeping data accurately updated during game days, as well as the need for extra storage for so much redundant data in our main table. It’s all deeply technical work that requires a developer skilled in DynamoDB analytics. And they are a rare and expensive bunch.
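The cost of denormalizing can be shown with a small in-memory Python sketch. It does not call DynamoDB at all; the record shapes and field names (`owner_id`, `display_name`, `owner_name`) are hypothetical. It only illustrates why one change to a user record fans out into many writes once user data has been copied onto every row.

```python
# Hypothetical records; field names are illustrative, not a real schema.
users = {"u1": {"display_name": "alice"}}
nft_rows = [
    {"nft_id": "n1", "owner_id": "u1"},
    {"nft_id": "n2", "owner_id": "u1"},
]


def denormalize(rows, user_table):
    """Copy user attributes onto every row, as a join-free store requires."""
    wide = []
    for row in rows:
        merged = dict(row)
        merged["owner_name"] = user_table[row["owner_id"]]["display_name"]
        wide.append(merged)
    return wide


def rename_user(rows, user_table, user_id, new_name):
    """Update the source record and every redundant copy.

    Returns the number of denormalized rows that had to be rewritten.
    """
    user_table[user_id]["display_name"] = new_name
    touched = 0
    for row in rows:
        if row["owner_id"] == user_id:
            row["owner_name"] = new_name
            touched += 1
    return touched


wide_rows = denormalize(nft_rows, users)
rows_rewritten = rename_user(wide_rows, users, "u1", "alice_2")
```

With a JOIN at query time, the same rename would be a single write to the user record; here it touches every row the user owns, and any copy that is missed goes stale.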

Thrown into the mix was the object-relational mapping (ORM) tool we had deployed called Dynamoose. Dynamoose provides useful features including a programmatic API and a schema for the schemaless DynamoDB. However, the tradeoff for that additional data modeling is a lot of additional latency for our queries. In our case, that resulted in a query latency of three seconds.

All in all, trying to make DynamoDB support fast analytics was a nightmare that would not end. And with the NFL season set to start in less than a month, we were in a bind.

A Faster, Friendlier Solution

We considered a few alternatives. One was to create another data pipeline that would aggregate data as it was ingested into DynamoDB. This would have meant creating a new table and spending a few extra weeks of dev time. Another was to scrap DynamoDB and find a traditional SQL database. Both would have required a lot of work.

After finding Rockset through an AWS blog on creating leaderboards, we wasted no time in starting to build a new customer-facing leaderboard based on Rockset. One of the first things my team and I noticed was how easy Rockset was to use. I have worked with almost every database out there in the past twelve years. Rockset’s UI is honestly the best I’ve worked with.

The SQL query editor is top-notch, tracking query history, saving queries and more. It made my six developers, who all know SQL, immediately productive. Just based on skimming the SELECTs and JOINs in a few sample Query Lambdas, they understood what kind of data they had and how to work with it. By the end of the day, they had literally built functioning SQL queries and APIs without any outside help. And with Rockset’s Converged Index™ and automatic query optimizer, all queries are fast and failure-proof. We don’t have to build a custom index for every query as we do with DynamoDB.

By using Rockset, we saved weeks of developer time that we would otherwise have spent trying to overcome and compensate for DynamoDB’s analytical limitations. We were able to roll out a whole new player leaderboard in just three weeks.

Developer productivity is great, but what about query performance? That’s where Rockset really shined. Once we moved all of the queries feeding our leaderboards to Rockset – 100 Query Lambdas in total – we started being able to query our data in 100 milliseconds or less. That’s at least a 30x speed increase over DynamoDB.

Rockset’s serverless model also made scalability really easy. This was important for optimizing both performance and price, since our usage is so dynamic. During the first season, our peak concurrent usage during game times – Monday and Thursday nights, and all day Sundays – was 20x higher than during off-peak times. I would simply flip a switch and bump up the size of our Rockset instance during game days and not worry about any bottlenecks or timeouts.

We gained so much confidence in Rockset’s speed, scalability, and ease of use that we quickly moved the rest of our analytical operations to Rockset. That includes ten data collections in all, the largest of which holds 15 million records, that store key data, including:

- 65,000 NFT transactions worth $1 million in our first season

- the 23,000 current users in our system along with records of the 160,000 NFTs they own

- our largest data collection – 400,000 records ingested from blockchains for NFT transactions related to our smart contracts

DynamoDB remains our database of record, connecting to microservices syncing with the blockchain and streaming data feeds. But literally every data retrieval and analytical calculation now goes through Rockset, from loading the player NFT marketplace and viewing all of the pricing statistics and transactions, to the user cards. Rockset syncs with DynamoDB constantly, pulling new game scores every 5-10 seconds and syncing with the blockchain where NFT and user wallet data is stored, and writing all of that into an indexed collection.

We also do all of our internal administrative reporting through Rockset. Rockset JOINs marketplace, user, and payments information from separate DynamoDB tables to produce aggregate reports that we export as CSV files. We were able to produce those reports in mere minutes using the Collections tab in Rockset.
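As a rough illustration of the kind of report described above, the following Python sketch joins three small tables and writes the aggregate as CSV. It uses the standard library's `sqlite3` purely as a stand-in SQL engine so the example runs anywhere; it is not Rockset's API, and the table names, columns, and rows are made up for the example.

```python
import csv
import io
import sqlite3

# In-memory stand-in for three separate source tables (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (user_id TEXT PRIMARY KEY, name TEXT);
    CREATE TABLE sales (sale_id TEXT, buyer_id TEXT, nft_id TEXT);
    CREATE TABLE payments (sale_id TEXT, amount_usd REAL);
    INSERT INTO users VALUES ('u1', 'alice'), ('u2', 'bob');
    INSERT INTO sales VALUES
        ('s1', 'u1', 'n1'), ('s2', 'u1', 'n2'), ('s3', 'u2', 'n3');
    INSERT INTO payments VALUES ('s1', 10.0), ('s2', 15.5), ('s3', 7.25);
""")

# One JOIN + GROUP BY replaces the scripts and manual record matching.
REPORT_SQL = """
    SELECT u.name, COUNT(*) AS purchases, SUM(p.amount_usd) AS total_usd
    FROM sales s
    JOIN users u ON u.user_id = s.buyer_id
    JOIN payments p ON p.sale_id = s.sale_id
    GROUP BY u.name
    ORDER BY total_usd DESC
"""


def report_csv(connection):
    """Run the report query and return the result as CSV text."""
    cursor = connection.execute(REPORT_SQL)
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow([column[0] for column in cursor.description])
    writer.writerows(cursor.fetchall())
    return buffer.getvalue()


report = report_csv(conn)
```

The point of the sketch is the shape of the query: once the tables are queryable with SQL, a cross-table aggregate is a few lines rather than a custom script.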

Building this in DynamoDB, by contrast, would have required scripts and manual joining of records, both of which are quite error-prone. We probably saved days if not weeks of time using Rockset. It also enabled us to run giveaways and contests for users who had complete set collections of NFTs in our system or spent X dollars in the marketplace. Without Rockset, aggregating our ever-expanding collection of DynamoDB tables would have required too much work.
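The eligibility rule for such giveaways can be sketched in a few lines of Python. This is an assumption-laden illustration, not our production logic: the input shapes, the `full_set` definition, and the spending threshold are all hypothetical.

```python
from collections import defaultdict


def eligible_users(ownership, purchases, full_set, min_spend_usd):
    """Return users who own every NFT in full_set or spent at least min_spend_usd.

    ownership: iterable of (user_id, nft_id) pairs
    purchases: iterable of (user_id, amount_usd) pairs
    full_set: set of nft_ids that make up a complete set
    """
    owned = defaultdict(set)
    for user_id, nft_id in ownership:
        owned[user_id].add(nft_id)

    spent = defaultdict(float)
    for user_id, amount_usd in purchases:
        spent[user_id] += amount_usd

    winners = []
    for user_id in set(owned) | set(spent):
        has_full_set = full_set <= owned.get(user_id, set())
        met_spend = spent.get(user_id, 0.0) >= min_spend_usd
        if has_full_set or met_spend:
            winners.append(user_id)
    return sorted(winners)


# Illustrative data only.
ownership = [("u1", "a"), ("u1", "b"), ("u2", "a")]
purchases = [("u2", 120.0), ("u3", 20.0)]
winners = eligible_users(ownership, purchases, full_set={"a", "b"}, min_spend_usd=100.0)
```

In this example `u1` qualifies by owning the full set and `u2` by spending, while `u3` qualifies on neither rule.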

Future Plans

Last season we gave out $1.5 million in prizes. That was real money that was on the line! Still, it was essentially a proof of concept for our Rockset-based analytics platform, which performed flawlessly. We’ve cut the number of query errors and timed-out queries to zero. Every query runs fast out of the box. Our average query latency has shrunk from six seconds to 300 milliseconds. And that’s true for small datasets and larger ones.

Moreover, Rockset makes my developers super productive, with its easy-to-use UI, Write API, and SQL support. And features like Converged Index and query optimization eliminate the need to spend valuable engineering time on query performance.

For the coming NFL season, we are talking to a number of potential big name partners in the sports media and fantasy business. They’re coming to us because we’re the only platform I know of today that integrates the blockchain on top of a utility-based NFT solution.

We are also working on a lot of backend changes, such as building new APIs in Rockset and new integrations, and we are preparing for 10x growth on every dimension – user base, player NFTs, data records and more. What won’t change is Rockset. It’s proven to us that it can handle all of our needs: ultra-fast, scalable and complex analytics that are easy to develop and cost-effective to manage.