SQL Query Planning for Operational Analytics

July 18, 2019

Rockset is a schemaless SQL data platform. It is designed to support SQL on raw data. While most SQL databases are strongly and statically typed, data within Rockset is strongly but dynamically typed. Dynamic typing makes it difficult for us to adopt off-the-shelf SQL query optimizers since they are designed for statically typed data where the types of the columns are known ahead of time. Most of Rockset's operational analytics use cases execute hundreds of concurrent queries, and each query needs to complete within a few milliseconds. Given our unique challenges and performance requirements, building our own SQL query engine from scratch seemed like the right choice.

This blog post gives you a sneak peek at what happens under the hood of our SQL query engine when you issue a SQL query to Rockset.

Broadly speaking, a SQL query goes through 3 main stages as shown in Figure 1:

- Planning

- Optimization

- Execution

In the planning stage, a set of steps that need to be executed to complete the query is produced. This set of steps is called a query plan. A query plan is further categorized into the following types:

- Logical Query Plan: It is an algebraic representation of the query.

- Physical Query Plan: It consists of operators that execute parts of the query. For example, the logical query plan may contain a "Collection" node that indicates that data must be retrieved from a specific collection, whereas the physical plan contains a "ColumnScan" or "IndexFilter" operator that actually retrieves the data using a specific access method from the index.

Multiple query plans can be produced for the same query from which the query optimizer then chooses the most efficient query plan for execution. The final query plan selected for execution is called the execution plan.

In order to motivate our design choices for the query planner we first need to understand the query optimization stage. Specifically, we need to understand how an optimizer chooses an execution plan. In the next section, we look at the 2 main categories of query optimization techniques.

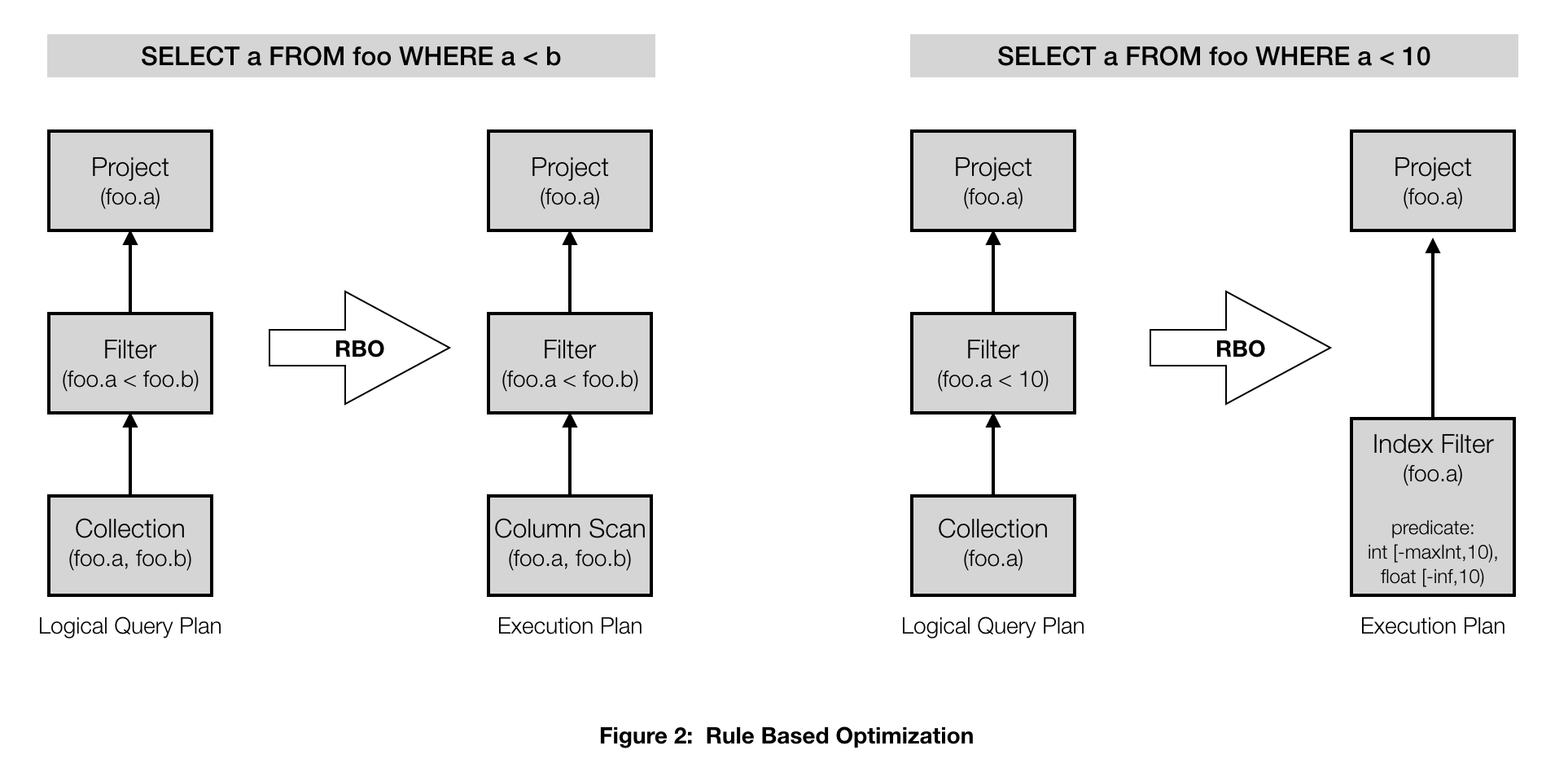

Rule Based Optimization vs. Cost Based Optimization

A query optimizer is entrusted with the job of picking the most efficient execution plan for a particular query. The Rule Based Optimizer (RBO) uses a set of predetermined rules based on a heuristic to deduce the most efficient execution plan. For example, you could have a rule that chooses a different access method to fetch the data from the index based on the nature of the filter clause in the query. We index all fields, so predicates that compare a field value with a constant (such as "a < 10") can be pushed into the index. But predicates that compare a field with another field (such as "a < b") cannot be pushed into the index. You could choose the access method that scans the inverted index for only those documents that satisfy the predicate (IndexFilter) for queries that have predicates that can be pushed down into the index, as opposed to a full columnar scan followed by a filter in the case where the predicates cannot be pushed down. This is illustrated in Figure 2.

Or you may have a rule that chooses a different join strategy depending on whether the join is an equijoin or not. An RBO does not always produce the most efficient execution plan, but in most situations it is good enough.

On the other hand, a Cost Based Optimizer (CBO) starts with all possible query plans in its search space. It evaluates them by assigning a score to every plan. This score is a function of the compute, memory, and time required to execute that plan. The final cost of the plan is memoized by breaking the query plan into simpler sub-plans and scoring each of them as you go along. The cost model can be designed based on the requirements of the system. It also uses other information about the data such as row selectivity and distribution of values to deduce the most efficient execution plan more accurately. Given that the search space of plan alternatives can grow exponentially, a good CBO needs to balance exploration (which grows the search space) with exploitation (scoring the already-explored plans and pruning the ones that will not be optimal).

The first query optimizer for Rockset was rule based. While it worked well for simpler queries with fewer knobs to turn, for more complex queries it soon evolved into a rather gnarly mesh of specialized rules offering very little flexibility to capture other subtleties. Special care had to be taken to ensure that these rules did not step on each other. Further, it was almost impossible to exhaustively cover all the optimizations, often resulting in clunky tweaks to existing rules after a useful heuristic was discovered as an afterthought. Our rule based optimizer soon evolved into a massive house of cards with rules precariously balanced together.

Given that the primary use case for Rockset is operational analytics queries with low latency and high concurrency requirements, there was an increasing emphasis on query performance. The RBO offered a rather brittle approach towards query optimization and we soon realized that we needed something that was extensible, stable, and reliable. After surveying some research literature, we came across Orca, which is a state-of-the-art cost based query optimizer specifically designed for heavy operational workloads. We decided to move towards a cost based optimizer that would help us better meet our requirements. In the process, we decided to rewrite our query planner to support cost based optimization. Our query planning architecture is heavily inspired by Orca[1] as well as CockroachLabs[2].

Now that we understand at a high level how a query optimizer operates, let us move onto how queries are planned in Rockset.

Query Planning

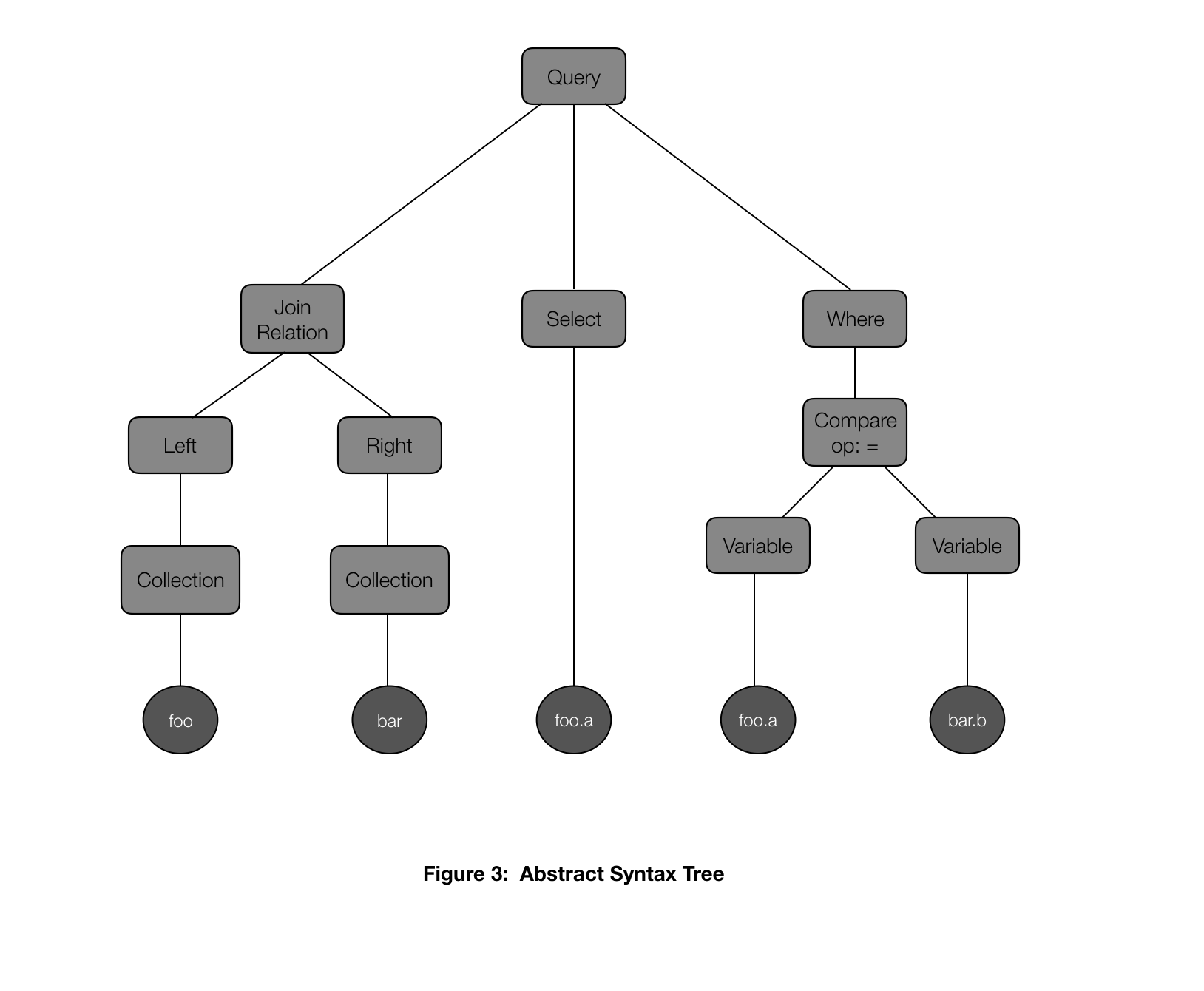

The first step before the planning phase is query parsing. The parser checks the SQL query string for syntactic correctness and then converts it to an abstract syntax tree (AST). This AST is the input to the query planner.

Let us use the following example query as we walk through the different steps of query planning.

SELECT foo.a FROM foo, bar

WHERE foo.a = bar.b

The AST for this query is shown in Figure 3.

The query planner has the following key components:

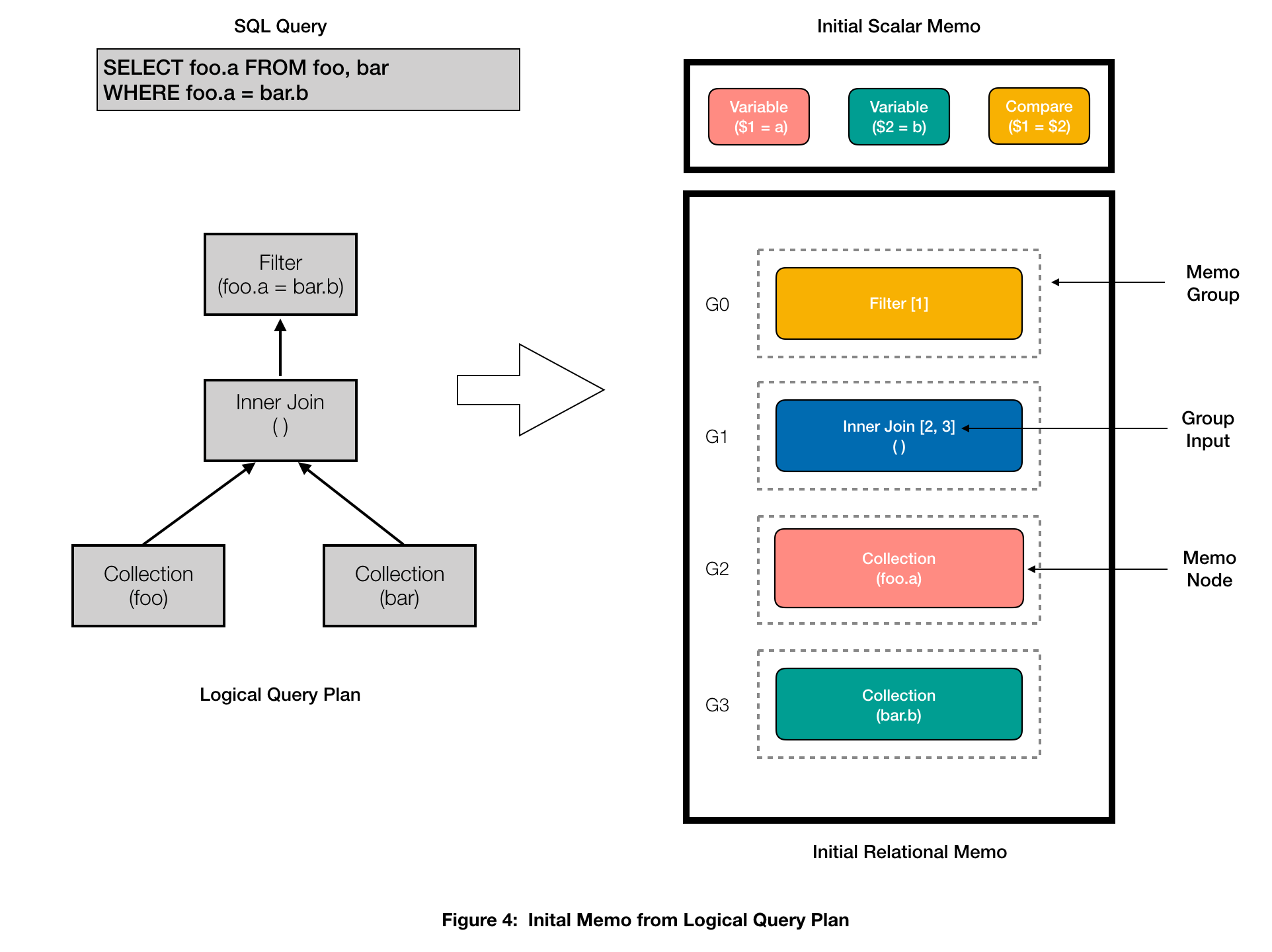

Memo

A Memo is a recursive in-memory data structure used to efficiently store the forest of query plan alternatives generated during query planning.

It consists of the following components:

Memo Group: A Memo consists of a set of containers called groups. Each group contains logically equivalent expressions that each achieve the same group goal in different logical ways.

Memo Node: Each group expression in a memo group is called a memo node. Each memo node is an operator that has other memo groups as children. The memo nodes are subdivided into 2 types:

- Relational (e.g. Collection, Join Relation)

- Scalar (e.g. Expressions)

We have 2 different Memo structures to hold the relational and scalar memo nodes separately. A Relational Memo structure is used to store the relational memo nodes while a Scalar Memo structure stores the scalar memo nodes. Each memo node has a fingerprint that uniquely identifies it. Both the relational and scalar Memos store a unique set of the relational and scalar memo nodes, respectively. The scalar memo does not have groups since the most simplified version of a scalar memo node is stored in the scalar memo.

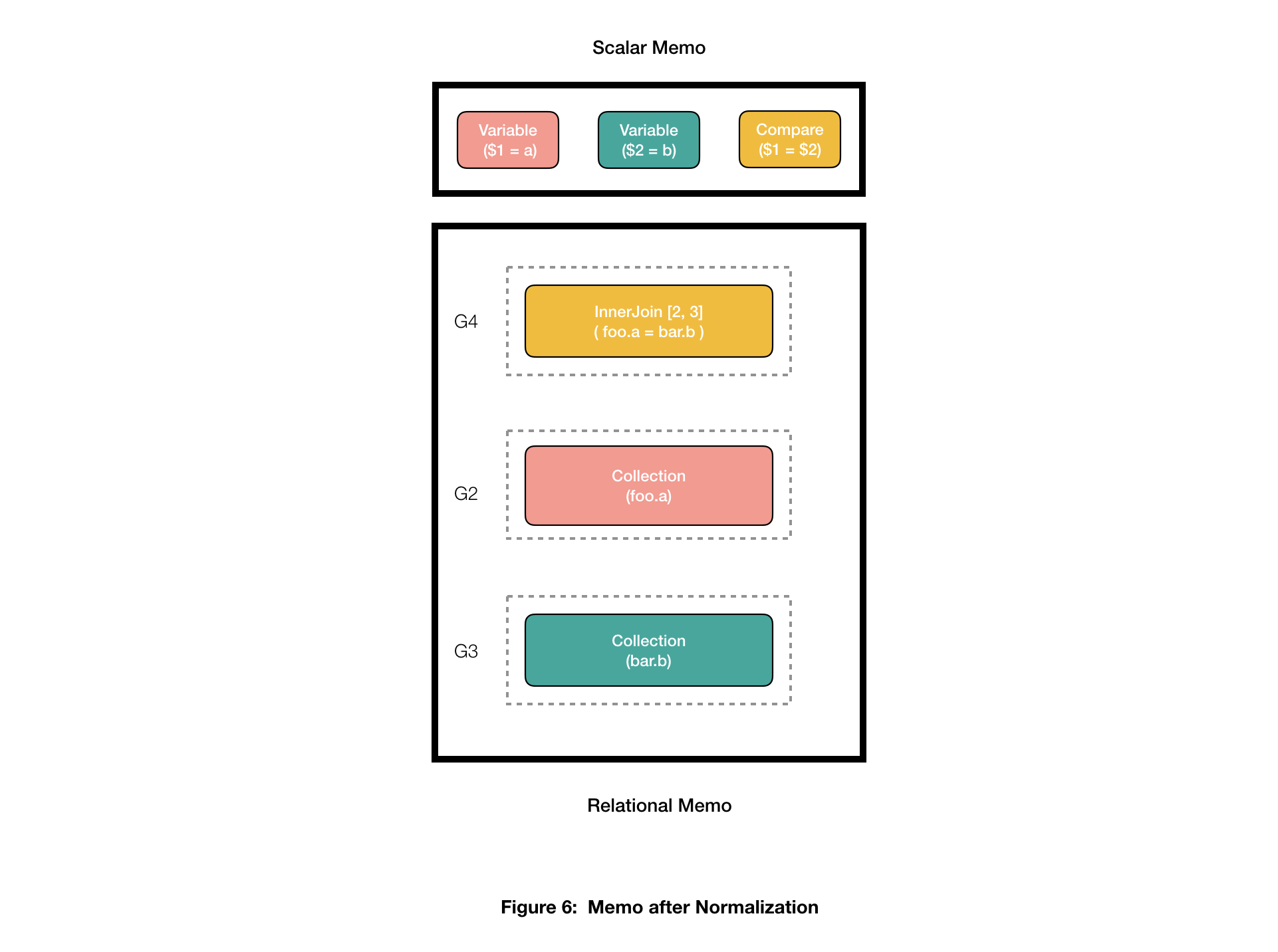

Figure 4 shows the initial contents of the Relational and Scalar Memos for our example query. The logical query plan translates to 4 memo groups, 2 for each Collection, 1 for the InnerJoin with empty predicates, and 1 for the Filter. Group 0 (G0) is also called the root memo group since it corresponds to the root of the logical query plan.

Normalization:

During this step, plan alternatives are generated by applying a set of normalization rules to the plan nodes. Normalization is used mainly to simplify expressions, transform equivalent expressions to a canonical form, and apply optimizations that are believed to always be beneficial in order to save the CBO some work. These rules specify a series of transformations to be applied to a plan node when a particular match condition is satisfied. It is expected that these normalization rules do not lead to cyclic dependencies. The resulting memo nodes are stored in the Memo, which may result in creating new memo groups and/or adding new memo nodes to existing groups. Memo nodes resulting from the normalization of scalars (e.g., constant folding) are considered final. We ignore the cost of computing scalar expressions; we assume that equivalent scalar expressions (such as a + 2 and 2 + a) have the same cost (zero). It is only the relational memo nodes that are explored.

We have implemented our own rule specification language (RSL) to express these normalization rules. We convert these RSL rules to C++ code snippets using our own RSL compiler.

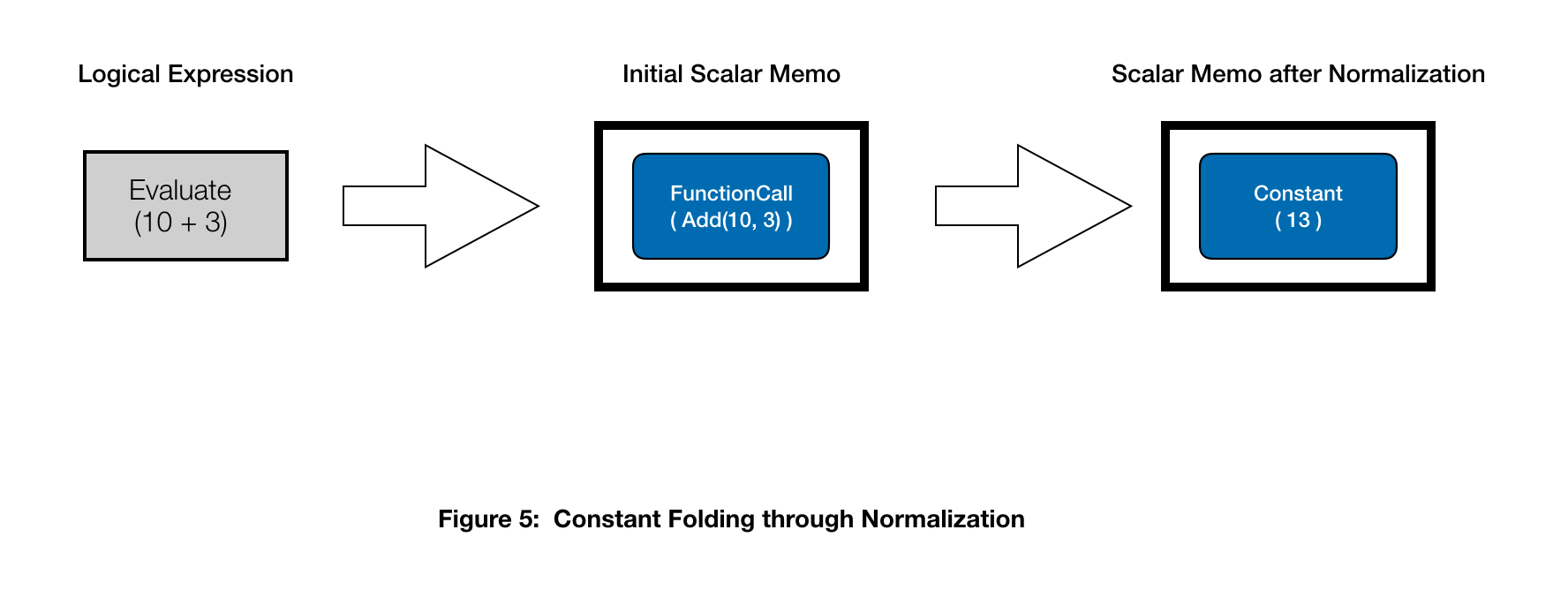

For instance, we can express constant folding in RSL as follows.

[Normalize, Name="evaluateConstantCall"]

FunctionCall(

func: *,

args: * if (allConstant($args))

)

=>

Constant(value: evalFunction($func, $args))

This rule implies that if you encounter a FunctionCall scalar memo node that has all constants for its arguments, replace it with a Constant scalar memo node with its value equal to that of the evaluated function.

This is illustrated in Figure 5.

Going back to our example query, we can specify a normalization rule that produces an alternative plan by pushing down the predicate foo.a = bar.b into the Inner Join operation, as opposed to applying it as a post join predicate.

[Normalize, Name="pushAfterJoinPredicatesIntoInnerJoin"]

Filter(

input: $j=Join(type: kInner, predicates: $join_pred=*),

predicates: $pred=*)

=>

replace($j, predicates: intersectPredicates($join_pred, $pred))

With this normalization,

SELECT foo.a FROM foo, bar

WHERE foo.a = bar.b

effectively converts to

SELECT foo.a FROM foo INNER JOIN bar

ON foo.a = bar.b

Figure 6 shows what the new Memo would look like after normalization. It only shows the memo groups that will be walked during exploration.

Exploration

Exploration happens as part of the query optimization stage. During this phase, the various plan alternatives are costed by scoring dependent memo groups recursively, starting at a Memo’s root group. It is during this phase that the most efficient join strategy, join ordering, and access path would be picked to execute our example query. This is still work in progress and continues to be an active area of development for our team. We will talk about it at length in a future blog post.

Execution

The execution plan obtained as a result of exploration is forwarded to the execution engine, which distributes the tasks across machines to enable distributed query execution. The final results are then relayed back to the end user. We will cover the details about query execution in one of our future blog posts.

A lot of this continues to be actively developed, literally as I write this blog. If working on such exciting problems is your thing, we are hiring!

References:

[1] Soliman, Mohamed A., et al. "Orca: a modular query optimizer architecture for big data." Proceedings of the 2014 ACM SIGMOD international conference on Management of data. ACM, 2014.

[2] CockroachDB: https://github.com/cockroachdb/cockroach/blob/release-19.1/pkg/sql/opt/doc.go