The Rise of Streaming Data and the Modern Real-Time Data Stack

December 9, 2021

Not Just Modern, But Real Time

The modern data stack emerged a decade ago, a direct response to the shortcomings of big data. Companies that undertook big data projects ran headlong into the high cost, rigidity and complexity of managing on-premises data stacks. Lifting-and-shifting their big data environment into the cloud only made things more complex.

The modern data stack introduced a set of cloud-native data solutions such as Fivetran for data ingestion, Snowflake, Redshift or BigQuery for data warehousing, and Looker or Mode for data visualization. It meant simplicity, scalability, and lower operational costs. Companies that embraced the modern data stack reaped the rewards, namely the ability to make even smarter decisions with even larger datasets.

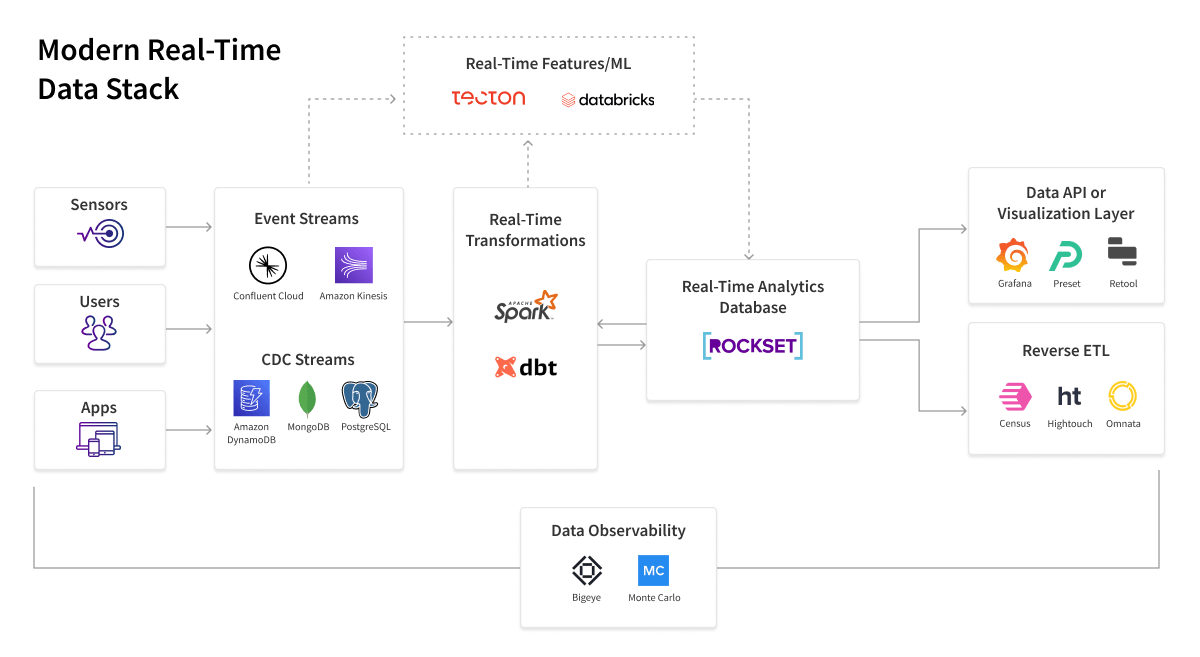

Now more than ten years old, the modern data stack is ripe for innovation. The inevitable next stage? Real-time insights delivered straight to users, i.e. the modern real-time data stack. In this article, we discuss the layers of this stack, which demand both cloud-native and SQL capabilities, and identify the best-of-breed cloud data products in each layer:

- Event and CDC Streams for ingestion: Confluent Cloud, Amazon Kinesis, Striim

- Real-time ETL (or ELT) for real-time transformations: dbt, AWS Glue, Striim

- Real-Time Analytics Database for fast analytics on fresh data: Rockset

- Data API or Visualization layer: Retool, Grafana, Preset

- Reverse ETL for pushing insights to business apps: Census, Hightouch, Omnata

- Data Observability for ensuring data quality at scale: Bigeye, Monte Carlo

But Why Now?

Remember when you shopped online and it took a week for your packages to arrive -- and you were fine with it? I now expect to get my shipment confirmations within minutes of my credit card being charged, and be able to track my two-day delivery as soon as it leaves the warehouse. I expect the same with my Grubhub dinner delivery and my Uber pickup.

Real-time action isn’t just addictive, it’s becoming our baseline expectation in our consumer and business lives. Take my friendly neighborhood coffee store -- you know the one. Long gone are the days when employees would use old-school ERP systems to reorder supplies. No, these days all of the coffee beans, cups, and pastries are tracked and reordered constantly through a fully automated system that harvests sales from the cash registers as soon as they are rung up. In its cover story, The Real-Time Revolution (October 23rd, 2021 edition), The Economist argues:

“The world is on the brink of a real-time revolution in economics, as the quality and timeliness of information are transformed. Big firms from Amazon to Netflix already use instant data to monitor grocery deliveries and how many people are glued to “Squid Game”. The pandemic has led governments and central banks to experiment, from monitoring restaurant bookings to tracking card payments. The results are still rudimentary, but as digital devices, sensors and fast payments become ubiquitous, the ability to observe the economy accurately and speedily will improve. That holds open the promise of better public-sector decision-making—as well as the temptation for governments to meddle.”

Here are some other ways that real-time data is infiltrating our lives:

- Logistics. As soon as you drop off a package for shipping, a sensor in the smart dropbox feeds the data to the shipping company, which detects which driver is closest and re-routes them for immediate pickup. Every day, millions of job tickets are created and tracked in real time across air, freight rail, maritime transport, and truck transport.

- Fitness leaderboards. 10,000 steps a day is a fine goal, but most of us need more motivation. The fitness company Rumble understands that. Its app gives users coins for steps. Rumble also updates leaderboards in real time for a little friendly competition.

- Fraud detection. Time is of the essence in cybercrime. To minimize risk, real-time data such as credit card transactions and login patterns must be constantly analyzed to detect anomalies and take swift action.

- Customer personalization. Online shoppers like relevant product recommendations, but they love being offered discounts and bundles tailored to them. To deliver this, e-tailers are mining customers’ past purchases, product views, and a plethora of real-time signals to create targeted offers that customers are more likely to accept.

All of these use cases require not just real-time data, but an entire set of tools to ingest, prepare, analyze and output it instantly. Enter the modern real-time data stack, a new wave of cloud solutions created specifically to support real-time analytics with high concurrency, performance and reliability -- all without breaking the bank.

I’ve briefly explained how we’ve arrived at this moment for the modern real-time data stack, as well as some of the use cases that make real-time data so powerful. In this article, I’ll also outline:

- What are the five unique technical characteristics of real-time data;

- What are the five technology requirements of the modern real-time data stack;

- And what are the key solutions you need to deploy in your modern real-time data stack.

Disclaimer: Rockset is a real-time analytics database and one of the pieces in the modern real-time data stack.

So What is Real-Time Data (And Why Can’t the Modern Data Stack Handle It)?

Every layer in the modern data stack was built for a batch-based world. The data ingestion, transformation, cloud data warehouse, and BI tools were all designed for a world of weekly or monthly reports, which no longer work in the real-time world. Here are five characteristics of real-time data that the batch-oriented modern data stack has fundamental problems coping with.

- Massive, often bursty data streams. With clickstream or sensor data the volume can be incredibly high -- many terabytes of data per day -- as well as incredibly unpredictable, scaling up and down rapidly.

- Change data capture (CDC) streams. It is now possible to continuously capture changes as they happen in an operational database such as MongoDB or Amazon DynamoDB. The problem? Many data warehouses, including some of the best-known cloud ones, are immutable, meaning that data can’t easily be updated or rewritten. That makes it very difficult for the data warehouse to stay synced in real time with the operational database.

- Out-of-order event streams. With real-time streams, data can arrive out of order (in time), or be re-sent, resulting in duplicates. The batch stack is not built to handle this peculiarity of event streams.

- Deeply-nested JSON and dynamic schemas. Real-time data streams typically arrive raw and semi-structured, say in the form of a JSON document, with many levels of nesting. Moreover, new fields and columns of data are constantly appearing. These can easily break rigid data pipelines in the batch world.

- Destination: Data Apps and Microservices. Real-time data streams typically power analytical or data applications whereas batch systems were built to power static dashboards. This fantastic piece about the anatomy of analytical applications defined a data app as an end-user facing application that natively includes large-scale, aggregate analysis of data in its functionality. This is an important shift, because developers are now end users and they tend to iterate and experiment fast, while demanding more flexibility than what was expected of batch systems.
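
To make two of the characteristics above concrete, here is a minimal Python sketch of what a pipeline has to do with raw events: drop re-sent duplicates, restore event-time order, and flatten nested JSON. The field names (`event_id`, `ts`, `user`) and both helper functions are assumptions made for illustration; they are not part of any product mentioned in this article, and a real system would do this continuously over a bounded window rather than on an in-memory list.

```python
from typing import Any, Dict, Iterable, List


def flatten(doc: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts into dotted keys; non-dict values are kept as-is."""
    flat: Dict[str, Any] = {}
    for key, value in doc.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def dedupe_and_order(events: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Drop re-sent events (same event_id) and sort the rest by event time."""
    seen = set()
    unique = []
    for event in events:
        if event["event_id"] in seen:
            continue  # duplicate delivery of an event we already kept
        seen.add(event["event_id"])
        unique.append(event)
    return sorted(unique, key=lambda e: e["ts"])


# Hypothetical events: "b" arrives before "a" and is then re-sent.
raw_events = [
    {"event_id": "b", "ts": 2, "user": {"id": 7, "geo": {"city": "Oslo"}}},
    {"event_id": "a", "ts": 1, "user": {"id": 7}},
    {"event_id": "b", "ts": 2, "user": {"id": 7, "geo": {"city": "Oslo"}}},
]
cleaned = [flatten(e) for e in dedupe_and_order(raw_events)]
```

Note that the second event has no `user.geo.city` field at all. A rigid, fixed-schema pipeline would have to be changed to accept the new field, which is the dynamic-schema problem described above.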

What Defines the Modern Real-Time Data Stack?

The real-time wave extends some of the core concepts of the Modern Data Stack in natural ways:

| Category | Modern Data Stack | Modern Real-Time Data Stack |
|---|---|---|
| Language | SQL | SQL |
| Deployment | Cloud-native | Cloud-native |
| Data Ops | Complex batch transformations every 15 minutes, hourly or daily | Simple incremental transformations every second |
| Insights | Monthly, Weekly or Daily | Instantly |
| Cost | Affordable at massive scale | Affordable at massive scale and speed |

- SQL Compatibility: SQL, despite being around for nearly a half century, continues to innovate. Embracing SQL as the standard for real-time data analytics is the most affordable and accessible choice.

- Cloud-Native Services: There is cloud, and there is cloud-native. For data engineering teams, cloud-native services are preferred whenever available, providing far better just-in-time scaling for dealing with fluid real-time data sources, so that they don't have to overprovision services or worry about downtime.

- Low Data Operations: Real-time data pipelines force a shift from complex batch transformations to simple continuous transformations. If you need to do a lot of schema management, denormalization of data, or flattening of JSON before any data can be ingested, then the pipeline is neither modern nor real-time.

- Instant Insights: A key requirement here is the ability to search, aggregate and join data as it arrives from different sources, detect anomalies in real time, and alert the right users wherever they consume their information (e.g., Salesforce or Slack).

- Affordability: Affordability has two dimensions -- human efficiency and resource efficiency. Today’s modern real-time data solutions are intuitive and easy to manage, requiring less headcount and less computing to deliver speed at scale.
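
The shift from batch to "simple incremental transformations" can be sketched in a few lines of Python. Instead of recomputing an aggregate over the whole dataset on a schedule, each arriving event updates a small running state. The `MinuteRollup` class, the one-minute bucket size, and the sample sales data are all assumptions chosen for illustration, not a description of how any particular database implements rollups.

```python
from collections import defaultdict
from typing import Dict, Optional, Tuple

Bucket = Tuple[int, str]  # (start of minute in epoch seconds, grouping key)


class MinuteRollup:
    """Keeps per-minute counts and sums up to date as each event arrives."""

    def __init__(self) -> None:
        self.count: Dict[Bucket, int] = defaultdict(int)
        self.total: Dict[Bucket, float] = defaultdict(float)

    def add(self, ts_seconds: int, key: str, amount: float) -> None:
        # Truncate the timestamp to the start of its minute.
        bucket = (ts_seconds // 60 * 60, key)
        self.count[bucket] += 1
        self.total[bucket] += amount

    def average(self, minute_start: int, key: str) -> Optional[float]:
        bucket = (minute_start, key)
        n = self.count.get(bucket, 0)
        return self.total[bucket] / n if n else None


# Hypothetical sales events: (timestamp in seconds, store, amount).
rollup = MinuteRollup()
for ts, store, amount in [(0, "cafe-1", 4.0), (30, "cafe-1", 6.0), (61, "cafe-1", 3.0)]:
    rollup.add(ts, store, amount)

first_minute_avg = rollup.average(0, "cafe-1")
```

Each `add` call does a constant amount of work, so the aggregate is queryable the moment an event lands. The trade-off is that only aggregates which can be updated from running state (counts, sums, averages) fit this pattern directly.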

What are the Key Layers of Your Modern Real-Time Data Stack?

I talk to both customers and vendors in this space every day and here’s my view of the must-have technologies for a modern real-time data stack.

- Event and CDC Streams: This is driven by the interest in real-time clickstream and IoT sensor data. The best event streaming solutions are cloud-based, easy to manage, and cost-effective. Confluent Cloud, Amazon Kinesis and Google Pub/Sub all fit the bill. Confluent Cloud, in particular, provides a lower-ops, more affordable alternative to self-managed Apache Kafka. CDC streaming is also on the rise, as companies separate their real-time-capable analytics systems from their core operational databases. Database and CDC technologies have also matured, making CDC streaming easier and more reliable than in the past. While some OLTP databases can publish CDC streams natively, powerful tools have also stepped in to offload this compute-intensive work from the database. Tools such as Debezium and Striim can sync updates to analytical databases and have them ready for queries in under five seconds.

- Real-time ETL (or ELT) Service: For streamed data, most companies will prefer the flexibility of Extract, Load and Transform (ELT). The source data remains unblemished, while transformations can be done repeatedly inside the destination system as needed. Popular open-source streaming ETL solutions include Spark and Flink, with AWS Glue being a popular cloud deployment of Apache Spark. Apache Spark also has PySpark, an easy-to-use tool for transforming streaming data using Python. dbt Cloud is another SQL-based ELT tool which, while rooted in the batch world, has moved strongly into real time. That said, streaming ETL has come a long way, augmented by in-memory stream processing. It can be the right choice when you have massive datasets that require deduplication and other preprocessing before ingestion into your real-time analytics database.

- Real-Time Analytics Database: The lynchpin is an analytics database designed expressly to handle streaming data. That means it must be able to ingest massive data streams and make them ready for queries within seconds. Query results, even for complex queries, need to be returned more quickly still. And the number of concurrent queries must be able to scale without creating contention that slows down your ingest. A database that uses SQL for efficiency and separates the ingest compute from the query compute is a key prerequisite. Even better is a real-time analytics database that can perform rollups, searches, aggregations, joins and other SQL operations as the data is ingested. These are difficult requirements, and Rockset is one cloud-native real-time analytics database built to meet them. Note: there is an optional real-time ML pipeline with tools like Databricks and Tecton, which help with real-time feature generation and can work well with your real-time analytics database as the serving layer.

- Data API Layer for Real-Time Applications: BI dashboards and visualizations did their job well. But they are expensive, hard to use, and require data analysts to monitor them for changes. So BI did not democratize access to analytics. But API gateways will, by providing secure, simple, easy-to-build, and fast query access to the freshest data. This enables a new class of real-time applications, such as monitoring and tracking applications for cybersecurity, logistics or fraud detection that detect and analyze anomalies to minimize needless alerts. Or real-time recommendation engines and ML-driven customer chat systems that help personalize the customer experience. Or data visualization applications that enable decision makers to explore data in real time for guided, big-picture strategic decisions. We’re excited by APIs based on GraphQL, which was originally created at Facebook. Also check out tools such as Apache Superset and Grafana to help you build modern real-time data visualizations.

- Reverse ETL: With reverse ETL tools like Census, Hightouch and Omnata, you bring real-time insights back into your SaaS applications such as Salesforce, HubSpot, and Slack -- wherever your users live. This lets you get the most out of your data, reduce the number of data silos, and boost data-hungry operational teams such as marketing, sales, supply chain management, and customer support.

- Data Observability: With the real-time data stack, companies ingest higher volumes of data and act on them almost instantly. This means that monitoring the health of the data, and ensuring that it is indeed reliable, becomes even more important. The ability to monitor data freshness, data schemas, and lineage increases trust as more mission-critical applications, not just humans, start consuming the data. Leaders in this space, Bigeye and Monte Carlo, are ensuring that teams can measure and improve the quality of their data in real time.
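
As a rough illustration of the data freshness monitoring mentioned in the last layer, the sketch below measures how far the newest ingested event lags behind the current time and compares that lag with a threshold. The function names and the five-second default are assumptions for this example only; they do not reflect the API of Bigeye, Monte Carlo, or any other tool named above.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable


def freshness_lag(event_times: Iterable[datetime], now: datetime) -> timedelta:
    """Time elapsed between the newest ingested event and `now`.

    Raises ValueError if no events have been ingested at all.
    """
    return now - max(event_times)


def is_fresh(
    event_times: Iterable[datetime],
    now: datetime,
    max_lag: timedelta = timedelta(seconds=5),  # assumed threshold
) -> bool:
    """True if the newest event is no older than `max_lag`."""
    return freshness_lag(event_times, now) <= max_lag


# Hypothetical ingestion timestamps, 42, 3 and 17 seconds before `now`.
now = datetime(2021, 12, 9, 12, 0, 10, tzinfo=timezone.utc)
times = [now - timedelta(seconds=s) for s in (42, 3, 17)]

lag = freshness_lag(times, now)
fresh = is_fresh(times, now)
```

A check like this would typically run on a schedule against each table or stream and raise an alert when `is_fresh` returns `False`, so that stale data is caught before an application acts on it.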

Your Next Move

Some companies already have parts of the modern real-time data stack today, such as a Kafka stream. Others only want to upgrade piece by piece. That’s okay, but keep in mind that if you’re capturing data in real time but using a batch-oriented warehouse to analyze it, you’re not getting your money’s worth. Using the right tool for the job is important for getting the best results with the least effort, especially when the modern real-time data stack is so affordable and the potential ROI is so high.

I’d love to hear your thoughts around real-time data and analytics! Please comment below or contact me if you’d like to discuss the modern real-time data stack.

This was originally published on The New Stack as a contributed article by Shruti Bhat.

About the author

Shruti Bhat is Chief Product Officer and Senior Vice President of Marketing at Rockset. Prior to Rockset, she led Product Management for Oracle Cloud where she had a focus on AI, IoT and Blockchain, and was VP Marketing at Ravello Systems where she drove the start-up's rapid growth from pre-launch to hundreds of customers and a successful acquisition. Prior to that, she was responsible for launching VMware's vSAN and has led engineering teams at HP and IBM.