Three Reference Architectures for Real-Time Analytics On Streaming Data

April 27, 2023

This is part 3 in Rockset’s Making Sense of Real-Time Analytics (RTA) on Streaming Data series. In part 1, we covered the technology landscape for real-time analytics on streaming data. In part 2, we covered the differences between real-time analytics databases and stream processing. In this post, we’ll get to the details: how does one design an RTA system?

We’ve been helping customers implement real-time analytics since 2018. We’ve noticed many common patterns across streaming data architectures and we’ll be sharing a blueprint for three of the most popular: anomaly detection, IoT, and recommendations.

Our examples will all feature Rockset, but you can swap it out for other RTA databases, with a few use-case-specific caveats. We’ll make sure to call those out in each section, as well as important considerations for each use case.

Anomaly Detection

The general promise of real-time analytics is this: when it comes to analyzing data, fast is better than slow and fresh data is better than stale data. This is especially true for anomaly detection. To demonstrate how broadly applicable anomaly detection is, here are a few examples we’ve encountered:

- A two-sided marketplace monitors for suspiciously low transaction counts across various suppliers. They quickly identify and solve technical infrastructure issues before suppliers churn.

- A game development agency searches for suspiciously high win rates across its players, which helps it quickly identify cheaters, keep gameplay fair, and maintain high retention rates.

- An insurance company sets thresholds for various types of support tickets, identifying issues with services or products before they affect revenue.

Most anomaly detectors require both real-time streaming data and historical data in order to generate inferences. Our example architecture for anomaly detection will leverage both historical data and website activity to search for suspiciously low transaction counts.

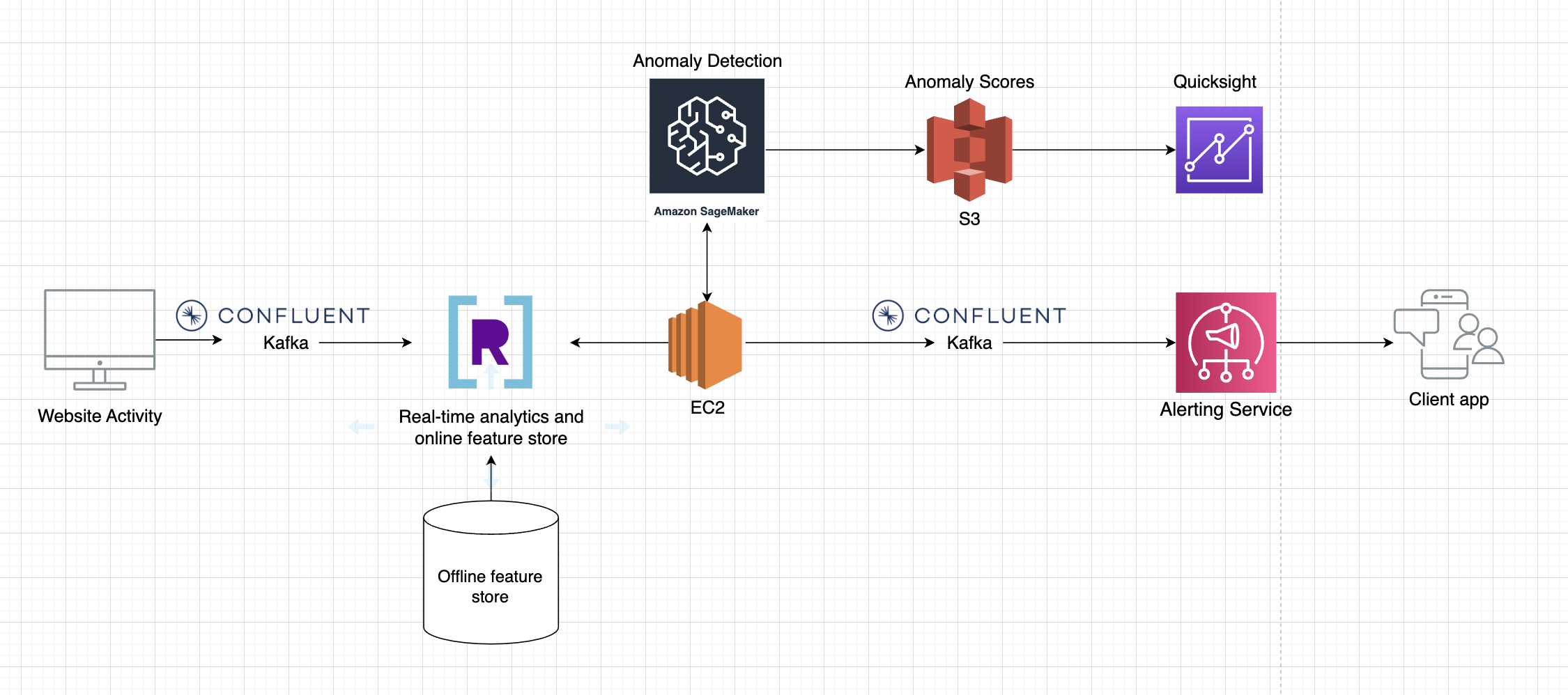

This architecture has a few key components:

- Streaming data: Streaming data is generated by website activity and transported to Rockset via Confluent Cloud.

- Offline feature store: Detecting anomalies requires historical data in order to have a baseline for comparisons. This data tends to be slow changing and is stored in an offline feature store. This could be a cloud data warehouse, a data lake, or a database.

- Online feature store: We’re using a real-time analytics database as an online feature store. The database has two primary jobs:

  - In order to effectively feed an anomaly detection model, you need a database with real-time ingest for streaming data. You have to make decisions on the freshest data available; otherwise, you risk making the wrong decision. Once data is ingested, the RTA database can execute either complex or trivial SQL queries to compute features related to transactions. Imagine incorporating data like lifetime transaction count, average transaction amount, geographical data, etc.

  - Generating features often requires joining multiple data sets. Therefore, choose an RTA database that supports high-performance joins to enrich real-time data with historical data. This will save you the pain inherent in denormalization.

- Polling: An EC2 instance periodically executes queries on the RTA database for features, feeds them into a machine learning model, and transports model results via Confluent Cloud.

- Model results: Model results are produced via Amazon SageMaker. To learn more about how to build fraud detection models, this is a great resource.

- Data lake: Additionally, anomaly scores are fed to a data lake for durable storage and visualization.

- Visualization: Model results are visualized in Amazon QuickSight for trend analysis and operational analytics.

- Alerting service: Anomaly scores are fed via Confluent Cloud to an alerting service, which can alert other teams, services, or apps, as required by business policies.
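The polling step in this architecture can be sketched in a few lines of Python. This is a minimal illustration rather than the model used in the architecture: it swaps the SageMaker model for a simple z-score against a historical baseline. The function names `low_count_zscore` and `flag_suppliers`, the threshold of 3.0, and the sample supplier data are all hypothetical. In practice, the baseline would come from the offline feature store and the live counts from a query against the RTA database.

```python
from statistics import mean, stdev


def low_count_zscore(history: list[int], current: int) -> float:
    """Return how many standard deviations `current` sits below the historical mean.

    `history` needs at least two points, because `stdev` is the sample
    standard deviation.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any drop at all is treated as extreme.
        return 0.0 if current >= mu else float("inf")
    return (mu - current) / sigma


def flag_suppliers(baselines: dict, live_counts: dict, threshold: float = 3.0) -> dict:
    """Map each supplier whose live count is suspiciously low to its score."""
    flagged = {}
    for supplier, history in baselines.items():
        # A supplier with no live events at all counts as zero transactions.
        current = live_counts.get(supplier, 0)
        score = low_count_zscore(history, current)
        if score >= threshold:
            flagged[supplier] = score
    return flagged


# Hypothetical data: hourly transaction counts per supplier.
baselines = {
    "supplier_a": [100, 104, 98, 102, 96],
    "supplier_b": [40, 42, 38, 41, 39],
}
live_counts = {"supplier_a": 99, "supplier_b": 12}

for supplier, score in flag_suppliers(baselines, live_counts).items():
    print(f"{supplier}: {score:.1f} standard deviations below baseline")
```

Only `supplier_b` is flagged here, since its live count falls far below its usual range. A real deployment would run this on a schedule and publish the scores to the alerting service.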

There are better and worse RTA databases for anomaly detection. Here’s what we’ve found to be important as we’ve worked with real customers:

- Ingest latency: If your real-time data source (website activity in our case) is producing inserts and updates, a high rate of updates could reduce ingest performance. Some RTA databases handle inserts with high performance, but incur large penalties when processing updates or duplicates (Apache Pinot, for example), which often results in a delay between events being produced and the information in those events being available for queries. Rockset is a fully mutable database and processes updates as quickly as it processes inserts.

- Ingest performance: In addition to ingest latency, your RTA database might face streaming data that’s high in volume and velocity. If the RTA database uses a batch or microbatch ingest method (ClickHouse or Apache Druid, for example), there could be significant delays between events being produced and their availability for querying. Rockset allows you to scale compute independently for ingest and querying, which prevents compute contention. It also efficiently handles massive streaming data volumes.

- Mutability: We’ve highlighted the performance impact of updates, but it’s important to ask whether an RTA database can handle updates at all, let alone at high performance. Not all RTA databases are mutable, and yet anomaly detection might require updates to comply with GDPR, to fix errors, or for any number of other reasons.

- Joins: Sometimes the process of enriching or joining streaming data with historical data is called backfilling. For anomaly detection, historical data is essential. Ensure your RTA database can accomplish this without denormalization or data engineering gymnastics. It will save significant operational time, energy, and money. Rockset supports high-performance joins at query time for all data sources, even for deeply nested objects.

- Flexibility: Make sure your RTA database is flexible. Rockset supports ad-hoc queries, automatic indexing, and the flexibility to edit queries on the fly, without admin support.
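To make the mutability and join considerations concrete, here is a small sketch in which plain Python dictionaries stand in for the database. It assumes each event carries an `id` and an event timestamp `ts`. Those field names, the last-write-wins rule, and the helpers `apply_event` and `enrich` are illustrative assumptions, not a description of how any particular RTA database implements updates or joins.

```python
def apply_event(store: dict, event: dict) -> None:
    """Upsert an event by id, keeping the version with the newest timestamp."""
    existing = store.get(event["id"])
    if existing is None or event["ts"] >= existing["ts"]:
        store[event["id"]] = event
    # Otherwise the event is a stale duplicate or late update and is ignored.


def enrich(store: dict, history: dict) -> list:
    """Join live transactions to historical supplier attributes at read time."""
    rows = []
    for txn in sorted(store.values(), key=lambda t: t["id"]):
        # Left join: transactions without history keep only their own fields.
        rows.append({**txn, **history.get(txn["supplier"], {})})
    return rows


store: dict = {}
events = [
    {"id": "t1", "supplier": "s1", "amount": 20, "ts": 1},
    {"id": "t1", "supplier": "s1", "amount": 25, "ts": 3},  # correction
    {"id": "t1", "supplier": "s1", "amount": 20, "ts": 1},  # late duplicate
    {"id": "t2", "supplier": "s2", "amount": 5, "ts": 2},
]
for event in events:
    apply_event(store, event)

# Hypothetical historical features keyed by supplier.
history = {"s1": {"avg_amount": 22.0}}
print(enrich(store, history))
```

Because the join happens when the data is read, the historical table can change without rewriting the streamed records, which is the main advantage over denormalizing at ingest.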

IoT Analytics

IoT, or the internet of things, involves deriving insights from large numbers of connected devices, which are capable of collecting vast amounts of real-time data. IoT analytics provides a way to harness this data to learn about environmental factors, equipment performance, and other critical business metrics. IoT can sound buzzword-y and abstract, so here are a few concrete use cases we’ve encountered:

- An agriculture company uses connected sensors to identify irregularities in nutrients and water to ensure crop yield is healthy. In margin-sensitive businesses like agriculture, any factor that negatively affects yields needs to be dealt with as quickly as possible. In addition to surfacing nutrient issues, IoT AgTech can make consumption more efficient. Using sensors to monitor water silo levels, soil moisture, and nutrients helps prevent overwatering and overfeeding, and ultimately helps conserve resources. This results in less environmental waste and higher yield, aligning business goals with sustainability goals.

- A software as a service (SaaS) company provides a platform for buildings to monitor carbon dioxide levels, infrastructure failures, and climate control. This is the classic “smart building” use case, but the sudden rise in remote and hybrid work has made building capacity planning an additional challenge. Occupancy sensors help businesses understand usage patterns across buildings, floors, and meeting rooms. This is powerful data; choosing the right amount of office space has meaningful cost ramifications.

The volume and real-time nature of IoT makes it a natural use case for streaming data analytics. Let’s take a look at a simple architecture and important features to consider.

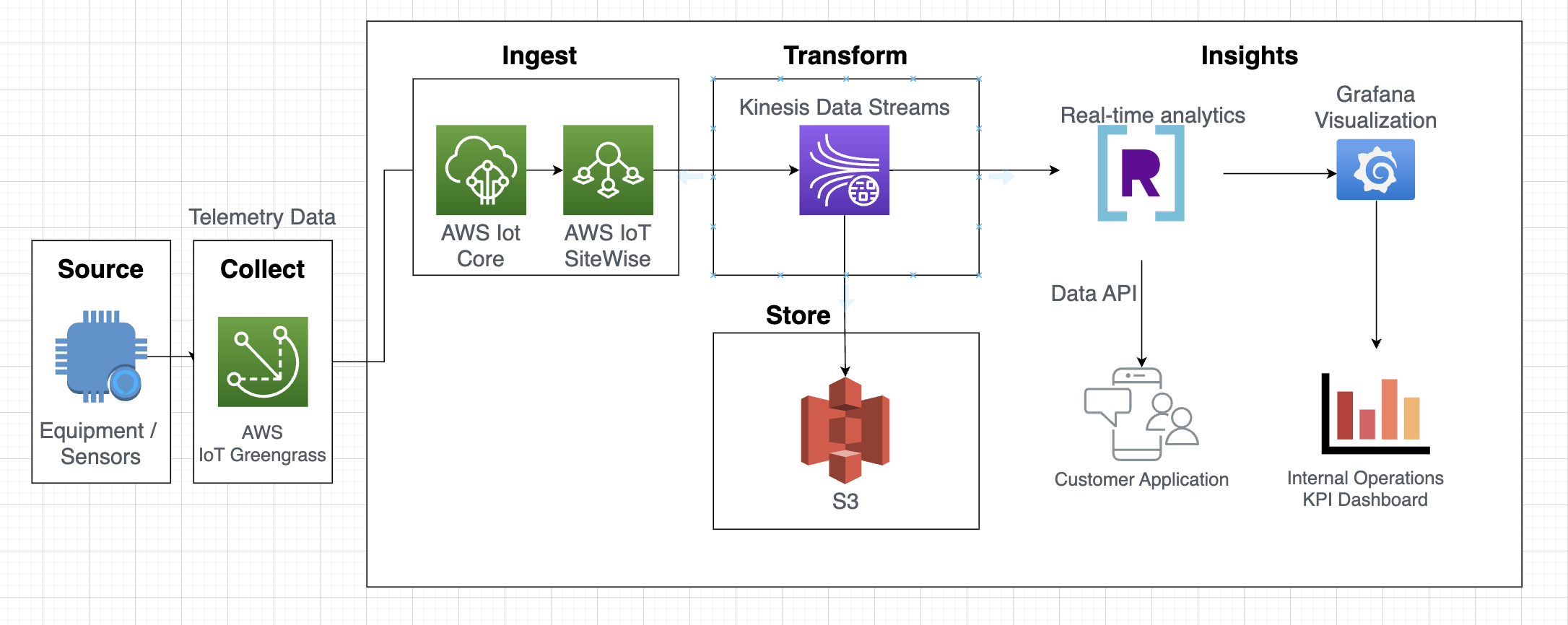

This architecture has a few key components:

- Sensors: Inclinometer metrics are generated by sensors placed throughout a building. These sensors trigger alarms if shelving or equipment exceeds “tilt” thresholds. They also help operators assess the risk of collision or impacts.

- Cloud-based edge integration: AWS IoT Greengrass connects sensors to the cloud, enabling them to send streaming data to AWS.

- Ingestion layer: AWS IoT Core and AWS IoT SiteWise provide a central location for storing and routing events in common industrial formats, reducing complexity for IoT architectures.

- Streaming data: Amazon Kinesis Data Streams is the transport layer that sends events to durable storage as well as a real-time analytics database.

- Data lake: S3 is being used as the durable storage layer for IoT events.

- Real-time analytics database: Rockset ingests streaming data from Amazon Kinesis Data Streams and makes it available for complex analytical queries by applications.

- Visualization: Rockset is also integrated with Grafana, to visualize, analyze, and monitor IoT sensor data. Note that Grafana can also be configured to send notifications when thresholds are met or exceeded.
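The tilt-threshold alarm described for the sensors is essentially a time-windowed query. The sketch below shows the idea in plain Python, assuming each reading is a `(sensor_id, epoch_seconds, tilt_degrees)` tuple. That tuple layout, the function name `sensors_over_threshold`, and the sample values are hypothetical; in the architecture above, the equivalent logic would run as a query against the RTA database or as a Grafana alert rule.

```python
def sensors_over_threshold(readings, now, window_seconds, threshold_degrees):
    """Return sensors whose peak tilt inside the trailing window exceeds the threshold."""
    window_start = now - window_seconds
    peak = {}
    for sensor_id, ts, tilt in readings:
        # Ignore readings outside the trailing window.
        if window_start <= ts <= now:
            peak[sensor_id] = max(peak.get(sensor_id, tilt), tilt)
    return sorted(s for s, tilt in peak.items() if tilt > threshold_degrees)


readings = [
    ("rack-1", 100, 2.0),
    ("rack-1", 170, 6.5),
    ("rack-2", 20, 9.0),   # large tilt, but outside the window
    ("rack-2", 160, 1.0),
]
print(sensors_over_threshold(readings, now=180, window_seconds=60, threshold_degrees=5.0))
```

Restricting the check to a trailing window keeps old spikes, like the early `rack-2` reading, from triggering alarms long after the fact.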

When implementing an IoT analytics platform, there are a few important considerations to keep in mind as you choose a database to analyze sensor data:

- Rollups: IoT tends to produce high-volume streaming data, only a subset of which is typically needed for analytics. When individual events reach the database, they can be aggregated or consolidated to save space. It’s important that your RTA database supports rollups at ingestion to reduce storage cost and improve query performance. Rockset supports rollups for all common streaming data sources.

- Consistency: As in the other examples in this article, the streaming platform that delivers events to your RTA database will occasionally deliver events that are out of order, incomplete, late, or duplicated. Your RTA database should be able to update both records and query results accordingly.

- Ingest performance: Similar to other use cases in this article, ingest performance is incredibly important when streaming data is arriving at high velocities. Ensure you stress test your RTA database with realistic data volumes and velocities. Rockset was designed for high-volume, high-velocity use cases, but every database has its limits.

- Time-based queries: Ensure your RTA database has a columnar index partitioned on time, especially if your IoT use case requires time-windowed queries (which it almost certainly will). This feature will improve query latency significantly. Rockset can partition its columnar index by time.

- Automatic data-retention policies: As with all high-volume streaming data use cases, ensure your RTA database supports automatic data retention policies. This will significantly reduce storage costs. Historical data is available for querying in your data lake. Rockset supports time-based retention policies at the collection (table) level.
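Rollups and retention are easier to reason about with a small example. The following sketch aggregates raw readings into one-minute buckets and then drops buckets older than a retention window. It is only an illustration of the two ideas in plain Python: the tuple layout `(sensor_id, epoch_seconds, tilt_degrees)`, the 60-second bucket size, and the helper names `rollup` and `apply_retention` are assumptions, and a real RTA database would perform both steps internally through its own configuration.

```python
BUCKET_SECONDS = 60


def rollup(readings, bucket_seconds=BUCKET_SECONDS):
    """Aggregate raw readings into per-sensor, per-bucket count/min/max/sum rows."""
    buckets = {}
    for sensor_id, ts, tilt in readings:
        # Truncate the timestamp to the start of its bucket.
        key = (sensor_id, ts - ts % bucket_seconds)
        row = buckets.get(key)
        if row is None:
            buckets[key] = {"count": 1, "min": tilt, "max": tilt, "sum": tilt}
        else:
            row["count"] += 1
            row["min"] = min(row["min"], tilt)
            row["max"] = max(row["max"], tilt)
            row["sum"] += tilt
    return buckets


def apply_retention(buckets, now, retention_seconds):
    """Keep only buckets that start within the retention window."""
    cutoff = now - retention_seconds
    return {key: row for key, row in buckets.items() if key[1] >= cutoff}


readings = [
    ("shelf-1", 0, 1.0),
    ("shelf-1", 30, 3.0),
    ("shelf-1", 65, 2.0),
    ("shelf-2", 10, 0.5),
]
rolled = rollup(readings)
recent = apply_retention(rolled, now=120, retention_seconds=60)
print(len(readings), "raw readings ->", len(rolled), "rollup rows ->", len(recent), "retained")
```

Storing count, min, max, and sum per bucket still lets queries compute averages and peaks, while the raw events remain available in the data lake if they are ever needed.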

Recommendations

Personalization is a recommendation technique that delivers custom experiences based on a user’s prior interactions with a company or service. Two examples we’ve encountered with customers include:

- An insurance company delivers personalized, risk-adjusted pricing by using both historical and real-time risk factors, including credit history, employment status, assets, collateral, and more. This pricing model reduces risk for the insurer and reduces policy prices for the consumer.

- An eCommerce marketplace recommends products based on users’ browsing history, what’s in stock, and what similar users have purchased. By surfacing relevant products, the eCommerce company increases conversion from browsing to sale.

Below is a sample architecture for an eCommerce personalization use case.

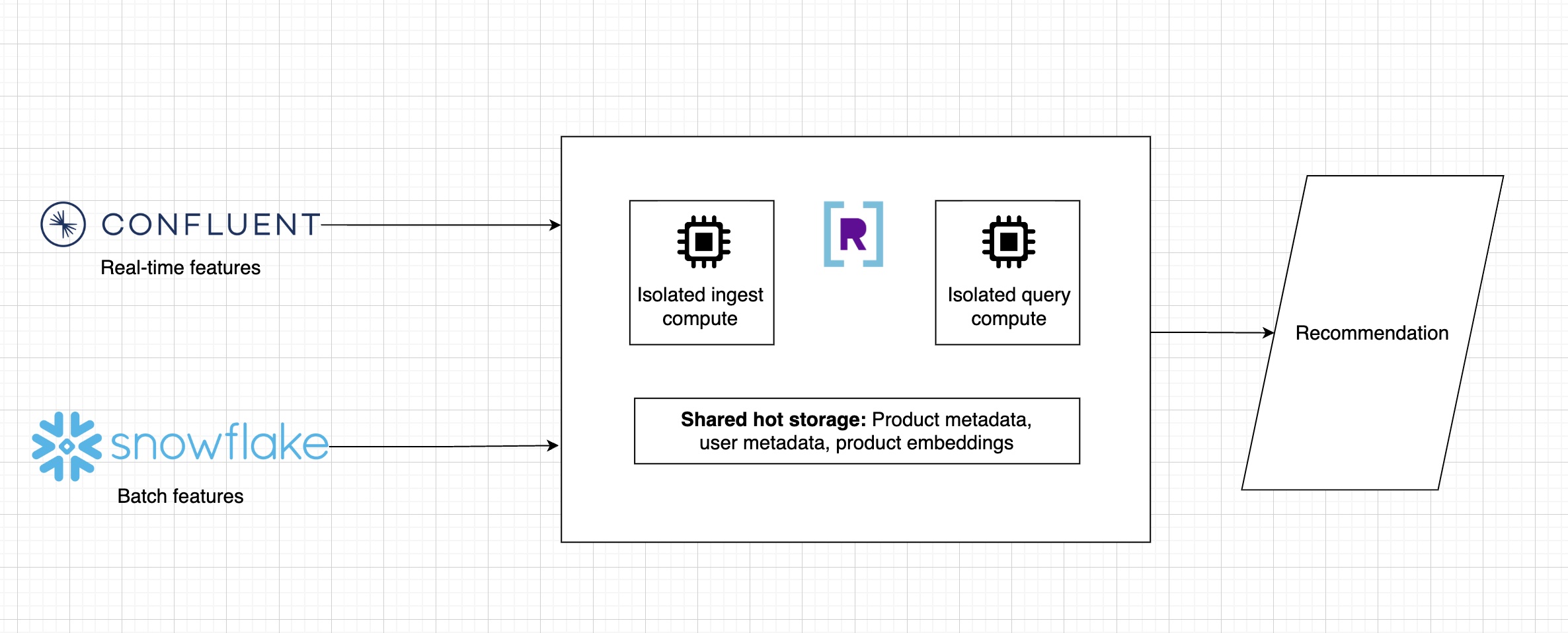

The key components for this architecture are:

- Streaming data: Streaming data is generated by customer website behavior. It’s converted to embeddings and transported via Confluent Cloud to an RTA database.

- Cloud data warehouse: Pre-computed batch / historical features are ingested into an RTA database from Snowflake.

- Real-time analytics database (ingestion): Because Rockset offers compute-compute separation, it can isolate compute for ingest. This ensures predictable performance without overprovisioning, even during periods of bursty queries.

- Real-time analytics database (querying): A separate virtual instance, with its own compute and memory, is dedicated to the application’s analytical queries for personalization. Rockset can support rules-based and machine learning-based algorithms for personalization. In this example, we’re featuring a machine learning-based algorithm, with Rockset ingesting and indexing vector embeddings.

When it comes to RTA databases, this use case has a few unique characteristics to consider:

- Vector search: Vector search is a method for finding similar items or documents in a high-dimensional vector space. The queries calculate similarities between vector representations using distance functions such as Euclidean distance or cosine similarity. In our case, queries are written to find similarities between products, while filtering both real-time metadata, like product availability, and historical metadata, like a user’s previous purchases. If an RTA database supports vector search, you can use distance functions on embeddings directly in SQL queries. This will simplify your architecture considerably, deliver low-latency recommendation results, and enable metadata filtering. Rockset supports vector search in a way that makes product recommendations easy to implement.

- SQL: Any team that’s implemented analytics directly on streaming data, which usually arrives as semi-structured data, understands the difficulty of handling deeply-nested objects and attributes. While an RTA database that supports SQL isn’t a hard requirement, it’s a feature that will simplify operations, reduce the need for data engineering, and increase the productivity of engineers writing queries. Rockset supports SQL out of the box, including on nested objects and arrays.

- Performance: For real-time personalization to be useful, it must be able to quickly analyze fresh data. Efficacy will increase as end-to-end latency decreases. Therefore, the faster an RTA database can ingest and query data, the better. Avoid databases with end-to-end latency greater than 2 seconds. Rockset has the ability to spin up dedicated compute for ingestion and querying, eliminating compute contention. With Rockset, you can achieve ~1 second ingest latency and millisecond-latency SQL queries.

- Joining data: There are many ways to join streaming data to historical data: ksql, denormalization, ETL jobs, etc. However, for this use case, life is easier if the RTA database itself can join data sources at query time. Denormalization, for example, is a slow, brittle and expensive way to get around joins. Rockset supports high-performance joins between streaming data and other sources.

- Flexibility: In many cases, you’ll want to add data attributes on the fly (new product categories, for example). Ensure your RTA database can handle schema drift; this will save many engineering hours as models and their inputs evolve. Rockset is schemaless at ingest and automatically infers schema at query time.
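The vector search consideration above combines a similarity ranking with metadata filters. The sketch below shows that combination in plain Python using cosine similarity and a brute-force scan. It is an illustration under stated assumptions, not Rockset’s implementation or SQL syntax: the `recommend` function, the product records, the two-dimensional embeddings, and the `in_stock` field are all hypothetical, and a production system would typically use an index rather than scanning every product.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(query_embedding, products, already_purchased, k=2):
    """Return the ids of the k most similar products that pass the metadata filters."""
    # Real-time filter: in stock. Historical filter: not previously purchased.
    candidates = [
        p for p in products
        if p["in_stock"] and p["id"] not in already_purchased
    ]
    ranked = sorted(
        candidates,
        key=lambda p: cosine_similarity(query_embedding, p["embedding"]),
        reverse=True,
    )
    return [p["id"] for p in ranked[:k]]


products = [
    {"id": "boots", "embedding": [0.9, 0.1], "in_stock": True},
    {"id": "sandals", "embedding": [0.8, 0.3], "in_stock": False},
    {"id": "tent", "embedding": [0.1, 0.9], "in_stock": True},
    {"id": "socks", "embedding": [0.7, 0.2], "in_stock": True},
]
print(recommend([1.0, 0.0], products, already_purchased={"socks"}))
```

Here `sandals` is excluded because it is out of stock and `socks` because the user already bought it, so the ranking only considers `boots` and `tent`. Filtering before ranking is what lets a single query respect both fresh and historical metadata.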

Conclusion

Given the staggering progress in the fields of machine learning and artificial intelligence, it’s clear that business-critical decision making can and should be automated. Streaming, real-time data is the backbone of automation; it feeds models with information about what’s happening now. Companies across industries need to architect their software to leverage streaming data so that they’re real time end-to-end.

There are many real-time analytics databases that make it possible to quickly analyze fresh data. We built Rockset to make this process as simple and efficient as possible, for both startups and large organizations. If you’ve been dragging your feet on implementing real-time analytics, it’s never been easier to get started. You can try Rockset right now, with $300 in credits, without entering your credit card. And if you’d like a 1:1 tour of the product, we have a world-class engineering team that would love to speak with you.