When Real-Time Matters: Rockset Delivers 70ms Data Latency at 20MB/s Streaming Ingest

June 8, 2023

Streaming data adoption continues to accelerate with over 80% of Fortune 100 companies already using Apache Kafka to put data to use in real time. Streaming data often sinks to real-time search and analytics databases which act as a serving layer for use cases including fraud detection in fintech, real-time statistics in esports, personalization in eCommerce and more. These use cases are latency sensitive with even milliseconds of data delays resulting in revenue loss or risk to the business.

As a result, customers ask about the end-to-end latency they can achieve on Rockset, that is, the time from when data is generated to when it is made available for queries. Today, Rockset is releasing a benchmark that achieves 70 ms of data latency at 20 MB/s of streaming ingest throughput.

Rockset’s ability to ingest and index data within 70 ms is a milestone that many large enterprise customers have struggled to reach for their mission-critical applications. With this benchmark, Rockset gives confidence to enterprises building next-generation applications on real-time streaming data from Apache Kafka, Confluent Cloud, Amazon Kinesis and more.

Several recent product enhancements led Rockset to achieve millisecond-latency streaming ingestion:

- Compute-compute separation: Rockset separates streaming ingest compute, query compute and storage for efficiency in the cloud. The new architecture also reduces the CPU overhead of writes by eliminating duplicative ingestion tasks.

- RocksDB: Rockset is built on RocksDB, a high-performance embedded storage engine. Rockset recently upgraded to RocksDB 7.8.0+ which offers several enhancements that minimize write amplification.

- Data Parsing: Rockset has schemaless ingest and supports open data formats and deeply nested data in JSON, Parquet, Avro formats and more. To run complex analytics over this data, Rockset converts the data at ingest time into a standard proprietary format using efficient, custom-built data parsers.

In this blog, we describe the testing configuration, results and performance enhancements that led to Rockset achieving 70 ms data latency on 20 MB/s of throughput.

Performance Benchmarking for Real-Time Search and Analytics

There are two defining characteristics of real-time search and analytics databases: data latency and query latency.

Data latency measures the time from when data is generated to when it is queryable in the database. For real-time scenarios, every millisecond matters: it can make the difference in catching fraudsters in their tracks, keeping gamers engaged with adaptive gameplay, surfacing personalized products based on online activity and more.

Query latency measures the time to execute a query and return a result. Applications want to minimize query latency to create snappy, responsive experiences that keep users engaged. Rockset has benchmarked query latency on the Star Schema Benchmark, an industry-standard benchmark for analytical applications, and was able to beat both ClickHouse and Druid, delivering query latencies as low as 17 ms.

In this blog, we benchmarked data latency at different ingestion rates using RockBench. Data latency has increasingly become a production requirement as more and more enterprises build applications on real-time streaming data. We’ve found from customer conversations that many other data systems struggle under the weight of high throughput and cannot achieve predictable, performant data ingestion for their applications. The issue is a lack of (a) purpose-built systems for streaming ingest and (b) systems that can scale ingestion to keep processing data even as throughput from event streams increases rapidly.

The goal of this benchmark is to showcase that it’s possible to build low-latency search and analytical applications on streaming data.

Using RockBench for Measuring Throughput and Latency

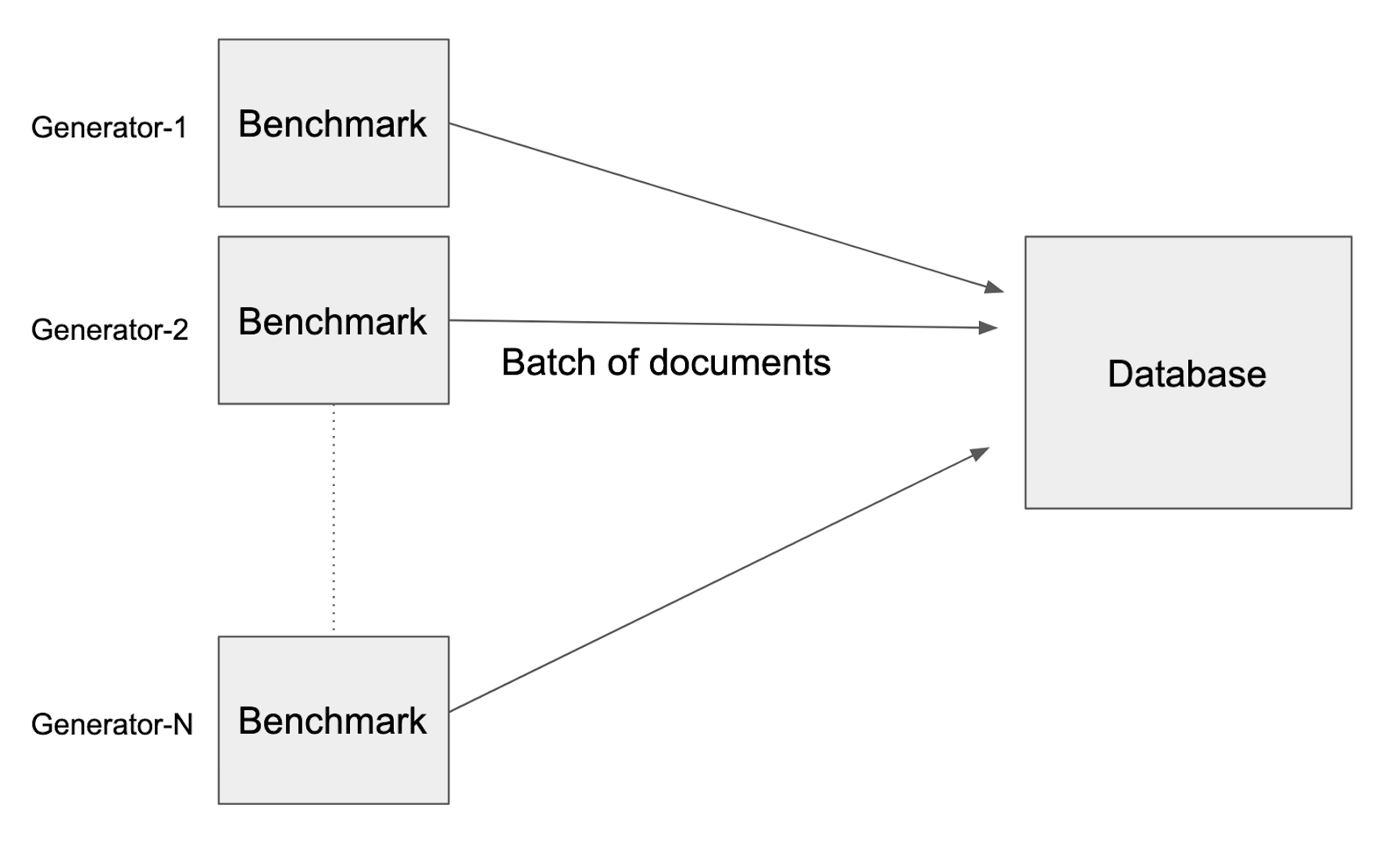

We evaluated Rockset’s streaming ingest performance using RockBench, a benchmark which measures the throughput and end-to-end latency of databases.

RockBench has two components: a data generator and a metrics evaluator. The data generator writes events to the database every second; the metrics evaluator measures the throughput and end-to-end latency.

The data generator creates 1.25 KB documents, with each document representing a single event. At this document size, 8,000 writes per second is the equivalent of 10 MB/s.
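The sizing arithmetic can be checked with a short sketch. It assumes decimal units (1 MB = 1,000 KB) and reads the write figure as a per-second rate; the function name is illustrative and is not part of RockBench.

```python
DOC_SIZE_KB = 1.25  # document size stated for the RockBench data generator


def writes_per_second(throughput_mb_s: float, doc_size_kb: float = DOC_SIZE_KB) -> float:
    """Documents per second needed to sustain a throughput (assumes 1 MB = 1,000 KB)."""
    return throughput_mb_s * 1000 / doc_size_kb


if __name__ == "__main__":
    # The two throughput levels held constant in this benchmark.
    for mb_s in (10, 20):
        print(f"{mb_s} MB/s -> {writes_per_second(mb_s):,.0f} documents/s")
```

Under these assumptions, 10 MB/s corresponds to 8,000 documents per second and 20 MB/s to 16,000.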

To mirror semi-structured events in realistic scenarios, each document has 60 fields with nested objects and arrays. The document also contains several fields that are used to calculate the end-to-end latency:

- _id: The unique identifier of the document

- _event_time: Reflects the clock time of the generator machine

- generator_identifier: 64-bit random number

The _event_time of each document is then subtracted from the current time of the machine to arrive at the data latency for each document. This measurement also includes round-trip latency—the time required to run the query and get results from the database. This metric is published to a Prometheus server and the p50, p95 and p99 latencies are calculated across all evaluators.
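The latency calculation described above can be sketched roughly as follows. This is an illustration, not RockBench's code: it assumes microsecond timestamps, uses synthetic documents, and computes nearest-rank percentiles locally, whereas the benchmark publishes the metric to Prometheus. The function names are hypothetical.

```python
import math
import time


def data_latency_ms(event_time_us: int, now_us: int) -> float:
    """Data latency for one document: observation time minus its _event_time."""
    return (now_us - event_time_us) / 1000.0


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of latency samples."""
    if not samples:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


if __name__ == "__main__":
    now_us = time.time_ns() // 1000  # evaluator clock, in microseconds
    # Synthetic _event_time values: documents generated 1..100 ms before "now".
    event_times_us = [now_us - ms * 1000 for ms in range(1, 101)]
    latencies = [data_latency_ms(t, now_us) for t in event_times_us]
    for pct in (50, 95, 99):
        print(f"p{pct}: {percentile(latencies, pct):.0f} ms")
```

Because the evaluator reads `_event_time` back through a query, a real measurement taken this way also includes the query round trip, as noted above.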

In this performance evaluation, the data generator inserts new documents to the database and does not update any existing documents.

Rockset Configuration and Results

All databases make tradeoffs between throughput and latency when ingesting streaming data with higher throughput incurring latency penalties and vice versa.

We recently benchmarked Rockset’s performance against Elasticsearch at maximum throughput and Rockset achieved up to 4x faster streaming data ingestion. For this benchmark, we minimized data latency to display how Rockset performs for use cases demanding the freshest data possible.

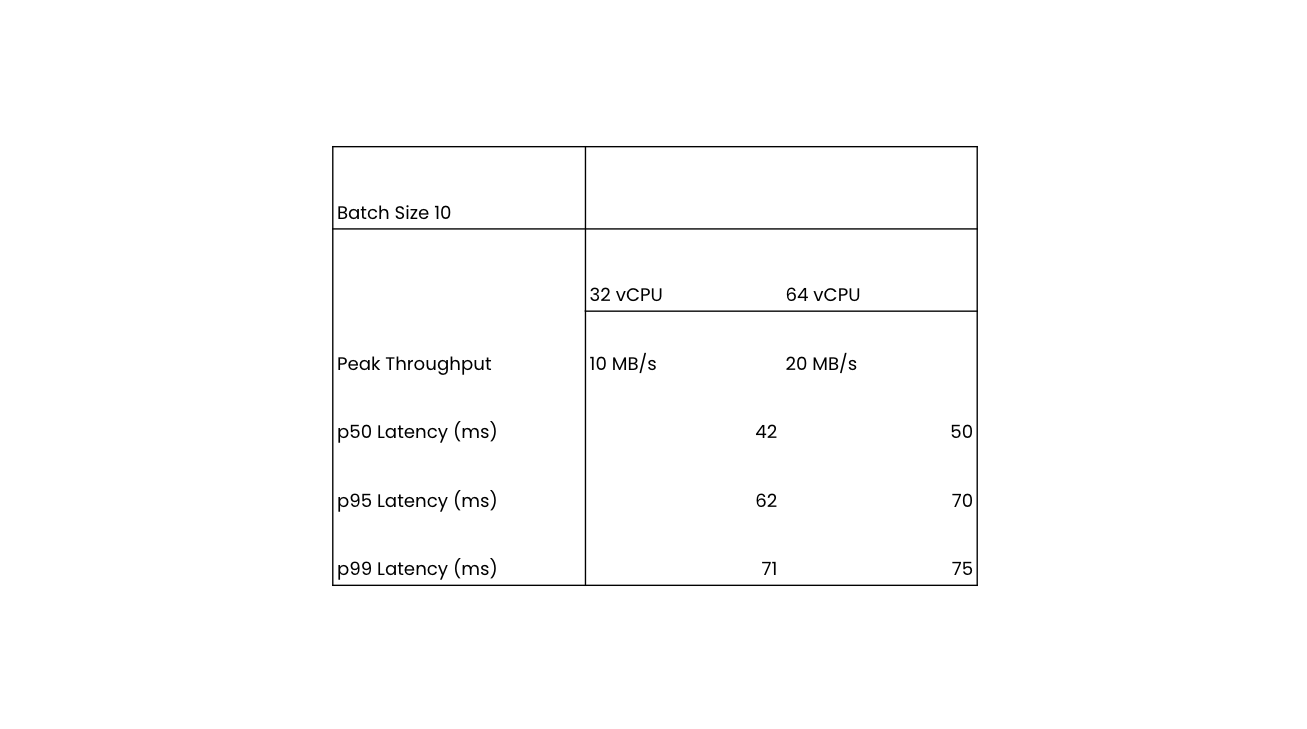

We ran the benchmark using a batch size of 10 documents per write request on a starting Rockset collection size of 300 GB. The benchmark held the ingestion throughput constant at 10 MB/s and 20 MB/s and recorded the p50, p95 and p99 data latencies.
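To get a feel for the request load this configuration implies, the sketch below converts throughput into write requests per second. It assumes the 1.25 KB document size from the RockBench description, decimal units (1 MB = 1,000 KB) and that every request carries a full batch; the function name is illustrative.

```python
DOC_SIZE_KB = 1.25  # document size from the RockBench data generator
BATCH_SIZE = 10     # documents per write request in this benchmark


def write_requests_per_second(
    throughput_mb_s: float,
    doc_size_kb: float = DOC_SIZE_KB,
    batch_size: int = BATCH_SIZE,
) -> float:
    """Write requests per second needed to sustain a throughput with full batches."""
    docs_per_second = throughput_mb_s * 1000 / doc_size_kb
    return docs_per_second / batch_size


if __name__ == "__main__":
    for mb_s in (10, 20):
        print(f"{mb_s} MB/s -> {write_requests_per_second(mb_s):,.0f} write requests/s")
```

Under these assumptions, 10 MB/s works out to 800 write requests per second and 20 MB/s to 1,600.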

The benchmark was run on XL and 2XL virtual instances, which are dedicated allocations of compute and memory resources. The XL virtual instance has 32 vCPU and 256 GB of memory, and the 2XL has 64 vCPU and 512 GB of memory.

Here are the summary results of the benchmark at p50, p95 and p99 latencies on Rockset:

At p95, Rockset achieved 70 ms of data latency at 20 MB/s of throughput. The results show that as throughput scales and the size of the virtual instance increases, Rockset maintains similar data latencies. Furthermore, the p95 and p99 data latencies are clustered close together, showing predictable performance.

Rockset Performance Enhancements

There are several performance enhancements that enable Rockset to achieve millisecond data latency:

Compute-Compute Separation

Rockset recently unveiled a new cloud architecture for real-time analytics: compute-compute separation. The architecture allows users to spin up multiple, isolated virtual instances on the same shared data. With the new architecture in place, users can isolate the compute used for streaming ingestion from the compute used for queries, ensuring not just high performance, but predictable, efficient high performance. Users no longer need to overprovision compute or add replicas to overcome compute contention.

One of the benefits of this new architecture is that we've been able to eliminate duplicate tasks in the ingestion process so that all data parsing, data transformation, data indexing and compaction only happen once. This significantly reduces the CPU overhead required for ingestion, while maintaining reliability and enabling users to achieve even better price-performance.

RocksDB Upgrade

Rockset uses RocksDB as its embedded storage engine under the hood. The team at Rockset created and open-sourced RocksDB while at Facebook, and it is currently used in production at LinkedIn, Netflix, Pinterest and other web-scale companies. Rockset selected RocksDB for its performance and ability to handle frequently mutating data efficiently. Rockset leverages the latest version of RocksDB, version 7.8.0+, to reduce write amplification by more than 10%.

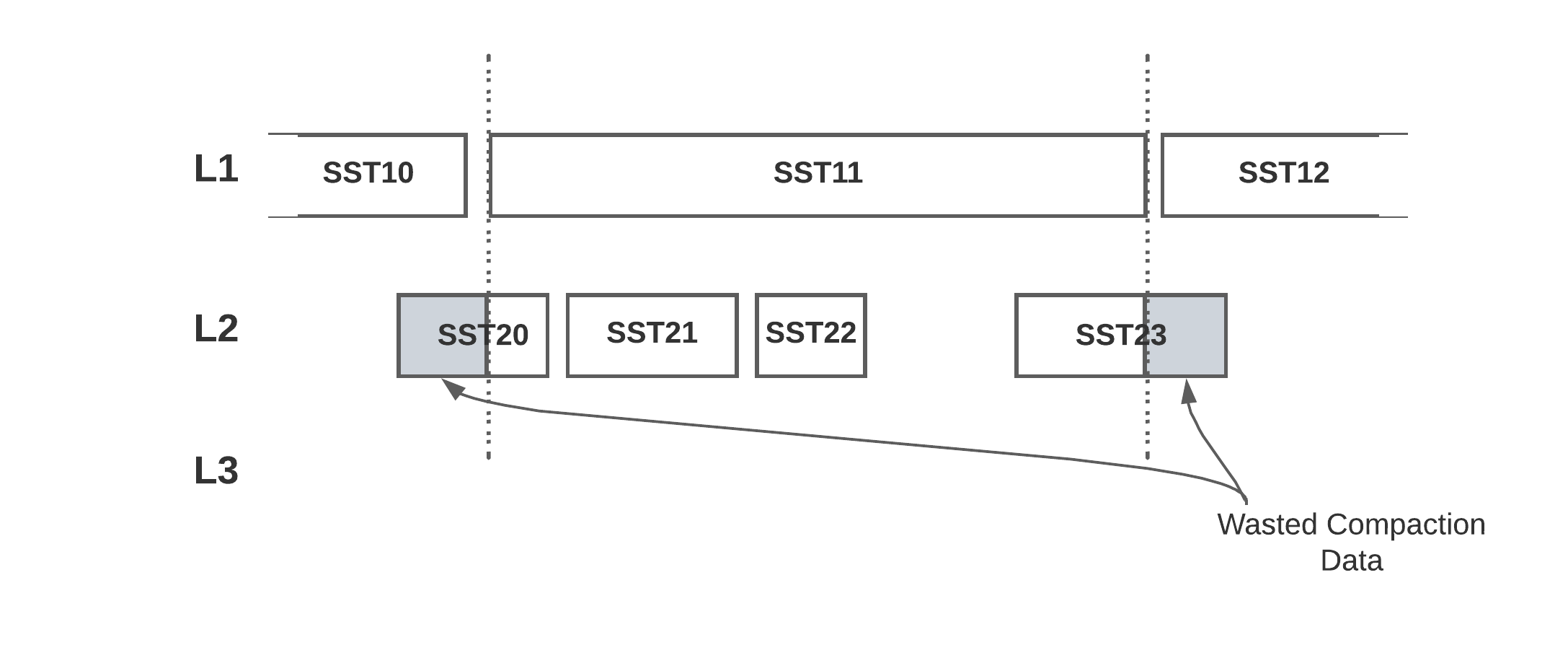

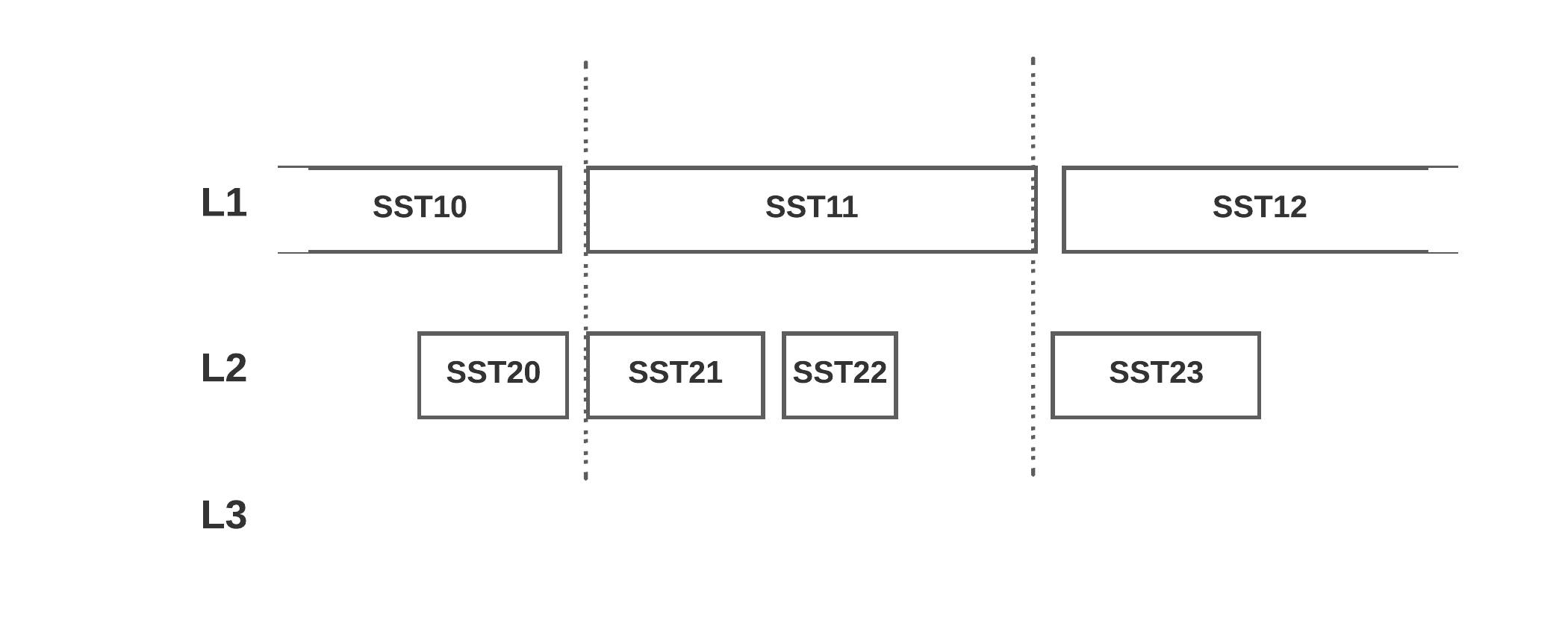

Previous versions of RocksDB used a partial merge compaction algorithm, which picks one file from the source level and compacts it into the next level. Compared to a full merge compaction, this produces smaller compactions and better parallelism. However, it also results in write amplification.

In RocksDB version 7.8.0+, the compaction output file is cut earlier and is allowed to be larger than targeted_file_size so that compaction output files align with the files in the next level. This reduces write amplification by more than 10 percent.

Upgrading to this new version of RocksDB reduces write amplification, which means better ingest performance, as reflected in the benchmark results.
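For readers less familiar with the term, write amplification is conventionally the ratio of bytes physically written to storage to bytes written by the application. The sketch below shows what a 10% reduction means in those terms. The byte counts are hypothetical and chosen only for illustration; they are not measurements from this benchmark, and the function names are not part of RocksDB.

```python
def write_amplification(storage_bytes_written: float, app_bytes_written: float) -> float:
    """Ratio of bytes written to storage to bytes written by the application."""
    if app_bytes_written <= 0:
        raise ValueError("application bytes written must be positive")
    return storage_bytes_written / app_bytes_written


def storage_bytes_after_reduction(storage_bytes_written: float, reduction: float) -> float:
    """Storage bytes written after cutting write amplification by `reduction` (0.10 = 10%)."""
    return storage_bytes_written * (1 - reduction)


if __name__ == "__main__":
    GB = 10**9
    app_bytes = 100 * GB       # hypothetical volume ingested by the application
    storage_bytes = 1000 * GB  # hypothetical volume written by flushes and compactions
    before = write_amplification(storage_bytes, app_bytes)
    after = write_amplification(storage_bytes_after_reduction(storage_bytes, 0.10), app_bytes)
    print(f"write amplification before: {before:.1f}, after a 10% reduction: {after:.1f}")
```

Every byte that compaction does not have to rewrite leaves CPU and disk bandwidth available for new writes, which is why lower write amplification helps ingest performance.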

Custom Parsers

Rockset has schemaless ingest and supports a wide variety of data formats including JSON, Parquet, Avro, XML and more. Rockset’s ability to natively support SQL on semi-structured data minimizes the need for upstream pipelines that add data latency. To make this data queryable, Rockset converts the data into a standard proprietary format at ingestion time using data parsers.

Data parsers are responsible for downloading and parsing data to make it available for indexing. Rockset’s legacy data parsers leveraged open-source components that did not use memory or compute efficiently. Additionally, the legacy parsers converted data to an intermediary format before converting it again to Rockset’s proprietary format. To minimize latency and compute, Rockset rewrote the data parsers as custom-built components. The custom data parsers are twice as fast as the legacy parsers, helping to achieve the data latency results captured in this benchmark.

How Performance Enhancements Benefit Customers

Rockset delivers predictable, high-performance ingestion that enables customers across industries to build applications on streaming data. Here are a few examples of latency-sensitive applications built on Rockset in the insurance, gaming, healthcare and financial services industries:

- Insurance industry: The digitization of the insurance industry is prompting insurers to deliver policies that are tailored to the risk profiles of customers and adapted in real time. A Fortune 500 insurance company provides instant insurance quotes based on hundreds of risk factors, requiring less than 200 ms data latency in order to generate real-time insurance quotes.

- Gaming industry: Real-time leaderboards boost gamer engagement and retention with live metrics. A leading esports gaming company requires 200 ms data latency to show how games progress in real time.

- Financial services: Financial management software helps companies and individuals track their financial health and where their money is being spent. A Fortune 500 company uses real-time analytics to provide a 360-degree view of finances, displaying the latest transactions in under 500 ms.

- Healthcare industry: Health information and patient profiles are constantly changing with new test results, medication updates and patient communication. A leading healthcare player helps clinical teams monitor and track patients in real time, with a data latency requirement of under 2 seconds.

Rockset scales ingestion to support high velocity streaming data without incurring any negative impact on query performance. As a result, companies across industries are unlocking the value of real-time streaming data in an efficient, accessible way. We’re excited to continue to push the lower limits of data latency and share the latest performance benchmark with Rockset achieving 70 ms data latency on 20 MB/s of streaming data ingestion.

You too can experience these performance enhancements automatically and without requiring infrastructure tuning or manual upgrades by starting a free trial of Rockset today.

Richard Lin and Kshitij Wadhwa, software engineers at Rockset, performed the data latency investigation and testing on which this blog is based.