The vector database built for hybrid search at scale

Build, iterate and scale AI apps in the cloud. Create relevant experiences with hybrid search, stay up to date with live data and serve tens of thousands of concurrent users on Rockset.

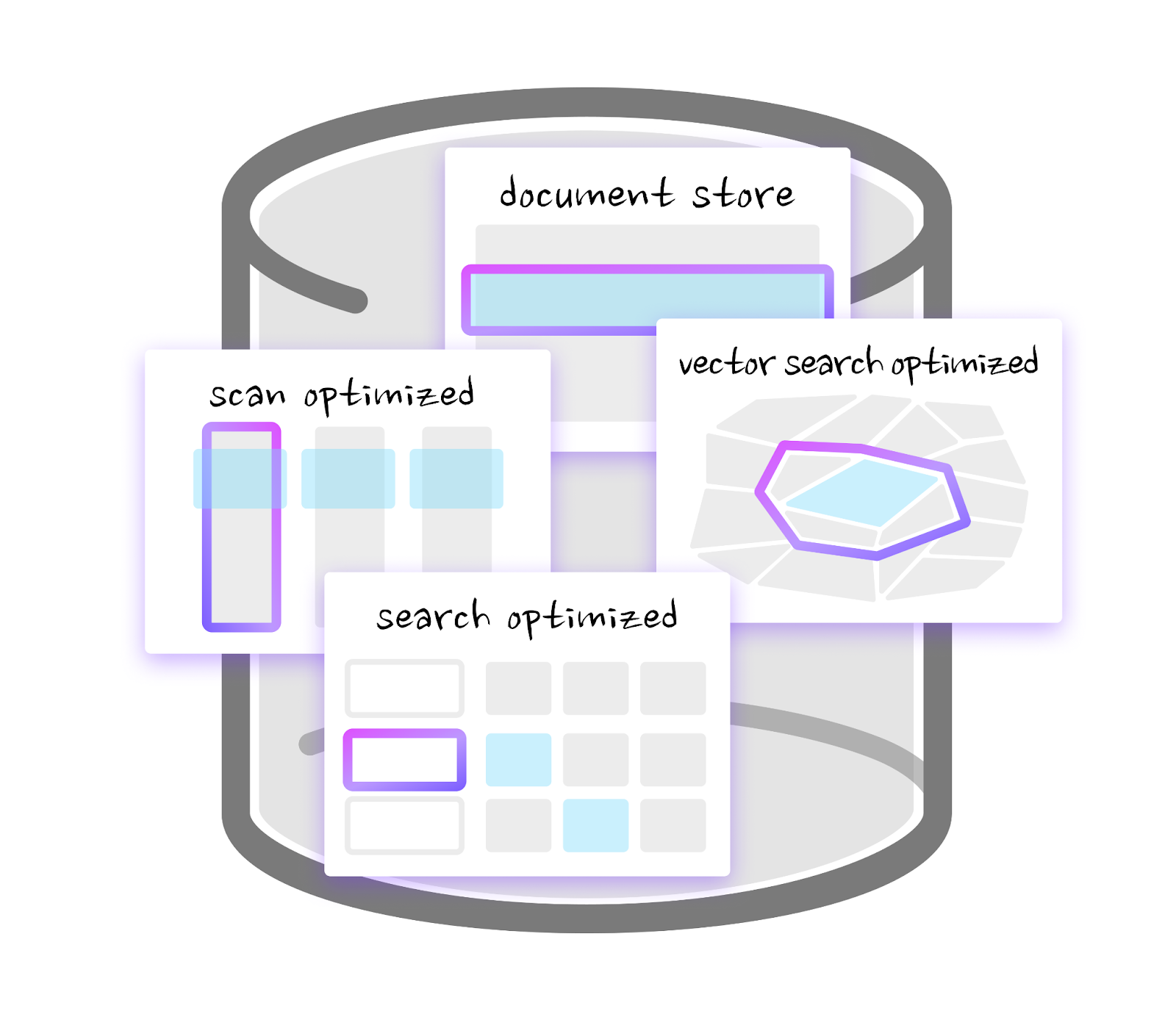

Rockset as a vector database

Create vector embeddings using any machine learning model (Hugging Face, OpenAI, Cohere, etc.) and index them for fast vector search. Combine vector search results with text search, geospatial search and structured search to enhance relevance. Use Rockset for retrieval augmented generation (RAG), personalization engines, semantic search, anomaly detection and more.
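To show what combining vector search with structured search means, here is a minimal in-memory sketch. It assumes that embeddings have already been produced by some model. It applies a structured filter, the analogue of a SQL WHERE clause, and then ranks the remaining documents by cosine similarity to a query vector. The names `Doc`, `cosine_similarity` and `hybrid_search` are hypothetical and are not Rockset's API. The scan is exact brute force, which is the linear cost that a vector index is meant to avoid at scale.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    """Hypothetical record: an ID, an embedding, and one structured field."""
    doc_id: str
    embedding: list[float]
    category: str


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def hybrid_search(docs: list[Doc], query: list[float], category: str, k: int) -> list[str]:
    """Structured filter first (like a SQL WHERE clause), then rank by similarity.

    This is an exact brute-force scan over every candidate; a vector index
    exists to avoid this linear cost on large collections.
    """
    candidates = [d for d in docs if d.category == category]
    candidates.sort(key=lambda d: cosine_similarity(d.embedding, query), reverse=True)
    return [d.doc_id for d in candidates[:k]]


docs = [
    Doc("a", [1.0, 0.0], "news"),
    Doc("b", [0.0, 1.0], "news"),
    Doc("c", [1.0, 0.1], "blog"),  # filtered out: wrong category
    Doc("d", [0.9, 0.1], "news"),
]
print(hybrid_search(docs, [1.0, 0.0], "news", k=2))  # ['a', 'd']
```

Filtering before ranking keeps the similarity computation limited to documents that can actually match. Text or geospatial predicates would slot in as additional filter conditions in the same place.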

Update indexes without impacting live search performance

With compute-compute separation, vector similarity indexing does not affect search performance. Indexing runs on a different virtual instance from the one serving searches, so query performance stays predictable. Take your AI applications to production with confidence.

Scale out to support multi-tenant applications at high concurrency

Rockset scales out compute and memory across multiple virtual instances to support high concurrency. Its search design is built for multi-tenant workloads, which speeds up search. Performance scales linearly with compute for efficient AI applications.

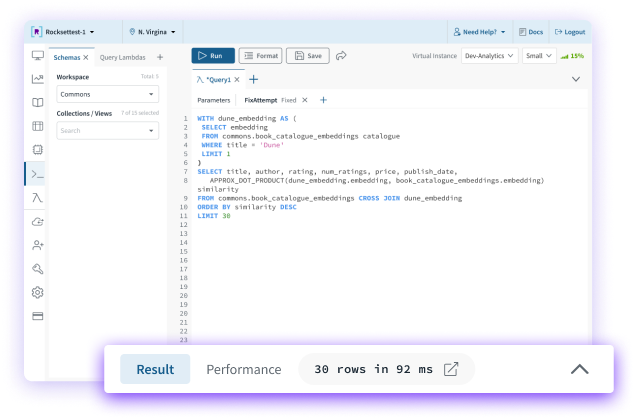

Hybrid search as easy as a SQL WHERE clause

Store and index vectors, text, geospatial and relational data to create relevant AI experiences. An integrated SQL engine takes advantage of the search index, so your searches execute quickly. Rank results using Reciprocal Rank Fusion (RRF) or a linear combination of scores with just a SQL ORDER BY clause.
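The two ranking strategies named above can be sketched outside the database. The Python example below is a minimal illustration of the formulas themselves. It is not Rockset's SQL syntax and does not reproduce its exact scoring. RRF gives each document the sum of 1 / (k + rank) across the ranked lists it appears in. The constant k = 60 is a commonly used default and is an assumption here. The linear combination is a weighted sum of per-list scores, and it assumes both score sets are already normalized to a comparable range. The function names and the `alpha` weight are hypothetical.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: each document scores sum(1 / (k + rank)), rank starting at 1."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; break ties by ID so the output is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


def linear_combination(
    vector_scores: dict[str, float],
    text_scores: dict[str, float],
    alpha: float = 0.5,
) -> list[str]:
    """Weighted sum alpha * vector + (1 - alpha) * text; a missing score counts as 0.

    Assumes both score sets are already normalized to a comparable range (e.g. [0, 1]).
    """
    ids = set(vector_scores) | set(text_scores)
    combined = {
        d: alpha * vector_scores.get(d, 0.0) + (1.0 - alpha) * text_scores.get(d, 0.0)
        for d in ids
    }
    return sorted(combined, key=lambda d: (-combined[d], d))


vector_ranking = ["a", "b", "c"]  # e.g. nearest neighbors by embedding similarity
text_ranking = ["b", "c", "d"]    # e.g. best keyword matches
print(reciprocal_rank_fusion([vector_ranking, text_ranking]))  # ['b', 'c', 'a', 'd']

print(linear_combination({"a": 0.9, "b": 0.5}, {"b": 0.8, "c": 0.6}))  # ['b', 'a', 'c']
```

RRF uses only rank positions, so it needs no score normalization. The trade-off is that it discards how much better one result scored than another. The linear combination keeps that information but depends on the scores being on comparable scales.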

We saw the immense power of real-time analytics and AI to transform JetBlue’s operations and stitching together 3-4 database solutions would have slowed down application development. With Rockset, we found a database that could keep up with the fast pace of innovation at JetBlue.

Sai Ravuru, Senior Manager of Data Science and Analytics
