20x Faster Ingestion with Rockset's New DynamoDB Connector

July 29, 2021

Since its introduction in 2012, Amazon DynamoDB has been one of the most popular NoSQL databases in the cloud. DynamoDB, unlike a traditional RDBMS, scales horizontally, obviating the need for careful capacity planning, resharding, and database maintenance. As a result, DynamoDB is the database of choice for companies building event-driven architectures and user-friendly, performant applications at scale, and it is central to many modern applications in ad tech, gaming, IoT, and financial services.

However, while DynamoDB is great for real-time transactions, it does not do as well for analytics workloads. Analytics workloads are where Rockset shines. To enable these workloads, Rockset provides a fully managed sync to DynamoDB tables with its built-in connector. The data from DynamoDB is automatically indexed in an inverted index, a column index, and a row index, which can then be queried quickly and efficiently.

Unsurprisingly, the DynamoDB connector is one of our most widely used data connectors. We see users move massive amounts of data, often terabytes, using the DynamoDB connector. At that scale of use, we soon uncovered shortcomings with our connector.

How the DynamoDB Connector Currently Works with the Scan API

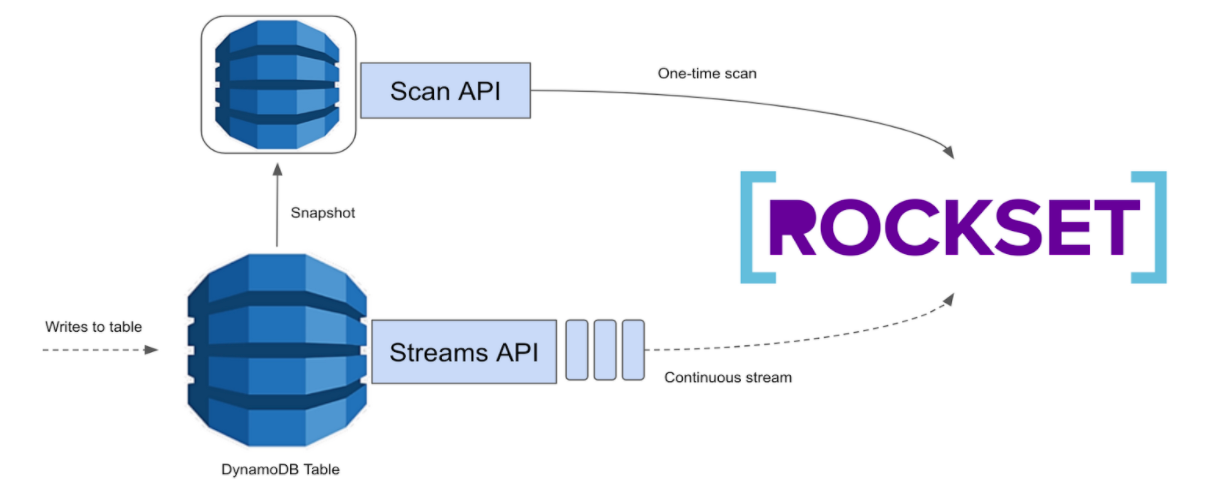

At a high level, we ingest data into Rockset using the current connector in two phases:

- Initial Dump: This phase uses DynamoDB’s Scan API for a one-time scan of the entire table.

- Streaming: This phase uses DynamoDB’s Streams API and consumes continuous updates made to a DynamoDB table in a streaming fashion.

Roughly, the initial dump gives us a snapshot of the data, to which the updates from the streaming phase are then applied. While the initial dump using the Scan API works well for small tables, it does not always do well for large data dumps.
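To make the snapshot-plus-updates idea concrete, here is a minimal sketch in Python. It is not Rockset's implementation: the `ChangeRecord` shape, the single global sequence number, and the `apply_changes` helper are all hypothetical simplifications (real DynamoDB Streams records are ordered per shard, not globally).

```python
from typing import Any, Dict, Iterable, Optional, Tuple

# Hypothetical change record: (sequence_number, primary_key, new_item).
# new_item is None when the item was deleted.
ChangeRecord = Tuple[int, str, Optional[Dict[str, Any]]]


def apply_changes(
    snapshot: Dict[str, Dict[str, Any]],
    changes: Iterable[ChangeRecord],
) -> Dict[str, Dict[str, Any]]:
    """Replay streamed changes, in sequence order, on top of a snapshot."""
    result = dict(snapshot)  # leave the caller's snapshot untouched
    for _, key, new_item in sorted(changes, key=lambda c: c[0]):
        if new_item is None:
            result.pop(key, None)  # delete (no-op if the key is absent)
        else:
            result[key] = new_item  # insert or overwrite
    return result


snapshot = {"a": {"v": 1}, "b": {"v": 2}}
changes = [
    (2, "a", {"v": 3}),
    (1, "a", {"v": 5}),
    (3, "b", None),
    (4, "c", {"v": 9}),
]
final_state = apply_changes(snapshot, changes)
```

The important property is that replaying updates is only correct if every change made since the snapshot began is still available, which is why stream retention matters for long-running dumps.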

There are two main issues with the connector's initial dump from DynamoDB as it stands today:

- Unconfigurable segment sizes: DynamoDB does not always balance segments uniformly, sometimes leading to a straggler segment that is inordinately larger than the others. Because parallelism is at segment granularity, we have seen straggler segments increase the total ingestion time for several users in production.

- Fixed DynamoDB stream retention: DynamoDB Streams capture change records in a log for up to 24 hours. This means that if the initial dump takes longer than 24 hours, the shards that were checkpointed at the start of the initial dump will have expired by then, leading to data loss.
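The interaction between these two issues can be illustrated with a little arithmetic. The sketch below assumes one worker per segment and a made-up per-worker throughput; the segment sizes and the `parallel_scan_hours` and `exceeds_stream_retention` helpers are hypothetical and chosen only to show the effect.

```python
from typing import List

STREAM_RETENTION_HOURS = 24  # DynamoDB Streams retention window


def parallel_scan_hours(segment_gb: List[float], gb_per_hour_per_worker: float) -> float:
    """With one worker per segment, the largest segment sets the total time."""
    return max(segment_gb) / gb_per_hour_per_worker


def exceeds_stream_retention(dump_hours: float) -> bool:
    """True if change records checkpointed at the start would have expired."""
    return dump_hours > STREAM_RETENTION_HOURS


# Two hypothetical 1 TB tables split into four segments each.
balanced = [250.0, 250.0, 250.0, 250.0]
skewed = [100.0, 100.0, 100.0, 700.0]  # one straggler segment

balanced_hours = parallel_scan_hours(balanced, gb_per_hour_per_worker=25.0)
skewed_hours = parallel_scan_hours(skewed, gb_per_hour_per_worker=25.0)

balanced_at_risk = exceeds_stream_retention(balanced_hours)
skewed_at_risk = exceeds_stream_retention(skewed_hours)
```

Under these assumed numbers, the same amount of data takes 10 hours when balanced but 28 hours with a single straggler, which pushes the dump past the 24-hour retention window.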

Improving the DynamoDB Connector with Export to S3

When AWS announced the launch of new functionality that allows you to export DynamoDB table data to Amazon S3, we started evaluating this approach to see if this would help overcome the shortcomings with the older approach.

At a high level, instead of using the Scan API to get a snapshot of the data, we use the new export table to S3 functionality. The export is not a drop-in replacement for the Scan API, so we also tweaked the streaming phase. Together, the export to S3 and the adjusted streaming phase form the basis of our new connector.
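For readers who have not used the export feature, the following sketch shows roughly how an export can be requested with boto3's `export_table_to_point_in_time` call. This is an illustration, not how the Rockset connector invokes it: the table ARN, bucket, and prefix are placeholders, the helper names are hypothetical, and the call assumes point-in-time recovery is enabled on the table and that AWS credentials are configured.

```python
from typing import Any, Dict


def build_export_request(table_arn: str, bucket: str, prefix: str) -> Dict[str, Any]:
    """Build the keyword arguments for a DynamoDB export-to-S3 request."""
    return {
        "TableArn": table_arn,
        "S3Bucket": bucket,
        "S3Prefix": prefix,
        "ExportFormat": "DYNAMODB_JSON",
    }


def start_export(table_arn: str, bucket: str, prefix: str) -> str:
    """Start the export and return its ARN (requires boto3 and AWS access)."""
    import boto3  # imported lazily so the request builder works without AWS

    client = boto3.client("dynamodb")
    response = client.export_table_to_point_in_time(
        **build_export_request(table_arn, bucket, prefix)
    )
    return response["ExportDescription"]["ExportArn"]


# Placeholder values for illustration only.
request = build_export_request(
    table_arn="arn:aws:dynamodb:us-west-2:123456789012:table/example",
    bucket="example-export-bucket",
    prefix="exports/example",
)
```

The export runs asynchronously on the AWS side, so a caller would typically poll the export's status before reading the files from S3.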

While the old connector took almost 20 hours to ingest 1TB end to end with a production workload running on the DynamoDB table, the new connector takes only about 1 hour, end to end. What's more, ingesting 20TB from DynamoDB takes only 3.5 hours, end to end! All you need to provide is an S3 bucket!

Benefits of the new approach:

- Does not consume the table's provisioned read capacity, and thus does not affect any production workload running on the DynamoDB table

- The export process is a lot faster than custom table-scan solutions

- S3 tasks can be configured to spread the load evenly so that we don’t have to deal with a heavily imbalanced segment, as we did with DynamoDB Scan segments

- Checkpointing with S3 comes for free (we just recently built support for this)
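As an illustration of what spreading the load evenly can look like, here is a minimal sketch that assigns exported files to a fixed number of ingestion tasks using a greedy largest-first heuristic. This is an assumption made for illustration, not a description of Rockset's scheduler; the file names, sizes, and the `assign_files` helper are hypothetical.

```python
import heapq
from typing import Dict, List


def assign_files(file_sizes: Dict[str, int], num_tasks: int) -> List[List[str]]:
    """Greedily give each file, largest first, to the least-loaded task."""
    heap = [(0, i) for i in range(num_tasks)]  # (current load, task index)
    heapq.heapify(heap)
    tasks: List[List[str]] = [[] for _ in range(num_tasks)]
    # Sort by size descending, then by name for a deterministic order.
    for name, size in sorted(file_sizes.items(), key=lambda kv: (-kv[1], kv[0])):
        load, idx = heapq.heappop(heap)
        tasks[idx].append(name)
        heapq.heappush(heap, (load + size, idx))
    return tasks


def task_loads(file_sizes: Dict[str, int], tasks: List[List[str]]) -> List[int]:
    """Total bytes (or any size unit) assigned to each task."""
    return [sum(file_sizes[name] for name in task) for task in tasks]


# Hypothetical export files with sizes in MB.
files = {"a": 700, "b": 300, "c": 300, "d": 200, "e": 100}
assignment = assign_files(files, num_tasks=2)
loads = task_loads(files, assignment)
```

Because the unit of work is a file rather than a fixed scan segment, one large file no longer has to dominate a single worker when smaller files can be packed around it.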

We are opening up access for public beta, and cannot wait for you to take this for a spin! Sign up here.

Happy ingesting and happy querying!