3 Ways to Offload Read-Heavy Applications from MongoDB

September 25, 2020

According to over 40,000 developers, MongoDB is the most popular NoSQL database in use right now. The tool’s meteoric rise is likely due to its JSON structure, which makes it easy for JavaScript developers to use. From a developer perspective, MongoDB is a great solution for supporting modern data applications. Nevertheless, developers sometimes need to pull specific workflows out of MongoDB and integrate them into a secondary system while continuing to track any changes to the underlying MongoDB data.

Tracking data changes, also referred to as “change data capture” (CDC), can help provide valuable insights into business workflows and support other real-time applications. There are a variety of methods your team can employ to help track data changes. This blog post will look at three of them: tailing the MongoDB oplog, using MongoDB change streams, and using a Kafka connector.

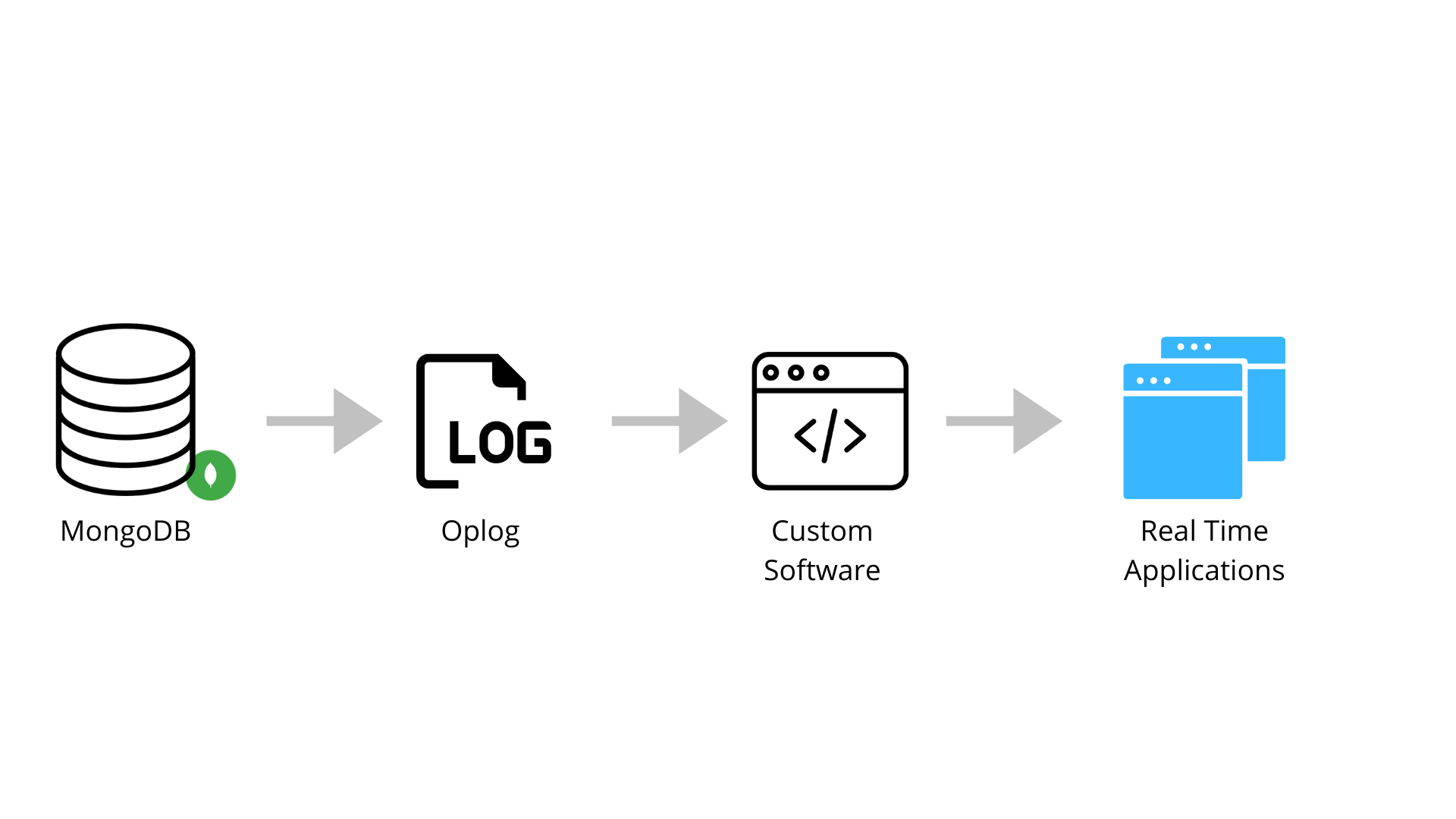

Tailing the MongoDB Oplog

The oplog (operations log) is a log that records the write operations applied to a MongoDB database. MongoDB keeps it as a capped collection when running as a replica set, and the members of the set use it to stay in sync with the primary. You can tail the oplog with a tailable cursor, which follows the log to its most recent entry and then waits for new ones. A tailable cursor can be used in a publish-subscribe fashion: as new changes come in, your code publishes them to an external subscriber, such as another live database instance.

You can set up a tailable cursor using a library like PyMongo in Python, with code similar to the example below. Note the clause while cursor.alive:. This while statement keeps the loop running for as long as the cursor is still alive, and doc refers to each oplog document that records a change.

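    import time

    import pymongo
    import redis
    from bson.json_util import dumps

    # Placeholder URI in the form redis://:<password>@<host>:<port>/<db>
    redis_uri = "redis://:mypassword@hostname.redislabs.com:12345/0"
    r = redis.Redis.from_url(redis_uri)

    client = pymongo.MongoClient()
    oplog = client.local.oplog.rs

    # Start from the most recent entry in the oplog
    first = oplog.find().sort('$natural', pymongo.DESCENDING).limit(-1).next()
    row_ts = first['ts']

    while True:
        # Tailable cursor that waits for entries newer than the last one seen
        cursor = oplog.find(
            {'ts': {'$gt': row_ts}},
            cursor_type=pymongo.CursorType.TAILABLE_AWAIT,
            oplog_replay=True,
        )
        while cursor.alive:
            for doc in cursor:
                row_ts = doc['ts']
                # Serialize the BSON document before storing it in Redis.
                # The 'h' key matches the example entry below; newer MongoDB
                # versions may omit it, so adjust the key as needed.
                r.set(str(doc['h']), dumps(doc))
            time.sleep(1)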

MongoDB stores its data, including the data in MongoDB’s oplog, in what it refers to as documents.

In the code above, the documents are referenced in the for loop for doc in cursor:. This loop allows you to access the individual changes on a document-by-document basis.

The ts key holds the timestamp of each operation, which the code uses to keep track of its position in the oplog. You can see the ts key in the example oplog document below, shown in mongo shell notation:

{ "ts" : Timestamp(1422998574, 1), "h" : NumberLong("-6781014703318499311"), "v" : 2, "op" : "i", "ns" : "test.mycollection", "o" : { "_id" : 1, "data" : "hello" } }
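To make the fields in this entry more concrete, here is a minimal sketch of how a consumer might interpret one. The helper name describe_oplog_entry is hypothetical, and the ts value is a plain tuple standing in for a BSON Timestamp so the snippet runs without a database.

```python
# Operation codes commonly seen in the "op" field of oplog entries.
OP_NAMES = {
    "i": "insert",
    "u": "update",
    "d": "delete",
    "c": "command",
    "n": "no-op",
}


def describe_oplog_entry(entry):
    """Summarize an oplog entry as (operation, database, collection, payload)."""
    operation = OP_NAMES.get(entry["op"], "unknown")
    # "ns" is "<database>.<collection>"; collection names may contain dots,
    # so split only on the first one.
    database, _, collection = entry["ns"].partition(".")
    return operation, database, collection, entry.get("o")


example = {
    "ts": (1422998574, 1),  # stand-in for a BSON Timestamp
    "v": 2,
    "op": "i",
    "ns": "test.mycollection",
    "o": {"_id": 1, "data": "hello"},
}

print(describe_oplog_entry(example))
```

A subscriber could use the operation name and namespace to decide which entries to forward and which to skip.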

Tailing the oplog does pose several challenges which surface once you have a scaled application requiring secondary and primary instances of MongoDB. In this case, the primary instance acts as the parent database that all of the other databases use as a source of truth.

Problems arise if a network outage occurs before your primary database has fully replicated its latest writes. If a new primary is elected without those writes, your tailing cursor will start in a new location, and the unsynced operations will be rolled back when the old primary rejoins the replica set. This means that your database will drop these operations, even though your cursor may already have passed them along. It is possible to capture data changes reliably when the primary database fails; however, to do so, your team will have to develop a system to manage failovers.

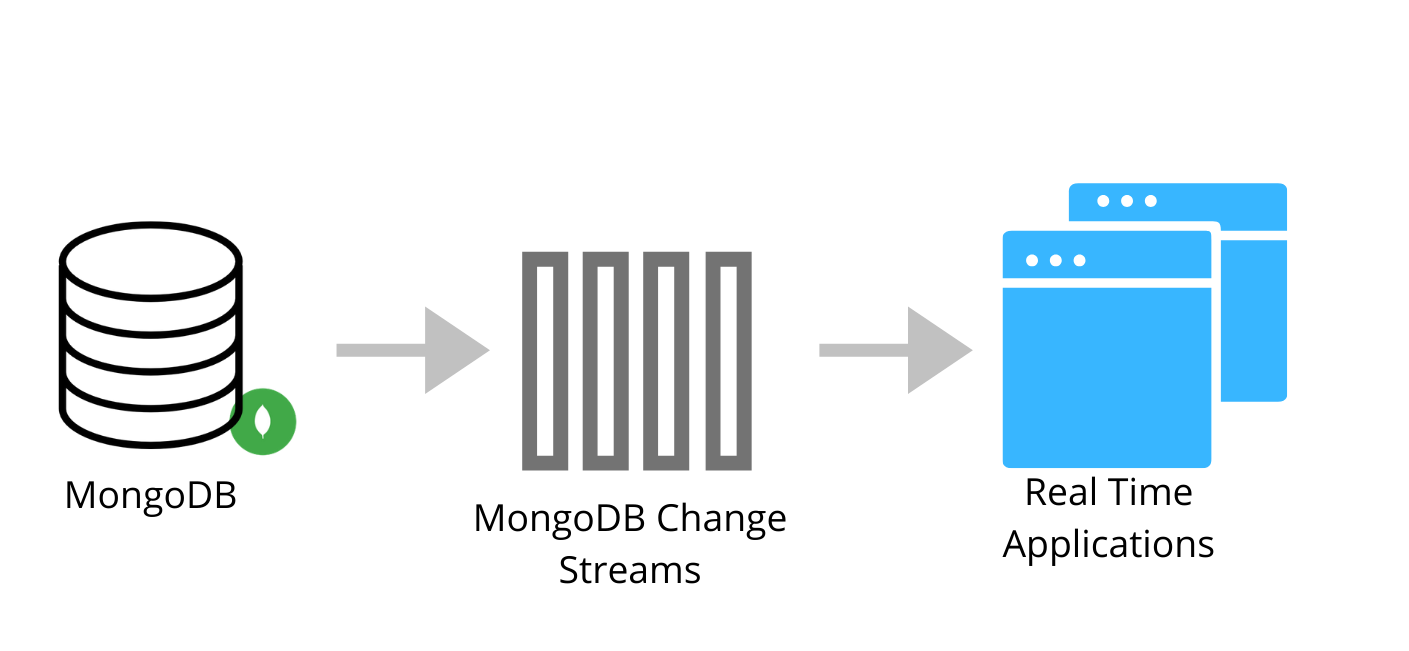

Using MongoDB Change Streams

Tailing the oplog is both code-heavy and highly dependent upon the MongoDB infrastructure’s stability. Because tailing the oplog creates a lot of risk and can lead to your data becoming disjointed, using MongoDB change streams is often a better option for syncing your data.

The change streams feature was developed to provide easy-to-track live streams of MongoDB changes, including updates, inserts, and deletes. It is also much more durable during network outages, because it uses resume tokens to keep track of the last event your change stream delivered. Change streams don’t require a separate pub-sub (publish-subscribe) system such as Kafka or RabbitMQ. MongoDB tracks your data changes for you and streams them to whatever application is listening, which can then update your target database or application.

You can still use the PyMongo library to interface with MongoDB. In this case, you will create a change_stream that acts like a consumer in Kafka and serves as the entity that watches for changes in MongoDB. This process is shown below:

    import os

    import pymongo
    from bson.json_util import dumps

    client = pymongo.MongoClient(os.environ['CHANGE_STREAM_DB'])
    change_stream = client.changestream.collection.watch()

    for change in change_stream:
        print(dumps(change))
        print('')  # for readability only

Using change streams is a great way to avoid the issues encountered when tailing the oplog. Change streams are also a natural choice for capturing data changes, since that is what they were developed to do.
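The resume tokens mentioned earlier can be checkpointed so that a restarted consumer continues where it left off. The following is a rough sketch rather than a definitive implementation: it assumes collection behaves like a PyMongo Collection, and load_token, save_token, and handle are hypothetical callables supplied by your application.

```python
def follow_changes(collection, load_token, save_token, handle):
    """Process change events, checkpointing the resume token after each one.

    `collection` is expected to behave like a PyMongo Collection.
    `load_token`, `save_token`, and `handle` are hypothetical callables
    provided by the application.
    """
    token = load_token()  # None on the very first run
    # resume_after asks MongoDB to continue just after the stored token.
    with collection.watch(resume_after=token) as stream:
        for change in stream:
            handle(change)
            # Each event's _id is its resume token.
            save_token(change["_id"])
```

Saving the token only after handle returns means an event may be processed twice after a crash but should not be skipped, so the handler is best kept idempotent.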

That said, basing your real-time application on MongoDB change streams has one big drawback: You’ll still need to design and build the data sets, most likely indexed, that your external applications will query. As a result, your team will need to take on more complex technical work that can slow down development. Depending on how heavy your application is, this challenge might create a problem. Despite this drawback, using change streams does pose less risk overall than tailing the oplog does.
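To give a sense of the extra work involved, here is a simplified sketch of keeping a secondary data set in step with change events. The function apply_change and the in-memory dict are illustrative assumptions, not part of any library, and the sketch handles only top-level fields; real update events can carry dotted paths for nested fields.

```python
def apply_change(view, change):
    """Apply one change event to `view`, a dict keyed by document _id."""
    op = change["operationType"]
    doc_id = change["documentKey"]["_id"]

    if op in ("insert", "replace"):
        view[doc_id] = dict(change["fullDocument"])
    elif op == "update":
        doc = view.setdefault(doc_id, {"_id": doc_id})
        desc = change["updateDescription"]
        doc.update(desc.get("updatedFields", {}))
        for field in desc.get("removedFields", []):
            doc.pop(field, None)
    elif op == "delete":
        view.pop(doc_id, None)
    # Other event types (drop, rename, invalidate, ...) are ignored here.
    return view


view = {}
apply_change(view, {
    "operationType": "insert",
    "documentKey": {"_id": 1},
    "fullDocument": {"_id": 1, "data": "hello"},
})
apply_change(view, {
    "operationType": "update",
    "documentKey": {"_id": 1},
    "updateDescription": {"updatedFields": {"data": "world"}, "removedFields": []},
})
print(view)
```

In practice the dict would be replaced by whatever indexed store your application reads from, which is where most of the design effort goes.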

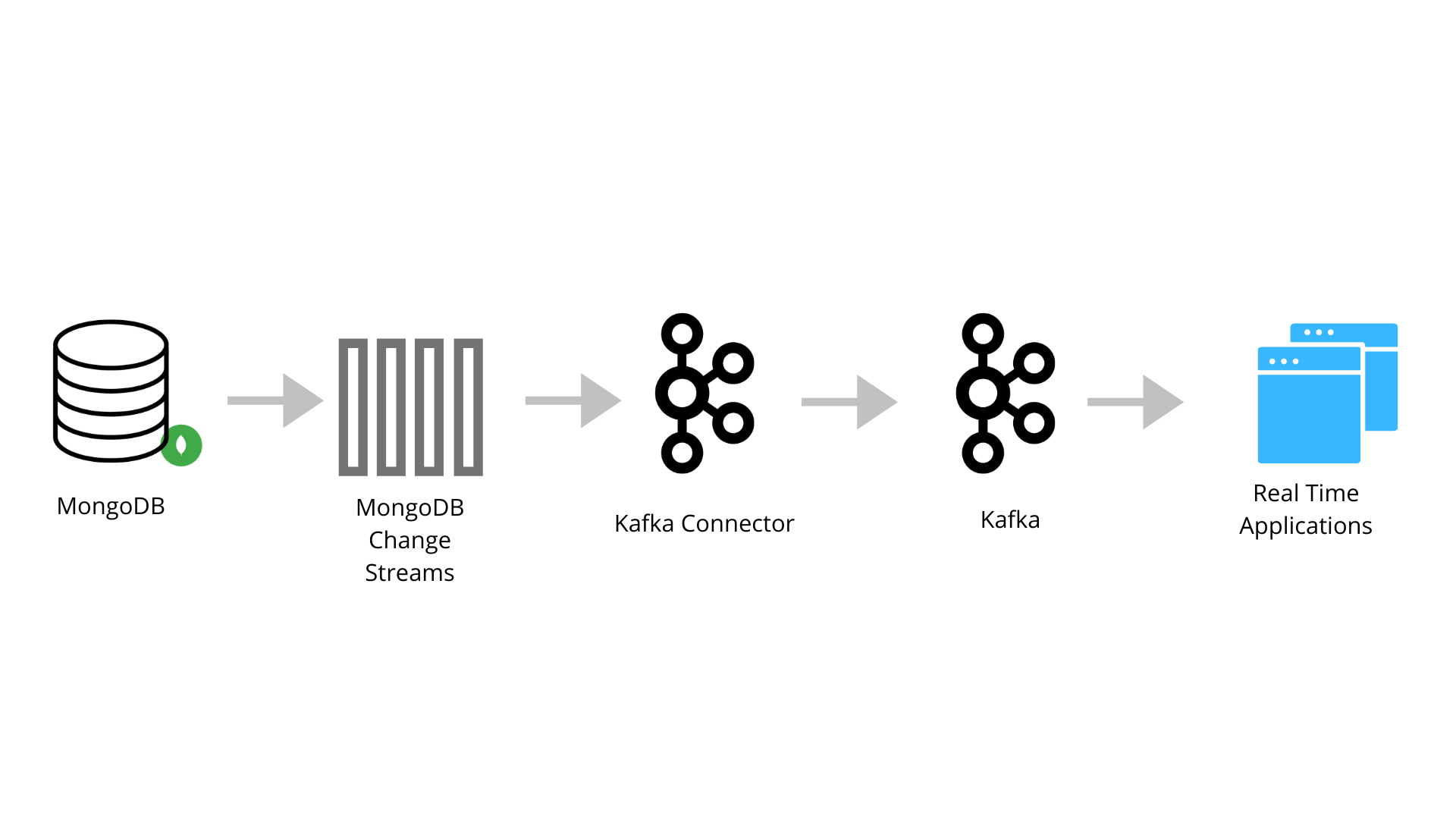

Using Kafka Connector

As a third option, you can use Kafka to connect to your parent MongoDB instance and track changes as they come. Kafka is an open-source data streaming solution that allows developers to create real-time data feeds. MongoDB has a Kafka connector that can sync data in both directions. It can both provide MongoDB with updates from other systems and publish changes to external systems.

For this option, you’ll need to update the configuration of both your Kafka instance and your MongoDB instance to set up the CDC. You typically register the connector through Kafka Connect’s REST API. Technically, the data is captured with MongoDB change streams in the MongoDB cluster itself and then published to Kafka topics. This process is different from using Debezium’s MongoDB connector, which uses MongoDB’s replication mechanism. Not having to work with the replication mechanism directly can make the Kafka connector an easier option to integrate.

You can set the Kafka connector to track at the collection level, the database level, or even the deployment level. From there, your team can use the live data feed as needed.
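The three tracking levels can be illustrated with a small helper that builds a source connector definition. This is a sketch under assumptions: the helper name, connector name, URI, and topic prefix are hypothetical, and the property names reflect the MongoDB Kafka source connector settings as I understand them, so check them against the connector version you run.

```python
import json


def source_connector_config(name, connection_uri, database=None,
                            collection=None, topic_prefix="mongo"):
    """Build a Kafka Connect definition for a MongoDB source connector.

    Omitting `collection` tracks a whole database; omitting both
    `database` and `collection` tracks the whole deployment.
    """
    if collection and not database:
        raise ValueError("a collection requires a database")
    config = {
        "connector.class": "com.mongodb.kafka.connect.MongoSourceConnector",
        "connection.uri": connection_uri,
        "topic.prefix": topic_prefix,
    }
    if database:
        config["database"] = database
    if collection:
        config["collection"] = collection
    return {"name": name, "config": config}


# Collection-level tracking against a placeholder local MongoDB instance.
payload = source_connector_config(
    "mongo-source",
    "mongodb://localhost:27017",
    database="test",
    collection="mycollection",
)
print(json.dumps(payload, indent=2))
```

The resulting JSON is the kind of body you would send to the connectors endpoint of a Kafka Connect worker to register the connector.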

Using a Kafka connector is a great option if your company is already using Kafka for other use cases. With that in mind, using a Kafka connector is arguably one of the more technically complex methods for capturing data changes. You must manage and maintain a Kafka instance that is running external to everything else, as well as some other system and database that sits on top of Kafka and pulls from it. This requires technical support and introduces a new point of failure. Unlike MongoDB change streams, which were created to directly support MongoDB, this method is more like a patch on the system, making it a riskier and more complex option.

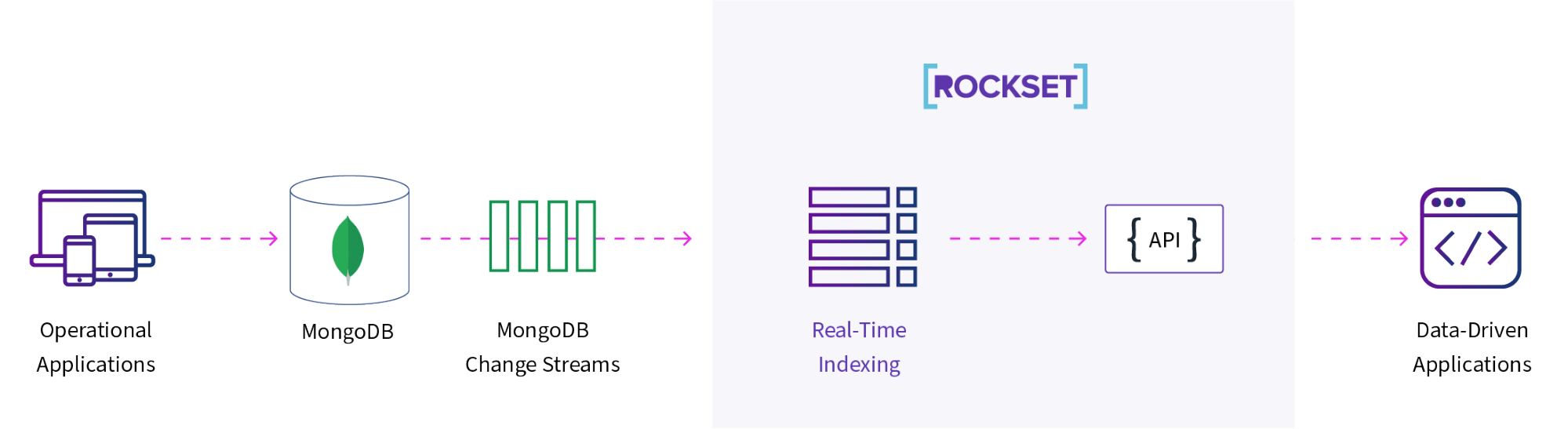

Managing CDC with Rockset and MongoDB Change Streams

MongoDB change streams offer developers another option for capturing data changes. However, this option still requires your applications to read the change streams directly, and change streams don’t index your data. This is where Rockset comes in. Rockset provides real-time indexing that can help speed up applications that rely on MongoDB data.

By pushing data to Rockset, you offload your applications’ reads while benefiting from Rockset’s search, columnar, and row-based indexes, which make those reads faster. Rockset layers these benefits on top of MongoDB’s change streams, increasing the speed and ease of access to MongoDB’s data changes.

Summary

MongoDB is a very popular option for application databases. Its JSON-based structure makes it easy for frontend developers to use. However, it is often useful to offload read-heavy analytics to another system for performance reasons or to combine data sets. This blog presented three of these methods: tailing the oplog, using MongoDB change streams, and using the Kafka connector. Each of these techniques has its benefits and drawbacks.

If you’re trying to build faster real-time applications, Rockset is an external indexing solution you should consider. In addition to having a built-in connector to capture data changes from MongoDB, it provides real-time indexing and is easy to query. Rockset ensures that your applications have up-to-date information, and it allows you to run complex queries across multiple data systems—not just MongoDB.

Other MongoDB resources:

- Using MongoDB Change Streams for Indexing with Elasticsearch vs Rockset

- Case Study: StoryFire - Scaling a Social Video Platform on MongoDB and Rockset

- Create APIs for Aggregations and Joins on MongoDB in Under 15 Minutes

Ben has spent his career focused on all forms of data. He has focused on developing algorithms to detect fraud, reduce patient readmission, and redesign insurance provider policy to help reduce the overall cost of healthcare. He has also helped develop analytics for marketing and IT operations in order to optimize limited resources such as employees and budget. Ben privately consults on data science and engineering problems. He has experience both working hands-on with technical problems and helping leadership teams develop strategies to maximize their data.