Breaking Down Cost Barriers For Real-Time Change Data Capture (CDC)

November 29, 2022

Today, I’m excited to share a few product updates we’ve been working on related to real-time Change Data Capture (CDC), including early access for popular templates and 3rd-party CDC platforms. In this post we’ll highlight the new functionality, some examples to help data teams get started, and why real-time CDC just became far more accessible.

What Is CDC And Why Is It Useful?

First, a quick overview of what CDC is and why we’re such big fans. Because all databases make technical tradeoffs, it’s common to move data from a source to a destination based on how the data will be used. Broadly speaking, there are three basic ways to move data from point A to point B:

- A periodic full dump, i.e. copying all data from source A to destination B, completely replacing the previous dump each time.

- Periodic batch updates, i.e. every 15 minutes run a query on A to see which records have changed since the last run (maybe using modified flag, updated time, etc.), and batch insert those into your destination.

- Incremental updates (aka CDC) – as records change in A, emit a stream of changes that can be applied efficiently downstream in B.

CDC leverages streaming in order to track and transport changes from one system to another. This method offers a few enormous advantages over batch updates. First, CDC theoretically allows companies to analyze and react to data in real time, as it’s generated. It works with existing streaming systems like Apache Kafka, Amazon Kinesis, and Azure Events Hubs, making it easier than ever to build a real-time data pipeline.

A Common Antipattern: Real-Time CDC on a Cloud Data Warehouse

One of the more common patterns for CDC is moving data from a transactional or operational database into a cloud data warehouse (CDW). This method has a handful of drawbacks.

First, most CDWs do not support in-place updates, which means as new data arrives they have to allocate and write an entirely new copy of each micropartition via the MERGE command, which also captures inserts and deletes. The upshot? It’s either more expensive (large, frequent writes) or slow (less frequent writes) to use a CDW as a CDC destination. Data warehouses were built for batch jobs, so we shouldn’t be surprised by this. But then what are users to do when real-time use cases arise? Madison Schott at Airbyte writes, “I had a need for semi real-time data within Snowflake. After increasing data syncs in Airbyte to once every 15 minutes, Snowflake costs skyrocketed. Because data was being ingested every 15 minutes, the data warehouse was almost always running.” If your costs explode with a sync frequency of 15 minutes, you simply cannot respond to recent data, let alone real-time data.

Time and time again, companies in a wide variety of industries have boosted revenue, increased productivity and cut costs by making the leap from batch analytics to real-time analytics. Dimona, a leading Latin American apparel company founded 55 years ago in Brazil, had this to say about their inventory management database, “As we brought more warehouses and stores online, the database started bogging down on the analytics side. Queries that used to take tens of seconds started taking more than a minute or timing out altogether….using Amazon’s Database Migration Service (DMS), we now continuously replicate data from Aurora into Rockset, which does all of the data processing, aggregations and calculations.” Real-time databases aren’t just optimized for real-time CDC - they make it attainable and efficient for organizations of any size. Unlike cloud data warehouses, Rockset is purpose built to ingest large amounts of data in seconds and to execute sub-second queries against that data.

CDC For Real-Time Analytics

At Rockset, we’ve seen CDC adoption skyrocket. Teams often have pipelines that generate CDC deltas and need a system that can handle the real-time ingestion of those deltas to enable workloads with low end-to-end latency and high query scalability. Rockset was designed for this exact use case. We’ve already built CDC-based data connectors for many common sources: DynamoDB, MongoDB, and more. With the new CDC support we’re launching today, Rockset seamlessly enables real-time CDC coming from dozens of popular sources across several industry-standard CDC formats.

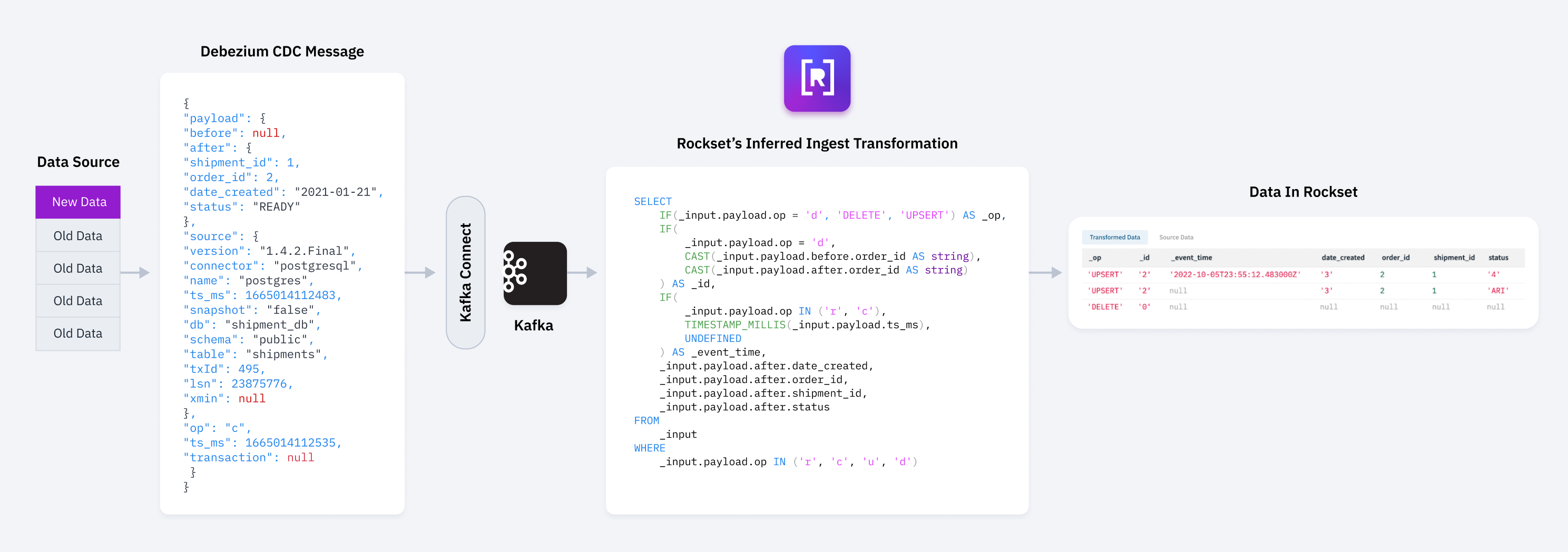

For some background, when you ingest data into Rockset you can specify a SQL query, called an ingest transformation, that is evaluated on your source data. The result of that query is what is persisted to your underlying collection (the equivalent of a SQL table). This gives you the power of SQL to accomplish everything from renaming/dropping/combining fields to filtering out rows based on complex conditions. You can even perform write-time aggregations (rollups) and configure advanced features like data clustering on your collection.

CDC data often comes in deeply nested objects with complex schemas and lots of data that isn’t required by the destination. With an ingest transformation, you can easily restructure the incoming documents, clean up names, and map source fields to Rockset’s special fields. This all happens seamlessly as part of Rockset’s managed, real-time ingestion platform. In contrast, other systems require complex, intermediary ETL jobs/pipelines to achieve similar data manipulation, which adds operational complexity, data latency, and cost.

You can ingest CDC data from virtually any source using the power and flexibility Rockset’s ingest transformations. To do so, there are a few special fields you need to populate.

_id

This is a document’s unique identifier in Rockset. It is important that the primary key from your source is properly mapped to _id so that updates and deletes for each document are applied correctly. For example:

-- simple single field mapping when `field` is already a string

SELECT field AS _id;

-- single field with casting required since `field` isn't a string

SELECT CAST(field AS string) AS _id;

-- compound primary key from source mapping to _id using SQL function ID_HASH

SELECT ID_HASH(field1, field2) AS _id;

_event_time

This is a document’s timestamp in Rockset. Typically, CDC deltas include timestamps from their source, which is helpful to map to Rockset’s special field for timestamps. For example:

-- Map source field `ts_epoch` which is ms since epoch to timestamp type for _event_time

SELECT TIMESTAMP_MILLIS(ts_epoch) AS _event_time

_op

This tells the ingestion platform how to interpret a new record. Most frequently, new documents are exactly that - new documents - and they will be ingested into the underlying collection. However using _op you can also use a document to encode a delete operation. For example:

{"_id": "123", "name": "Ari", "city": "San Mateo"} → insert a new document with id 123

{"_id": "123", "_op": "DELETE"} → delete document with id 123

This flexibility enables users to map complex logic from their sources. For example:

SELECT field as _id, IF(type='delete', 'DELETE', 'UPSERT') AS _op

Check out our docs for more info.

Templates and Platforms

Understanding the concepts above makes it possible to bring CDC data into Rockset as-is. However, constructing the correct transformation on these deeply nested objects and correctly mapping all the special fields can sometimes be error-prone and cumbersome. To address these challenges, we’ve added early-access, native support for a variety of ingest transformation templates. These will help users more easily configure the correct transformations on top of CDC data. By being part of the ingest transformation, you get the power and flexibility of Rockset’s data ingestion platform to bring this CDC data from any of our supported sources including event streams, directly through our write API, or even through data lakes like S3, GCS, and Azure Blob Storage. The full list of templates and platforms we’re announcing support for includes the following:

Template Support

- Debezium: An open source distributed platform for change data capture.

- AWS Data Migration Service: Amazon’s web service for data migration.

- Confluent Cloud (via Debezium): A cloud-native data streaming platform.

- Arcion: An enterprise CDC platform designed for scalability.

- Striim: A unified data integration and streaming platform.

Platform Support

- Airbyte: An open platform that unifies data pipelines.

- Estuary: A real-time data operations platform.

- Decodable: A serverless real-time data platform.

If you’d like to request early access to CDC template support, please email support@rockset.com.

As an example, here is a templatized message that Rockset supports automatic configuration for:

{

"data": {

"ID": "1",

"NAME": "User One"

},

"before": null,

"metadata": {

"TABLENAME": "Employee",

"CommitTimestamp": "12-Dec-2016 19:13:01",

"OperationName": "INSERT"

}

}

And here is the inferred transformation:

SELECT

IF(

_input.metadata.OperationName = 'DELETE',

'DELETE',

'UPSERT'

) AS _op,

CAST(_input.data.ID AS string) AS _id,

IF(

_input.metadata.OperationName = 'INSERT',

PARSE_TIMESTAMP(

'%d-%b-%Y %H:%M:%S',

_input.metadata.CommitTimestamp

),

UNDEFINED

) AS _event_time,

_input.data.ID,

_input.data.NAME

FROM

_input

WHERE

_input.metadata.OperationName IN ('INSERT', 'UPDATE', 'DELETE')

These technologies and products allow you to create highly-secure, scalable, real-time data pipelines in just minutes. Each of these platforms has a built-in connector for Rockset, obviating many manual configuration requirements, such as those for:

- PostgreSQL

- MySQL

- IBM db2

- Vittes

- Cassandra

From Batch To Real-Time

CDC has the potential to make real-time analytics attainable. But if your team or application needs low-latency access to data, relying on systems that batch or microbatch data will explode your costs. Real-time use cases are hungry for compute, but the architectures of batch-based systems are optimized for storage. You’ve now got a new, totally viable option. Change data capture tools like Airbyte, Striim, Debezium, et al, along with real-time analytics databases like Rockset reflect an entirely new architecture, and are finally able to deliver on the promise of real-time CDC. These tools are purpose built for high-performance, low-latency analytics at scale. CDC is flexible, powerful, and standardized in a way that ensures support for data sources and destinations will continue to grow. Rockset and CDC are a perfect match, reducing the cost of real-time CDC so that organizations of any size can finally forward past batch, and towards real-time analytics.

If you’d like to give Rockset + CDC a try, you can start a free, two-week trial with $300 in credits here.