Create a Data API on MySQL Data with Rockset

July 1, 2021

Last week, we walked you through how to scale your Amazon RDS MySQL analytical workload with Rockset. This week will continue with the same Amazon RDS MySQL that we created last week, and upload Airbnb data to a new table.

Uploading data to Amazon RDS MySQL

To get started:

- Let’s first download the Airbnb CSV file. Note: make sure you rename the CSV file to sfairbnb.csv

-

Access the MySQL server via your terminal:

$ mysql -u admin -p -h Yourendpoint -

We’ll need to switch to the right database:

$ use rocksetdemo1 - We’ll need to create a table

Embedded content: https://gist.github.com/nfarah86/df2926f5c193cfdcb4d09ce86d63bde7

-

Upload the data to the table:

LOAD DATA local infile '/yourpath/sfairbnb.csv' -> into table sfairbnb -> fields terminated by ',' -> enclosed by '"' -> lines terminated by '\n' -> ignore 1 rows;

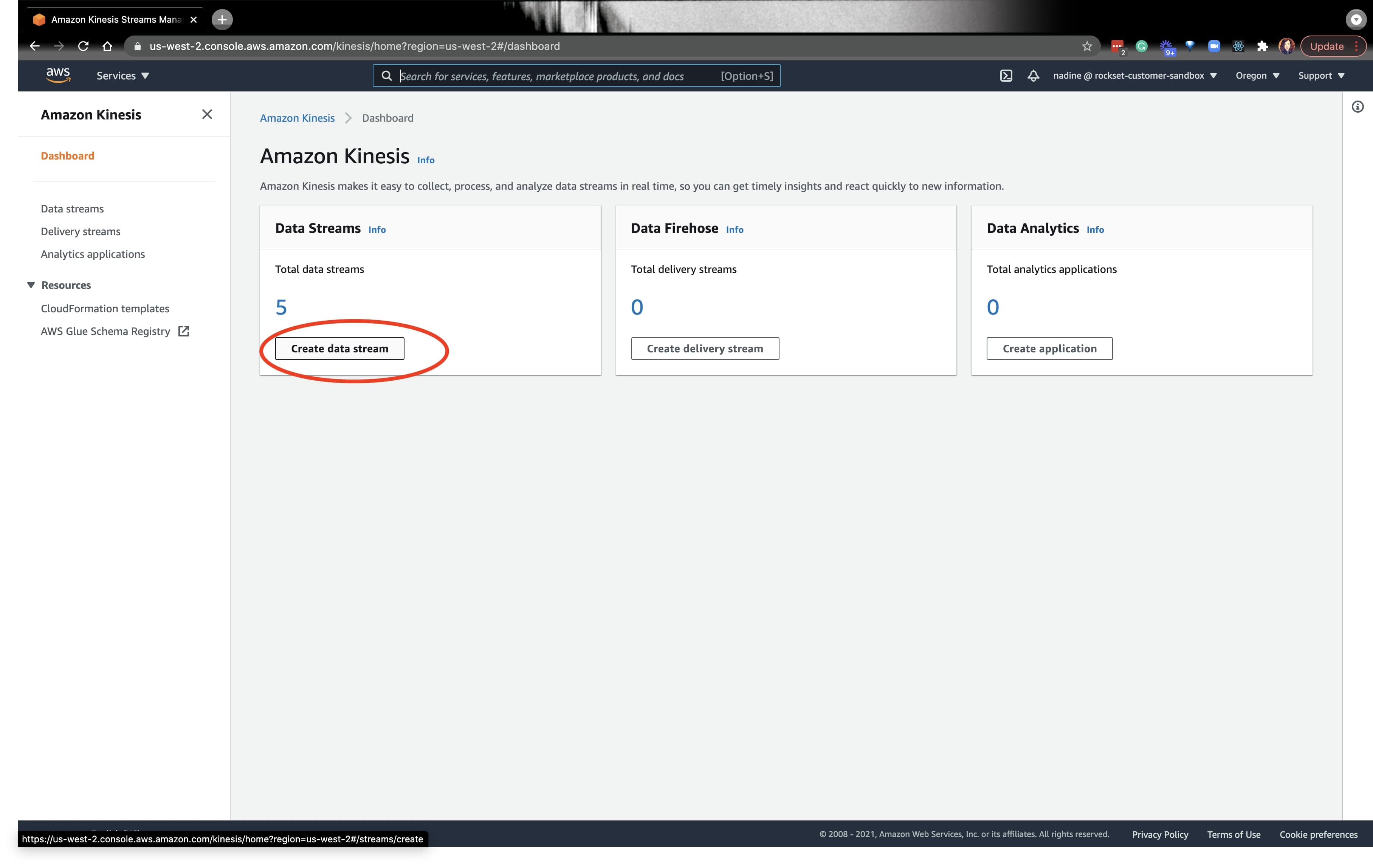

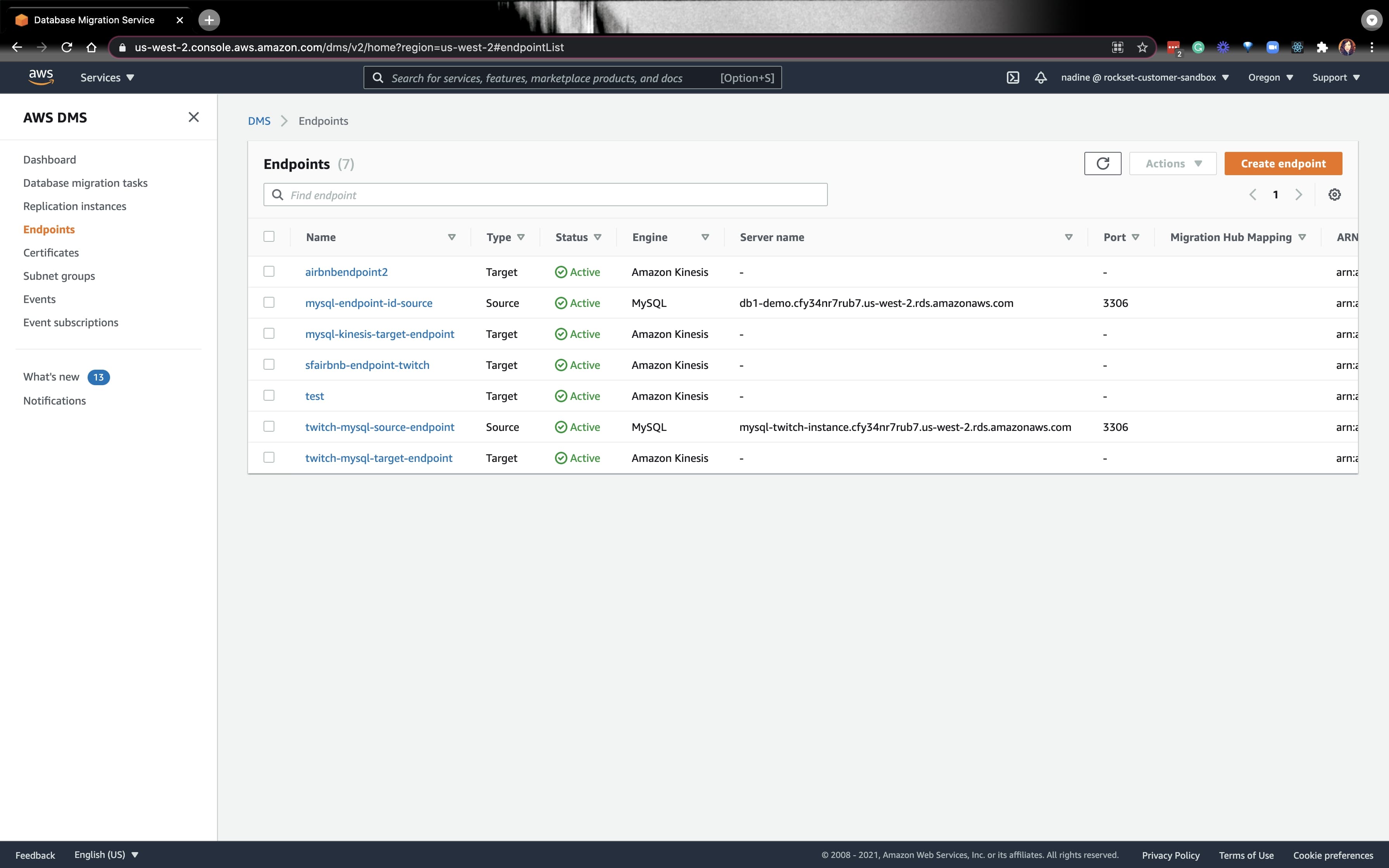

Setting up a New Kinesis Stream and DMS Target Endpoint

Once the data is loaded into MySQL, we can navigate to the AWS console and create another Kinesis data stream. We’ll need to create a Kinesis stream and a DMS Target Endpoint for every MySQL database table on a MySQL server. Since we will not be creating a new MySQL server, we don’t need to create a DMS Source Endpoint. Thus, we can use the same DMS Source Endpoint from last week.

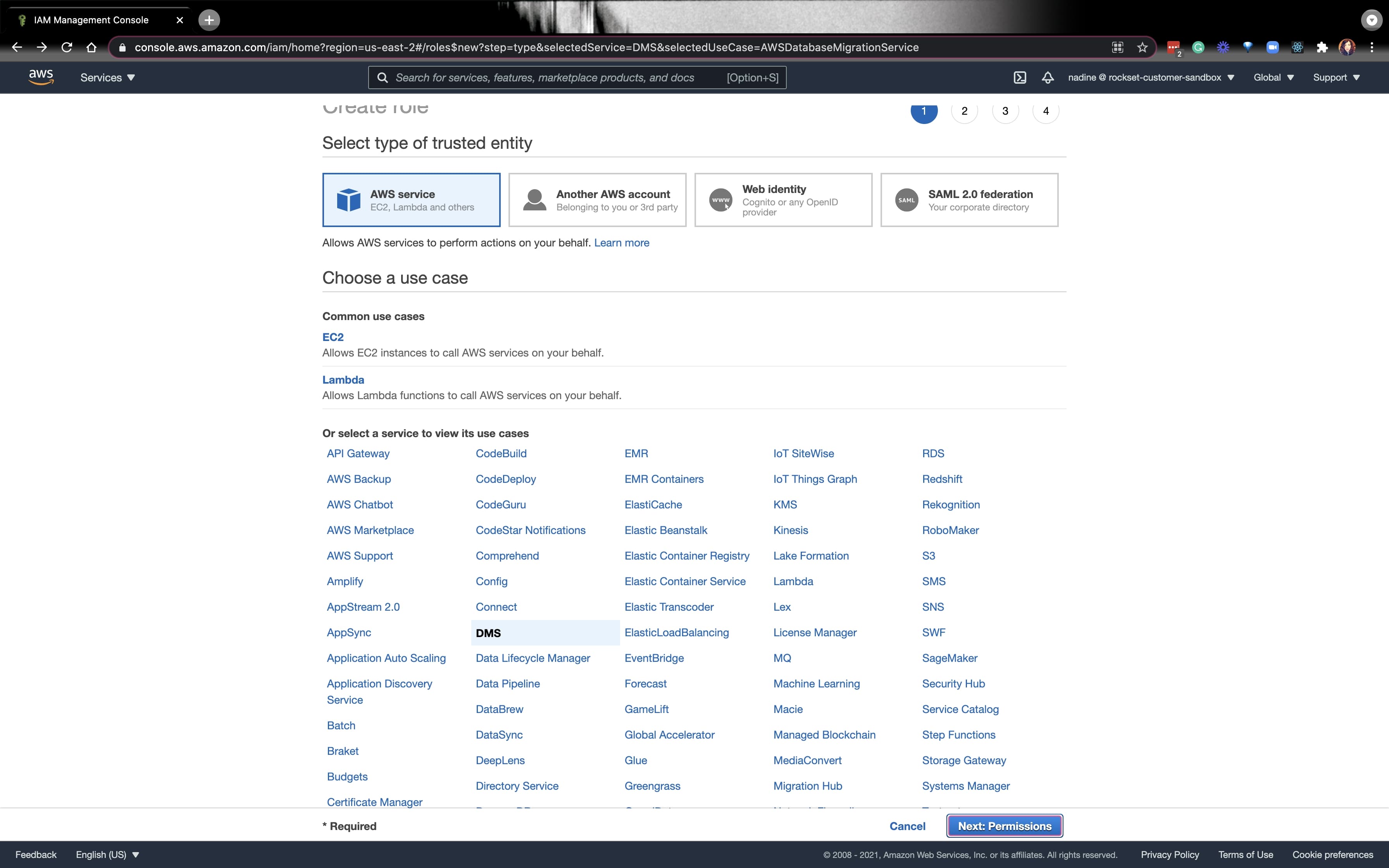

From here, we’ll need to create a role that’ll give the Kinesis Stream full access. Navigate to the AWS IAM console and create a new role for an AWS service, and click on DMS. Click on Next: Permissions on the bottom right.

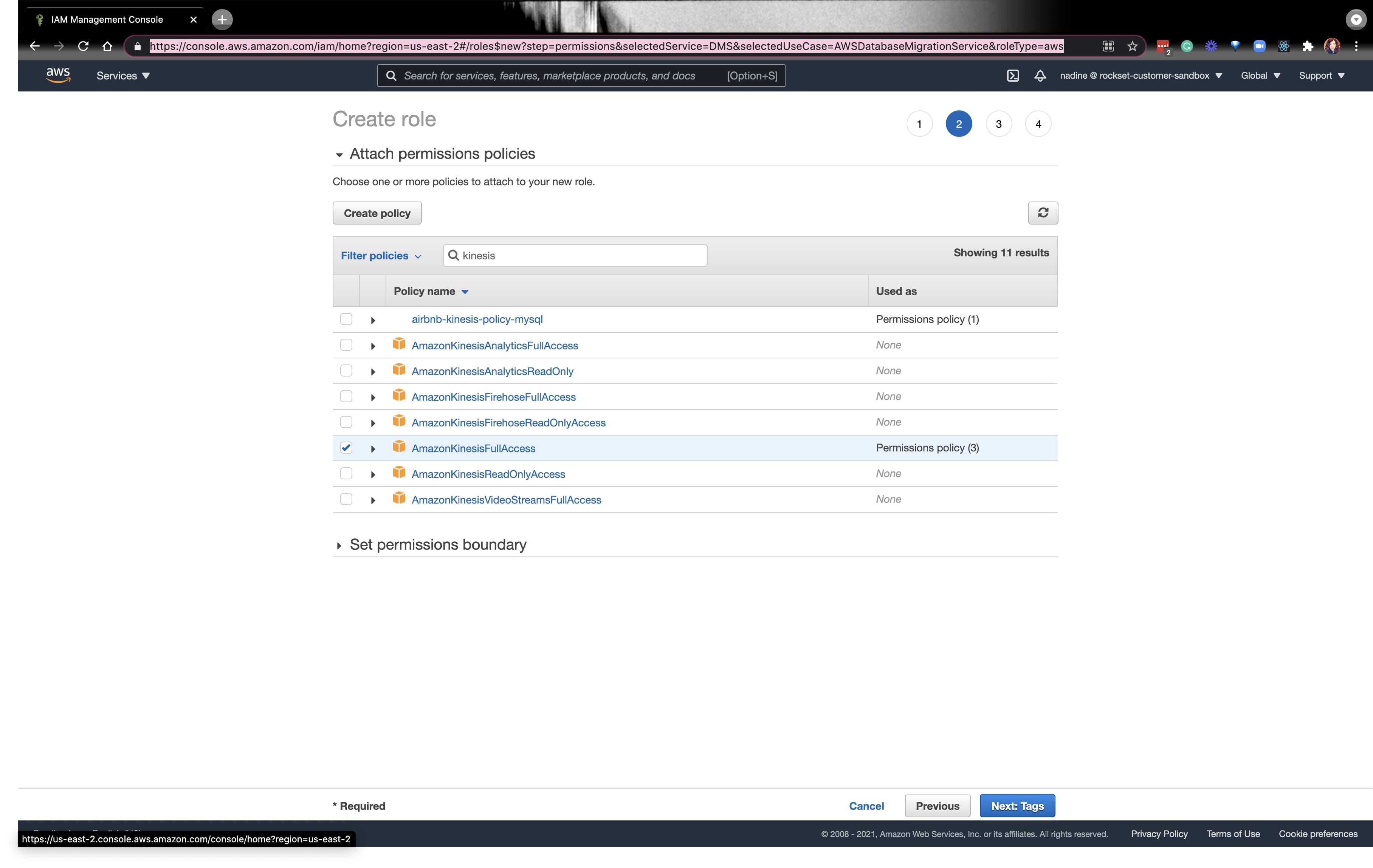

Check the box for AmazonKinesisFullAccess and click on Next: Tags:

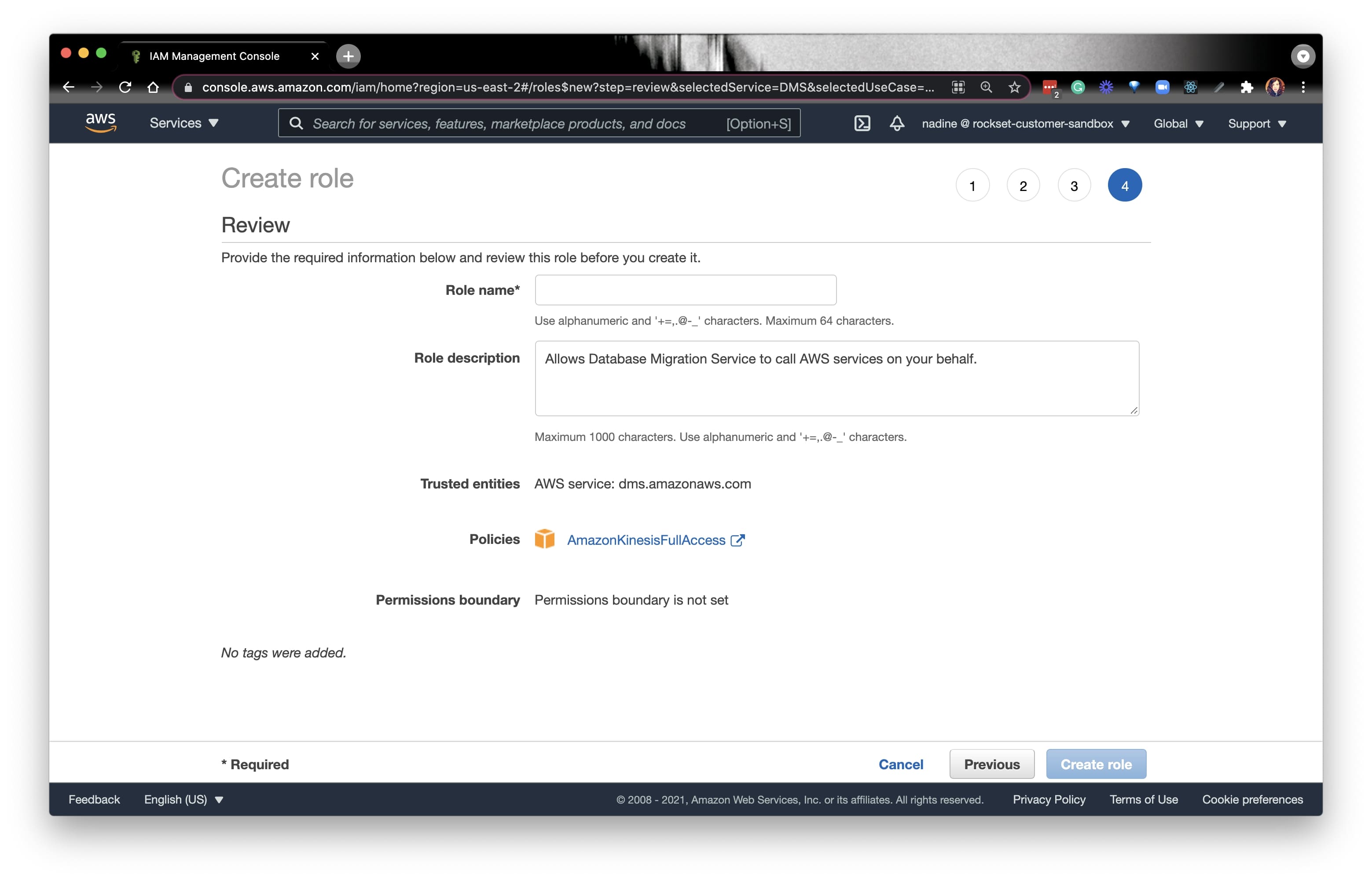

Fill out the details as you see fit and click on Create role on the bottom right. Be sure to save the role ARN for the next step.

Now, let’s go to the DMS console:

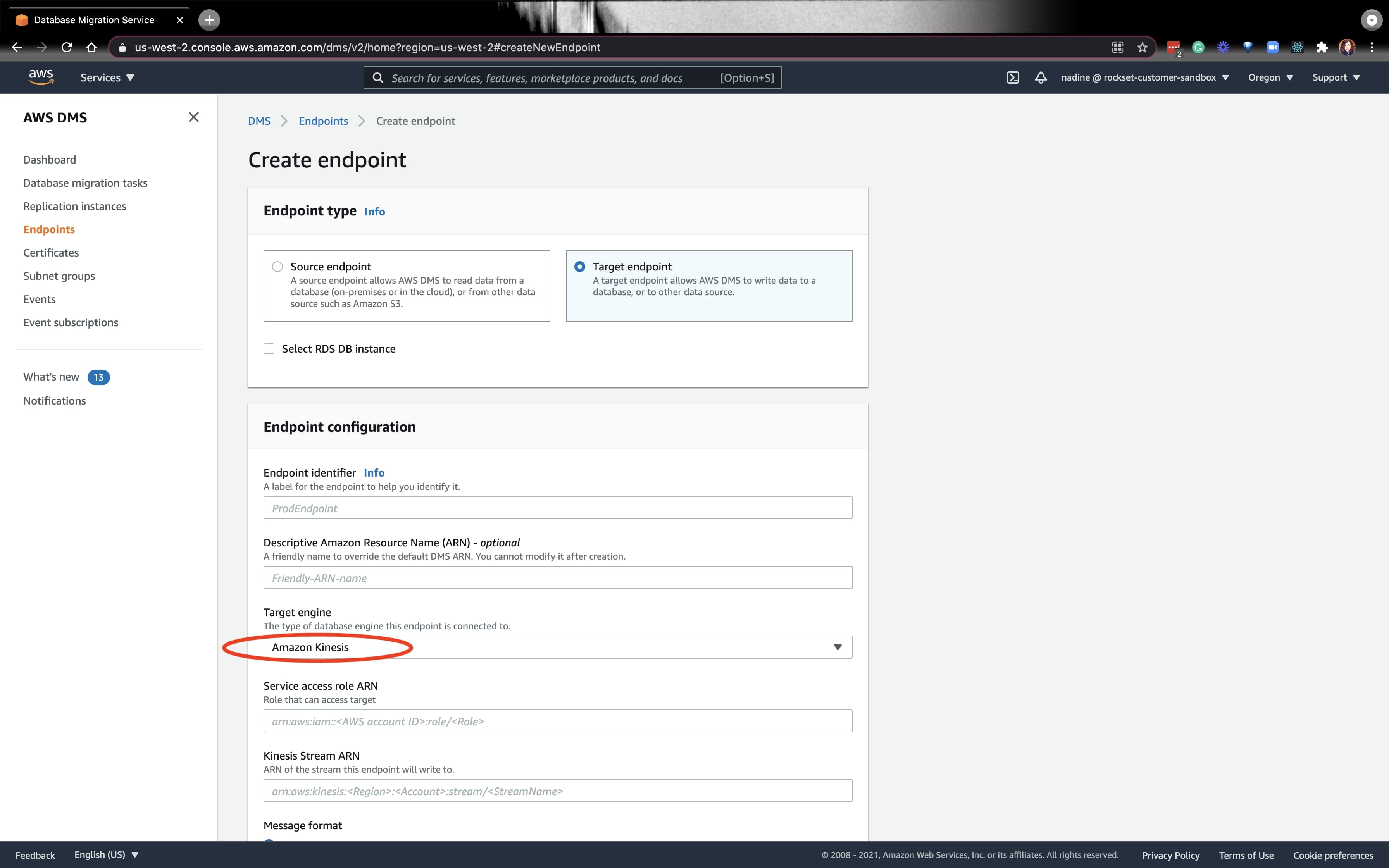

Let's create a new Target endpoint. On the drop-down, pick Kinesis:

For the Service access role ARN, you can put the ARN of the role we just created. Similarly, for the Kinesis Stream ARN, put the ARN for the Kinesis Stream we created. For the rest of the fields below, you can follow the instructions from our docs.

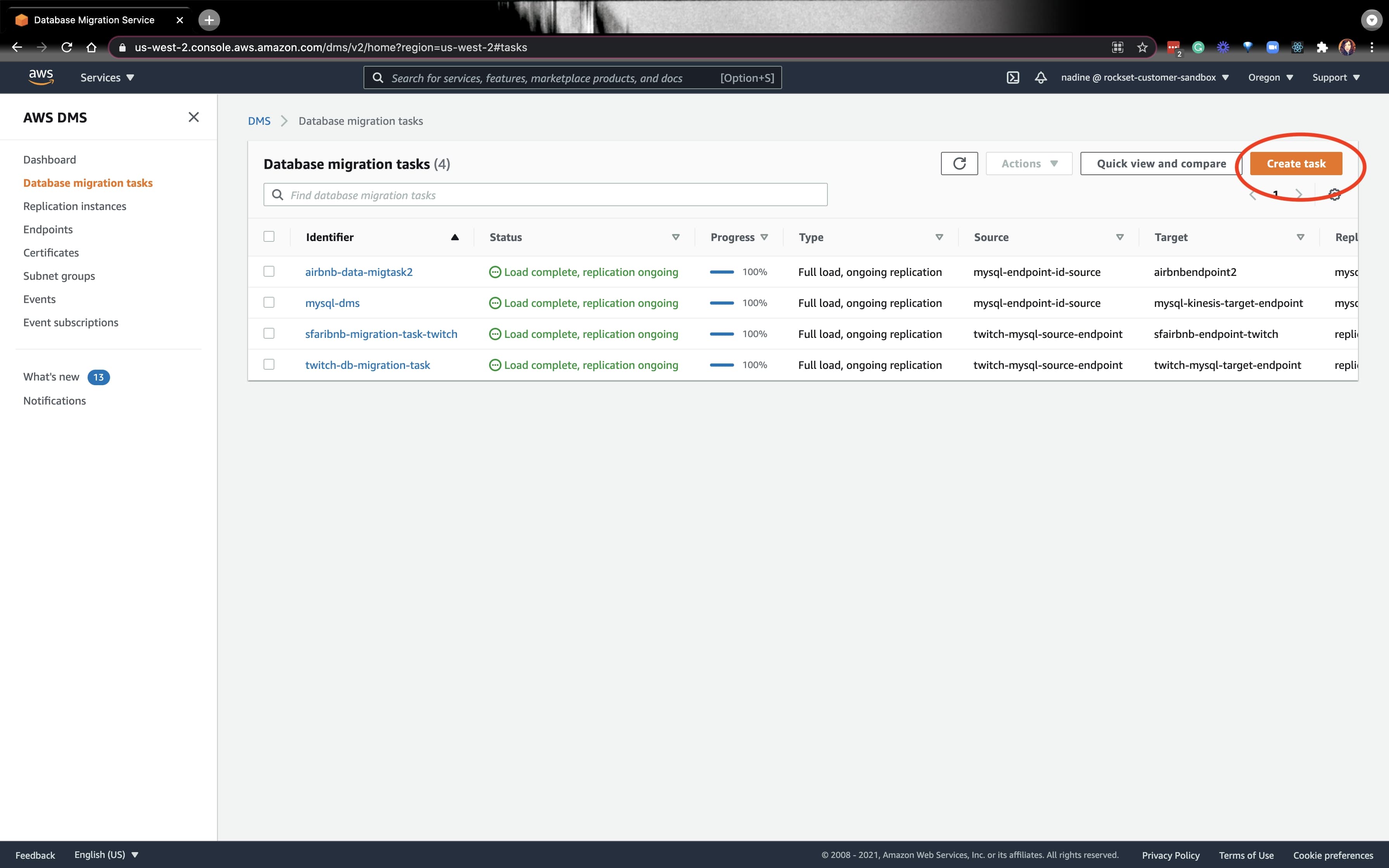

Next, we’ll need to create a Data migration task:

We’ll choose the source endpoint we created last week, and choose the endpoint we created today. You can read the docs to see how to modify the Task Settings.

If everything is working great, we’re ready for the Rockset portion.

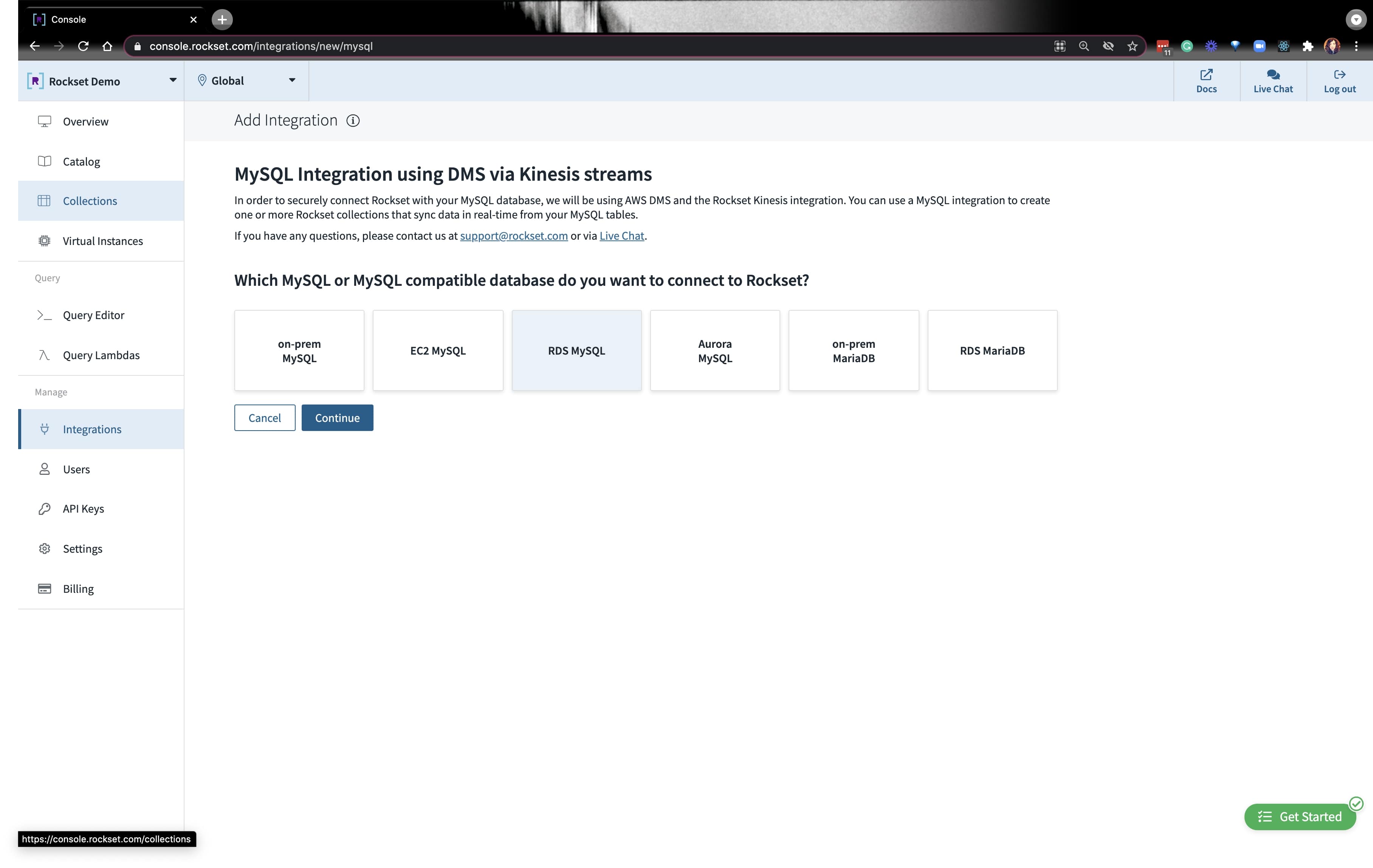

Integrating MySQL with Rockset via a data connector

Go ahead and create a new MySQL integration and click on RDS MySQL. You’ll see prompts to ensure that you did the various setup instructions we just covered above. Just click Done and move to the next prompt.

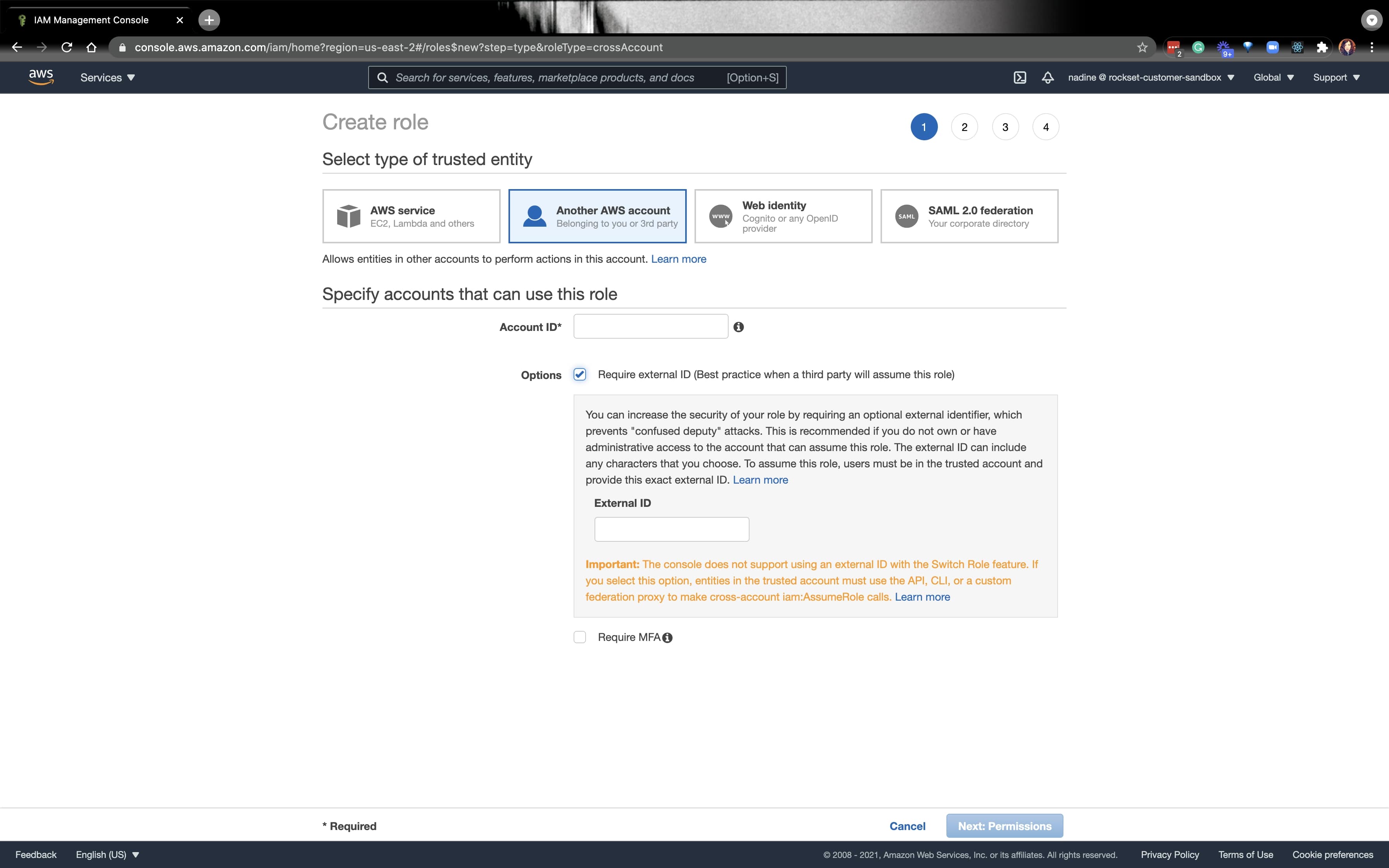

The last prompt will ask you for a role ARN specifically for Rockset. Navigate to the AWS IAM console and create a rockset-role and put Rockset’s account and external ID:

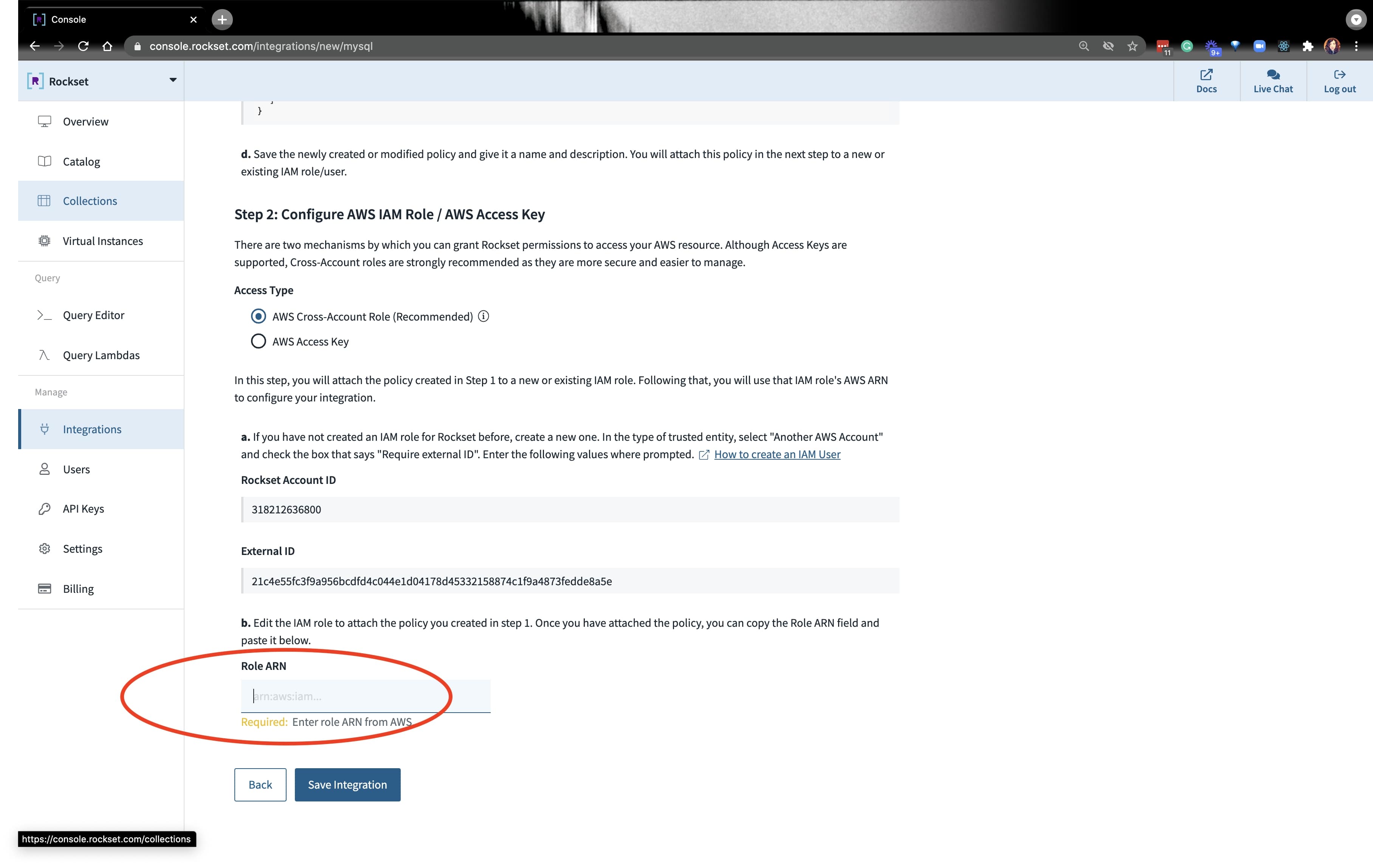

You’ll grab the ARN from the role we created and paste it at the bottom where it requires that information:

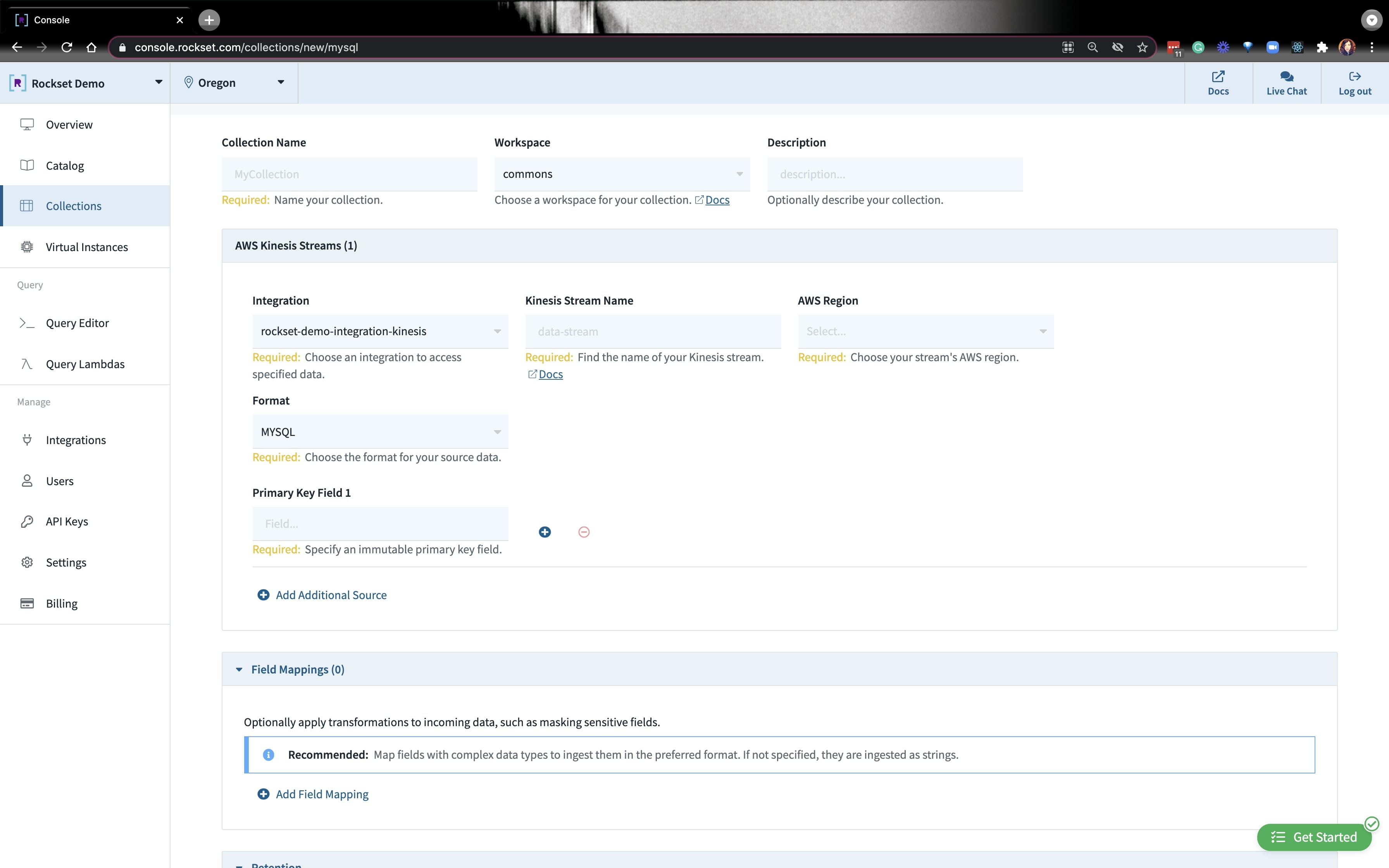

Once the integration is set up, you’ll need to create a collection. Go ahead and put your collection name, AWS region, and kinesis stream information:

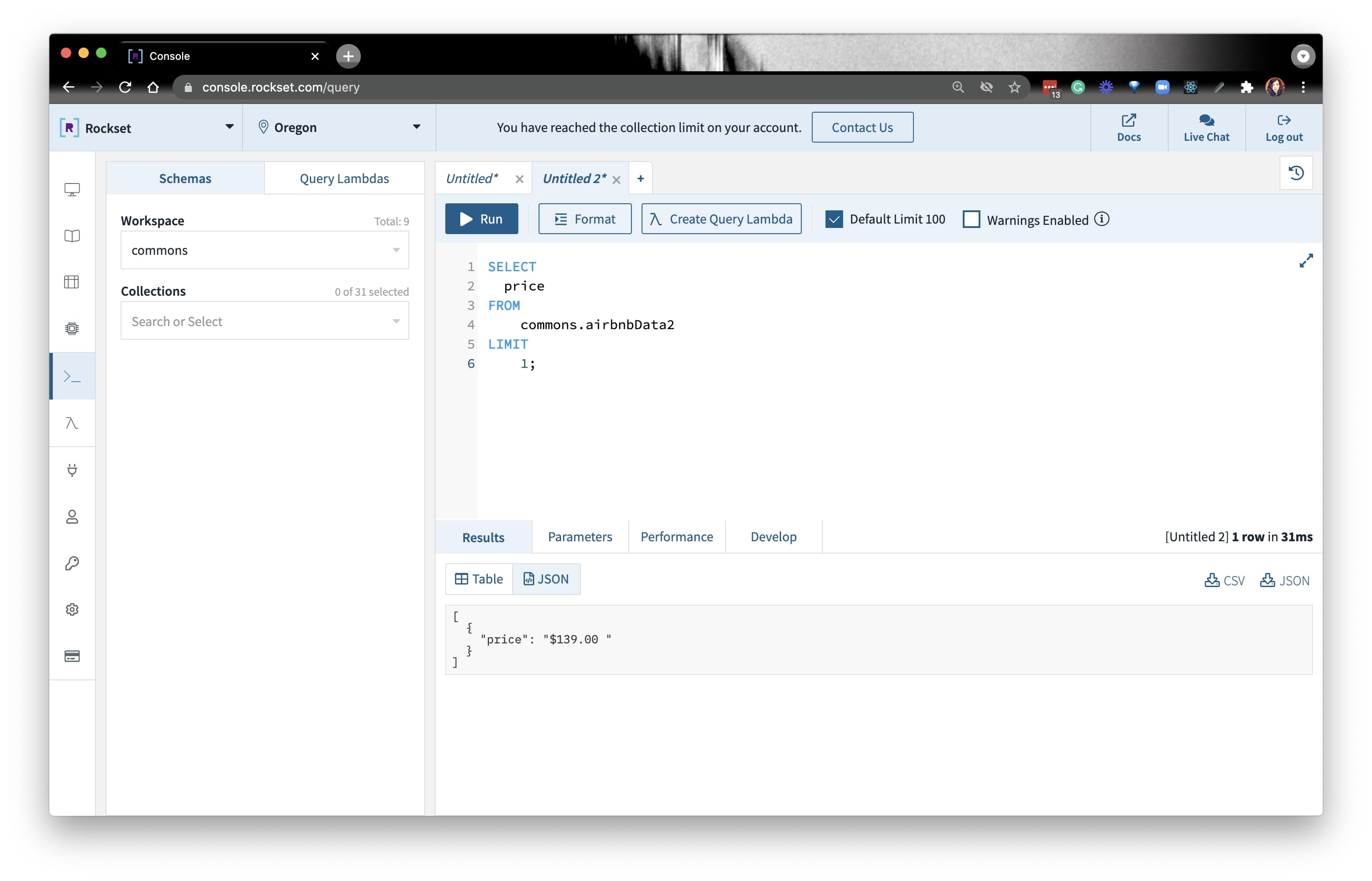

After a minute or so, you should be able to query your data that’s coming in from MySQL!

Querying the Airbnb Ddata on Rockset

After everything is loaded, we’re ready to write some queries. Since the data is based on SF— and we know SF prices are nothing to brag about— we can see what the average Airbnb price is in SF. Since price comes in as a string type, we’ll have to convert it to a float type:

SELECT price

FROM yourCollection

LIMIT 1;

We first used regex to get rid of the $. There are two approaches:

In this stream, we used REGEXP_LIKE(). From there, we TRY_CAST() price to a float type. Then, we got the average price. The query looked like this:

SELECT AVG(try_cast(REGEXP_REPLACE(price, '[^\d.]') as float)) avgprice

FROM commons.sfairbnbCollectioName

WHERE TRY_CAST(REGEXP_REPLACE(price, '[^\d.]') as float) is not null and city = 'San Francisco';

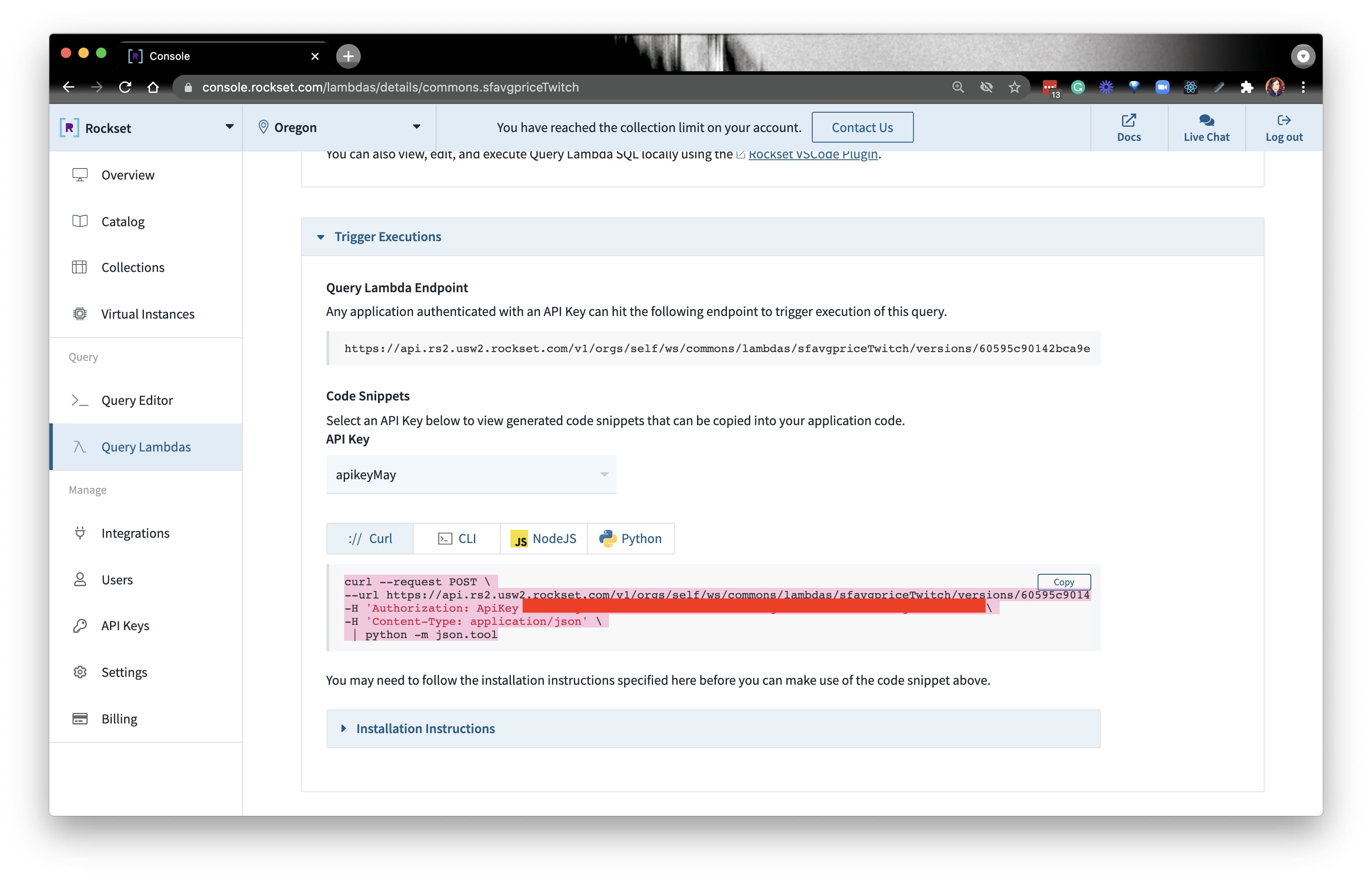

Once we write the query, we can use the Query Lambda feature to create a data API on the data from MySQL. We can execute the query on our terminal by copying the CURL command and pasting it in our terminal:

Voila! This is an end-to-end example of how you can scale your MySQL analytical loads on Rockset. If you haven’t already, you can read Justin’s blog more about scaling MySQL for real-time analytics.

You can catch the stream of this guide here:

Embedded content: https://www.youtube.com/embed/0UCiWfs-_nI

TLDR: you can find all the resources you need in the developer corner.