Introduction to Semantic Search: Embeddings, Similarity Metrics and Vector Databases

October 13, 2023

Note: for important background on vector search, see part 1 of our Introduction to Semantic Search: From Keywords to Vectors.

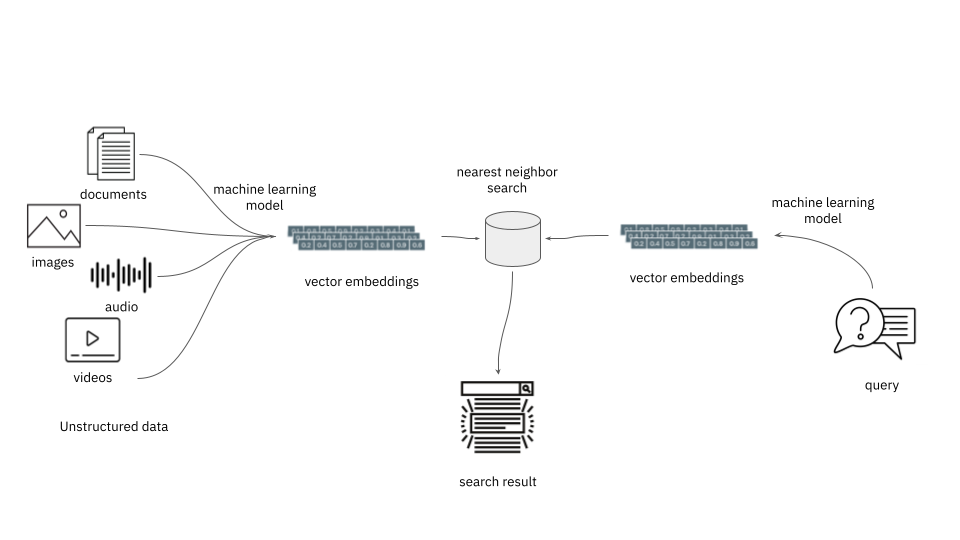

When building a vector search app, you’re going to end up managing a lot of vectors, also known as embeddings. And one of the most common operations in these apps is finding other nearby vectors. A vector database not only stores embeddings but also facilitates such common search operations over them.

The reason why finding nearby vectors is useful is that semantically similar items end up close to each other in the embedding space. In other words, finding the nearest neighbors is the operation used to find similar items. With embedding schemes available for multilingual text, images, sounds, data, and many other use cases, this is a compelling feature.

Generating Embeddings

A key decision point in developing a semantic search app that uses vectors is choosing which embedding service to use. Every item you want to search on will need to be processed to produce an embedding, as will every query. Depending on your workload, there may be significant overhead involved in preparing these embeddings. If the embedding provider is in the cloud, then the availability of your system—even for queries—will depend on the availability of the provider.

This decision deserves careful consideration, since changing embedding models will normally entail repopulating the whole database, an expensive proposition. Different models produce embeddings in different embedding spaces, so embeddings generated by different models are typically not comparable. Some vector databases, however, will allow multiple embeddings to be stored for a given item.

One popular cloud-hosted embedding service for text is OpenAI’s Ada v2 (the text-embedding-ada-002 model). It costs a few pennies to process a million tokens and is widely used across different industries. Google, Microsoft, HuggingFace, and others also provide online options.

If your data is too sensitive to send outside your walls, or if system availability is of paramount concern, it is possible to produce embeddings locally. Some popular libraries for this include SentenceTransformers, Gensim, and several Natural Language Processing (NLP) frameworks.

For content other than text, a wide variety of embedding models exists. For example, SentenceTransformers allows images and text to be in the same embedding space, so an app could find images similar to words, and vice versa. A host of different models are available, and this is a rapidly growing area of development.

Nearest Neighbor Search

What precisely is meant by “nearby” vectors? To determine if vectors are semantically similar (or different), you will need to compute distances, with a function known as a distance measure. (You may see this also called a metric, which has a stricter definition; in practice, the terms are often used interchangeably.) Typically, a vector database will have optimized indexes based on a set of available measures. Here are a few of the common ones:

A direct, straight-line distance between two points is called the Euclidean distance, sometimes L2, and is widely supported. In two dimensions, using x and y to represent the difference between the two points along each axis, the calculation is sqrt(x^2 + y^2). Keep in mind that actual vectors may have thousands of dimensions or more, and a squared term must be computed for every one of them.
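The two-dimensional formula generalizes directly to any number of dimensions. As a minimal sketch in Python (the function name `euclidean_distance` is ours for illustration, not part of any particular database's API), the calculation could look like this:

```python
import math

def euclidean_distance(a, b):
    """Straight-line (L2) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    # Sum the squared per-dimension differences, then take the square root.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
```

Real systems would typically use vectorized or hardware-accelerated routines rather than a Python loop, but the arithmetic is the same.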

Another is the Manhattan distance, sometimes called L1. This is like Euclidean distance without the squaring and the square root: in the same notation as before, it is simply abs(x) + abs(y). Think of it as the distance you’d need to walk, following only right-angle paths on a grid.
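For any number of dimensions, the Manhattan distance is the sum of the absolute per-dimension differences. A minimal Python sketch (the function name `manhattan_distance` is hypothetical, chosen for illustration):

```python
def manhattan_distance(a, b):
    """Grid-walk (L1) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    # Add up the absolute difference along every dimension.
    return sum(abs(x - y) for x, y in zip(a, b))

print(manhattan_distance([0.0, 0.0], [3.0, 4.0]))  # 7.0
```

For these two points the straight-line distance would be 5.0, so the two measures can give noticeably different values for the same pair of vectors.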

In some cases, the angle between two vectors can be used as a measure. A dot product, or inner product, is the mathematical tool used in this case, and some hardware is specially optimized for these calculations. It incorporates the angle between vectors as well as their lengths. In contrast, a cosine measure, or cosine similarity, accounts for the angle alone, producing a value that ranges from 1.0 (vectors pointing in the same direction) through 0 (vectors orthogonal) to -1.0 (vectors 180 degrees apart).
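The relationship between the two can be sketched in a few lines of Python: cosine similarity is the dot product divided by the product of the two vector lengths, which removes the effect of length. The function names here are ours for illustration only.

```python
import math

def dot_product(a, b):
    """Inner product: sensitive to both the angle and the vector lengths."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Dot product normalized by both lengths, leaving only the angle."""
    norm_a = math.sqrt(dot_product(a, a))
    norm_b = math.sqrt(dot_product(b, b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero-length vector")
    return dot_product(a, b) / (norm_a * norm_b)

a = [1.0, 0.0]
print(dot_product(a, [2.0, 0.0]))         # 2.0  (length affects the result)
print(cosine_similarity(a, [2.0, 0.0]))   # 1.0  (same direction)
print(cosine_similarity(a, [0.0, 3.0]))   # 0.0  (orthogonal)
print(cosine_similarity(a, [-1.0, 0.0]))  # -1.0 (opposite direction)
```

One consequence worth noting: when all vectors are normalized to unit length, the dot product and cosine similarity give the same value.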

There are quite a few specialized distance metrics, but these are less commonly implemented “out of the box.” Many vector databases allow for custom distance metrics to be plugged into the system.

Which distance measure should you choose? Often, the documentation for an embedding model will say what to use—you should follow such advice. Otherwise, Euclidean is a good starting point, unless you have specific reasons to think otherwise. It may be worth experimenting with different distance measures to see which one works best in your application.

Without some clever tricks, finding the nearest point in embedding space would, in the worst case, require the database to calculate the distance measure between a target vector and every other vector in the system, then sort the resulting list. This quickly gets out of hand as the size of the database grows. As a result, production-level vector databases generally include approximate nearest neighbor (ANN) algorithms. These trade off a tiny bit of accuracy for much better performance. Research into ANN algorithms remains a hot topic, and a strong implementation of one can be a key factor in the choice of a vector database.
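To make the worst case concrete, here is a minimal Python sketch of an exact, brute-force search that scans every stored vector. It also includes recall@k, a common way to quantify how much accuracy an approximate result gives up relative to the exact answer. The function names, the dictionary layout, and the sample data are assumptions for illustration; no ANN algorithm is implemented here.

```python
import heapq
import math

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def exact_nearest_neighbors(query, vectors, k):
    """Return the ids of the k closest vectors by scanning all of them."""
    # One distance computation per stored vector: cost grows with database size.
    scored = ((euclidean_distance(query, vec), item_id)
              for item_id, vec in vectors.items())
    return [item_id for _, item_id in heapq.nsmallest(k, scored)]

def recall_at_k(approximate_ids, exact_ids):
    """Fraction of the true nearest neighbors present in an approximate result."""
    if not exact_ids:
        raise ValueError("exact_ids must not be empty")
    return len(set(approximate_ids) & set(exact_ids)) / len(exact_ids)

# Hypothetical toy data: item id -> embedding.
vectors = {"a": [0.0, 0.0], "b": [1.0, 1.0], "c": [5.0, 5.0], "d": [0.9, 1.2]}
exact = exact_nearest_neighbors([1.0, 1.0], vectors, k=2)
print(exact)                           # ['b', 'd']
print(recall_at_k(["b", "a"], exact))  # 0.5
```

Comparing an index's results against a brute-force baseline like this on a sample of queries is one way to check how much accuracy a given ANN configuration is trading away.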

Selecting a Vector Database

Now that we’ve discussed some of the key elements that vector databases support (storing embeddings and computing vector similarity), how should you go about selecting a database for your app?

Search performance, measured by the time needed to resolve queries against vector indexes, is a primary consideration here. It is worth understanding how a database implements approximate nearest neighbor indexing and matching, since this will affect the performance and scale of your application. But also investigate update performance, the latency between adding new vectors and having them appear in the results. Querying and ingesting vector data at the same time may have performance implications as well, so be sure to test this if you expect to do both concurrently.

Have a good idea of the scale of your project and how fast you expect your users and vector data to grow. How many embeddings are you going to need to store? Billion-scale vector search is certainly feasible today. Can your vector database scale to handle the QPS requirements of your application? Does performance degrade as the scale of the vector data increases? While it matters less what database is used for prototyping, you will want to give deeper consideration to what it would take to get your vector search app into production.
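A quick back-of-the-envelope calculation can help with the storage question. The sketch below assumes each dimension is stored as a 4-byte float and counts only the raw vectors; index structures, metadata, and replication would add to this. The function name is hypothetical, and the 1,536-dimension figure is the output size of OpenAI's text-embedding-ada-002, used here only as an example.

```python
def raw_vector_storage_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Bytes needed for the raw vectors alone (assumes float32 by default)."""
    return num_vectors * dimensions * bytes_per_value

# One billion 1,536-dimensional vectors stored as float32.
size = raw_vector_storage_bytes(1_000_000_000, 1536)
print(f"{size / 1e12:.3f} TB")  # 6.144 TB
```

Numbers like this make it easier to judge early on whether a candidate database's storage and memory model fits the expected scale.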

Vector search applications often need metadata filtering as well, so when researching vector databases it’s a good idea to understand how that filtering is performed and how efficient it is. Does the database filter before the vector search (pre-filter), after it (post-filter), or search and filter in a single step? Different approaches will have different implications for the efficiency of your vector search.
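The difference between pre-filtering and post-filtering can be illustrated with a small Python sketch. It uses an exact scan in place of a real index, and the item layout, field names, and function names are assumptions made up for the example. Single-step filtering depends on the index implementation and is not shown.

```python
import heapq
import math

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def top_k(query, items, k):
    """k nearest items, closest first (exact scan standing in for an index)."""
    return heapq.nsmallest(
        k, items, key=lambda item: euclidean_distance(query, item["vector"]))

def pre_filter_search(query, items, predicate, k):
    # Apply the metadata filter first, then search only the survivors.
    return top_k(query, [item for item in items if predicate(item)], k)

def post_filter_search(query, items, predicate, k):
    # Search everything first, then drop results that fail the filter.
    return [item for item in top_k(query, items, k) if predicate(item)]

def is_english(item):
    return item["lang"] == "en"

items = [
    {"id": 1, "lang": "en", "vector": [0.0, 0.0]},
    {"id": 2, "lang": "fr", "vector": [0.1, 0.0]},
    {"id": 3, "lang": "fr", "vector": [0.2, 0.0]},
    {"id": 4, "lang": "en", "vector": [5.0, 5.0]},
]
query = [0.0, 0.0]
print([i["id"] for i in pre_filter_search(query, items, is_english, k=2)])   # [1, 4]
print([i["id"] for i in post_filter_search(query, items, is_english, k=2)])  # [1]
```

In this toy example, post-filtering returns fewer than the requested two results because a nearby item was discarded after the search, while pre-filtering returns two results but has to evaluate the filter over every item first.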

One thing often overlooked about vector databases is that they also need to be good databases! Those that do a good job handling content and metadata at the required scale should be at the top of your list. Your analysis needs to include concerns common to all databases, such as access controls, ease of administration, reliability and availability, and operating costs.

Conclusion

Probably the most common use case today for vector databases is complementing Large Language Models (LLMs) as part of an AI-driven workflow. These are powerful tools, for which the industry is only scratching the surface of what’s possible. Be warned: This amazing technology is likely to inspire you with fresh ideas about new applications and possibilities for your search stack and your business.

Learn how Rockset supports vector search here.