Real-Time Analytics on DynamoDB - Using DynamoDB Streams with Lambda and ElastiCache

August 12, 2019

Real-time analytics is used by many organizations to support mission-critical decisions on real-time data. The real-time journey typically starts with live dashboards on real-time data and soon moves to automating actions on that data with applications like instant personalization, gaming leaderboards and smart IoT systems. In this post, we’ll be focusing on building live dashboards and real-time applications on data stored in DynamoDB, as we have found DynamoDB to be a commonly used data store for real-time use cases.

We’ll evaluate a few popular approaches to implementing real-time analytics on DynamoDB, all of which use DynamoDB Streams but differ in how the dashboards and applications are served:

1. DynamoDB Streams + Lambda + S3

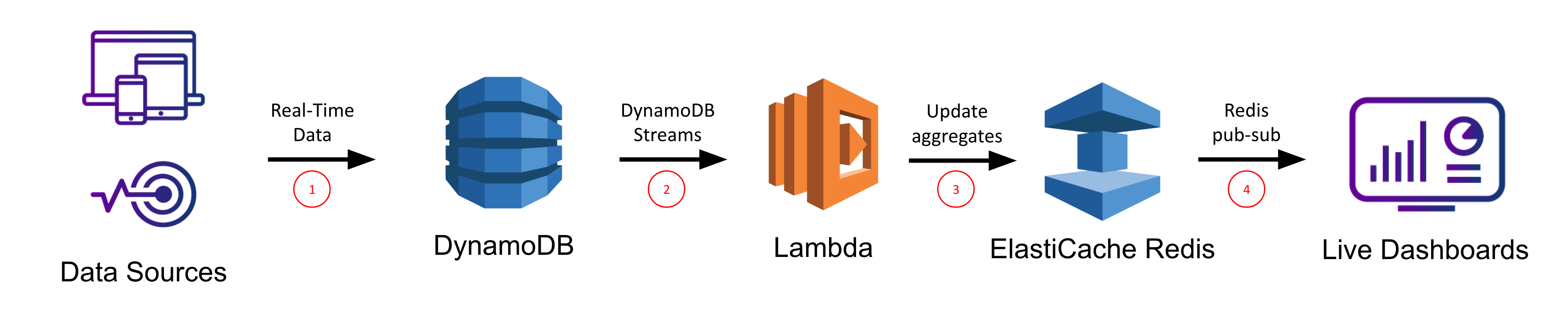

2. DynamoDB Streams + Lambda + ElastiCache for Redis

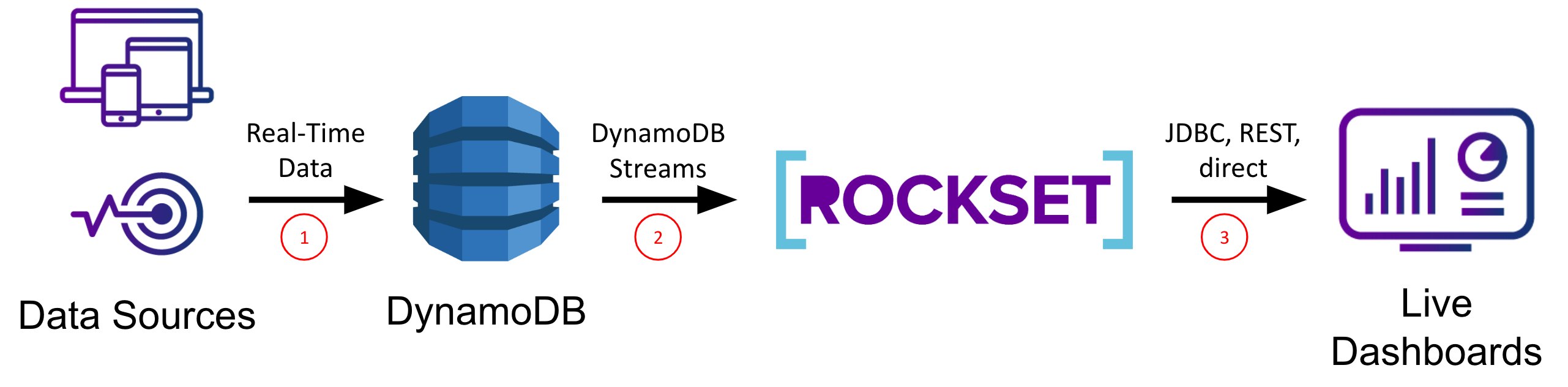

3. DynamoDB Streams + Rockset

We’ll evaluate each approach on its ease of setup/maintenance, data latency, query latency/concurrency, and system scalability so you can judge which approach is best for you based on which of these criteria are most important for your use case.

Technical Considerations for Real-Time Dashboards and Applications

Building dashboards and applications on real-time data is non-trivial as any solution needs to support highly concurrent, low latency queries for fast load times (or else drive down usage/efficiency) and live sync from the data sources for low data latency (or else drive up incorrect actions/missed opportunities). Low latency requirements rule out directly operating on data in OLTP databases, which are optimized for transactional, not analytical, queries. Low data latency requirements rule out ETL-based solutions which increase your data latency above the real-time threshold and inevitably lead to “ETL hell”.

DynamoDB is a fully managed NoSQL database provided by AWS that is optimized for point lookups and small range scans using a partition key. Though it is highly performant for these use cases, DynamoDB is not a good choice for analytical queries which typically involve large range scans and complex operations such as grouping and aggregation. AWS knows this and has answered customers requests by creating DynamoDB Streams, a change-data-capture system which can be used to notify other services of new/modified data in DynamoDB. In our case, we’ll make use of DynamoDB Streams to synchronize our DynamoDB table with other storage systems that are better suited for serving analytical queries.

Amazon S3

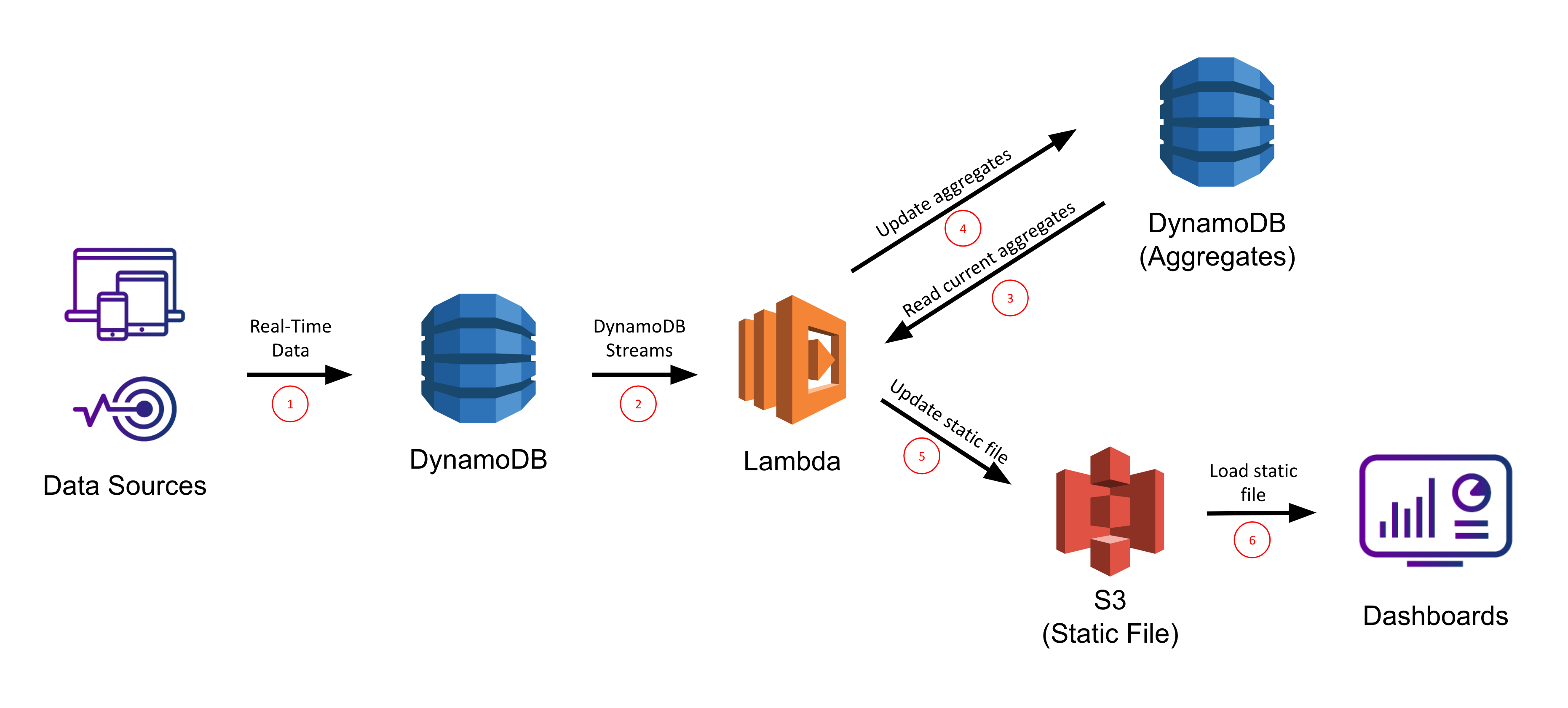

The first approach for DynamoDB reporting and dashboarding we’ll consider makes use of Amazon S3’s static website hosting. In this scenario, changes to our DynamoDB table will trigger a call to a Lambda function, which will take those changes and update a separate aggregate table also stored in DynamoDB. The Lambda will use the DynamoDB Streams API to efficiently iterate through the recent changes to the table without having to do a complete scan. The aggregate table will be fronted by a static file in S3 which anyone can view by going to the DNS endpoint of that S3 bucket’s hosted website.

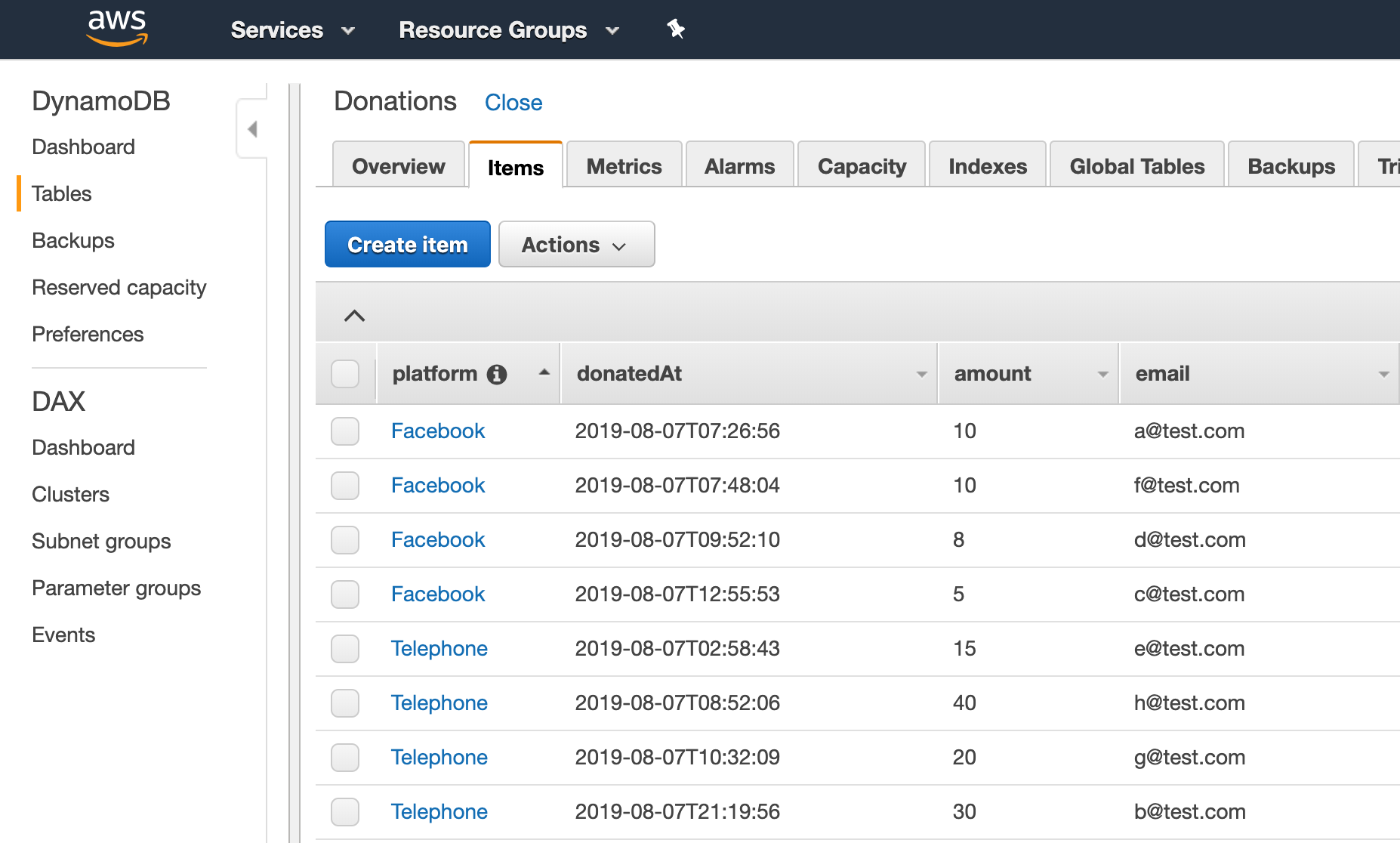

As an example, let’s say we are organizing a charity fundraiser and want a live dashboard at the event to show the progress towards our fundraising goal. Your DynamoDB table for tracking donations might look like

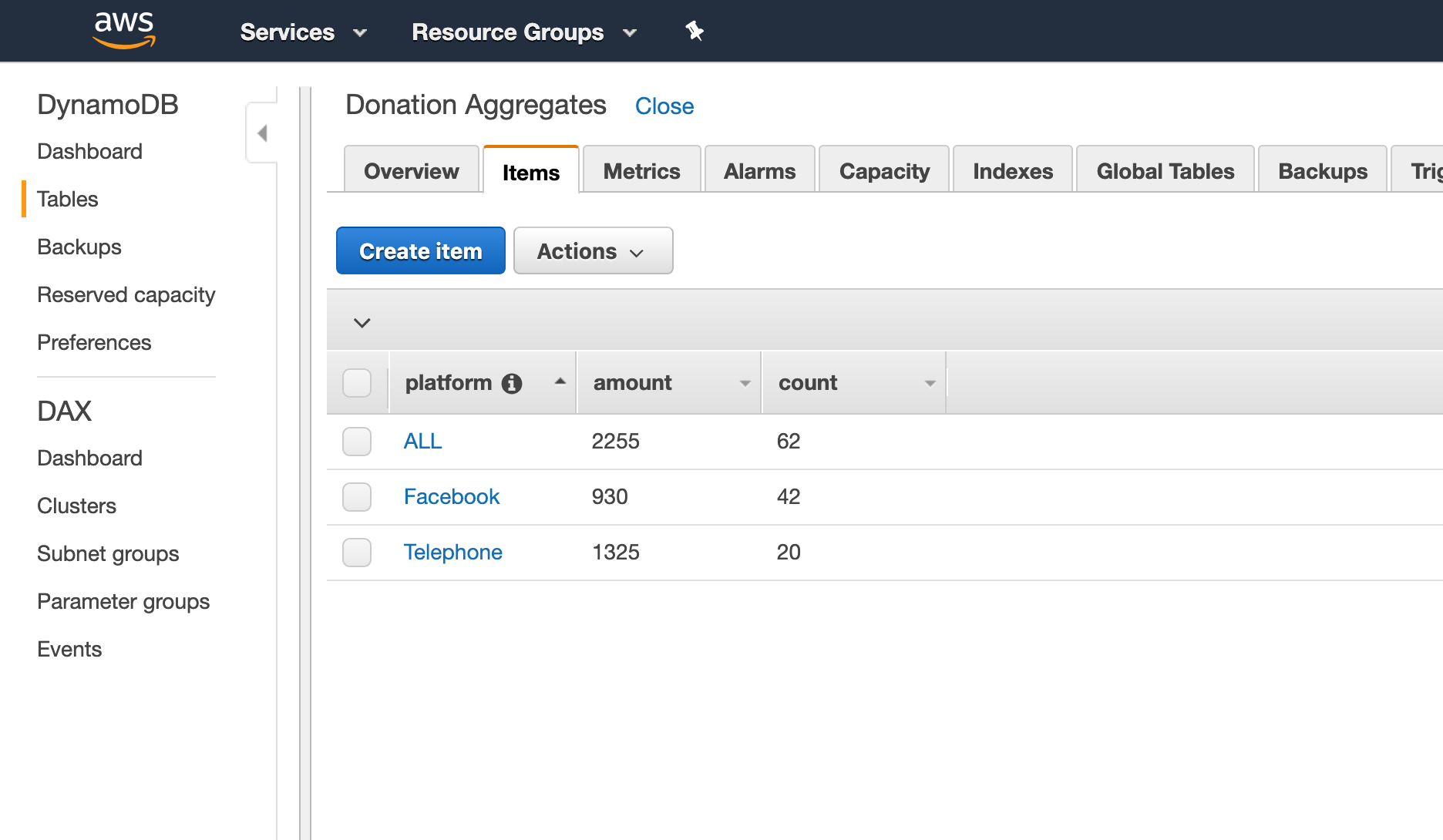

In this scenario, it would be reasonable to track the donations per platform and the total donated so far. To store this aggregated data, you might use another DynamoDB table that would look like

If we keep our volunteers up-to-date with these numbers throughout the fundraiser, they can rearrange their time and effort to maximize donations (for example by allocating more people to the phones since phone donations are about 3x larger than Facebook donations).

To accomplish this, we’ll create a Lambda function using the dynamodb-process-stream blueprint with function body of the form

exports.handler = async (event, context) => {

for (const record of event.Records) {

let platform = record.dynamodb['NewImage']['platform']['S'];

let amount = record.dynamodb['NewImage']['amount']['N'];

updatePlatformTotal(platform, amount);

updatePlatformTotal("ALL", amount);

}

return `Successfully processed ${event.Records.length} records.`;

};

The function updatePlatformTotal would read the current aggregates from the DonationAggregates (or initialize them to 0 if not present), then update and write back the new values. There are then two approaches to updating the final dashboard:

- Write a new static file to S3 each time the Lambda is triggered that overwrites the HTML to reflect the newest values. This is perfectly acceptable for visualizing data that does not change very frequently.

- Have the static file in S3 actually read from the DonationAggregates DynamoDB table (which can be done through the AWS javascript SDK). This is preferable if the data is being updated frequently as it will save many repeated writes to the S3 file.

Finally, we would go to the DynamoDB Streams dashboard and associate this lambda function with the DynamoDB stream on the Donations table.

Pros:

- Serverless / quick to setup

- Lambda leads to low data latency

- Good query latency if the aggregate table is kept small-ish

- Scalability of S3 for serving

Cons:

- No ad-hoc querying, refinement, or exploration in the dashboard (it’s static)

- Final aggregates are still stored in DynamoDB, so if you have enough of them you’ll hit the same slowdown with range scans, etc.

- Difficult to adapt this for an existing, large DynamoDB table

- Need to provision enough read/write capacity on your DynamoDB table (more devops)

- Need to identify all end metrics a priori

TLDR:

- This is a good way to quickly display a few simple metrics on a simple dashboard, but not great for more complex applications

- You’ll need to maintain a separate aggregates table in DynamoDB updated using Lambdas

- These kinds of dashboards won’t be interactive since the data is pre-computed

For a full-blown tutorial of this approach check out this AWS blog.

ElastiCache for Redis

Our next option for live dashboards and applications on top of DynamoDB involves ElastiCache for Redis, which is a fully managed Redis service provided by AWS. Redis is an in-memory key value store which is frequently used as a cache. Here, we will use ElastiCache for Redis much like our aggregate table above. Again we will set up a Lambda function that will be triggered on each change to the DynamoDB table and that will use the DynamoDB Streams API to efficiently retrieve recent changes to the table without needing to perform a complete table scan. However this time, the Lambda function will make calls to our Redis service to update the in-memory data structures we are using to keep track of our aggregates. We will then make use of Redis’ built-in publish-subscribe functionality to get real-time notifications to our webapp of when new data comes in so we can update our application accordingly.

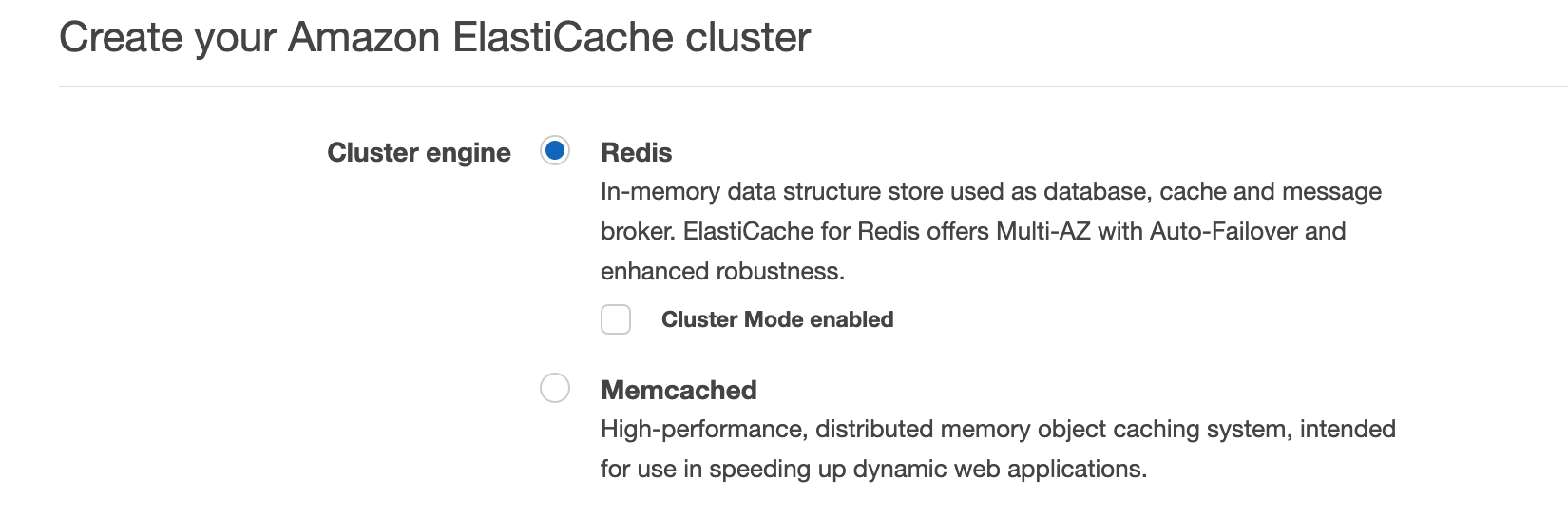

Continuing with our charity fundraiser example, let’s use a Redis hash to keep track of the aggregates. In Redis, the hash data structure is similar to a Python dictionary, Javascript Object, or Java HashMap. First we will create a new Redis instance in the ElastiCache for Redis dashboard.

Then once it’s up and running, we can use the same lambda definition from above and just change the implementation of updatePlatformTotal to something like

function udpatePlatformTotal(platform, amount) {

let redis = require("redis"),

let client = redis.createClient(...);

let countKey = [platform, "count"].join(':')

let amtKey = [platform, "amount"].join(':')

client.hincrby(countKey, 1)

client.publish("aggregates", countKey, 1)

client.hincrby(amtKey, amount)

client.publish("aggregates", amtKey, amount)

}

In the example of the donation record

{

"email": "a@test.com",

"donatedAt": "2019-08-07T07:26:56",

"platform": "Facebook",

"amount": 10

}

This would lead to the equivalent Redis commands

HINCRBY("Facebook:count", 1)

PUBLISH("aggregates", "Facebook:count", 1)

HINCRBY("Facebook:amount", 10)

PUBLISH("aggregates", "Facebook:amount", 10)

The increment calls persist the donation information to the Redis service, and the publish commands send real-time notifications through Redis’ pub-sub mechanism to the corresponding webapp which had previously subscribed to the "aggregates" topic. Using this communication mechanism allows support for real-time dashboards and applications, and it gives flexibility for what kind of web framework to use as long as a Redis client is available to subscribe with.

Note: You can always use your own Redis instance or another managed version other than Amazon ElastiCache for Redis and all the concepts will be the same.

Pros:

- Serverless / quick to setup

- Pub-sub leads to low data latency

- Redis is very fast for lookups → low query latency

- Flexibility for choice of frontend since Redis clients are available in many languages

Cons:

- Need another AWS service or to set up/manage your own Redis deployment

- Need to perform ETL in the Lambda which will be brittle as the DynamoDB schema changes

- Difficult to incorporate with an existing, large, production DynamoDB table (only streams updates)

- Redis doesn’t support complex queries, only lookups of pre-computed values (no ad-hoc queries/exploration)

TLDR:

- This is a viable option if your use case mainly relies on lookups of pre-computed values and doesn’t require complex queries or joins

- This approach uses Redis to store aggregate values and publishes updates using Redis pub-sub to your dashboard or application

- More powerful than static S3 hosting but still limited by pre-computed metrics so dashboards won’t be interactive

- All components are serverless (if you use Amazon ElastiCache) so deployment/maintenance are easy

- Need to develop your own webapp that supports Redis subscribe semantics

For an in-depth tutorial on this approach, check out this AWS blog. There the focus is on a generic Kinesis stream as the input, but you can use the DynamoDB Streams Kinesis adapter with your DynamoDB table and then follow their tutorial from there on.

Rockset

The last option we’ll consider in this post is Rockset, a real-time indexing database built for high QPS to support real-time application use cases. Rockset’s data engine has strong dynamic typing and smart schemas which infer field types as well as how they change over time. These properties make working with NoSQL data, like that from DynamoDB, straightforward.

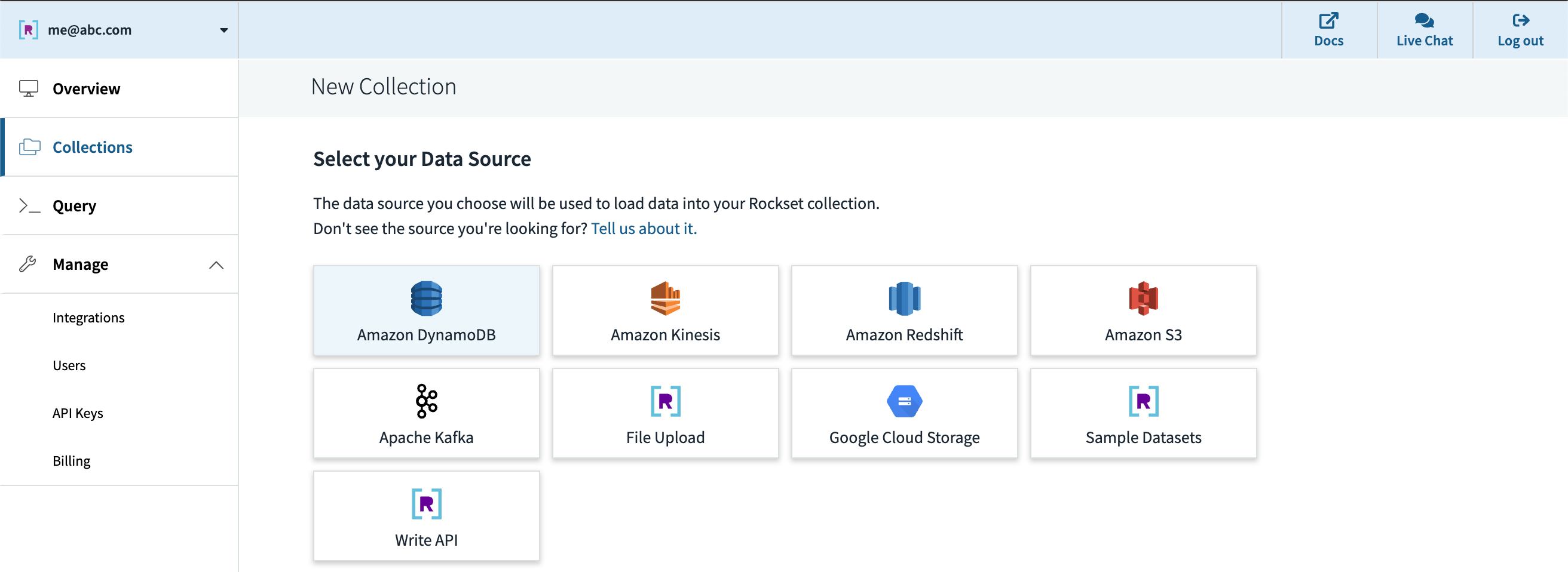

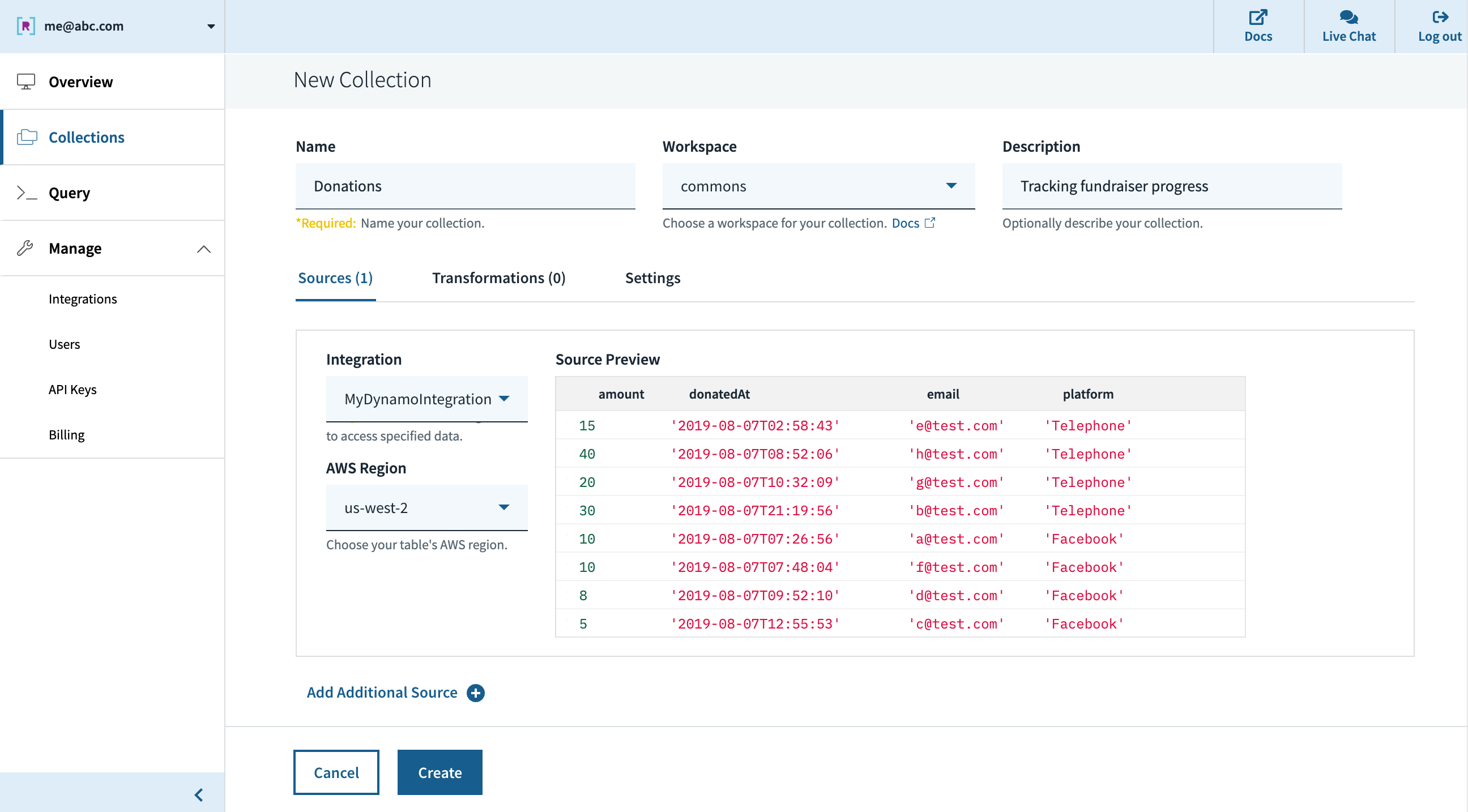

After creating an account at www.rockset.com, we’ll use the console to set up our first integration– a set of credentials used to access our data. Since we’re using DynamoDB as our data source, we’ll provide Rockset with an AWS access key and secret key pair that has properly scoped permissions to read from the DynamoDB table we want. Next we’ll create a collection– the equivalent of a DynamoDB/SQL table– and specify that it should pull data from our DynamoDB table and authenticate using the integration we just created. The preview window in the console will pull a few records from the DynamoDB table and display them to make sure everything worked correctly, and then we are good to press “Create”.

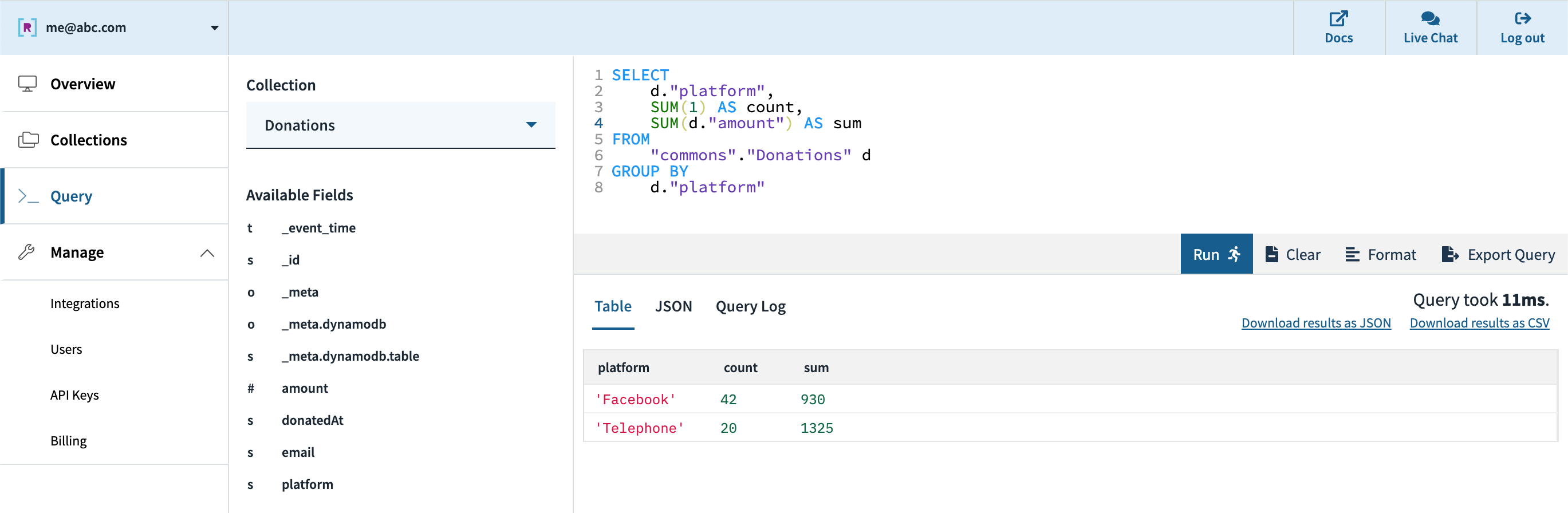

Soon after, we can see in the console that the collection is created and data is streaming in from DynamoDB. We can use the console’s query editor to experiment/tune the SQL queries that will be used in our application. Since Rockset has its own query compiler/execution engine, there is first-class support for arrays, objects, and nested data structures.

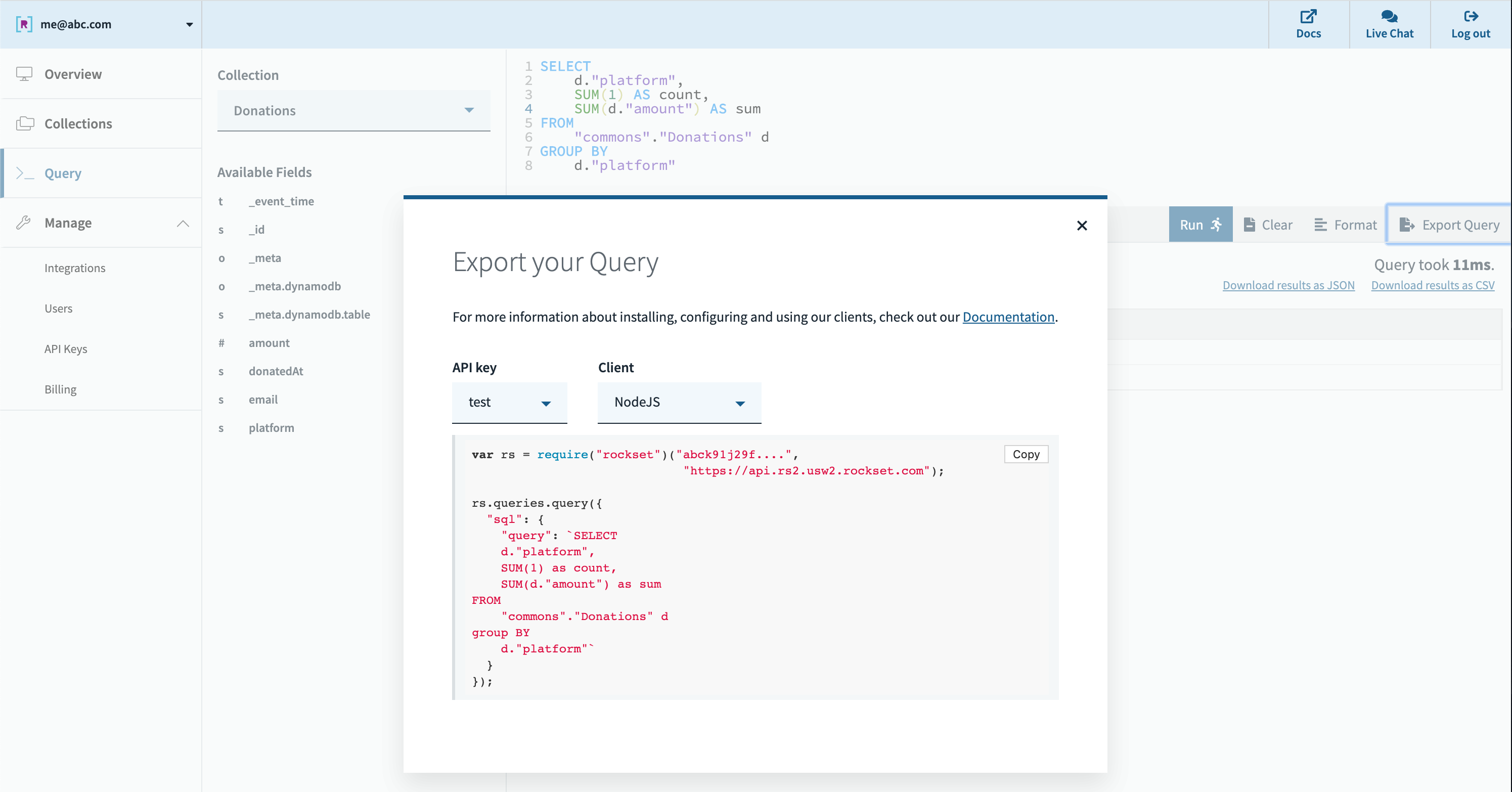

Next, we can create an API key in the console which will be used by the application for authentication to Rockset’s servers. We can export our query from the console query editor it into a functioning code snippet in a variety of languages. Rockset supports SQL over REST, which means any http framework in any programming language can be used to query your data, and several client libraries are provided for convenience as well.

All that’s left then is to run our queries in our dashboard or application. Rockset’s cloud-native architecture allows it to scale query performance and concurrency dynamically as needed, enabling fast queries even on large datasets with complex, nested data with inconsistent types.

Pros:

- Serverless– fast setup, no-code DynamoDB integration, and 0 configuration/management required

- Designed for low query latency and high concurrency out of the box

- Integrates with DynamoDB (and other sources) in real-time for low data latency with no pipeline to maintain

- Strong dynamic typing and smart schemas handle mixed types and works well with NoSQL systems like DynamoDB

- Integrates with a variety of custom dashboards (through client SDKs, JDBC driver, and SQL over REST) and BI tools (if needed)

Cons:

- Optimized for active dataset, not archival data, with sweet spot up to 10s of TBs

- Not a transactional database

- It’s an external service

TLDR:

- Consider this approach if you have strict requirements on having the latest data in your real-time applications, need to support large numbers of users, or want to avoid managing complex data pipelines

- Rockset is built for more demanding application use cases and can also be used to support dashboarding if needed

- Built-in integrations to quickly go from DynamoDB (and many other sources) to live dashboards and applications

- Can handle mixed types, syncing an existing table, and many low-latency queries

- Best for data sets from a few GBs to 10s of TBs

For more resources on how to integrate Rockset with DynamoDB, check out this blog post that walks through a more complex example.

Conclusion

We’ve covered several approaches for building real-time analytics on DynamoDB data, each with its own pros and cons. Hopefully this can help you evaluate the best approach for your use case, so you can move closer to operationalizing your own data!

Other DynamoDB resources: