Making Sense of Real-Time Analytics on Streaming Data: The Landscape

February 25, 2023

Introduction

Let’s get this out of the way at the beginning: understanding effective streaming data architectures is hard, and understanding how to make use of streaming data for analytics is really hard. Kafka or Kinesis? Stream processing or an OLAP database? Open source or fully managed? This blog series will help demystify streaming data and, more specifically, provide engineering leaders with a guide for incorporating streaming data into their analytics pipelines.

Here is what the series will cover:

- This post will cover the basics: streaming data formats, platforms, and use cases

- Part 2 will outline key differences between stream processing and real-time analytics

- Part 3 will offer recommendations for operationalizing streaming data, including a few sample architectures

What Is Streaming Data?

We’re going to start with a basic question: what is streaming data? It’s a continuous and unbounded stream of information that is generated at a high frequency and delivered to a system or application. An instructive example is clickstream data, which records a user’s interactions on a website. Another example would be sensor data collected in an industrial setting. The common thread across these examples is that a large amount of data is being generated in real time.

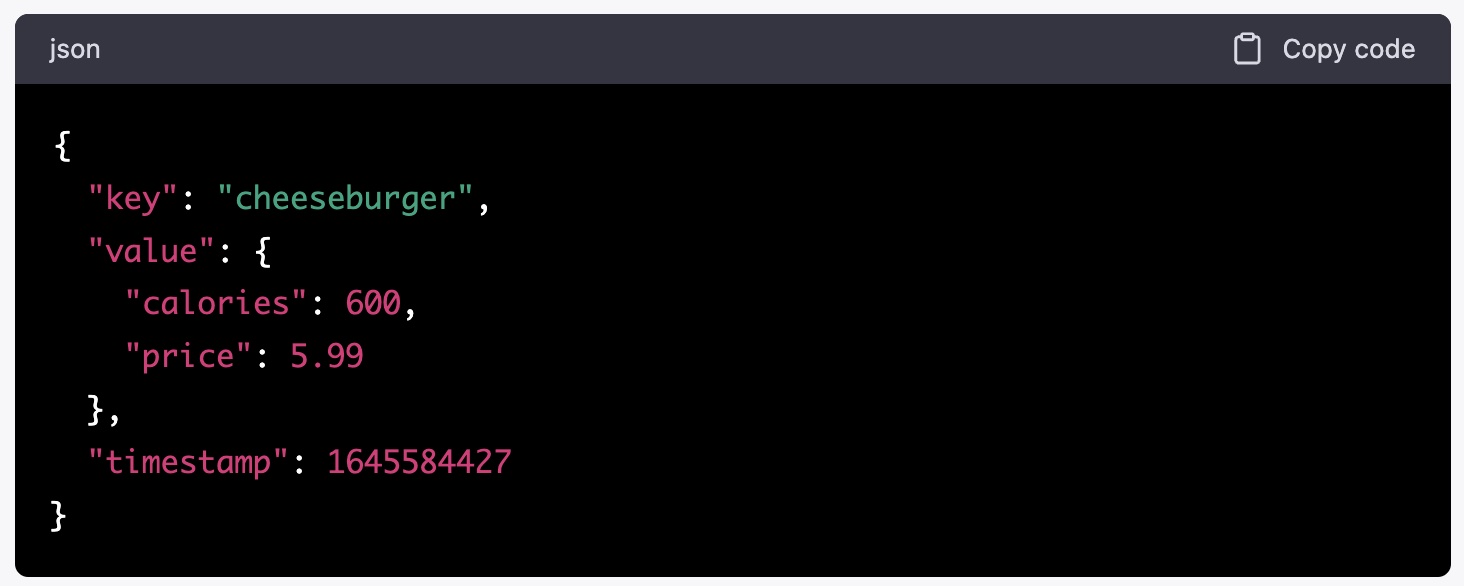

Typically, the “units” of data being streamed are considered events, which resemble a record in a database, with some key differences. First, event data is unstructured or semi-structured and stored in a nested format like JSON or Avro. Events typically include a key, a value (which can have additional nested elements), and a timestamp. Second, events are usually immutable (this will be a very important feature in this series!). Third, events on their own are not ideal for understanding the current state of a system. Event streams are great at updating systems with information like “A cheeseburger was sold” but are less suitable out of the box for answering “How many cheeseburgers were sold today?” Lastly, and perhaps most importantly, streaming data is unique because it’s high-velocity and high-volume, with an expectation that the data is available for use in a database very quickly after the event has occurred.
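To make the event model concrete, here is a minimal Python sketch of an immutable event with a key, a nested value, and a timestamp, plus the aggregation needed to answer the “how many cheeseburgers” question. The `Event` class, the `count_sold` helper, and all field names are assumptions made for illustration; they do not come from any particular streaming platform.

```python
from dataclasses import dataclass, FrozenInstanceError
from datetime import date, datetime, timezone


@dataclass(frozen=True)  # frozen=True blocks attribute reassignment after creation
class Event:
    key: str             # hypothetical order ID
    value: dict          # nested payload (the dict itself is still mutable)
    timestamp: datetime


def count_sold(events, item, day):
    """Sum quantities of `item` across events whose timestamp falls on `day`."""
    return sum(
        e.value["quantity"]
        for e in events
        if e.value["item"] == item and e.timestamp.date() == day
    )


def utc(*args):
    return datetime(*args, tzinfo=timezone.utc)


events = [
    Event("order-1", {"item": "cheeseburger", "quantity": 1}, utc(2023, 2, 25, 12, 0)),
    Event("order-2", {"item": "fries", "quantity": 2}, utc(2023, 2, 25, 12, 5)),
    Event("order-3", {"item": "cheeseburger", "quantity": 2}, utc(2023, 2, 25, 18, 30)),
    Event("order-4", {"item": "cheeseburger", "quantity": 1}, utc(2023, 2, 26, 9, 0)),
]

# A single event says "a cheeseburger was sold"; the daily total needs a scan.
sold_today = count_sold(events, "cheeseburger", date(2023, 2, 25))
print(sold_today)
```

Each event only describes one sale, so the daily total has to be computed by scanning or pre-aggregating the stream, which is the gap the rest of this series is about.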

Streaming data has been around for decades. It gained traction in the early 1990s as telecommunication companies used it to manage the flow of voice and data traffic over their networks. Today, streaming data is everywhere. It has expanded to various industries and applications, including IoT sensor data, financial data, web analytics, gaming behavioral data, and many more use cases. This type of data has become an essential component of real-time analytics applications because reacting to events quickly can have major effects on a business’s revenue. Real-time analytics on streaming data can help organizations detect patterns and anomalies, identify revenue opportunities, and respond to changing conditions, all near instantly. However, streaming data poses a unique challenge for analytics because analyzing it at this speed requires specialized technologies and approaches. This series will walk you through options for operationalizing streaming data, but we’re going to start with the basics, including formats, platforms, and use cases.

Streaming Data Formats

There are a few very common general-purpose streaming data formats. They’re important to study and understand because each format has a few characteristics that make it better or worse for particular use cases. We’ll highlight these briefly and then move on to streaming platforms.

JSON (JavaScript Object Notation)

This is a lightweight, text-based format that is easy to read (usually), making it a popular choice for data exchange. Here are a few characteristics of JSON:

- Readability: JSON is human-readable and easy to understand, making it easier to debug and troubleshoot.

- Wide support: JSON is widely supported by many programming languages and frameworks, making it a good choice for interoperability between different systems.

- Flexible schema: JSON allows for flexible schema design, which is useful for handling data that may change over time.

Sample use case: JSON is a good choice for APIs or other interfaces that need to handle diverse data types. For example, an e-commerce website may use JSON to exchange data between its website frontend and backend server, as well as with third-party vendors that provide shipping or payment services.

Example message:
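As a minimal sketch, a hypothetical cheeseburger-sale event could be built and serialized with Python’s standard `json` module as shown here. The field names and values are assumptions chosen for illustration, not a schema defined in this post.

```python
import json

# Hypothetical event; every field name and value is an illustrative assumption.
event = {
    "item": "cheeseburger",
    "quantity": 1,
    "price": 5.0,
    "store": {"id": 42, "city": "Springfield"},  # nested element
    "timestamp": "2023-02-25T12:00:00+00:00",
}

message = json.dumps(event, indent=2)  # human-readable text on the wire
print(message)

decoded = json.loads(message)          # any JSON-capable consumer can parse it
```

Note that nothing in the message enforces a schema: a producer could add or drop fields at any time, which is both the flexibility and the risk of JSON.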

Avro

Avro is a compact binary format that is designed for efficient serialization and deserialization of data. Avro also defines a JSON encoding, and Avro schemas are themselves written in JSON. Here are a few characteristics of Avro:

- Efficient: Avro's compact binary format can improve performance and reduce network bandwidth usage.

- Strong schema support: Avro has a well-defined schema that allows for type safety and strong data validation.

- Dynamic schema evolution: Avro’s schema can be updated in compatible ways, for example by adding a field with a default value, without requiring a change to existing client code.

Sample use case: Avro is a good choice for big data platforms that need to process and analyze large volumes of log data. Avro is useful for storing and transmitting that data efficiently and has strong schema support.
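The schema evolution point can be sketched in plain Python. The schema below uses Avro’s JSON schema syntax, but the `resolve` function is only a simplified imitation of how a reader schema fills in defaults for fields the writer did not know about; it is not the Avro library, and the `Sale` record and its fields are hypothetical.

```python
import json

# Reader schema (v2) in Avro's JSON schema syntax. "coupon" is new in v2 and
# has a default, so records written with the older v1 schema remain readable.
READER_SCHEMA = json.loads("""
{
  "type": "record",
  "name": "Sale",
  "fields": [
    {"name": "item", "type": "string"},
    {"name": "quantity", "type": "int"},
    {"name": "coupon", "type": ["null", "string"], "default": null}
  ]
}
""")


def resolve(record, reader_schema):
    """Project a decoded record onto the reader schema, filling in defaults.

    Simplified stand-in for schema resolution; real Avro also checks types.
    """
    resolved = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in record:
            resolved[name] = record[name]
        elif "default" in field:
            resolved[name] = field["default"]
        else:
            raise ValueError(f"no value or default for field {name!r}")
    return resolved  # fields unknown to the reader are dropped


old_record = {"item": "cheeseburger", "quantity": 1}  # written with v1
new_view = resolve(old_record, READER_SCHEMA)
print(new_view)
```

A field added without a default would raise an error here, which mirrors why compatible changes are the ones that keep old data readable.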

Example message:

\x16cheeseburger\x02\xdc\x07\x9a\x99\x19\x41\x12\xcd\xcc\x0c\x40\xce\xfa\x8e\xca\x1f

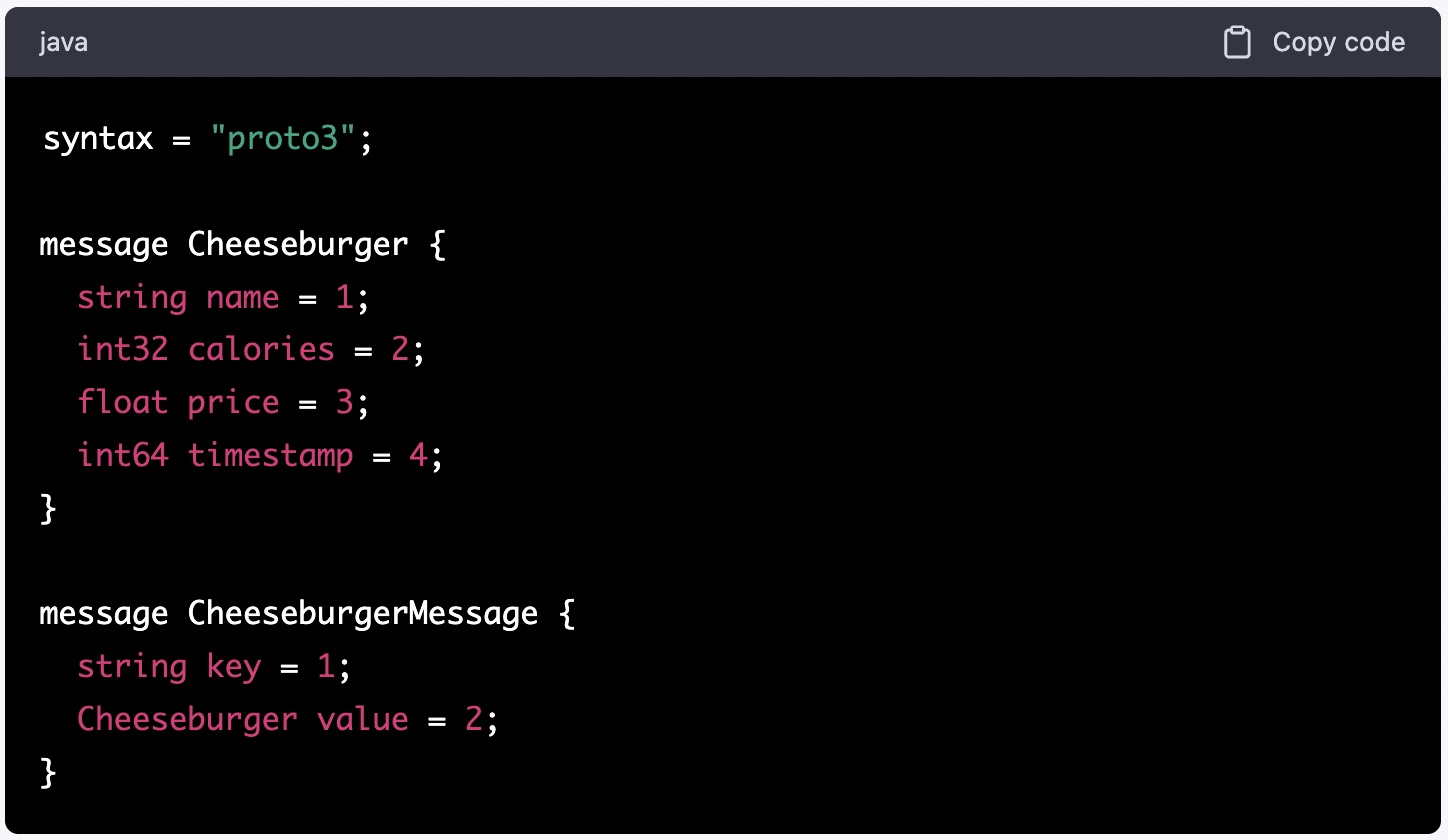

Protocol buffers (usually called protobuf)

Protobuf is a compact binary format that, like Avro, is designed for efficient serialization and deserialization of structured data. Some characteristics of protobuf include:

- Compact: protobuf messages are typically much smaller than the same data in text formats such as JSON, which can improve performance and reduce network bandwidth usage.

- Strong typing: protobuf has a well-defined schema that supports strong typing and data validation.

- Backward and forward compatibility: protobuf supports backward and forward compatibility, which means that compatible schema changes, such as adding a new field, will not break existing code that uses the data.

Sample use case: protobuf would work great for a real-time messaging system that needs to handle large volumes of messages. The format is well suited to efficiently encode and decode message data, while also benefiting from its compact size and strong typing support.

Example message:
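As a rough illustration of what a protobuf message looks like on the wire, the sketch below hand-encodes two fields using the protobuf wire format (varints and length-delimited strings). In practice you would generate code from a `.proto` file with `protoc`; the `Sale` message and its field numbers are assumptions made for this example.

```python
def encode_varint(n: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_string_field(field_number: int, text: str) -> bytes:
    data = text.encode("utf-8")
    tag = (field_number << 3) | 2    # wire type 2 = length-delimited
    return encode_varint(tag) + encode_varint(len(data)) + data


def encode_int_field(field_number: int, value: int) -> bytes:
    tag = (field_number << 3) | 0    # wire type 0 = varint
    return encode_varint(tag) + encode_varint(value)


# Hypothetical schema: message Sale { string item = 1; int32 quantity = 2; }
message = encode_string_field(1, "cheeseburger") + encode_int_field(2, 1)
print(message.hex(" "))
```

The encoded message is 16 bytes. Field names never appear on the wire, only field numbers, which is a large part of why the format is compact and why field numbers must stay stable as a schema evolves.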

It’s probably clear that format choice should be use-case driven. Pay special attention to your expected data volume, processing, and compatibility with other systems. That said, when in doubt, JSON has the widest support and offers the most flexibility.

Streaming data platforms

Ok, we’ve covered the basics of streaming as well as common formats, but we need to talk about how to move this data around, process it, and put it to use. This is where streaming platforms come in. It’s possible to go very deep on streaming platforms. This blog will not cover platforms in depth, but instead offer popular options, cover the high-level differences between popular platforms, and provide a few important considerations for choosing a platform for your use case.

Apache Kafka

Kafka, for short, is an open-source distributed streaming platform (yes, that is a mouthful) that enables real-time processing of large volumes of data. This is the single most popular streaming platform. It provides all the basic features you’d expect, like data streaming, storage, and processing, and is widely used for building real-time data pipelines and messaging systems. It supports various data processing models such as stream and batch processing (both covered in part 2 of this series), and complex event processing. Long story short, Kafka is extremely powerful and widely used, with a large community to tap for best practices and support. It also offers a variety of deployment options. A few noteworthy points:

- Self-managed Kafka can be deployed on-premises or in the cloud. It’s open source, so it’s “free”, but be forewarned that its complexity will require significant in-house expertise.

- Kafka can be deployed as a managed service via Confluent Cloud or Amazon Managed Streaming for Apache Kafka (MSK). Both of these options simplify deployment and scaling significantly. You can get set up in just a few clicks.

- Kafka doesn’t have many built-in ways to run analytics on event data.

AWS Kinesis

Amazon Kinesis is a fully managed, real-time data streaming service provided by AWS. It is designed to collect, process, and analyze large volumes of streaming data in real time, just like Kafka. There are a few notable differences between Kafka and Kinesis, but the largest is that Kinesis is proprietary and available only as a fully managed service on Amazon Web Services (AWS). The benefit of this tight coupling is that Kinesis can easily make streaming data available for downstream processing and storage in services such as Amazon S3, Amazon Redshift, and Amazon OpenSearch Service (formerly Amazon Elasticsearch Service). It’s also seamlessly integrated with other AWS services like AWS Lambda, AWS Glue, and Amazon SageMaker, making it easy to orchestrate end-to-end streaming data processing pipelines without having to manage the underlying infrastructure. There are some caveats to be aware of that will matter for some use cases:

- While Kafka has client libraries for a variety of programming languages including Java, Python, and C++, the Kinesis Client Library (KCL) is Java-based; other languages are supported through the AWS SDKs or the KCL’s MultiLangDaemon.

- Kafka can be configured to retain data indefinitely, while Kinesis retains data for 24 hours by default, extendable up to 365 days.

- Kinesis is not designed for a large number of consumers: by default, all consumers reading from a shard share that shard’s read throughput.

Azure Event Hubs and Azure Service Bus

Both of these fully managed Microsoft Azure services handle streaming data, but they have important differences in design and functionality. There’s enough content here for its own blog post, but we’ll cover the high-level differences briefly.

Azure Event Hubs is a highly scalable data streaming platform designed for collecting, transforming, and analyzing large volumes of data in real time. It is ideal for building data pipelines that ingest data from a wide range of sources, such as IoT devices, clickstreams, social media feeds, and more. Event Hubs is optimized for high throughput, low latency data streaming scenarios and can process millions of events per second.

Azure Service Bus is a messaging service that provides reliable message queuing and publish-subscribe messaging patterns. It is designed for decoupling application components and enabling asynchronous communication between them. Service Bus supports a variety of messaging patterns and is optimized for reliable message delivery. It can handle high throughput scenarios, but its focus is on messaging, which doesn’t typically require real-time processing or stream processing.

Similar to Amazon Kinesis' integration with other AWS services, Azure Event Hubs or Azure Service Bus can be excellent choices if your software is built on Microsoft Azure.

Use cases for real-time analytics on streaming data

We’ve covered the basics for streaming data formats and delivery platforms, but this series is primarily about how to leverage streaming data for real-time analytics; we’ll now shine some light on how leading organizations are putting streaming data to use in the real world.

Personalization

Organizations are using streaming data to feed real-time personalization engines for eCommerce, adtech, media, and more. Imagine a shopping platform that infers a user is interested in books, then history books, and then history books about Darwin’s trip to the Galapagos. Because streaming data platforms are perfectly suited to capture and transport large amounts of data at low latency, companies are beginning to use that data to derive intent and make predictions about what users might like to see next. Rockset has seen quite a bit of interest in this use case, and companies are driving significant incremental revenue by leveraging streaming data to personalize user experiences.

Anomaly Detection

Fraud and anomaly detection are among the more popular use cases for real-time analytics on streaming data. Organizations are capturing user behavior via event streams, enriching those streams with historical data, and making use of online feature stores to detect anomalous or fraudulent user behavior. Unsurprisingly, this use case is becoming quite common at fintech and payments companies looking to bring a real-time edge to alerting and monitoring.
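The capture, enrich, and detect flow can be sketched in a few lines of Python. This is a toy illustration only: the `HISTORICAL_AVG_SPEND` dictionary stands in for an online feature store, and the ratio-based rule and its threshold are assumptions, not a recommended fraud model.

```python
# Stand-in for an online feature store: historical average spend per user.
HISTORICAL_AVG_SPEND = {"alice": 40.0, "bob": 15.0}


def enrich(event, features):
    """Return a copy of the event with the user's historical average attached."""
    return {**event, "avg_spend": features.get(event["user"])}


def is_anomalous(enriched, ratio_threshold=5.0):
    """Flag spend far above the user's historical average.

    Users with no history are not flagged in this sketch.
    """
    avg = enriched["avg_spend"]
    if avg is None or avg <= 0:
        return False
    return enriched["amount"] / avg > ratio_threshold


stream = [
    {"user": "alice", "amount": 42.0},
    {"user": "bob", "amount": 300.0},   # 20x bob's usual spend
    {"user": "carol", "amount": 20.0},  # no history available
]

alerts = [e for e in (enrich(ev, HISTORICAL_AVG_SPEND) for ev in stream) if is_anomalous(e)]
print(alerts)
```

In a production system the lookup and the rule would run against each event as it arrives, which is why low-latency access to historical features matters.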

Gaming

Online games typically generate massive amounts of streaming data, much of which is now being used for real-time analytics. One can leverage streaming data to tune matchmaking heuristics, ensuring players are matched at an appropriate skill level. Many studios are able to boost player engagement and retention with live metrics and leaderboards. Finally, event streams can be used to help identify anomalous behavior associated with cheating.

Logistics

Another massive consumer of streaming data is the logistics industry. Streaming data with an appropriate real-time analytics stack helps leading logistics orgs manage and monitor the health of fleets, receive alerts about the health of equipment, and recommend preventive maintenance to keep fleets up and running. Additionally, advanced uses of streaming data include optimizing delivery routes with real-time data from GPS devices, orders and delivery schedules.

Domain-driven design, data mesh, and messaging services

Streaming data can be used to implement event-driven architectures that align with domain-driven design principles. Instead of polling for updates, streaming data provides a continuous flow of events that can be consumed by microservices. Events can represent changes in the state of the system, user actions, or other domain-specific information. By modeling the domain in terms of events, you can achieve loose coupling, scalability, and flexibility.
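A minimal in-memory sketch of this pattern is shown below. The `EventBus` class stands in for a streaming platform, and the `OrderPlaced` event and the two handlers are hypothetical; the point is only that the publisher does not know which services consume the event.

```python
from collections import defaultdict


class EventBus:
    """Toy in-process stand-in for a streaming platform."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        # The publisher has no knowledge of who is listening.
        for handler in self._handlers.get(event_type, []):
            handler(payload)


inventory = {"cheeseburger": 10}
invoices = []


def reserve_stock(order):       # hypothetical inventory service
    inventory[order["item"]] -= order["quantity"]


def create_invoice(order):      # hypothetical billing service
    invoices.append({
        "order_id": order["order_id"],
        "total": order["quantity"] * order["unit_price"],
    })


bus = EventBus()
bus.subscribe("OrderPlaced", reserve_stock)
bus.subscribe("OrderPlaced", create_invoice)

bus.publish("OrderPlaced", {
    "order_id": "order-1", "item": "cheeseburger", "quantity": 2, "unit_price": 5.0,
})
print(inventory, invoices)
```

Adding a third consumer, say a loyalty-points service, would require only another `subscribe` call and no change to the code that publishes orders.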

Log aggregation

Streaming data can be used to aggregate log data in real time from systems throughout an organization. Logs can be streamed to a central platform (usually an OLAP database; more on this in parts 2 and 3), where they can be processed and analyzed for alerting, troubleshooting, monitoring, or other purposes.
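As a simple sketch of the processing step, the Python below counts error logs per service in one-minute buckets and flags any bucket that crosses a threshold. The log fields (`service`, `level`, `ts`) and the threshold of two errors are assumptions for illustration; a real deployment would run a query like this inside the central platform.

```python
from collections import Counter
from datetime import datetime


def minute_bucket(ts: str) -> str:
    """Truncate an ISO 8601 timestamp to the minute."""
    return datetime.fromisoformat(ts).strftime("%Y-%m-%dT%H:%M")


def aggregate_errors(logs):
    """Count ERROR logs per (service, minute)."""
    counts = Counter()
    for log in logs:
        if log["level"] == "ERROR":
            counts[(log["service"], minute_bucket(log["ts"]))] += 1
    return counts


logs = [
    {"service": "checkout", "level": "ERROR", "ts": "2023-02-25T12:00:05"},
    {"service": "checkout", "level": "ERROR", "ts": "2023-02-25T12:00:40"},
    {"service": "checkout", "level": "INFO", "ts": "2023-02-25T12:00:50"},
    {"service": "search", "level": "ERROR", "ts": "2023-02-25T12:01:10"},
    {"service": "checkout", "level": "ERROR", "ts": "2023-02-25T12:01:30"},
]

counts = aggregate_errors(logs)
ALERT_THRESHOLD = 2  # hypothetical: alert at two or more errors per minute
alerts = sorted(k for k, n in counts.items() if n >= ALERT_THRESHOLD)
print(alerts)
```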

Conclusion

We’ve covered a lot in this blog, from formats to platforms to use cases, but there’s a ton more to learn about. There are some interesting and meaningful differences between real-time analytics on streaming data, stream processing, and streaming databases, which is exactly what part 2 in this series will focus on. In the meantime, if you’re looking to get started with real-time analytics on streaming data, Rockset has built-in connectors for Kafka, Confluent Cloud, MSK, and more. Start your free trial today, with $300 in credits, no credit card required.