Rockset: 1 Billion Events in a Day with 1-Second Data Latency

September 15, 2020

YADB (Yet Another Database Benchmark)

The world has a plethora of database benchmarks, starting with the Wisconsin Benchmark, which is my favorite. First, that benchmark came from Dr. David DeWitt, who taught me Database Internals when I was a graduate student at the University of Wisconsin. Second, it is probably the earliest conference paper (circa 1983) that I ever read. And third, the results of this paper displeased Larry Ellison so much that he inserted a clause in newer Oracle releases to prevent researchers from benchmarking the Oracle database.

The Wisconsin paper clearly describes how a benchmark measures very specific features of databases, so it follows that as database capabilities evolve, new benchmarks are needed. If a database has behavior not found in existing databases, you need a new benchmark to measure that behavior.

Today, we are introducing a new benchmark, RockBench, that does just this. RockBench is designed to measure the most important characteristics of a real-time database.

What Is a Real-Time Database?

A real-time database is one that can sustain a high write rate of new incoming data, while at the same time allowing applications to make queries based on the freshest of data. It is different from a transactional database where the most significant characteristic is the ability to perform transactions, which is why TPC-C is the most cited benchmark for transactional databases.

In typical database ACID parlance, a real-time database provides Atomicity and Durability of updates just like most other databases. It supports an eventual Consistency model, where updates show up in query results as quickly as possible. This time lag is referred to as data latency. A real-time database is one that is designed to minimize data latency.

Different applications need different data latencies, and the ability to measure data latency allows users to choose one real-time database configuration over another based on the needs of their application. RockBench is the only benchmark at present that measures the data latency of a database at varying write rates.

Data latency is different from query latency, which is what is typically used to benchmark transactional databases. We posit that data latency is one of the main factors that distinguishes one real-time database from another, and RockBench is designed to measure it.

Why Is This Benchmark Relevant in the Real World?

Real-time analytics use cases. There are many decision-making systems that leverage large volumes of streaming data to make quick decisions. When a truck arrives at a loading dock, a fleet management system would need to produce a loading list for the truck by examining delivery deadlines, delay-charge estimates, weather forecasts and modeling of other trucks that are arriving in the near future. This type of decision-making system would use a real-time database. Similarly, a product team would look at product clickstreams and user feedback in real time to determine which feature flags to set in the product. The volume of incoming click logs is very high and the time to gather insights from this data is low. This is another use case for a real-time database. Such use cases are becoming the norm these days, which is why measuring the data latency of a real-time database is useful. It allows users to pick the right database for their needs based on how quickly they want to extract insights from their data streams.

High write rates. The most critical measurement for a real-time database is the write rate it can sustain while supporting queries at the same time. The write rate could be bursty or periodic, depending on the time of the day or the day of the week. This behavior is like a streaming logging system that can take in large volumes of writes. However, one difference between a real-time database and a streaming logging system is that the database provides a query API that can perform random queries on the event stream. With writing and querying of data, there is always an inherent tradeoff between high write rates and the visibility of data in queries, and this is precisely what RockBench measures.

Semi-structured data. Most real-life decision-making data is in semi-structured form, e.g. JSON, XML or CSV. New fields get added to the schema and older fields are dropped. The same field can have multi-typed values. Some fields have deeply nested objects. Before the advent of real-time databases, a user would typically use a data pipeline to clean and homogenize all the fields, flatten nested fields, denormalize nested objects and then write the result out to a data warehouse like Redshift or Snowflake. The data warehouse is then used to gather insights from the data. These data pipelines add to data latency. On the other hand, a real-time database eliminates the need for some of these data pipelines and simultaneously offers lower data latency. This benchmark uses data in JSON format to simulate more of these types of real-life scenarios.
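To make the pipeline cost concrete, here is a minimal sketch of the kind of flattening step described above. The `flatten` helper and the sample events are hypothetical illustrations, not part of RockBench or of any particular warehouse loader.

```python
def flatten(obj, prefix=""):
    """Flatten nested dicts and lists into a single-level dict with dotted keys."""
    flat = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            flat.update(flatten(value, f"{prefix}{key}."))
    elif isinstance(obj, list):
        for index, value in enumerate(obj):
            flat.update(flatten(value, f"{prefix}{index}."))
    else:
        # Leaf value: drop the trailing dot from the accumulated prefix.
        flat[prefix[:-1]] = obj
    return flat


# Two hypothetical events: "zip" is multi-typed, and the second event
# carries a nested array that the first one lacks.
events = [
    {"user": {"name": "ann", "zip": 94105}},
    {"user": {"name": "bob", "zip": "94105-1234", "devices": [{"os": "ios"}]}},
]

for event in events:
    print(flatten(event))
```

Every step like this runs before the data is queryable, which is why such pipelines add to data latency.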

Overview of RockBench

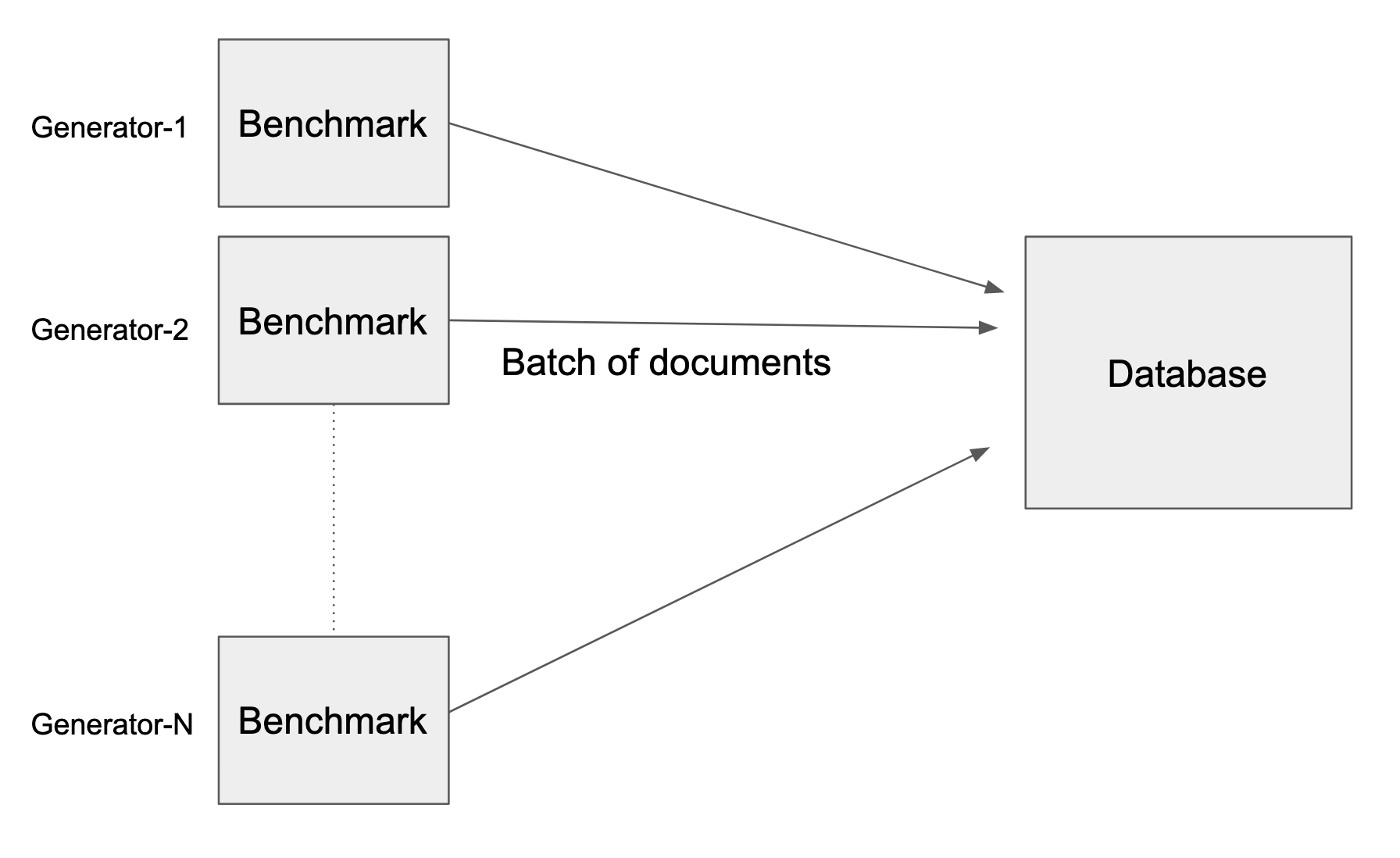

RockBench comprises a Data Generator and a Data Latency Evaluator. The Data Generator simulates a real-life event workload, where every generated event is in JSON format and schemas can change frequently. The Data Generator produces events at various write rates and writes them to the database. The Data Latency Evaluator queries the database periodically and outputs a metric that measures the data latency at that instant. A user can vary the write rate and measure the observed data latency of the system.
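The interplay between the two components can be sketched as follows. This is a simplified illustration under stated assumptions, not RockBench's actual code: the `_event_time` field name, the `InMemoryStore` stand-in for a database, and the fixed `index_delay` are all hypothetical, and the clock is simulated so the result is reproducible.

```python
def generate_event(event_id, now):
    """Build one JSON-serializable event stamped with its creation time."""
    return {
        "_id": event_id,
        "_event_time": now,  # hypothetical field used to compute latency
        "user": {"name": f"user-{event_id % 100}", "tags": ["a", "b"]},
        "payload": "x" * 900,  # pad the event toward roughly 1 KB
    }


class InMemoryStore:
    """Stand-in for a real-time database: a write becomes visible to
    queries only after `index_delay` seconds."""

    def __init__(self, index_delay):
        self.index_delay = index_delay
        self._rows = []  # list of (visible_at, event)

    def write(self, event, now):
        self._rows.append((now + self.index_delay, event))

    def max_event_time(self, now):
        """Return the newest event time among events visible at `now`."""
        visible = [e["_event_time"] for visible_at, e in self._rows if visible_at <= now]
        return max(visible) if visible else None


def data_latency(store, now):
    """Data latency = query time minus the newest event time the query can see."""
    newest = store.max_event_time(now)
    return None if newest is None else now - newest


# Simulate 5 seconds of writes at 10 events/sec, then evaluate once.
store = InMemoryStore(index_delay=0.35)
write_rate = 10
for i in range(5 * write_rate):
    t = i / write_rate
    store.write(generate_event(i, now=t), now=t)

print(f"{data_latency(store, now=5.0):.2f}")  # prints 0.40
```

Note that a measurement taken this way includes the gap between consecutive events as well as the indexing delay, so at low write rates it overstates the delay slightly. Raising `write_rate` or `index_delay` in the sketch mimics varying the write rate against a fixed configuration.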

The Evaluating Data Latency for Real-Time Databases white paper provides a detailed description of the benchmark. The size of an event is chosen to be around 1K bytes, which is what we found to be the sweet spot for many real-life systems. Each event has nested objects and arrays inside it. We looked at a lot of publicly available event streams, such as Twitter events, stock market events and online gaming events, to pick the characteristics of the data that this benchmark uses.

Results of Running RockBench on Rockset

Before we analyze the results of the benchmark, let’s refresh our memory of Rockset’s Aggregator Leaf Tailer (ALT) architecture. The ALT architecture allows Rockset to scale ingest compute and query compute separately. This benchmark measures the speed of indexing in Rockset’s Converged Index™, which maintains an inverted index, a columnar store and a record store on all fields, so that new data becomes available to queries almost instantly and those queries run fast. Queries are fast because they can leverage any of these pre-built indices. The data latency that we record in our benchmarking is a measure of how fast Rockset can index streaming data. Complete results can be found here.

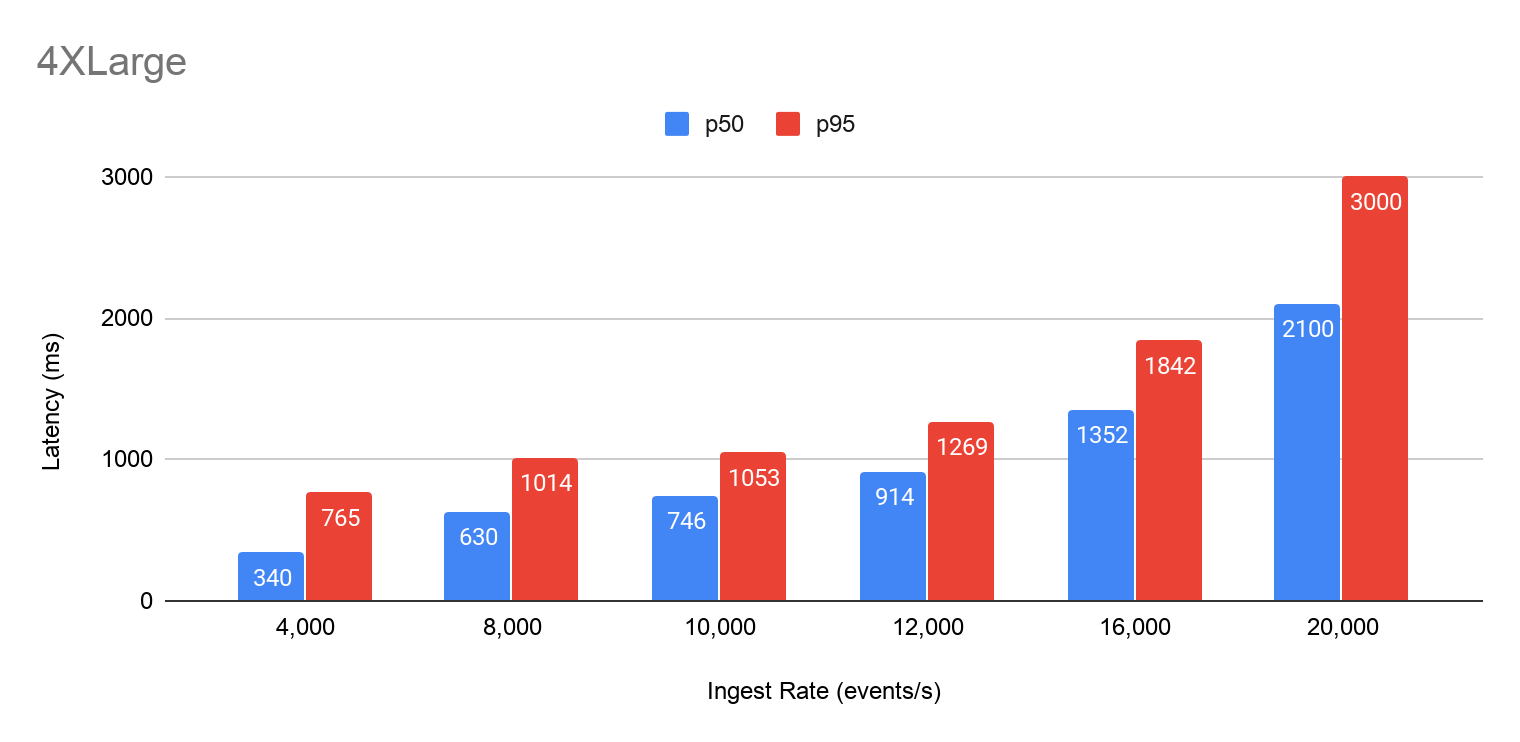

The first observation is that a Rockset 4XLarge Virtual Instance can support a billion events flowing in every day (approx. 12K events/sec) while keeping the data latency to under 1 second. This write rate is sufficient to support a variety of use cases, ranging from fleet management operations to handling events generated from sensors.
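The per-second figure follows from simple arithmetic, shown here as a quick check. The byte-rate line is only a rough estimate that assumes "around 1K bytes" means 1,000 bytes per event.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

events_per_day = 1_000_000_000
events_per_sec = events_per_day / SECONDS_PER_DAY

# Assumption: the ~1K-byte event size is taken as exactly 1,000 bytes.
event_size_bytes = 1_000
bytes_per_sec = events_per_sec * event_size_bytes

print(f"{events_per_sec:,.0f} events/sec")  # 11,574 events/sec
print(f"{bytes_per_sec / 1e6:.1f} MB/sec")  # 11.6 MB/sec
```

Rounded up, that is the approximately 12K events/sec quoted above, or on the order of a terabyte of raw JSON per day.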

The second observation is that if you have to support a higher write rate, it is as simple as upgrading to the next higher Rockset Virtual Instance. Rockset is scalable, and depending on the amount of resources you dedicate, you can reduce your data latency or support higher write rates. Extrapolating from these benchmark results: an online gaming system that produces 40K events/sec and requires a data latency of 1 second may be satisfied with a Rockset 16XLarge Virtual Instance. Also, migrating from one Rockset Virtual Instance to another does not cause any downtime, which makes it easy for users to migrate from one instance to another.

The third observation is that if you are running on a fixed Rockset Virtual Instance and your write rate increases, the benchmark results show that there is a gradual and linear increase in the data latency until CPU resources are saturated. In all these cases, the compute resource on the leaf is the bottleneck, because this compute is the resource that makes recently written data queryable immediately. Rockset delegates compaction CPU to remote compactors, but some minimum CPU is still needed on the leaves to copy files to and from cloud storage.

Rockset uses a specialized bulk-load mechanism to index stationary data, and it can load data at terabytes/hour, but this benchmark is not meant to measure that functionality. This benchmark is designed to measure the data latency of high-velocity data, when new data is arriving at a fast rate and needs to be queried immediately.

Futures

In its current form, the workload generator issues writes at a specified constant rate, but one of the improvements that users have requested is to make this benchmark simulate a bursty write rate. Another improvement is to add an overwrite feature that overwrites some documents that already exist in the database. Yet another requested feature is to vary the schema of some of the generated documents so that some fields are sparse.

RockBench is designed to be extensible, and we hope that developers in the database community will contribute code to make this benchmark run on other real-time databases as well.

I am thrilled to see the results of RockBench on Rockset. It demonstrates the value of real-time databases, like Rockset, in enabling real-time analytics by supporting streaming ingest of thousands of events per second while keeping data latencies in the low seconds. My hope is that RockBench will provide developers an essential tool for measuring data latency and selecting the appropriate real-time database configuration for their application requirements.

Resources: