Aggregator Leaf Tailer: An Alternative to Lambda Architecture for Real-Time Analytics

February 6, 2019

Aggregator Leaf Tailer (ALT) is the data architecture favored by web-scale companies, like Facebook, LinkedIn, and Google, for its efficiency and scalability. In this blog post, I will describe the Aggregator Leaf Tailer architecture and its advantages for low-latency data processing and analytics.

When we started Rockset, we set out to implement a real-time analytics engine that made the developer's job as simple as possible. That meant a system that was sufficiently nimble and powerful to execute fast SQL queries on raw data, essentially performing any needed transformations as part of the query step, and not as part of a complex data pipeline. That also meant a system that took full advantage of cloud efficiencies (responsive resource scheduling and disaggregation of compute and storage) while abstracting away all infrastructure-related details from users. We chose ALT for Rockset.

Traditional Data Processing: Batch and Streaming

MapReduce, most commonly associated with Apache Hadoop, is a pure batch system that often introduces significant time lag in massaging new data into processed results. To mitigate the delays inherent in MapReduce, the Lambda architecture was conceived to supplement batch results from a MapReduce system with a real-time stream of updates. A serving layer unifies the outputs of the batch and streaming layers, and responds to queries.

The real-time stream is typically a set of pipelines that process new data as and when it is deposited into the system. These pipelines implement windowing queries on new data and then update the serving layer. This architecture has become popular in the last decade because it addresses the stale-output problem of MapReduce systems.
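Here is a minimal Python sketch of how the serving layer combines the two paths. It is a toy, not a real Lambda deployment. The event log, the page-count query, and `BATCH_CUTOFF` are hypothetical. In-memory `Counter` objects stand in for the Hadoop batch output and the streaming layer's state.

```python
from collections import Counter

# Hypothetical event log: (page, timestamp) pairs.
events = [("home", 1), ("about", 2), ("home", 3), ("home", 7), ("pricing", 8)]
BATCH_CUTOFF = 5  # assumption: the last batch run covered events with ts <= 5


def batch_view(log, cutoff):
    """Recompute page counts from scratch, as a periodic batch job would."""
    return Counter(page for page, ts in log if ts <= cutoff)


def realtime_view(log, cutoff):
    """Count only the events the batch job has not seen yet (the streaming layer)."""
    return Counter(page for page, ts in log if ts > cutoff)


def serve(page, batch, realtime):
    """Serving layer: answer a query by merging the batch and real-time views."""
    return batch[page] + realtime[page]  # Counter returns 0 for missing keys


batch = batch_view(events, BATCH_CUTOFF)
realtime = realtime_view(events, BATCH_CUTOFF)
print(serve("home", batch, realtime))
```

The next batch run would fold the newer events into the batch view and move the cutoff forward. The real-time view only has to cover the gap between batch runs.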

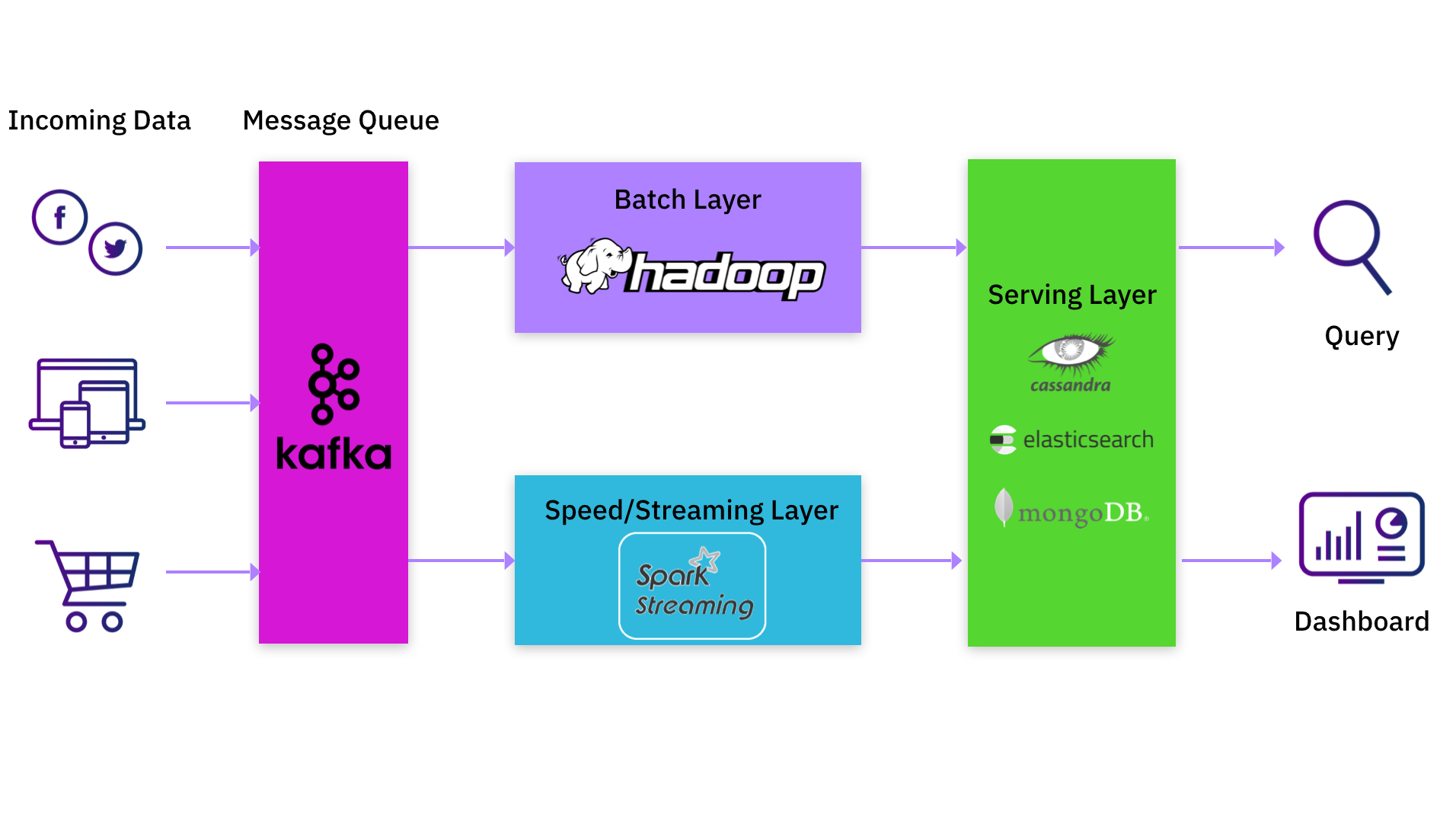

Common Lambda Architectures: Kafka, Spark, and MongoDB/Elasticsearch

If you are a data practitioner, you have probably either implemented or used a data processing platform that incorporates the Lambda architecture. A common implementation would have large batch jobs in Hadoop complemented by an update stream stored in Apache Kafka. Apache Spark is often used to read this data stream from Kafka, perform transformations, and then write the result to another Kafka log. In most cases, this would not be a single Spark job but a pipeline of Spark jobs. Each Spark job in the pipeline would read data produced by the previous job, do its own transformations, and feed it to the next job in the pipeline. The final output would be written to a serving system like Apache Cassandra, Elasticsearch or MongoDB.
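The chained-job structure can be sketched in plain Python. This is only an illustration of the shape of such a pipeline and does not use the Kafka or Spark APIs. The `topics` dictionary is a hypothetical stand-in for Kafka logs, the job functions stand in for Spark jobs, and `serving_store` stands in for a key-value serving system.

```python
import json

# Hypothetical stand-in for Kafka: each "topic" is a list of JSON messages.
topics = {"raw": [], "parsed": [], "enriched": []}


def produce(topic, record):
    topics[topic].append(json.dumps(record))


def consume(topic):
    return [json.loads(msg) for msg in topics[topic]]


def parse_job():
    """Job 1: read raw events, drop malformed ones, normalize types."""
    for rec in consume("raw"):
        if "user" in rec and "amount" in rec:
            produce("parsed", {"user": rec["user"], "amount": float(rec["amount"])})


def enrich_job():
    """Job 2: read job 1's output and add a derived field."""
    for rec in consume("parsed"):
        rec["large"] = rec["amount"] >= 100
        produce("enriched", rec)


serving_store = {}  # stand-in for Cassandra, Elasticsearch, or MongoDB


def sink_job():
    """Final job: write the enriched records into the serving store, keyed by user."""
    for rec in consume("enriched"):
        serving_store.setdefault(rec["user"], []).append(rec)


for raw in [{"user": "a", "amount": "150"}, {"user": "b"}, {"user": "a", "amount": "20"}]:
    produce("raw", raw)

for job in (parse_job, enrich_job, sink_job):
    job()

print(serving_store)
```

Every stage depends on the output format of the stage before it. Adding a field or changing a rule can mean touching several jobs and the intermediate topics between them.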

Shortcomings of Lambda Architectures

Being a data practitioner myself, I recognize the value the Lambda architecture offers by allowing data processing in real time. But it isn't an ideal architecture, from my perspective, due to several shortcomings:

- Maintaining two different processing paths, one via the batch system and another via the real-time streaming system, is inherently difficult. If you ship new code functionality to the streaming software but fail to make the necessary equivalent change to the batch software, you could get erroneous results.

- If you are an application developer or data scientist who wants to make changes to your streaming or batch pipeline, you have to either learn how to operate and modify the pipeline, or you have to wait for someone else to make the changes on your behalf. The former option requires you to pick up data engineering tasks and detracts from your primary role, while the latter forces you into a holding pattern waiting on the pipeline team for resolution.

- Most of the data transformation happens as new data enters the system at write time, whereas the serving layer is a simpler key-value lookup that does not handle complex transformations. This complicates the job of the application developer because she/he cannot easily apply new transformations retroactively on pre-existing data.
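The first shortcoming, drift between the two processing paths, can be shown in a few lines. The business rule, the order records, and both functions below are hypothetical. They are meant to show how one rule written twice can silently diverge, not to model any particular system.

```python
# One business rule ("total revenue, excluding refunds") implemented twice.
# The batch path was later updated to also exclude test accounts;
# the matching change never reached the streaming path.

orders = [
    {"user": "alice", "amount": 50, "refund": False, "test": False},
    {"user": "qa-bot", "amount": 999, "refund": False, "test": True},
    {"user": "alice", "amount": 20, "refund": True, "test": False},
]


def batch_revenue(rows):
    # Updated rule: skip refunds and test accounts.
    return sum(r["amount"] for r in rows if not r["refund"] and not r["test"])


def streaming_revenue(rows):
    # Stale rule: still counts test accounts.
    total = 0
    for r in rows:  # one event at a time, as a stream processor would see them
        if not r["refund"]:
            total += r["amount"]
    return total


print(batch_revenue(orders), streaming_revenue(orders))
```

Both paths run without errors, but a serving layer that merges their outputs would report different numbers depending on which path covered a given time range.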

The biggest advantage of the Lambda architecture is that data processing occurs when new data arrives in the system, but ironically this is its biggest weakness as well. Most processing in the Lambda architecture happens in the pipeline and not at query time. As most of the complex business logic is tied to the pipeline software, the application developer is unable to make quick changes to the application and has limited flexibility in the ways he or she can use the data. Having to maintain a pipeline just slows you down.
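Here is a small sketch of why write-time processing limits later use of the data. The events and field names are hypothetical. `country_counts` plays the role of a pre-materialized result produced by a pipeline. `query_on_demand` plays the role of a query that runs over retained raw data at read time.

```python
from collections import Counter

raw_events = [
    {"country": "US", "device": "ios"},
    {"country": "US", "device": "web"},
    {"country": "DE", "device": "ios"},
]

# Write-time approach: the pipeline materializes only the aggregate it was built for.
# The serving layer then holds this result, not the raw events.
country_counts = Counter(e["country"] for e in raw_events)


def query_on_demand(events, field):
    """Read-time approach: group the retained raw events by any field."""
    return Counter(e[field] for e in events)


# A new question ("events per device?") cannot be answered from country_counts,
# because the device dimension was never materialized. Answering it there would
# require changing the pipeline and reprocessing history.
print(query_on_demand(raw_events, "device"))
```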

ALT: Real-Time Analytics Without Pipelines

The ALT architecture addresses these shortcomings of Lambda architectures. The key component of ALT is a high-performance serving layer that serves complex queries, and not just key-value lookups. The existence of this serving layer obviates the need for complex data pipelines.

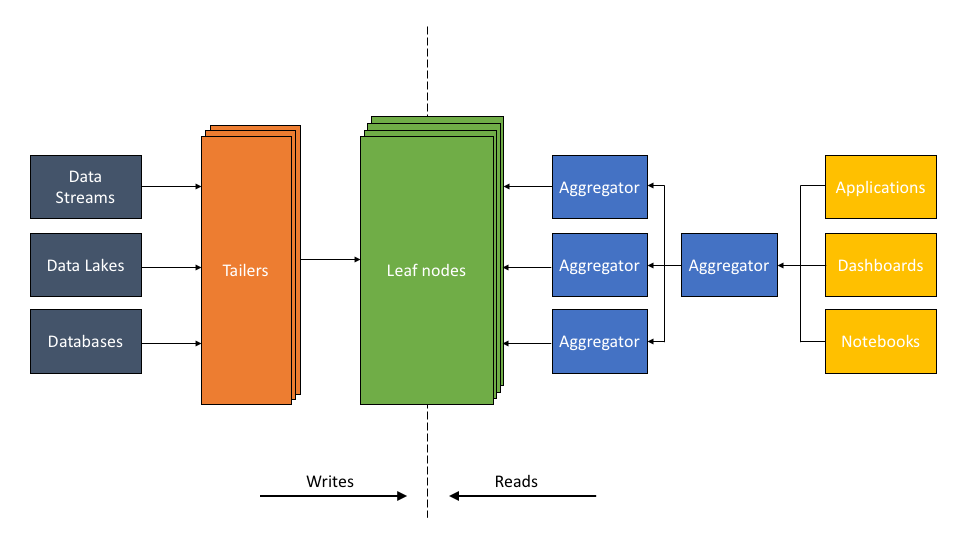

The ALT architecture consists of three components:

- The Tailer pulls new incoming data from a static or streaming source into an indexing engine. Its job is to fetch from all data sources, be it a data lake, like S3, or a dynamic source, like Kafka or Kinesis.

- The Leaf is a powerful indexing engine. It indexes all data as and when it arrives via the Tailer. The indexing component builds multiple types of indexes—inverted, columnar, document, geo, and many others—on the fields of a data set. The goal of indexing is to make any query on any data field fast.

- The scalable Aggregator tier is designed to deliver low-latency aggregations, be it columnar aggregations, joins, relevance sorting, or grouping. The Aggregators leverage indexing so efficiently that complex logic typically executed by pipeline software in other architectures can be executed on the fly as part of the query.
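The three roles above can be sketched as a toy, single-process Python model. This is not Rockset's implementation, and all class and method names are hypothetical. The sketch assumes flat documents with hashable scalar values. A Python list stands in for the source, and each Leaf builds only an inverted index keyed on (field, type, value) plus a simple columnar map. The Aggregator scatters a filtered sum to every Leaf and merges the partial results.

```python
from collections import defaultdict


class Tailer:
    """Pulls new records from a source, remembering its position (offset)."""

    def __init__(self, source):
        self.source = source  # list-like log standing in for Kafka, Kinesis, S3, ...
        self.offset = 0

    def poll(self):
        batch = self.source[self.offset:]
        self.offset = len(self.source)
        return batch


class Leaf:
    """Indexes every field of every document as it arrives."""

    def __init__(self):
        self.docs = []                     # document store: position = doc id
        self.inverted = defaultdict(set)   # (field, type name, value) -> doc ids
        self.columns = defaultdict(dict)   # field -> {doc id: value}

    def index(self, doc):
        doc_id = len(self.docs)
        self.docs.append(doc)
        for field, value in doc.items():
            self.inverted[(field, type(value).__name__, value)].add(doc_id)
            self.columns[field][doc_id] = value

    def sum_where(self, filter_field, filter_value, agg_field):
        """Partial aggregate: inverted index finds matches, column supplies values."""
        key = (filter_field, type(filter_value).__name__, filter_value)
        col = self.columns[agg_field]
        return sum(col[d] for d in self.inverted.get(key, ()) if d in col)


class Aggregator:
    """Scatters a query to all leaves and merges their partial results."""

    def __init__(self, leaves):
        self.leaves = leaves

    def sum_where(self, filter_field, filter_value, agg_field):
        return sum(leaf.sum_where(filter_field, filter_value, agg_field)
                   for leaf in self.leaves)


log = [{"city": "SF", "sales": 10}, {"city": "NY", "sales": 5}, {"city": "SF", "sales": 7}]
tailer = Tailer(log)
leaves = [Leaf(), Leaf()]
for i, doc in enumerate(tailer.poll()):
    leaves[i % len(leaves)].index(doc)  # naive round-robin sharding across leaves

agg = Aggregator(leaves)
print(agg.sum_where("city", "SF", "sales"))
```

The filter and the aggregation are both computed at query time from the indexes. No pipeline stage had to precompute "sales per city" ahead of time.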

Advantages of ALT

The ALT architecture enables the app developer or data scientist to run low-latency queries on raw data sets without any prior transformation. A large portion of the data transformation process can occur as part of the query itself. How is this possible in the ALT architecture?

- Indexing is critical to making queries fast. The Leaves maintain a variety of indexes concurrently, so that data can be quickly accessed regardless of the type of query—aggregation, key-value, time series, or search. Every document and field is indexed, including both value and type of each field, resulting in fast query performance that allows significantly more complex data processing to be inserted into queries.

- Queries are distributed across a scalable Aggregator tier. The ability to scale the number of Aggregators, which provide compute and memory resources, allows compute power to be concentrated on any complex processing executed on the fly.

- The Tailer, Leaf, and Aggregator run as discrete microservices in disaggregated fashion. Each Tailer, Leaf, or Aggregator tier can be independently scaled up and down as needed. The system scales Tailers when there is more data to ingest, scales Leaves when data size grows, and scales Aggregators when the number or complexity of queries increases. This independent scalability allows the system to bring significant resources to bear on complex queries when needed, while making it cost-effective to do so.
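Here is a minimal sketch of the independent-scaling idea. Each tier is sized from its own load signal. The per-instance capacities are made-up numbers chosen for illustration, and the `plan` function is hypothetical, not an actual autoscaling policy.

```python
import math
from dataclasses import dataclass


@dataclass
class Load:
    ingest_events_per_sec: float  # drives the Tailer count
    data_gb: float                # drives the Leaf count
    queries_per_sec: float        # drives the Aggregator count


# Hypothetical per-instance capacities; real values depend on hardware and workload.
TAILER_EVENTS_PER_SEC = 50_000
LEAF_GB = 500
AGGREGATOR_QPS = 200


def plan(load: Load) -> dict:
    """Size each tier from its own signal, independently of the other tiers."""
    return {
        "tailers": max(1, math.ceil(load.ingest_events_per_sec / TAILER_EVENTS_PER_SEC)),
        "leaves": max(1, math.ceil(load.data_gb / LEAF_GB)),
        "aggregators": max(1, math.ceil(load.queries_per_sec / AGGREGATOR_QPS)),
    }


print(plan(Load(120_000, 800, 50)))
print(plan(Load(120_000, 800, 1_000)))  # query spike: only aggregators grow
```

In a tightly coupled design, the query spike in the second call would force the whole cluster to grow. Here it only adds Aggregators.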

The most significant difference is that the Lambda architecture performs data transformations up front so that results are pre-materialized, while the ALT architecture allows for query on demand with on-the-fly transformations.

Why ALT Makes Sense Today

While not as widely known as the Lambda architecture, the ALT architecture has been in existence for almost a decade, employed mostly on high-volume systems.

- Facebook’s Multifeed architecture has been using the ALT methodology since 2010, backed by the open-source RocksDB engine, which allows large data sets to be indexed efficiently.

- LinkedIn’s FollowFeed was redesigned in 2016 to use the ALT architecture. Their previous architecture, like the Lambda architecture discussed above, used a pre-materialization approach, also called fan-out-on-write, where results were precomputed and made available for simple lookup queries. LinkedIn's new ALT architecture uses a query-on-demand, or fan-out-on-read, model with RocksDB indexing instead of Lucene indexing. Much of the computation is done on the fly, giving developers greater speed and flexibility.

- Rockset uses RocksDB as a foundational data store and implements the ALT architecture (see white paper) in a cloud service.
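The fan-out-on-write versus fan-out-on-read distinction in the FollowFeed example can be illustrated with a toy feed. This is not LinkedIn's code, and every name in it is hypothetical. For brevity, the read path scans a list of posts. A real system would query an index on author instead.

```python
from collections import defaultdict

follows = {"alice": ["bob", "carol"], "dave": ["bob"]}  # follower -> followees
posts = []                                             # (timestamp, author, text)
inboxes = defaultdict(list)                            # fan-out-on-write state


def followers_of(author):
    return [user for user, followees in follows.items() if author in followees]


def publish(ts, author, text):
    posts.append((ts, author, text))
    # Fan-out-on-write: copy the post into every follower's precomputed feed.
    for follower in followers_of(author):
        inboxes[follower].append((ts, author, text))


def feed_on_write(user, limit=10):
    # Read path is a simple lookup of the precomputed feed, newest first.
    return sorted(inboxes[user], reverse=True)[:limit]


def feed_on_read(user, limit=10):
    # Fan-out-on-read: build the feed at query time from the raw posts.
    followees = set(follows.get(user, []))
    return sorted((p for p in posts if p[1] in followees), reverse=True)[:limit]


publish(1, "bob", "hi")
publish(2, "carol", "yo")
publish(3, "bob", "again")

follows["alice"] = ["bob"]     # alice unfollows carol
print(feed_on_read("alice"))   # reflects the change immediately
print(feed_on_write("alice"))  # still holds carol's post until the inbox is rebuilt
```

The on-read path costs more work per query, which is why fast indexes matter in this model. In exchange, changes to the follow graph or to ranking logic apply immediately, without a backfill.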

The ALT architecture clearly has the performance, scale, and efficiency to handle real-time use cases at some of the largest online companies. Why, then, has it not been more widely used until recently? The short answer is that indexing software has traditionally been costly, and not commercially viable, when data sizes are large. That kept many smaller organizations from pursuing an ALT, query-on-demand approach in the past. But the current state of technology, namely the combination of powerful indexing software built on open-source RocksDB and favorable cloud economics, has made ALT not only commercially feasible today but also an elegant architecture for real-time data processing and analytics.

Learn more about Rockset's architecture in this 30-minute whiteboard video session by Rockset CTO and Co-founder Dhruba Borthakur.

Embedded content: https://youtu.be/msW8nh5TTwQ

Rockset is the leading real-time analytics platform built for the cloud, delivering fast analytics on real-time data with surprising efficiency. Learn more at rockset.com.