Scaling Our SaaS Sales Training Platform with Real-Time Analytics from Rockset

January 9, 2023

Modern Snack-Sized Sales Training

At ConveYour, we provide automated sales training via the cloud. Our all-in-one SaaS platform brings a fresh approach to hiring and onboarding new sales recruits that maximizes training and retention.

High sales staff churn is wasteful and bad for the bottom line. However, it can be minimized with personalized training that is delivered continuously in bite-sized portions. By tailoring curricula for every sales recruit’s needs and attention spans, we maximize engagement and reduce training time so they can hit the ground running.

Such real-time personalization requires a data infrastructure that can instantly ingest and query massive amounts of user data. And as our customers and data volumes grew, our original data infrastructure couldn’t keep up.

It wasn't until we discovered a real-time analytics database called Rockset that we could finally aggregate millions of event records in under a second, and that our customers could work with actual time-stamped data rather than out-of-date information too stale to be useful in sales training.

Our Business Needs: Scalability, Concurrency and Low Ops

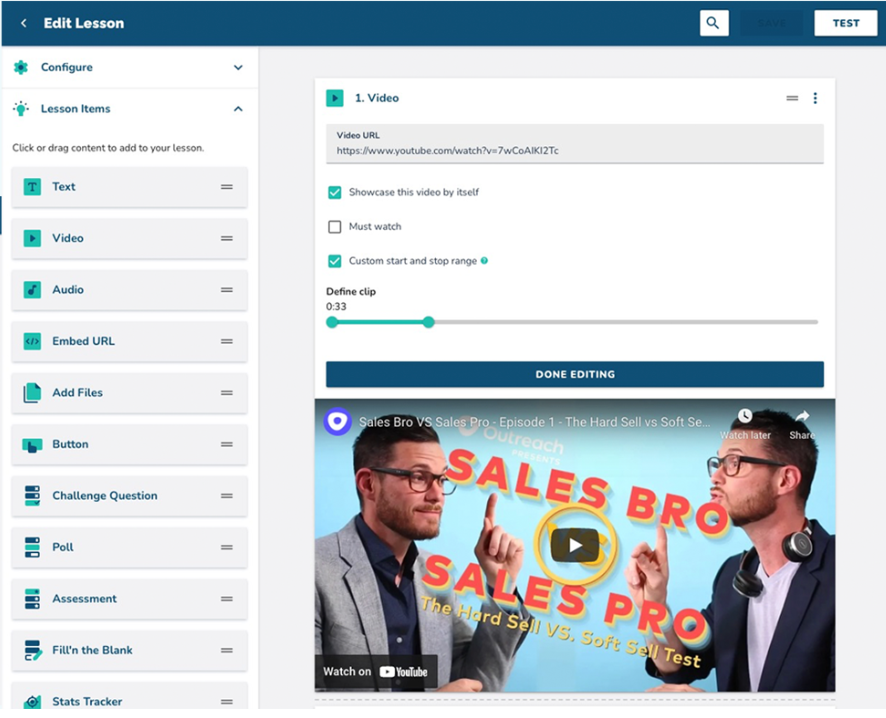

Built on the principles of microlearning, ConveYour delivers short, convenient lessons and quizzes to sales recruits via text messages, while allowing our customers to monitor their progress in detail using the internal dashboard shown above.

We know how far each recruit has gotten in a training video, down to the 15-second segment. And we know which questions they got right and wrong on the latest quiz, and can automatically assign more or fewer lessons based on that.

More than 100,000 sales reps have been trained via ConveYour. Our microlearning approach reduces trainee boredom, boosts learning outcomes and slashes staff churn. These are wins for any company, but are especially important for direct sales-driven firms that constantly hire new reps, many of them fresh graduates or new to sales.

Scale has always been our number one issue. We send out millions of text messages to sales reps every year. And we’re not just monitoring the progress of sales recruits – we track every single interaction they have with our platform.

For example, one customer hires nearly 8,000 sales reps a year. Recently, half of them went through a compliance training program deployed and managed through ConveYour. Tracking an individual rep’s progress through all 55 lessons creates about 500 data points. Multiply that by 4,000 reps, and you get around 2 million pieces of event data. And that’s just one program for one customer.
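The figures above can be sanity-checked with a few lines of Python. Only the 8,000 hires, the half who took the program, the 55 lessons and the roughly 2 million total come from the text; the per-rep and per-lesson numbers are our own back-of-the-envelope derivation, not separately reported measurements.

```python
# Back-of-the-envelope event volume for one compliance program.
reps_hired_per_year = 8_000
reps_in_program = reps_hired_per_year // 2   # "half of them" -> 4,000 reps
lessons = 55
total_events = 2_000_000                     # "around 2 million pieces of event data"

# Derived figures (our arithmetic, working backward from the stated total).
events_per_rep = total_events / reps_in_program   # 500 tracked interactions per rep
events_per_lesson = events_per_rep / lessons      # roughly 9 per rep per lesson

print(f"{reps_in_program} reps x {events_per_rep:.0f} events/rep = {total_events:,} events")
print(f"about {events_per_lesson:.1f} events per rep per lesson")
```

Nine or so events per lesson is plausible once every video segment watched and every quiz answer counts as its own event.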

To make insights available on demand to company sales managers, we had to run the analytics in batches first and then cache the results. Managing the various caches was extremely hard. Inevitably, some caches would go stale, leading to outdated results. That in turn led to calls from unhappy client sales managers whose reps’ compliance status was shown incorrectly.
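To make the staleness problem concrete, here is a toy Python model of the batch-then-cache pattern. The class names, the rep ID and the one-record-per-lesson schema are hypothetical and this is not ConveYour’s code; it only shows how a rep who finishes the final lesson after the last batch run still looks non-compliant in the cached result, while a live aggregation over the raw events is correct.

```python
from dataclasses import dataclass, field


@dataclass
class EventStore:
    """Hypothetical raw event data: one (rep_id, lesson_no) record per completed lesson."""
    completions: list[tuple[str, int]] = field(default_factory=list)

    def record(self, rep_id: str, lesson_no: int) -> None:
        self.completions.append((rep_id, lesson_no))

    def lessons_completed(self, rep_id: str) -> int:
        # Live aggregation: recomputed from the raw events on every call.
        return len({lesson for rep, lesson in self.completions if rep == rep_id})


class BatchCache:
    """Batch-then-cache: answers only change when refresh() (the batch job) runs."""

    def __init__(self, store: EventStore) -> None:
        self.store = store
        self.snapshot: dict[str, int] = {}

    def refresh(self) -> None:
        reps = {rep for rep, _ in self.store.completions}
        self.snapshot = {rep: self.store.lessons_completed(rep) for rep in reps}

    def lessons_completed(self, rep_id: str) -> int:
        return self.snapshot.get(rep_id, 0)


store = EventStore()
cache = BatchCache(store)
for lesson in range(1, 55):       # rep-42 finishes lessons 1 through 54
    store.record("rep-42", lesson)
cache.refresh()                   # scheduled batch run
store.record("rep-42", 55)        # final compliance lesson, completed after the batch

print("cached:", cache.lessons_completed("rep-42"))  # 54: looks non-compliant
print("live:  ", store.lessons_completed("rep-42"))  # 55: actually compliant
```

Refreshing more often narrows the window but never closes it, and every extra cache multiplies the refresh jobs to manage.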

As our customers grew, so did our scalability needs. This was a great problem to have. But it was still a big problem.

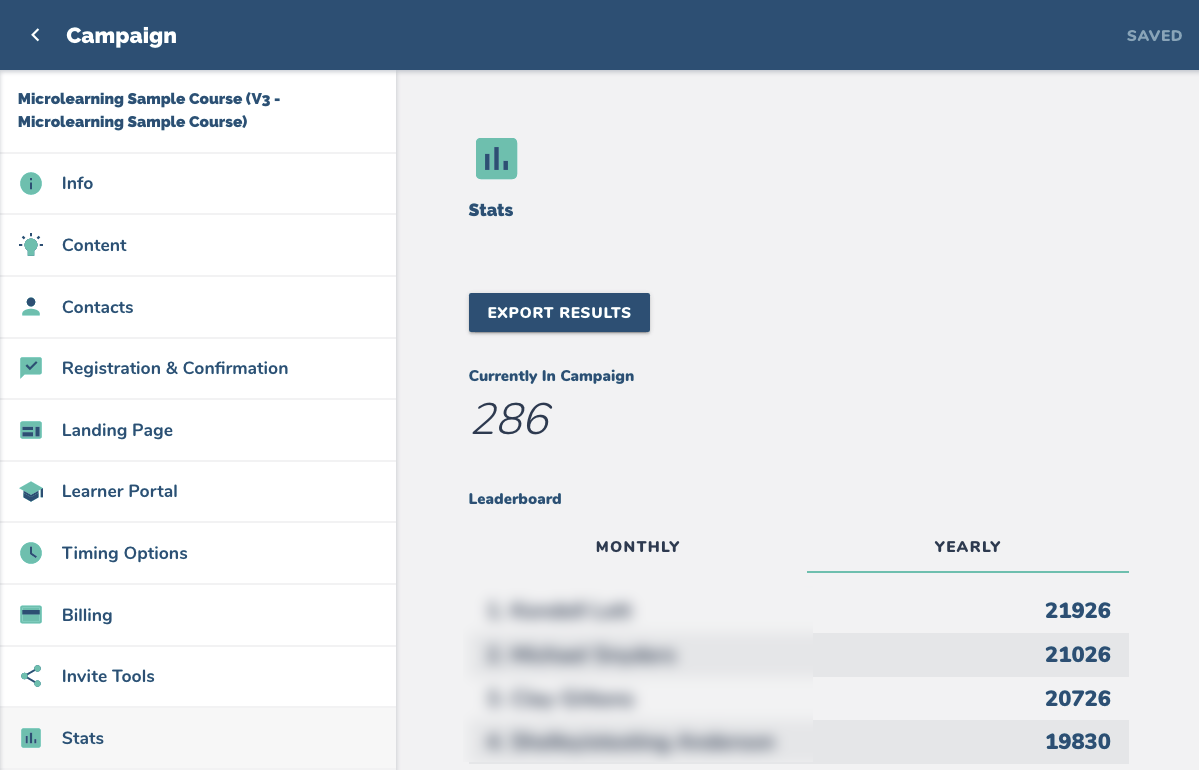

Other times, caching would not cut it. We also needed highly concurrent, instant queries. For instance, we built a CRM dashboard (above) that provided real-time aggregated performance results on 7,000 sales reps. This dashboard was used by hundreds of middle managers who couldn’t afford to wait for that information to arrive in a weekly or even daily report. Unfortunately, as the amount of data and the number of manager users grew, the dashboard’s responsiveness slowed.

Throwing more database servers at the problem could have helped. However, our usage is also very seasonal: busiest in the fall, when companies bring on crops of fresh graduates, and ebbing at other times of the year. So deploying permanent infrastructure to accommodate spiky demand would have been expensive and wasteful. We needed a data platform that could scale up and down as needed.

Our final issue is our size. ConveYour has a team of just five developers. That’s a deliberate choice. We would much rather keep the team small, agile and productive. But to unleash each developer’s inner 10x developer, we wanted to give them the best SaaS tools, which we did not yet have.

Technical Challenges

Our original data infrastructure was built around an on-premises MongoDB database that ingested and stored all user transaction data. Connected to it via an ETL pipeline was a MySQL database running in Google Cloud that served both our large, ongoing workhorse queries and the fast ad hoc queries on smaller datasets.

Neither database was cutting the mustard. Our “live” CRM dashboard was increasingly taking up to six seconds to return results, or it would simply time out. This had several causes. There was the large and growing amount of data we were collecting and having to analyze, as well as spikes in concurrent users, such as when managers checked their dashboards in the morning or at lunch.

However, the biggest reason was simply that MySQL is not designed for high-speed analytics. If we didn’t have the right indexes already built, or the SQL query wasn’t optimized, the MySQL query would inevitably drag or time out. Worse, it would bleed over and hurt the query performance of other customers and users.
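The dependence on pre-built indexes is easy to see in a query plan. The sketch below uses SQLite from Python’s standard library purely as a stand-in for MySQL, and the `events` table and `idx_events_rep` index are hypothetical. The same lookup changes from a full table scan to an index search once the right index exists; a team that must anticipate every such index for every new query is doing the gatekeeping described here.

```python
import sqlite3

# SQLite stands in for MySQL here; table and index names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, rep_id TEXT, lesson_no INTEGER)")
conn.executemany(
    "INSERT INTO events (rep_id, lesson_no) VALUES (?, ?)",
    [(f"rep-{r}", lesson) for r in range(1000) for lesson in range(1, 56)],
)

query = "SELECT COUNT(*) FROM events WHERE rep_id = ?"


def plan(sql: str) -> str:
    # EXPLAIN QUERY PLAN returns 4-column rows; the last column is the readable detail.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, ("rep-7",)).fetchall()
    return " | ".join(row[3] for row in rows)


plan_before = plan(query)  # a full scan of all 55,000 rows, e.g. "SCAN events"
conn.execute("CREATE INDEX idx_events_rep ON events (rep_id)")
plan_after = plan(query)   # an index lookup, e.g. "SEARCH events USING COVERING INDEX idx_events_rep ..."

print(plan_before)
print(plan_after)
```

The exact wording of the plan text varies between SQLite versions, but the switch from `SCAN` to a search using `idx_events_rep` does not.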

My team was spending an average of ten hours per week monitoring, managing and fixing SQL queries and indexes, just to avoid having the database crash.

It got so bad that any time I saw a new query hit MySQL, my blood pressure would shoot up.

Drawbacks of Alternative Solutions

We looked at many potential solutions. To scale, we thought about adding more MongoDB secondaries (read replicas), but decided it would be throwing money at the problem without solving it.

We also tried out Snowflake and liked some aspects of their solution. However, the one big hole I couldn’t fill was the lack of real-time data ingestion. We simply couldn’t afford to wait an hour for data to go from S3 into Snowflake.

We also looked at ClickHouse, but found too many tradeoffs, especially on the storage side. As an append-only data store, ClickHouse writes data immutably. Deleting or updating previously written data becomes a lengthy batch process. And from experience, we know we need to backfill events and remove contacts all the time. When we do, we don’t want those contacts to still show up in any report we run. Again, it’s not real-time analytics if you can’t ingest, delete and update data in real time.

We also tried but rejected Amazon Redshift for being ineffective with smaller datasets, and too labor-intensive in general.

Scaling with Rockset

Through YouTube, I learned about Rockset. It offers the best of both worlds: it can write data quickly, like MongoDB or another transactional database, but it is also really fast at complex queries.

We deployed Rockset in December 2021. It took just one week. While MongoDB remained our database of record, we began streaming data to both Rockset and MySQL and using both to serve up queries.

Our experience with Rockset has been incredible. First is its speed at data ingestion. Because Rockset is a mutable database, updating and backfilling data is super fast. Being able to delete and rewrite data in real-time matters a lot for me. If a contact gets removed and I do a JOIN immediately afterward, I don’t want that contact to show up in any reports.
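The contact-removal requirement can be shown in a few lines. This sketch uses SQLite only as a stand-in for any store that applies deletes in place; it is not Rockset’s API, and the schema and names are hypothetical. Once the contact row is deleted, the very next JOIN drops that contact’s events from the report, even though the event rows themselves are untouched.

```python
import sqlite3

# Hypothetical schema: contacts and their training events. SQLite is only a stand-in.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE contacts (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE events (contact_id INTEGER, lesson_no INTEGER);
    INSERT INTO contacts VALUES (1, 'Ana'), (2, 'Ben');
    INSERT INTO events VALUES (1, 1), (1, 2), (2, 1);
""")

# Per-contact lesson counts; the inner join drops events with no matching contact.
report = """
    SELECT c.name, COUNT(*) AS lessons
    FROM contacts c JOIN events e ON e.contact_id = c.id
    GROUP BY c.name ORDER BY c.name
"""

before = conn.execute(report).fetchall()          # [('Ana', 2), ('Ben', 1)]
conn.execute("DELETE FROM contacts WHERE id = 2")  # the contact is removed
after = conn.execute(report).fetchall()           # [('Ana', 2)]: Ben is gone immediately
print(before, after)
```

In a store where deletes are applied later by a background or batch process (the concern raised about ClickHouse above), a report run between the delete and that process could still list Ben.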

Rockset’s serverless model is also a huge boon. Rockset scales compute and storage independently and automatically, up or down, which reduces the IT burden on my small team. There’s just zero database maintenance and zero worries.

Rockset also makes my developers super productive, with its easy-to-use UI, Write API and SQL support. And features like Converged Index and automatic query optimization eliminate the need to spend valuable engineering time on query performance. Every query runs fast out of the box. Our average query latency has shrunk from six seconds to 300 milliseconds. And that’s true for small datasets and large ones, up to 15 million events in one of our collections. We’ve cut the number of query errors and timed-out queries to zero.
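Why does indexing everything remove the index-tuning chore? The toy Python sketch below illustrates the general idea behind an index-everything design such as Converged Index: every field of every document is kept in a row index, a column index and an inverted index. It is a simplified in-memory illustration under that assumption, with hypothetical documents, not a description of how Rockset actually stores data. Because every field is already indexed in every way, a new query finds a suitable access path without anyone having to build the “right” index first.

```python
from collections import defaultdict

# Hypothetical training events keyed by document id.
docs = {
    "e1": {"rep": "rep-1", "lesson": 3, "correct": True},
    "e2": {"rep": "rep-2", "lesson": 3, "correct": False},
    "e3": {"rep": "rep-1", "lesson": 4, "correct": True},
}

row_index = dict(docs)              # doc id -> whole document (fast single-record fetch)
column_index = defaultdict(dict)    # field -> {doc id: value} (fast scans and aggregates)
inverted_index = defaultdict(set)   # (field, value) -> doc ids (fast selective filters)

# Index every field of every document; no per-query index design is needed.
for doc_id, doc in docs.items():
    for fld, val in doc.items():
        column_index[fld][doc_id] = val
        inverted_index[(fld, val)].add(doc_id)

# A selective filter reads the inverted index; an aggregate reads a single column.
rep1_events = inverted_index[("rep", "rep-1")]
pct_correct = sum(column_index["correct"].values()) / len(docs)
print(sorted(rep1_events), round(pct_correct, 2))
```

The tradeoff in this kind of design is extra storage and write work in exchange for not having to predict queries in advance.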

I no longer worry that giving access to a new developer will crash the database for all users. Worst case scenario, a bad query will simply consume more RAM. But it will. Still. Just. Work. That’s a huge weight off my shoulders. And I don’t have to play database gatekeeper anymore.

Also, Rockset’s real-time performance means we no longer have to deal with batch analytics and stale caches. Now, we can aggregate 2 million event records in less than a second. Our customers can look at the actual time-stamped data, not some out-of-date derivative.

We also use Rockset for our internal reporting, ingesting and analyzing our virtual server usage with our hosting provider, Digital Ocean (watch this short video). Using a Cloudflare Worker, we regularly sync our Digital Ocean Droplets into a Rockset collection for easy reporting around cost and network topology. This is a much easier way to understand our usage and performance than using Digital Ocean’s native console.
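The post does not show the Worker itself. As a rough illustration only, the Python sketch below covers the transform step. It flattens droplet objects into report-friendly documents, using field names from DigitalOcean’s public API v2 droplet object (`id`, `name`, `status`, `region.slug`, `size_slug`, `size.price_monthly`, `networks.v4`). It then assembles, without sending, a request for Rockset’s add-documents REST endpoint. The region host, workspace (`infra`), collection (`droplets`) and API key placeholder are hypothetical. The endpoint path reflects our understanding of Rockset’s REST API and should be checked against its documentation. A real Cloudflare Worker would run JavaScript or TypeScript rather than Python.

```python
import json

# Hypothetical settings: substitute your own Rockset region host, workspace and collection.
ROCKSET_HOST = "https://api.usw2a1.rockset.com"
WORKSPACE = "infra"
COLLECTION = "droplets"


def to_rockset_doc(droplet: dict) -> dict:
    """Flatten one droplet object (as listed by DigitalOcean's GET /v2/droplets) into a document."""
    public_ips = [
        net["ip_address"]
        for net in droplet.get("networks", {}).get("v4", [])
        if net.get("type") == "public"
    ]
    return {
        # Assumption: reusing the droplet id as _id makes each sync replace the earlier copy.
        "_id": str(droplet["id"]),
        "name": droplet["name"],
        "status": droplet["status"],
        "region": droplet["region"]["slug"],
        "size": droplet["size_slug"],
        "price_monthly": droplet["size"]["price_monthly"],
        "public_ips": public_ips,
    }


def build_write_request(droplets: list[dict]) -> tuple[str, dict, str]:
    """Assemble (url, headers, body) for the add-documents call; nothing is sent here."""
    url = f"{ROCKSET_HOST}/v1/orgs/self/ws/{WORKSPACE}/collections/{COLLECTION}/docs"
    headers = {"Authorization": "ApiKey <ROCKSET_API_KEY>", "Content-Type": "application/json"}
    body = json.dumps({"data": [to_rockset_doc(d) for d in droplets]})
    return url, headers, body


# Usage with a trimmed, made-up droplet record.
sample = {
    "id": 123, "name": "web-1", "status": "active",
    "region": {"slug": "nyc3"}, "size_slug": "s-1vcpu-1gb",
    "size": {"price_monthly": 6},
    "networks": {"v4": [{"ip_address": "203.0.113.10", "type": "public"},
                        {"ip_address": "10.0.0.5", "type": "private"}]},
}
url, headers, body = build_write_request([sample])
print(url)
print(body)
```

Keying documents on the droplet id is the design choice that matters: a scheduled sync can resend every droplet each time without piling up duplicates, assuming the write replaces any document whose `_id` already exists.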

Our experience with Rockset has been so good that we are now in the midst of a full migration from MySQL to Rockset. Older data is being backfilled from MySQL into Rockset, while all endpoints and queries in MySQL are slowly but surely being shifted over to Rockset.

If you have a growing technology-based business like ours and need easy-to-manage real-time analytics with instant scalability that makes your developers super-productive, then I recommend you check out Rockset.