Space-Time Tradeoff: Examining Snowflake's Compute Cost

March 5, 2021

Imagine you had a big book, and you were looking for the section that talks about dinosaurs. Would you read through every page or use the index? The index will save you a lot of time and energy. Now imagine that it’s a big book with a lot of words in really tiny print, and you need to find all the sections that talk about animals. Using the index will save you a LOT of time and energy. Extending this analogy to the world of data analytics: “time” is query latency and “energy” is compute cost.

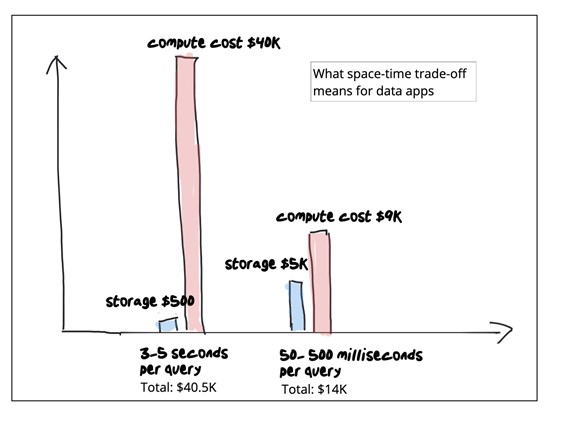

What has this got to do with Snowflake? I’m personally a huge fan of Snowflake - it’s massively scalable, it’s easy to use and if you’re making the right space-time tradeoff it’s very affordable. However if you make the wrong space-time tradeoff, you’ll find yourself throwing more and more compute at it while your team continues to complain about latency. But once you understand how it really works, you can reduce your Snowflake compute cost and get better query performance for certain use cases. I discuss Snowflake here, but you can generalize this to most warehouses.

Understanding the space-time tradeoff in data analytics

In computer science, a space-time tradeoff is a way of solving a problem or calculation in less time by using more storage space, or by solving a problem in very little space by spending a long time.

How Snowflake handles space-time tradeoff

When data is loaded into Snowflake, it reorganizes that data into its compressed, columnar format and stores it in cloud storage - this means it is highly optimized for space which directly translates to minimizing your storage footprint. The column design keeps data closer together, but requires computationally intensive scans to satisfy the query. This is an acceptable trade-off for a system heavily optimized for storage. It is budget-friendly for analysts running occasional queries, but compute becomes prohibitively expensive as query volume increases due to programmatic access by high concurrency applications.

How Rockset handles space-time tradeoff

On the other hand, Rockset is built for real-time analytics. It is a real-time indexing database designed for millisecond-latency search, aggregations and joins so it indexes every field in a Converged Index™ which combines a row index, column index and search index - this means it is highly optimized for time which directly translates to doing less work and reducing compute cost. This translates to a bigger storage footprint in exchange for faster queries and lesser compute. Rockset is not the best parking lot if you’re doing occasional queries on a PB-scale dataset. But it is best suited for serving high concurrency applications in the sub-100TB range because it makes an entirely different space-time tradeoff, resulting in faster performance at significantly lower compute costs.

Achieving lower query latency at lower compute cost

Snowflake uses columnar formats and cloud storage to optimize for storage cost. However for each query it needs to scan your data. To accelerate performance, query execution is split among multiple processors that scan large portions of your dataset in parallel. To execute queries faster, you can exploit locality using micropartitioning and clustering. Use parallelism to add more compute until at some point you hit the upper bound for performance. When each query is computationally intensive, and you start running many queries per second, the total compute cost per month explodes on you.

In stark contrast, Rockset indexes all fields, including nested fields, in a Converged Index™ which combines an inverted index, a columnar index and a row index. Given that each field is indexed, you can expect space amplification which is optimized using advanced storage architecture and compaction strategies. And data is served from hot storage ie NVMe SSD so your storage cost is higher. This is a good trade-off, because applications are a lot more compute-intensive. As of today, Rockset does not scan any faster than Snowflake. It simply tries really hard to avoid full scans. Our distributed SQL query engine uses multiple indexes in parallel, exploiting selective query patterns and accelerating aggregations over large numbers of records, to achieve millisecond latencies at significantly lower compute costs. The needle-in-a-haystack type queries go straight to the inverted index and completely avoid scans. With each WHERE clause in your query, Rockset is able to use the inverted index to execute faster and use lesser compute (which is the exact opposite of a warehouse).

One example of the type of optimizations required to achieve sub-second latencies: query parsing, optimizing, planning, scheduling takes about 1.2 ms on Rockset -- in most warehouses the query startup cost runs in 100s of milliseconds.

Achieving lower data latency at lower compute cost

A cloud data warehouse is highly optimized for batch inserts. Updates to an existing record typically result in a copy-on-write on large swaths of data. New writes are accumulated and when the batch is full, that batch must be compressed and published before it is queryable.

Continuous Data Ingestion in Minutes vs. Milliseconds

Snowpipe is Snowflake’s continuous data ingestion service. Snowpipe loads data within minutes after files are added to a stage and submitted for ingestion. In short, Snowpipe provides a “pipeline” for loading fresh data in micro-batches, but it typically takes many minutes and incurs very high compute cost. For example at 4K writes per second, this approach results in hundreds of dollars of compute per hour.

In contrast, Rockset is a fully mutable index which uses RocksDB LSM trees and a lockless protocol to make writes visible to existing queries as soon as they happen. Remote compaction speeds up the indexing of data even when dealing with bursty writes. The LSM index compresses data while allowing for inserts, updates and deletes of individual records so that new data is queryable within a second of it being generated. This mutability means that it is easy to stay in sync with OLTP databases or data streams. It means new data is queryable within a second of it being generated. This approach reduces both data latency and compute cost for real-time updates. For example, at 4K writes per second, new data is queryable in 350 milliseconds, and uses roughly 1/10th of the compute compared to Snowpipe.

Friends don’t let friends build apps on warehouses

Embedded content: https://youtu.be/-vaE0uB6eqc

Cloud data warehouses like Snowflake are purpose-built for massive scale batch analytics ie large scale aggregations and joins on PBs of historical data. Rockset is built for serving applications with milisecond-latency search, aggregations and joins. Snowflake is optimized for storage efficiency while Rockset is optimized for compute efficiency. One is great for batch analytics. The other is great for real-time analytics. Data apps have selective queries. They have low latency, high concurrency requirements. They are always on. If your warehouse compute cost is exploding, ask yourself if you’re making the right space-time tradeoff for your particular use case.