Stream Processing vs. Real-Time Analytics Databases

March 28, 2023

This is part two in Rockset’s Making Sense of Real-Time Analytics on Streaming Data series. In part 1, we covered the technology landscape for real-time analytics on streaming data. In this post, we’ll explore the differences between real-time analytics databases and stream processing frameworks. In the coming weeks we’ll publish the following:

- Part 3 will offer recommendations for operationalizing streaming data, including a few sample architectures

Unless you’re already familiar with basic streaming data concepts, please check out part 1 because we’re going to assume some level of working knowledge. With that, let’s dive in.

Differing Paradigms

Stream processing systems and real-time analytics (RTA) databases are both exploding in popularity. However, it’s difficult to talk about their differences in terms of “features”, because you can use either for almost any relevant use case. It’s easier to talk about the different approaches they take. This blog will clarify some conceptual differences, provide an overview of popular tools, and offer a framework for deciding which tools are best suited for specific technical requirements.

Let’s start with a quick summary of both stream processing and RTA databases. Stream processing systems allow you to aggregate, filter, join, and analyze streaming data. “Streams”, as opposed to tables in a relational database context, are the first-class citizens in stream processing. Stream processing approximates something like a continuous query; each event that passes through the system is analyzed according to pre-defined criteria and can be consumed by other systems. Stream processing systems are rarely used as persistent storage. They’re a “process”, not a “store”, which brings us to…

Real-time analytics databases are frequently used for persistent storage (though there are exceptions) and have a bounded context rather than an unbounded context. These databases can ingest streaming events, index the data, and enable millisecond-latency analytical queries against that data. Real-time analytics databases have a lot of overlap with stream processing; they both enable you to aggregate, filter, join, and analyze high volumes of streaming data for use cases like anomaly detection, personalization, logistics, and more. The biggest difference between RTA databases and stream processing tools is that databases provide persistent storage, bounded queries, and indexing capabilities.

So do you need just one? Both? Let’s get into the details.

Stream Processing…How Does It Work?

Stream processing tools manipulate streaming data as it flows through a streaming data platform (Kafka being one of the most popular options, but there are others). This processing happens incrementally, as the streaming data arrives.

Stream processing systems typically employ a directed acyclic graph (DAG), with nodes that are responsible for different functions, such as aggregations, filtering, and joins. The nodes work in a daisy-chain fashion: data arrives at one node, is processed, and is then passed to the next node. This continues until the data has passed through every step of the predefined graph, referred to as a topology. Nodes can live on different servers, connected by a network, as a way to scale horizontally to handle massive volumes of data. This is what’s meant by a “continuous query”: data comes in, it’s transformed, and results are generated continuously. When the processing is complete, other applications or systems can subscribe to the processed stream and use it for analytics or within an application or service. One additional note: while many stream processing platforms support declarative languages like SQL, they also support Java, Scala, or Python, which are appropriate for advanced use cases like machine learning.
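To make the topology idea concrete, here is a minimal single-process sketch in Python. It is not the API of any real framework: the `Topology` class, the node names, and the sensor event fields are all hypothetical, and a production system would distribute the nodes across servers rather than call them recursively.

```python
from typing import Callable, Dict, List, Optional, Sequence

# A node takes one event and returns a transformed event, or None to drop it.
Node = Callable[[dict], Optional[dict]]


class Topology:
    """Toy DAG: named nodes plus the edges that connect them (illustrative only)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, List[str]] = {}
        self.sinks: Dict[str, List[dict]] = {}  # output of terminal nodes

    def add_node(self, name: str, fn: Node, downstream: Sequence[str] = ()) -> None:
        self.nodes[name] = fn
        self.edges[name] = list(downstream)

    def process(self, name: str, event: dict) -> None:
        """Run one event through `name`, then hand the result to downstream nodes."""
        result = self.nodes[name](event)
        if result is None:
            return  # the node filtered this event out
        if not self.edges[name]:
            self.sinks.setdefault(name, []).append(result)
        for next_name in self.edges[name]:
            self.process(next_name, result)


def build_sensor_topology() -> Topology:
    """parse -> drop_invalid -> to_fahrenheit (hypothetical example nodes)."""
    topo = Topology()
    topo.add_node("parse", lambda e: {**e, "temp_c": float(e["temp_c"])}, ["drop_invalid"])
    topo.add_node("drop_invalid", lambda e: e if e["temp_c"] > -50 else None, ["to_fahrenheit"])
    topo.add_node("to_fahrenheit", lambda e: {**e, "temp_f": e["temp_c"] * 9 / 5 + 32})
    return topo
```

Each event is pushed into the first node as it arrives, and whatever reaches the last node is what a downstream subscriber would consume.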

Stateful Or Not?

Stream processing operations can either be stateless or stateful. Stateless stream processing is far simpler. A stateless process doesn’t depend contextually on anything that came before it. Imagine an event containing purchase information. If you have a stream processor filtering out any purchase below $50, that operation is independent of other events, and therefore stateless.
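The $50 filter can be sketched in a few lines of Python. The function name and the `amount` field are assumptions made for illustration; the point is only that the decision for each event uses nothing but that event.

```python
from typing import Dict, Iterable, Iterator


def filter_small_purchases(
    purchases: Iterable[Dict[str, float]], minimum: float = 50.0
) -> Iterator[Dict[str, float]]:
    """Stateless filter: drop purchases below `minimum`, keep the rest.

    No variable survives from one event to the next, so events can be
    processed in any order, or on any worker, with the same result.
    """
    for purchase in purchases:
        if purchase["amount"] >= minimum:
            yield purchase
```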

Stateful stream processing takes into account the history of the data. How each incoming item is processed depends not only on its own content, but also on the content of one or more previous items. State is required for operations like running totals as well as more complex operations that join data from one stream to another.

For example, consider an application that processes a stream of sensor data. Let's say that the application needs to compute the average temperature for each sensor over a specific time window. In this case, the stateful processing logic would need to maintain a running total of the temperature readings for each sensor, as well as a count of the number of readings that have been processed for each sensor. This information would be used to compute the average temperature for each sensor over the specified time period or window.
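The sensor example might look roughly like the following Python sketch. It assumes tumbling (non-overlapping) windows, integer timestamps in seconds, and hypothetical field names `sensor`, `ts`, and `temp`. A plain dictionary stands in for the state store, and averages are returned once the input is exhausted, whereas a real stream processor would emit each window's result when that window closes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_averages(
    readings: Iterable[dict], window_seconds: int = 60
) -> Dict[Tuple[str, int], float]:
    """Average temperature per (sensor, window_start)."""
    # State: (sensor, window_start) -> [running_total, count]
    state = defaultdict(lambda: [0.0, 0])
    for reading in readings:
        # Align the timestamp to the start of its window.
        window_start = reading["ts"] - reading["ts"] % window_seconds
        entry = state[(reading["sensor"], window_start)]
        entry[0] += reading["temp"]  # running total
        entry[1] += 1                # count of readings
    return {key: total / count for key, (total, count) in state.items()}
```

The running total and the count are exactly the two pieces of state described above; everything else is derived from them.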

These state designations are related to the “continuous query” concept that we discussed in the introduction. When you query a database, you’re querying the current state of its contents. In stream processing, a continuous, stateful query requires maintaining state separately from the DAG, which is done by querying a state store, i.e. an embedded database within the framework. State stores can reside in memory, on disk, or in deep storage, and there is a latency / cost tradeoff for each.

Stateful stream processing is quite complex. Architectural details are beyond the scope of this blog, but here are four challenges inherent in stateful stream processing:

- Managing state is expensive: Maintaining and updating the state requires significant processing resources. The state must be updated for each incoming data item, and this can be difficult to do efficiently, especially for high-throughput data streams.

- It’s tough to handle out-of-order data: This is an absolute must for all stateful stream processing. If data arrives out of order, the state needs to be corrected and updated, which adds processing overhead.

- Fault tolerance takes work: Significant steps must be taken to ensure data is not lost or corrupted in the event of a failure. This requires robust mechanisms for checkpointing, state replication, and recovery.

- Debugging and testing are tricky: The complexity of the processing logic and stateful context can make reproducing and diagnosing errors in these systems difficult. Much of this is due to the distributed nature of stream processing systems: multiple components and multiple data sources make root cause analysis a challenge.
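To give a feel for the out-of-order problem in particular, here is a simplified Python sketch of one common approach: buffer events and release them in timestamp order once a "watermark" has passed them. The function name, the `ts` field, and the fixed `allowed_lateness` are assumptions for illustration, and real frameworks implement this idea with considerably more machinery.

```python
import heapq
from typing import Iterable, List, Tuple


def reorder_with_watermark(
    events: Iterable[dict], allowed_lateness: int = 5
) -> Tuple[List[dict], List[dict]]:
    """Return (events in timestamp order, events that arrived too late)."""
    heap: list = []   # min-heap of (ts, arrival_seq, event)
    max_ts = None     # highest timestamp seen so far
    ordered: List[dict] = []
    late: List[dict] = []
    for seq, event in enumerate(events):
        ts = event["ts"]
        # Anything older than the current watermark can no longer be placed in order.
        if max_ts is not None and ts < max_ts - allowed_lateness:
            late.append(event)
            continue
        max_ts = ts if max_ts is None else max(max_ts, ts)
        heapq.heappush(heap, (ts, seq, event))
        watermark = max_ts - allowed_lateness
        # Release every buffered event the watermark has passed.
        while heap and heap[0][0] <= watermark:
            ordered.append(heapq.heappop(heap)[2])
    # End of input: flush whatever is still buffered.
    while heap:
        ordered.append(heapq.heappop(heap)[2])
    return ordered, late
```

The tradeoff is visible even in this toy version: a larger `allowed_lateness` tolerates more disorder but holds events in the buffer (state) for longer before they are emitted.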

While stateless stream processing has value, the more interesting use cases require state. Dealing with state makes stream processing tools more difficult to work with than RTA databases.

Where Do I Start With Processing Tools?

In the past few years, the number of available stream processing systems has grown significantly. This blog will cover a few of the big players, both open source and fully managed, to give readers a sense of what’s available.

Apache Flink

Apache Flink is an open-source, distributed framework designed to perform real-time stream processing. It is a top-level project of the Apache Software Foundation and is written in Java and Scala. Flink is one of the more popular stream processing frameworks due to its flexibility, performance, and community (Lyft, Uber, and Alibaba are all users, and the open-source community for Flink is quite active). It supports a wide variety of data sources and programming languages, and - of course - supports stateful stream processing.

Flink uses a dataflow programming model that allows it to analyze streams as they are generated, rather than in batches. It relies on checkpoints to correctly process data even if a subset of nodes fails. This is possible because Flink is a distributed system, but beware that its architecture requires considerable expertise and operational upkeep to tune, maintain, and debug.

Apache Spark Streaming

Spark Streaming is another popular open-source stream processing framework, and is appropriate for high-complexity, high-volume use cases.

Unlike Flink, Spark Streaming uses a micro-batch processing model, where incoming data is collected into small batches at a fixed time interval and each batch is processed as a unit. This results in higher end-to-end latencies, because an event is not processed until its batch closes. As for fault tolerance, Spark Streaming uses a mechanism called "RDD lineage" to recover from failures, which can sometimes cause significant overhead in processing time. There’s support for SQL through the Spark SQL library, but it’s more limited than other stream processing libraries, so double check that it can support your use case. On the other hand, Spark Streaming has been around longer than other systems, which makes it easier to find best practices and even free, open-source code for common use cases.
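The micro-batch idea itself can be illustrated without Spark. The Python sketch below is not Spark's API: the function names, the `ts` field (integer milliseconds), and the 2-second interval are assumptions. It groups time-ordered events into fixed-interval batches and shows how long an event may wait before its batch is processed.

```python
from itertools import groupby
from typing import Iterable, Iterator, List, Tuple


def micro_batches(
    events: Iterable[dict], interval_ms: int = 2000
) -> Iterator[Tuple[int, List[dict]]]:
    """Yield (batch_start_ms, events) for events already ordered by `ts`."""
    for batch_index, group in groupby(events, key=lambda e: e["ts"] // interval_ms):
        yield batch_index * interval_ms, list(group)


def wait_before_processing(event: dict, interval_ms: int = 2000) -> int:
    """Milliseconds between an event's timestamp and the close of its batch."""
    batch_end = (event["ts"] // interval_ms + 1) * interval_ms
    return batch_end - event["ts"]
```

An event that lands at the very start of an interval waits almost the full interval before any processing begins, which is the source of the added latency relative to event-at-a-time processing.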

Confluent Cloud and ksqlDB

As of today, Confluent Cloud’s primary stream processing offering is ksqlDB, which combines KSQL’s familiar SQL-esque syntax with additional features such as connectors, a persistent query engine, windowing, and aggregation.

One important feature of ksqlDB is that it’s a fully-managed service, which makes it simpler to deploy and scale. Contrast this to Flink, which can be deployed in a variety of configurations, including as a standalone cluster, on YARN, or on Kubernetes (note that there are also fully-managed versions of Flink). ksqlDB supports a SQL-like query language, provides a range of built-in functions and operators, and can also be extended with custom user-defined functions (UDFs) and operators. ksqlDB is also tightly integrated with the Kafka ecosystem and is designed to work seamlessly with Kafka streams, topics, and brokers.

But Where Will My Data Live?

Real-time analytics (RTA) databases are categorically different from stream processing systems. They belong to a distinct and growing industry, and yet have some overlap in functionality. For an overview on what we mean by “RTA database”, check out this primer.

In the context of streaming data, RTA databases are used as a sink for streaming data. They are similarly useful for real-time analytics and data applications, but they serve up data when they’re queried, rather than continuously. When you ingest data into an RTA database, you have the option to configure ingest transformations, which can do things like filter, aggregate, and in some cases join data continuously. The data resides in a table, which you cannot “subscribe” to the same way you can with streams.

Besides the table vs. stream distinction, another important feature of RTA databases is their ability to index data; stream processing frameworks index very narrowly, while RTA databases have a large menu of options. Indexes are what allow RTA databases to serve millisecond-latency queries, and each type of index is optimized for a particular query pattern. The best RTA database for a given use case will often come down to indexing options. If you’re looking to execute incredibly fast aggregations on historical data, you’ll likely choose a column-oriented database with a primary index. Need to look up data on a single order? Choose a database with an inverted index. The point here is that every RTA database makes different indexing decisions. The best solution will depend on your query patterns and ingest requirements.
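A toy Python sketch shows why the two index types suit different query patterns. It is purely illustrative: the function names and order fields are hypothetical, and real databases use far more sophisticated on-disk structures.

```python
from collections import defaultdict
from typing import Dict, List


def build_column_store(rows: List[dict]) -> Dict[str, list]:
    """Column-oriented layout: one contiguous list of values per field."""
    columns = defaultdict(list)
    for row in rows:
        for field, value in row.items():
            columns[field].append(value)
    return dict(columns)


def build_inverted_index(rows: List[dict], field: str) -> Dict[object, List[int]]:
    """Inverted index: field value -> positions of the rows that contain it."""
    index = defaultdict(list)
    for position, row in enumerate(rows):
        index[row[field]].append(position)
    return dict(index)


orders = [
    {"order_id": "o1", "customer": "ana", "amount": 40.0},
    {"order_id": "o2", "customer": "ben", "amount": 75.5},
    {"order_id": "o3", "customer": "ana", "amount": 20.0},
]

# Aggregation: scan a single column and never touch the other fields.
total_revenue = sum(build_column_store(orders)["amount"])

# Point lookup: jump straight to the matching row without scanning.
by_order_id = build_inverted_index(orders, "order_id")
order_o2 = orders[by_order_id["o2"][0]]
```

The aggregation reads only the `amount` values, while the lookup reads only one row, which is why a workload dominated by one pattern or the other tends to favor a particular index.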

One final point of comparison: enrichment. In fairness, you can enrich streaming data with additional data in a stream processing framework. You can essentially “join” (to use database parlance) two streams in real time. Inner joins, left or right joins, and full outer joins are all supported in stream processing. Depending on the system, you can also query the state to join historical data with live data. Just know that this can be difficult; there are many tradeoffs to be made around cost, complexity, and latency. RTA databases, on the other hand, have simpler methods for enriching or joining data. A common method is denormalizing, which is essentially flattening and aggregating two tables. This method has its issues, but there are other options as well. Rockset, for example, is able to perform inner joins on streaming data at ingest, and any type of join at query time.
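As a rough illustration of denormalization, the Python sketch below flattens a customer record into each order event, in the manner of a left join. The function and field names are hypothetical, and the lookup table is a plain dictionary rather than a real table or stream.

```python
from typing import Dict, Iterable, Iterator


def denormalize_orders(
    orders: Iterable[dict], customers_by_id: Dict[str, dict]
) -> Iterator[dict]:
    """Copy customer attributes into each order so no join is needed later.

    Orders with no matching customer are kept, with None in the customer
    fields (left-join behavior).
    """
    for order in orders:
        customer = customers_by_id.get(order["customer_id"], {})
        yield {
            **order,
            "customer_name": customer.get("name"),
            "customer_tier": customer.get("tier"),
        }
```

One of the issues with this method is visible here: if a customer's tier changes later, every flattened order that copied the old value is now stale and has to be rewritten.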

The upshot of RTA databases is that they enable users to execute complex, millisecond-latency queries against data that’s 1-2 seconds old. Both stream processing frameworks and RTA databases allow users to transform and serve data. They both offer the ability to enrich, aggregate, filter, and otherwise analyze streams in real time.

Let’s get into three popular RTA databases and evaluate their strengths and weaknesses.

Elasticsearch

Elasticsearch is an open-source, distributed search database that allows you to store, search, and analyze large volumes of data in near real-time. It’s quite scalable (with work and expertise), and commonly used for log analysis, full-text search, and real-time analytics.

In order to enrich streaming data with additional data in Elasticsearch, you need to denormalize it. This requires aggregating and flattening data before ingestion. Most stream processing tools do not require this step. Elasticsearch users typically see high performance for real-time analytical queries on text fields. However, if Elasticsearch receives a high volume of updates, performance degrades significantly. Furthermore, when an update or insert occurs upstream, Elasticsearch has to reindex that data for each of its replicas, which consumes compute resources. Many streaming data use cases are append only, but many are not; consider both your update frequency and denormalization before choosing Elasticsearch.

Apache Druid

Apache Druid is a high-performance, column-oriented data store that is designed for sub-second analytical queries and real-time data ingestion. It is traditionally known as a time-series database, and excels at filtering and aggregations. Druid is a distributed system, often used in big data applications. It’s known for both performance and being tricky to operationalize.

When it comes to transformations and enrichment, Druid has the same denormalization challenges as Elasticsearch. If you’re relying on your RTA database to join multiple streams, consider handling those operations elsewhere; denormalizing is a pain. Updates present a similar challenge. If Druid ingests an update from streaming data, it must reindex all data in the affected segment, which is a subset of data corresponding to a time range. This introduces both latency and compute cost. If your workload is update-heavy, consider choosing a different RTA database for streaming data. Finally, it’s worth noting that there are some SQL features that are not supported by Druid's query language, such as subqueries, correlated queries, and full outer joins.

Rockset

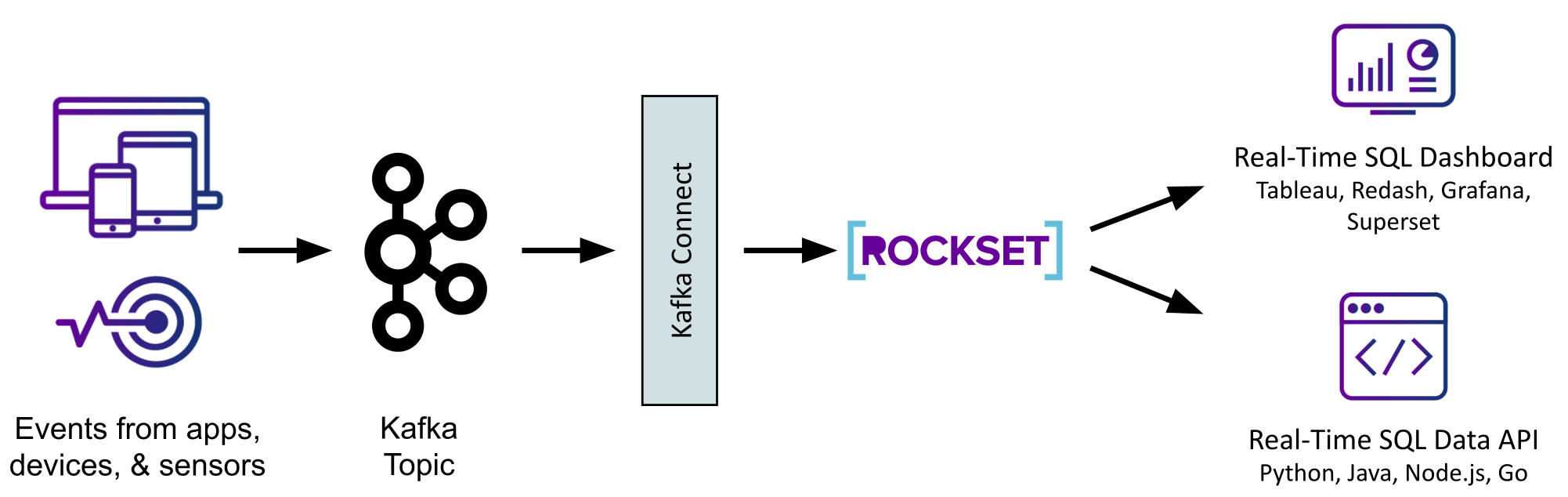

Rockset is a fully-managed real-time analytics database built for the cloud - there’s nothing to manage or tune. It enables millisecond-latency, analytical queries using full-featured SQL. Rockset is well suited to a wide variety of query patterns due to its Converged Index™, which combines a column index, a row index, and a search index. Rockset’s custom SQL query optimizer automatically analyzes each query and chooses the appropriate index based on the fastest query plan. Additionally, its architecture allows for full isolation of compute used for ingesting data and compute used for querying data (more detail here).

When it comes to transformations and enrichment, Rockset has many of the same capabilities as stream processing frameworks. It supports joining streams at ingest (inner joins only) and enriching streaming data with historical data at query time, and it entirely obviates denormalization. In fact, Rockset can ingest and index schemaless event data, including deeply nested objects and arrays. Rockset is a fully mutable database, and can handle updates without performance penalty. If ease of use and price / performance are important factors, Rockset is an ideal RTA database for streaming data. For a deeper dive on this topic, check out this blog.

Wrapping Up

Stream processing frameworks are well suited for enriching streaming data, filtering and aggregations, and advanced use cases like image recognition and natural language processing. However, these frameworks are not typically used for persistent storage and have only basic support for indexes - they often require an RTA database for storing and querying data. Further, they require significant expertise to set up, tune, maintain, and debug. Stream processing tools are both powerful and high maintenance.

RTA databases are ideal stream processing sinks. Their support for high-volume ingest and indexing enables sub-second analytical queries on real-time data. Connectors for many other common data sources, like data lakes, warehouses, and databases, allow for a broad range of enrichment capabilities. Some RTA databases, like Rockset, also support streaming joins, filtering, and aggregations at ingest.

The next post in the series will explain how to operationalize RTA databases for advanced analytics on streaming data. In the meantime, if you’d like to get smart on Rockset’s real-time analytics database, you can start a free trial right now. We provide $300 in credits and don’t require a credit card number. We also have many sample data sets that mimic the characteristics of streaming data. Go ahead and kick the tires.