Using Elasticsearch to Offload Real-Time Analytics from MongoDB

November 12, 2020

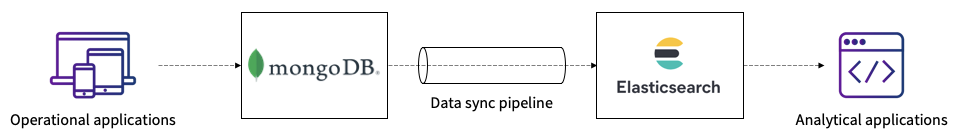

Offloading analytics from MongoDB establishes clear isolation between write-intensive and read-intensive operations. Elasticsearch is one tool to which reads can be offloaded. Because both MongoDB and Elasticsearch are NoSQL document stores with similar document structures and data types, Elasticsearch is a popular choice for this purpose. In most scenarios, MongoDB serves as the primary data store, handling writes and quick data ingestion, while Elasticsearch serves the reads. In this setup, you only need to sync the required fields to Elasticsearch, with custom mappings and settings, to get all the advantages of its indexing.

This blog post will examine the various tools that can be used to sync data between MongoDB and Elasticsearch. It will also discuss the various advantages and disadvantages of establishing data pipelines between MongoDB and Elasticsearch to offload read operations from MongoDB.

Tools to Sync Data Between Elasticsearch and MongoDB

When setting up a data pipeline between MongoDB and Elasticsearch, it’s important to choose the right tool.

First of all, you need to determine if the tool is compatible with the MongoDB and Elasticsearch versions you are using. Additionally, your use case might affect the way you set up the pipeline. If you have static data in MongoDB, you may need a one-time sync. However, a real-time sync will be required if continuous operations are being performed in MongoDB and all of them need to be synced. Finally, you’ll need to consider whether or not data manipulation or normalization is needed before data is written to Elasticsearch.

If you need to replicate every MongoDB operation in Elasticsearch, you’ll need to rely on the MongoDB oplog (a capped collection), which means running MongoDB as a replica set, since the oplog exists only when replication is enabled. Alternatively, you can configure your application so that every operation is written to both MongoDB and Elasticsearch. In that case, the application itself must guarantee atomicity and consistency across the two writes.
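As a rough illustration of the oplog-driven approach, the sketch below turns change events into lines for the Elasticsearch bulk API. It assumes events shaped like MongoDB change stream documents (change streams are built on the oplog). The index name `orders` and the sample fields are hypothetical, and no database connection is made.

```python
import json


def to_bulk_lines(event, index):
    """Translate one change-stream-style event into Elasticsearch bulk API lines."""
    op = event["operationType"]
    doc_id = str(event["documentKey"]["_id"])
    if op in ("insert", "replace", "update"):
        # Assumes the event carries the full document, e.g. a change stream
        # opened with full_document="updateLookup" in pymongo.
        full = event.get("fullDocument")
        if full is None:
            return []  # document vanished before lookup; skipped in this sketch
        source = dict(full)
        source.pop("_id", None)  # _id is metadata in Elasticsearch, not a source field
        return [
            json.dumps({"index": {"_index": index, "_id": doc_id}}),
            json.dumps(source),
        ]
    if op == "delete":
        return [json.dumps({"delete": {"_index": index, "_id": doc_id}})]
    return []  # other event types (drop, rename, ...) are ignored here


def build_bulk_body(events, index):
    """Join the action lines into a newline-delimited bulk request body."""
    lines = []
    for event in events:
        lines.extend(to_bulk_lines(event, index))
    return "\n".join(lines) + "\n"  # a bulk body must end with a newline


sample_events = [
    {"operationType": "insert", "documentKey": {"_id": 1},
     "fullDocument": {"_id": 1, "status": "new"}},
    {"operationType": "delete", "documentKey": {"_id": 2}},
]
bulk_body = build_bulk_body(sample_events, "orders")
```

The resulting string could be posted to the `_bulk` endpoint. A production pipeline would also need to persist a resume position so that it can continue after a restart, which this sketch leaves out.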

With these considerations in mind, let’s look at some tools that can be used to replicate MongoDB data to Elasticsearch.

Monstache

Monstache is one of the most comprehensive libraries available to sync MongoDB data to Elasticsearch. Written in Go, it supports MongoDB and Elasticsearch up to and including their latest versions. Monstache is also available as a sync daemon and a container.

Mongo-Connector

Mongo-Connector, which is written in Python, is a widely used tool for syncing data between MongoDB and Elasticsearch. It only supports Elasticsearch through version 5.x and MongoDB through version 3.6.

Mongoosastic

Mongoosastic, written in Node.js, is a plugin for Mongoose, a popular object data modeling (ODM) library for MongoDB. Mongoosastic writes data to MongoDB and Elasticsearch at the same time, so no additional process is needed to sync data.

Logstash JDBC Input Plugin

Logstash is Elastic’s official tool for integrating multiple input sources and facilitating data syncing with Elasticsearch. To use MongoDB as an input, you can employ the JDBC input plugin, which requires a MongoDB JDBC driver.

Custom Scripts

If the tools described above don’t meet your requirements, you can write custom scripts in the language of your choice. Remember that writing such scripts requires sound knowledge of both technologies and of how to operate them.

Advantages of Offloading Analytics to Elasticsearch

By syncing data from MongoDB to Elasticsearch, you remove load from your primary MongoDB database and leverage several other advantages offered by Elasticsearch. Let’s take a look at some of these.

Reads Don’t Interfere with Writes

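In most scenarios, reading data requires more resources than writing. For faster query execution, you may need to build indexes in MongoDB, which not only consumes a lot of memory but also slows down writes. Serving those queries from Elasticsearch instead lets MongoDB keep its indexes to a minimum and dedicate its resources to writes.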

Additional Analytical Functionality

Elasticsearch is a search server built on top of Lucene that stores data in a structure known as an inverted index. Inverted indexes are particularly helpful for full-text searches and document retrievals at scale. Elasticsearch can also perform aggregations and analytics and, in some cases, provide additional services not offered by MongoDB. Common use cases for Elasticsearch analytics include real-time monitoring, APM, anomaly detection, and security analytics.

Multiple Options to Store and Search Data

Another advantage of putting data into Elasticsearch is the possibility of indexing a single field in multiple ways by using some mapping configurations. This feature assists in storing multiple variations of a field that can be used for different types of analytic queries.
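As a minimal sketch of this idea, the mapping below indexes one field three ways using Elasticsearch multi-fields. The field name `title` and the sub-field names `raw` and `english` are hypothetical. The helper only lists the resulting field paths and does not talk to a cluster.

```python
def multi_field_mapping():
    """Index body mapping a single field in several ways via multi-fields."""
    return {
        "mappings": {
            "properties": {
                "title": {
                    "type": "text",  # analyzed with the default analyzer for full-text search
                    "fields": {
                        # exact value, usable for sorting and terms aggregations
                        "raw": {"type": "keyword"},
                        # same text, analyzed with the built-in english analyzer
                        "english": {"type": "text", "analyzer": "english"},
                    },
                }
            }
        }
    }


def field_paths(mapping):
    """List every queryable field path, including multi-field variants."""
    paths = []
    for name, spec in mapping["mappings"]["properties"].items():
        paths.append(name)
        paths.extend(f"{name}.{sub}" for sub in spec.get("fields", {}))
    return paths


paths = field_paths(multi_field_mapping())
```

With a mapping like this, a full-text query would target `title` or `title.english`, while an aggregation or sort would target `title.raw`.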

Better Support for Time Series Data

In applications that generate a huge volume of data, such as IoT applications, achieving high performance for both reads and writes can be a challenging task. Using MongoDB and Elasticsearch in combination can be a useful approach in these scenarios since it is then very easy to store the time series data in multiple indices (such as daily or monthly indices) and search those indices’ data via aliases.
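The daily-index pattern can be sketched as follows. The `sensor` prefix and the `sensor-recent` alias are hypothetical names. The second helper builds a request body for the Elasticsearch `_aliases` endpoint rather than sending it.

```python
from datetime import datetime, timezone


def daily_index(prefix, ts):
    """Name of the daily index that a timezone-aware timestamp belongs to (UTC)."""
    return f"{prefix}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


def alias_actions(alias, add=(), remove=()):
    """Body for POST /_aliases that repoints an alias in one atomic request."""
    actions = [{"remove": {"index": name, "alias": alias}} for name in remove]
    actions += [{"add": {"index": name, "alias": alias}} for name in add]
    return {"actions": actions}


reading_time = datetime(2020, 11, 12, 23, 30, tzinfo=timezone.utc)
target_index = daily_index("sensor", reading_time)
rollover_body = alias_actions(
    "sensor-recent",
    add=[target_index],
    remove=["sensor-2020.11.05"],
)
```

Queries can then keep targeting the alias while the set of indices behind it changes from day to day.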

Flexible Data Storage and an Incremental Backup Strategy

Elasticsearch supports incremental data backups using the _snapshot API. These backups can be written to a shared file system or to cloud storage directly from the cluster. Taking a snapshot does not itself remove anything from the cluster, but once old indices are safely backed up, you can delete them from the live cluster. Whenever access to old data is necessary, it can easily be restored from a snapshot using the _restore endpoint of the snapshot API. This allows you to determine how much data should be kept in the live cluster and also facilitates better resource assignments for the read operations in Elasticsearch.
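The backup cycle can be sketched as three request descriptions. The repository name `backups`, the snapshot name, and the index pattern are hypothetical, and the repository is assumed to be registered already. Each helper returns a method, path, and body instead of calling a cluster. Removing old indices is a separate delete request, issued only after the snapshot has succeeded.

```python
def create_snapshot_request(repo, snapshot, indices):
    """Snapshot the given indices into an already registered repository."""
    body = {"indices": ",".join(indices), "include_global_state": False}
    return ("PUT", f"/_snapshot/{repo}/{snapshot}", body)


def delete_index_request(index):
    """Free space in the live cluster once the snapshot is known to be good."""
    return ("DELETE", f"/{index}", None)


def restore_snapshot_request(repo, snapshot, indices):
    """Bring selected indices back from a snapshot when old data is needed."""
    body = {"indices": ",".join(indices)}
    return ("POST", f"/_snapshot/{repo}/{snapshot}/_restore", body)


archive_cycle = [
    create_snapshot_request("backups", "snap-2020.10", ["sensor-2020.10.*"]),
    delete_index_request("sensor-2020.10.*"),
    restore_snapshot_request("backups", "snap-2020.10", ["sensor-2020.10.01"]),
]
```

Because snapshots are incremental, each new snapshot in the same repository only copies segments that earlier snapshots do not already contain.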

Integration with Kibana

Once you put data into Elasticsearch, it can be connected to Kibana, which makes it easy to explore the data and to build visualizations and dashboards.

Disadvantages of Offloading Analytics to Elasticsearch

While there are several advantages to indexing MongoDB data into Elasticsearch, there are a number of potential disadvantages you should be aware of as well, which we discuss below.

Building and Maintaining a Data Sync Pipeline

Whether you use a tool or write a custom script to build your data sync pipeline, maintaining consistency between the two data stores is always a challenging job. The pipeline can go down or become hard to manage for several reasons, such as either of the data stores shutting down or data format changes in the MongoDB collections. If the data sync relies on the MongoDB oplog, the oplog must be sized so that operations are synced before they are overwritten, because the oplog is a capped collection and its oldest entries are discarded first. In addition, when you need to use many Elasticsearch features, complexity can increase if the tool you’re using is not customizable enough to support the necessary configurations, such as custom routing, parent-child or nested relationships, indexing referenced models, and converting dates to formats recognizable by Elasticsearch.

Data Type Conflicts

Both MongoDB and Elasticsearch are document-based NoSQL data stores, and both allow dynamic field ingestion. However, MongoDB is completely schemaless in nature, whereas Elasticsearch, although it can infer mappings dynamically, fixes the data type of a field once it is first mapped and does not allow different data types for that field across the documents inside an index. This can be a major challenge if the schema of MongoDB collections is not fixed. It’s always advisable to define the schema in advance for Elasticsearch. This will avoid conflicts that can occur while indexing the data.
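One way to keep types stable is to pair an explicit mapping with a small normalization step before indexing. The sketch below assumes a hypothetical schema of three fields (`user_id`, `amount`, `created_at`). It coerces each value to the declared type and drops fields that the schema does not know about.

```python
from datetime import datetime, timezone

# Hypothetical target schema: field name -> Python type, or "date" for timestamps.
SCHEMA = {"user_id": str, "amount": float, "created_at": "date"}

# Matching explicit mapping; "strict" makes Elasticsearch reject unmapped fields.
EXPLICIT_MAPPING = {
    "mappings": {
        "dynamic": "strict",
        "properties": {
            "user_id": {"type": "keyword"},
            "amount": {"type": "double"},
            "created_at": {"type": "date"},
        },
    }
}


def normalize(doc, schema=SCHEMA):
    """Coerce a MongoDB document to the fixed schema before indexing it."""
    out = {}
    for field, kind in schema.items():
        value = doc.get(field)
        if value is None:
            continue  # missing or null fields are simply omitted
        if kind == "date":
            # datetime objects become ISO 8601 strings; other values pass through as text
            out[field] = value.isoformat() if isinstance(value, datetime) else str(value)
        else:
            out[field] = kind(value)  # raises ValueError on values that cannot be coerced
    return out


clean = normalize({
    "user_id": 42,              # stored as a number in some documents
    "amount": "19.90",          # stored as a string in others
    "created_at": datetime(2020, 11, 12, tzinfo=timezone.utc),
    "debug_note": "not part of the schema",
})
```

Raising on values that cannot be coerced is a deliberate choice in this sketch: it surfaces a bad document in the pipeline instead of letting Elasticsearch reject it later.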

Data Security

MongoDB is a core database and comes with fine-grained security controls, such as built-in authentication and user creations based on built-in or configurable roles. Elasticsearch does not provide such controls by default. Although it is achievable in the X-Pack version of Elastic Stack, it’s hard to implement the security features in free versions.

The Difficulty of Operating an Elasticsearch Cluster

Elasticsearch is hard to manage at scale, especially if you’re already running a MongoDB cluster and setting up the data sync pipeline. Cluster management, horizontal scaling, and capacity planning come with some limitations. Challenges arise when the application is write-intensive and the Elasticsearch cluster does not have enough resources to cope with that load. Once shards are created, they can’t be increased on the fly. Instead, you need to create a new index with a new number of shards and perform reindexing, which is tedious.

Memory-Intensive Process

Elasticsearch is written in Java and writes data in the form of immutable Lucene segments. This underlying data structure causes these segments to continue merging in the background, which requires a significant amount of resources. Heavy aggregations also cause high memory utilization and may cause out of memory (OOM) errors. When these errors appear, cluster scaling is typically required, which can be a difficult task if you have a limited number of shards per index or budgetary concerns.

No Support for Joins

Elasticsearch does not support full-fledged relationships and joins. It does support nested and parent-child relationships, but they are usually slow to perform or require additional resources to operate. If your MongoDB data is based on references, it may be difficult to sync the data in Elasticsearch and write queries on top of them.

Deep Pagination Is Discouraged

One of the biggest advantages of using a core database is that you can create a cursor and iterate through the data while performing the sort operations. However, Elasticsearch’s normal search queries, which page with from and size, by default don’t let you reach beyond the first 10,000 documents of a search result (the index.max_result_window setting). Elasticsearch does have a dedicated scroll API to achieve this task, although it, too, comes with limitations.
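Besides the scroll API mentioned above, Elasticsearch also offers the `search_after` parameter, which is a different mechanism: each request continues from the sort values of the last hit of the previous page. The sketch below only builds request bodies. The sort fields `created_at` and `event_id` are hypothetical, and `event_id` is assumed to be a unique keyword field acting as a tie-breaker.

```python
def first_page(query, size=1000):
    """Search body for the first page, sorted deterministically."""
    return {
        "size": size,
        "query": query,
        # A unique tie-breaker field keeps the ordering stable between requests.
        "sort": [{"created_at": "asc"}, {"event_id": "asc"}],
    }


def next_page(previous_body, last_hit):
    """Search body for the following page, resuming after the last hit seen."""
    body = dict(previous_body)  # shallow copy so the previous body is left unchanged
    body["search_after"] = last_hit["sort"]  # sort values returned with each hit
    return body


page_one = first_page({"match_all": {}}, size=2)
# Shape of the last hit of a response; the values are made up for illustration.
last_hit = {"_id": "abc", "sort": [1605139200000, "evt-0002"]}
page_two = next_page(page_one, last_hit)
```

Unlike a database cursor, this approach keeps no state on the server between requests, so results can shift if the index changes while you page through it.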

Uses Elasticsearch DSL

Elasticsearch has its own query DSL, but you need a good hands-on understanding of its pitfalls to write optimized queries. While you can also write queries using Lucene Syntax, its grammar is tough to learn, and it lacks input sanitization. Elasticsearch DSL is not compatible with SQL visualization tools and, therefore, offers limited capabilities for performing analytics and building reports.
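To give a feel for the DSL, here is a sketch of a request body that roughly corresponds to the SQL statement `SELECT status, COUNT(*), AVG(amount) FROM orders WHERE created_at >= ? GROUP BY status`. The field names are hypothetical, and `status` is assumed to be mapped as a keyword field.

```python
def orders_by_status(since):
    """Query DSL body: filter by date, then group by status with an average."""
    return {
        "size": 0,  # return only aggregation results, no individual hits
        "query": {
            "bool": {
                # filter context: no relevance scoring, and results can be cached
                "filter": [{"range": {"created_at": {"gte": since}}}]
            }
        },
        "aggs": {
            "by_status": {
                "terms": {"field": "status", "size": 10},  # one bucket per status value
                "aggs": {"avg_amount": {"avg": {"field": "amount"}}},
            }
        },
    }


report_body = orders_by_status("2020-11-01")
```

Placing the range clause in filter context rather than in `must` is one example of the kind of detail that affects performance but is easy to miss without hands-on experience.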

Summary

If your application is primarily performing text searches, Elasticsearch can be a good option for offloading reads from MongoDB. However, this architecture requires an investment in building and maintaining a data pipeline between the two tools.

The Elasticsearch cluster also requires considerable effort to manage and scale. If your use case involves more complex analytics—such as filters, aggregations, and joins—then Elasticsearch may not be your best solution. In these situations, Rockset, a real-time indexing database, may be a better fit. It provides both a native connector to MongoDB and full SQL analytics, and it’s offered as a fully managed cloud service.

Learn more about offloading from MongoDB using Rockset in these related blogs: