Offload Real-Time Reporting and Analytics from MongoDB Using PostgreSQL

September 3, 2020

MongoDB’s Advantages & Disadvantages

MongoDB has comprehensive aggregation capabilities. You can run many analytic queries on MongoDB without exporting your data to a third-party tool. However, these aggregation queries are frequently CPU-intensive and can block or delay the execution of other queries. For example, Online Transaction Processing (OLTP) queries are usually short read operations that directly affect the user experience. If an OLTP query is delayed because a read-heavy aggregation query is running on your MongoDB cluster, your users will experience a slowdown.

These delays can be avoided by offloading heavy read operations, such as aggregations for analytics, to another layer and letting the MongoDB cluster handle only write and OLTP operations. In this situation, the MongoDB cluster doesn’t have to keep up with the read requests. Offloading read operations to another database, such as PostgreSQL, is one option that accomplishes this end. After discussing what PostgreSQL is, this article will look at how to offload read operations to it. We’ll also examine some of the tradeoffs that accompany this choice.

What Is PostgreSQL?

PostgreSQL is an open-source relational database that has been around for almost three decades.

PostgreSQL has been gaining a lot of traction recently because it provides both RDBMS-like and NoSQL-like features: data can be stored in traditional rows and columns, and complete JSON objects can be stored as well.

PostgreSQL features query operators which can be used to query key and value pairs inside JSON objects. This capability allows PostgreSQL to be used as a document database as well. Like MongoDB, it provides support for JSON documents. But, unlike MongoDB, it uses SQL to query even the JSON documents, allowing seasoned data engineers to write ad hoc queries when required.
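As a rough illustration of those JSON operators, the sketch below builds a parameterized query that uses `->>` (extract a field as text) and `@>` (containment) on a `jsonb` column. The table name `orders_json`, the column name `data`, and the `%s` placeholder style (as used by drivers such as psycopg2) are assumptions made for the example, not something the article prescribes.

```python
import json


def find_by_field(table, field, value):
    """Build (sql, params) matching documents whose top-level `field` equals `value`.

    Assumes a hypothetical table with a jsonb column named `data`.
    The table name is interpolated directly, so it must come from trusted code.
    """
    sql = (
        f"SELECT data->>'_id' AS id, data "
        f"FROM {table} "
        f"WHERE data @> %s::jsonb"
    )
    # The containment operand is passed as a JSON string parameter
    params = (json.dumps({field: value}),)
    return sql, params


sql, params = find_by_field("orders_json", "status", "shipped")
print(sql)
print(params)
```

The query text and parameters would then be handed to whatever PostgreSQL driver the application already uses.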

Unlike MongoDB, PostgreSQL also allows you to store data in a more traditional row and column arrangement. This way, PostgreSQL can act as a traditional RDBMS with powerful features, such as joins.

The unique ability of PostgreSQL to act as both an RDBMS and a JSON document store makes it a very good companion to MongoDB for offloading read operations.

Connecting PostgreSQL to MongoDB

MongoDB’s oplog is used to maintain a log of all operations being performed on data. It can be used to follow all of the changes happening to the data in MongoDB and to replicate or mimic the data in another database, such as PostgreSQL, in order to make the same data available elsewhere for all read operations. Because MongoDB uses its oplog internally to replicate data across the members of a replica set, following it is one of the most straightforward ways of replicating MongoDB data outside of MongoDB.

If you already have data in MongoDB and want it replicated in PostgreSQL, export the complete database as JSON documents. Then, write a simple service which reads these JSON files and writes their data to PostgreSQL in the required format. If you are starting this replication when MongoDB is still empty, no initial migration is necessary, and you can skip this step.
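A minimal sketch of the parsing half of such a service is shown here. It assumes the export is newline-delimited JSON (one document per line, which is the default `mongoexport` output) and that ObjectIds appear in Extended JSON form as `{"$oid": "..."}`. The function name `rows_from_export` and the two-column target layout (an id plus the JSON text) are hypothetical.

```python
import json


def rows_from_export(lines):
    """Yield (id, json_text) pairs from a newline-delimited JSON export."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        doc = json.loads(line)
        raw_id = doc["_id"]
        # Extended JSON wraps ObjectIds as {"$oid": "..."}; unwrap to a plain string
        if isinstance(raw_id, dict) and "$oid" in raw_id:
            doc_id = raw_id["$oid"]
        else:
            doc_id = str(raw_id)
        yield doc_id, json.dumps(doc)


sample = [
    '{"_id": {"$oid": "5f50c31e1c9d440000a1b2c3"}, "name": "Ada"}',
    "",
    '{"_id": 42, "name": "Grace"}',
]
rows = list(rows_from_export(sample))
print(rows)
```

In a real migration, `lines` would be an open export file, and the resulting pairs could be written to PostgreSQL in batches with a parameterized `INSERT`.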

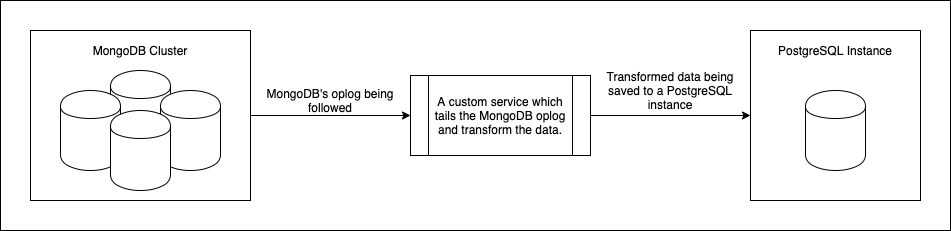

After you’ve migrated the existing data to PostgreSQL, you’ll have to write a service which creates a data flow pipeline from MongoDB to PostgreSQL. This new service should follow the MongoDB oplog and replicate the same operations in PostgreSQL that were running in MongoDB, similar to the process shown in Figure 1 below. Every change to the data stored in MongoDB is recorded in the oplog, which the service reads and applies to the data in PostgreSQL.

Figure 1: A data pipeline which continuously copies data from MongoDB to PostgreSQL
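The translation step inside such a service might look roughly like the sketch below. It maps one oplog-style entry (`op` of `i`, `u`, or `d`) to a parameterized SQL statement for a hypothetical table `docs (id text primary key, data jsonb)`. The oplog is an internal format that varies between MongoDB versions (newer versions record updates as diffs rather than `$set` documents), so this handles only the simple shapes and raises on anything else; a production service would more likely read change streams.

```python
import json


def oplog_entry_to_sql(entry, table="docs"):
    """Translate one oplog-style entry into (sql, params), or None to skip it."""
    op = entry["op"]
    if op == "i":
        # Insert: "o" holds the full document
        doc = entry["o"]
        sql = (
            f"INSERT INTO {table} (id, data) VALUES (%s, %s::jsonb) "
            "ON CONFLICT (id) DO UPDATE SET data = EXCLUDED.data"
        )
        return sql, (str(doc["_id"]), json.dumps(doc, default=str))
    if op == "u":
        # Update: "o2" identifies the document, "o" describes the change
        doc_id = str(entry["o2"]["_id"])
        change = entry["o"]
        if "$set" in change:
            # jsonb || jsonb merges top-level keys only; dotted paths are not handled
            sql = f"UPDATE {table} SET data = data || %s::jsonb WHERE id = %s"
            return sql, (json.dumps(change["$set"], default=str), doc_id)
        if any(key.startswith("$") for key in change):
            raise NotImplementedError(f"unsupported update format: {sorted(change)}")
        # No operators present: treat "o" as a full replacement document
        sql = f"UPDATE {table} SET data = %s::jsonb WHERE id = %s"
        return sql, (json.dumps(change, default=str), doc_id)
    if op == "d":
        # Delete: "o" holds the _id of the removed document
        return f"DELETE FROM {table} WHERE id = %s", (str(entry["o"]["_id"]),)
    return None  # no-ops and commands are ignored in this sketch


insert_stmt = oplog_entry_to_sql({"op": "i", "ns": "shop.orders", "o": {"_id": "a1", "status": "new"}})
update_stmt = oplog_entry_to_sql({"op": "u", "o2": {"_id": "a1"}, "o": {"$set": {"status": "shipped"}}})
delete_stmt = oplog_entry_to_sql({"op": "d", "o": {"_id": "a1"}})
```

Returning the statement instead of executing it keeps the translation logic testable without a database connection.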

Schema Options in PostgreSQL

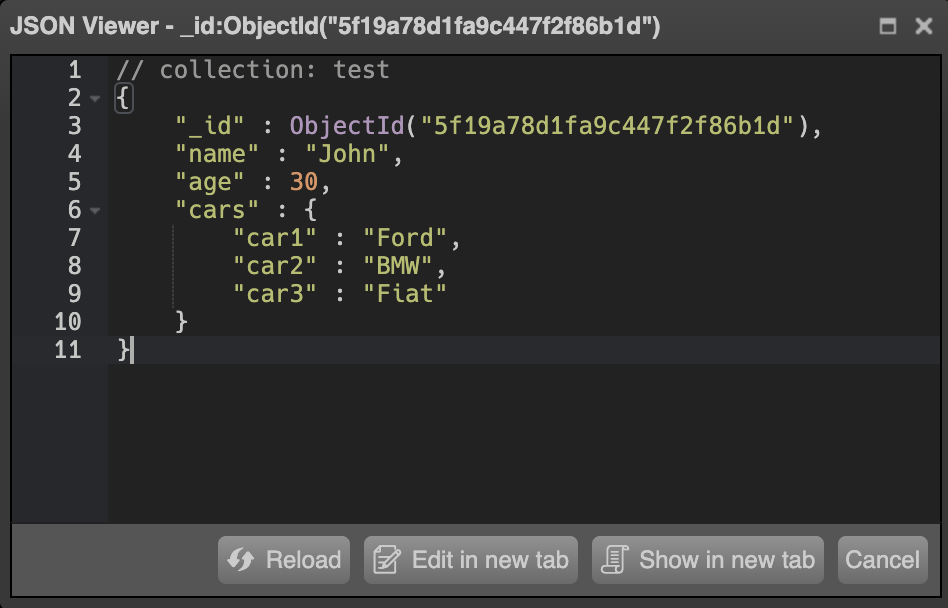

You now need to decide how you’ll be storing data in PostgreSQL, since the data from MongoDB will be in the form of JSON documents, as shown in Figure 2 below.

Figure 2: An example of data stored in MongoDB

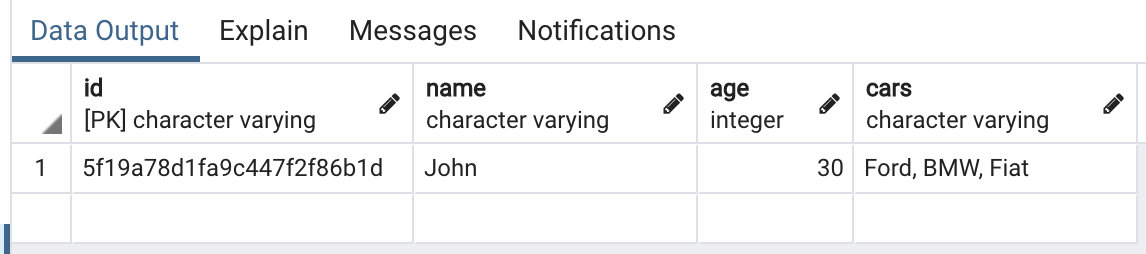

On the PostgreSQL end, you have two options. You can either store the complete JSON object as a column, or you can transform the data into rows and columns and store it in the traditional way, as shown in Figure 3 below. This decision should be based on the requirements of your application; there is no right or wrong way to do things here. PostgreSQL has query operations for both JSON columns and traditional rows and columns.

Figure 3: An example of data stored in PostgreSQL in tabular format

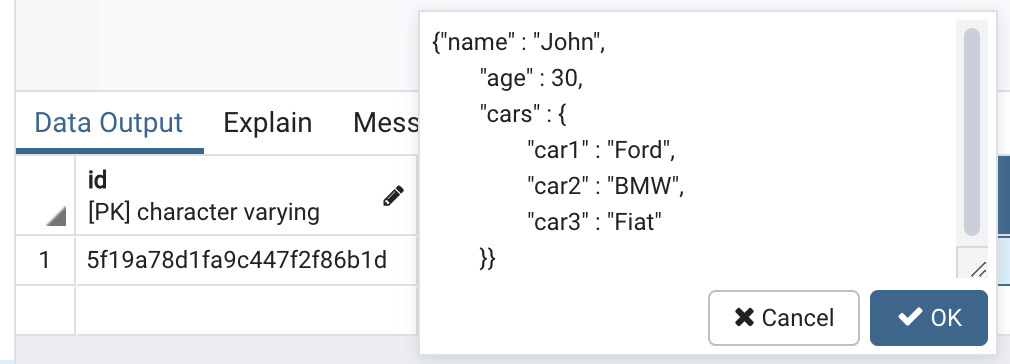

Once your migration service has the oplog data, it can be transformed according to your business needs. You can split one JSON document from MongoDB into multiple rows and columns or even multiple tables in PostgreSQL. Or, you can just copy the whole JSON document into one column in one table in PostgreSQL, as shown in Figure 4 below. What you do here depends on how you plan to query the data later on.

Figure 4: An example of data stored in PostgreSQL as a JSON column
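To make the tabular option concrete, here is a small sketch that splits one nested document into a parent row and several child rows. The document shape (an order with an `items` array) and the target tables `orders` and `order_items` are invented for illustration and are not taken from the figures.

```python
def split_order(doc):
    """Split a nested order document into one `orders` row and its `order_items` rows."""
    order_id = str(doc["_id"])
    order_row = {
        "id": order_id,
        "customer": doc.get("customer"),
        "status": doc.get("status"),
    }
    # Each array element becomes its own row, linked back by order_id
    item_rows = [
        {
            "order_id": order_id,
            "position": position,
            "sku": item["sku"],
            "qty": item.get("qty", 1),
        }
        for position, item in enumerate(doc.get("items", []))
    ]
    return order_row, item_rows


order_row, item_rows = split_order(
    {
        "_id": "o1",
        "customer": "c1",
        "status": "new",
        "items": [{"sku": "A", "qty": 2}, {"sku": "B"}],
    }
)
print(order_row)
print(item_rows)
```

With the JSON-column option, the same document would instead be stored unchanged in a single `jsonb` column.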

Getting Data Ready for Querying in PostgreSQL

Now that your data is being replicated and continuously updated in PostgreSQL, you’ll need to make sure that it’s ready to take over read operations. To do so, look at your queries and create indexes that cover the fields, and combinations of fields, that those queries filter, join, and sort on. This way, read queries on your PostgreSQL database can use these indexes instead of scanning whole tables. Once all of this is set up, you’re ready to route all of your read queries from MongoDB to PostgreSQL.
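As an illustration, the helpers below generate index definitions for the two storage options: a multi-column B-tree index for tabular data, and a GIN index for a `jsonb` column, which supports containment queries such as `@>`. The helper names, the index naming scheme, and the table and column names are assumptions for this sketch.

```python
def btree_index(table, columns):
    """Return DDL for a multi-column B-tree index on tabular data."""
    name = f"idx_{table}_{'_'.join(columns)}"
    return f"CREATE INDEX IF NOT EXISTS {name} ON {table} ({', '.join(columns)})"


def jsonb_gin_index(table, column="data"):
    """Return DDL for a GIN index on a jsonb column."""
    return (
        f"CREATE INDEX IF NOT EXISTS idx_{table}_{column}_gin "
        f"ON {table} USING GIN ({column})"
    )


print(btree_index("orders", ["customer", "status"]))
print(jsonb_gin_index("orders_json"))
```

Column order matters in a multi-column B-tree index, so it is worth checking the resulting plans with `EXPLAIN` against your real queries.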

The Advantages of Using PostgreSQL for Real-Time Reporting and Analytics

There are many advantages to using PostgreSQL to offload read operations from MongoDB. To begin with, you can leverage the power of the SQL query language. Even though there are some third-party services which provide a MongoDB SQL solution, they often lack features which are essential either to MongoDB users or to SQL queries.

Another advantage, if you decide to transform your MongoDB data into rows and columns, is the option of splitting your data into multiple tables in PostgreSQL to store it in a more relational format. Doing so will allow you to use PostgreSQL’s native SQL queries instead of MongoDB’s query language. Once you split your data into multiple tables, you’ll have the option to join tables in your queries to do more with a single query. And, if you have joins and relational data, you can run complex SQL queries to perform a variety of aggregations. You can also create multiple indexes on your tables in PostgreSQL for better performing read operations. Keep in mind that joining collections in MongoDB is less natural: the aggregation framework’s $lookup stage performs only a left outer join and is more verbose than a SQL join. However, this doesn’t mean that MongoDB aggregations are weak or are missing features.
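The kind of join-plus-aggregation query described here can be sketched as follows. To keep the example runnable without a database server, it uses Python’s built-in `sqlite3` module as a stand-in; the SQL itself is standard and should run unchanged on PostgreSQL. The `customers` and `orders` tables and their contents are invented for the example.

```python
import sqlite3

# In-memory SQLite database used only as a stand-in for PostgreSQL
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id TEXT PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id TEXT PRIMARY KEY, customer_id TEXT, total REAL);
    INSERT INTO customers VALUES ('c1', 'EU'), ('c2', 'US');
    INSERT INTO orders VALUES ('o1', 'c1', 10.0), ('o2', 'c1', 5.0), ('o3', 'c2', 7.5);
    """
)

# One query joins the two tables and aggregates per region
REVENUE_BY_REGION = """
SELECT c.region, COUNT(*) AS order_count, SUM(o.total) AS revenue
FROM orders AS o
JOIN customers AS c ON c.id = o.customer_id
GROUP BY c.region
ORDER BY c.region
"""

result = conn.execute(REVENUE_BY_REGION).fetchall()
print(result)  # [('EU', 2, 15.0), ('US', 1, 7.5)]
```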

Once you have a complete pipeline set up in PostgreSQL, you can easily switch the database from MongoDB to PostgreSQL for all of your aggregation operations. At this point, your analytic queries won’t affect the performance of your primary MongoDB database because you’ll have completely separate setups for analytic and transactional workloads.

The Disadvantages of Using PostgreSQL for Real-Time Reporting and Analytics

While there are many advantages to offloading your read operations to PostgreSQL, a number of tradeoffs come along with the decision to take this step.

Complexity

To begin with, there’s the obvious new moving part in the architecture you will have to build and maintain: the data pipeline which follows MongoDB’s oplog and replays its operations at the PostgreSQL end. If this one pipeline fails, data replication to PostgreSQL stops, creating a situation where the data in MongoDB and the data in PostgreSQL are not the same. Depending on the number of write operations happening in your MongoDB cluster, you might want to think about scaling this pipeline to avoid it becoming a bottleneck. It has the potential to become a single point of failure in your application.
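One common way to limit the damage of a pipeline failure is to record a checkpoint after each applied operation, so that a restarted service resumes where it stopped instead of skipping or repeating changes. The sketch below shows the idea with plain integers standing in for oplog timestamps; the function name and the entry shape are assumptions, and a real service would persist the checkpoint durably.

```python
def apply_from_checkpoint(entries, checkpoint_ts, apply):
    """Apply entries newer than `checkpoint_ts` and return the new checkpoint.

    `entries` must be ordered by their "ts" field.
    """
    for entry in entries:
        if entry["ts"] <= checkpoint_ts:
            continue  # already applied before the restart
        apply(entry)
        # Advance only after the entry was applied successfully
        checkpoint_ts = entry["ts"]
    return checkpoint_ts


applied = []
entries = [{"ts": 1, "op": "i"}, {"ts": 2, "op": "u"}, {"ts": 3, "op": "d"}]
new_checkpoint = apply_from_checkpoint(entries, checkpoint_ts=1, apply=applied.append)
print(new_checkpoint, [e["ts"] for e in applied])
```

If `apply` raises partway through, the exception propagates and the caller keeps its last saved checkpoint, so the failed entry is retried on the next run.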

Consistency

There can also be issues with data consistency, because it takes anywhere from a few milliseconds to several seconds for the data changes in MongoDB to be replicated in PostgreSQL. This lag time could easily go up to minutes if your MongoDB write operations experience a lot of traffic.
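A simple way to keep an eye on this lag is to compare the timestamp of the last operation applied to PostgreSQL with the current time. The sketch below assumes timestamps are Unix seconds and that a five-second threshold is acceptable; both are placeholders to adjust for your workload.

```python
import time


def replication_lag_seconds(last_applied_ts, now=None):
    """Return how far PostgreSQL trails MongoDB, in seconds (never negative)."""
    now = time.time() if now is None else now
    return max(0.0, now - last_applied_ts)


def is_stale(last_applied_ts, max_lag_seconds=5.0, now=None):
    """Report whether the replica is further behind than the allowed threshold."""
    return replication_lag_seconds(last_applied_ts, now) > max_lag_seconds


print(replication_lag_seconds(100, now=103.5))
print(is_stale(100, max_lag_seconds=5.0, now=110))
```

A reporting layer could use a check like `is_stale` to warn users that the figures they are seeing may be slightly out of date.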

Because PostgreSQL, which is mostly an RDBMS, is your read layer, it might not be the best fit for all applications. For example, in applications that process data originating from a variety of sources, you might have to use a tabular data structure in some tables and JSON columns in others. Some of the advantageous features of an RDBMS, such as joins, might not work as expected in these situations. In addition, offloading reads to PostgreSQL might not be the best option when the data you’re dealing with is highly unstructured. In this case, you’ll again end up replicating the absence of structure even in PostgreSQL.

Scalability

Finally, it’s important to note that PostgreSQL was not designed to be a distributed database. This means there’s no way to natively distribute your data across multiple nodes. If your data is reaching the limits of your node’s storage, you’ll have to scale up vertically by adding more storage to the same node instead of adding more commodity nodes and creating a cluster. This necessity might prevent PostgreSQL from being your best solution.

Before you make the decision to offload your read operations to PostgreSQL—or any other SQL database, for that matter—make sure that SQL and RDBMS are good options for your data.

Considerations for Offloading Read-Intensive Applications from MongoDB

If your application works mostly with relational data and SQL queries, offloading all of your read queries to PostgreSQL allows you to take full advantage of the power of SQL queries, aggregations, joins, and all of the other features described in this article. But, if your application deals with a lot of unstructured data coming from a variety of sources, this option might not be a good fit.

It’s important to decide whether or not you want to add an extra read-optimized layer early on in the development of the project. Otherwise, you’ll likely end up spending a significant amount of time and money creating indexes and migrating data from MongoDB to PostgreSQL at a later stage. The best way to handle the migration to PostgreSQL is by moving small pieces of your data to PostgreSQL and testing the application’s performance. If it works as expected, you can continue the migration in small pieces until, eventually, the complete project has been migrated.

If you’re collecting structured or semi-structured data which works well with PostgreSQL, offloading read operations to PostgreSQL is a great way to avoid impacting the performance of your primary MongoDB database.

Rockset & Elasticsearch: Alternatives for Offloading From MongoDB

If you’ve made the decision to offload reporting and analytics from MongoDB for the reasons discussed above but have more complex scalability requirements or less structured data, you may want to consider other real-time databases, such as Elasticsearch and Rockset. Both Elasticsearch and Rockset are scale-out alternatives that allow schemaless data ingestion and leverage indexing to speed up analytics. Like PostgreSQL, Rockset also supports full-featured SQL, including joins.
