What Do I Do When My Snowflake Query Is Slow? Part 2: Solutions

January 25, 2022

Snowflake’s data cloud enables companies to store and share data, then analyze this data for business intelligence. Although Snowflake is a great tool, sometimes querying vast amounts of data runs slower than your applications — and users — require.

In our first article, What Do I Do When My Snowflake Query Is Slow? Part 1: Diagnosis, we discussed how to diagnose slow Snowflake query performance. Now it’s time to address those issues.

We’ll cover Snowflake performance tuning, including reducing queuing, using result caching, tackling disk spilling, rectifying row explosion, and fixing inadequate pruning. We’ll also discuss alternatives for real-time analytics that might be what you’re looking for if you are in need of better real-time query performance.

Reduce Queuing

Snowflake lines up queries until resources are available. It’s not good for queries to stay queued too long, as they will be aborted. To prevent queries from waiting too long, you have two options: set a timeout or adjust concurrency.

Set a Timeout

Use STATEMENT_QUEUED_TIMEOUT_IN_SECONDS to define how long your query should stay queued before aborting. With a default value of 0, there is no timeout.

Change this number to abort queries after a specific time to avoid too many queries queuing up. As this is a session-level query, you can set this timeout for particular sessions.

Adjust the Maximum Concurrency Level

The total load time depends on the number of queries your warehouse executes in parallel. The more queries that run in parallel, the harder it is for the warehouse to keep up, impacting Snowflake performance.

To rectify this, use Snowflake’s MAX_CONCURRENCY_LEVEL parameter. Its default value is 8, but you can set the value to the number of resources you want to allocate.

Keeping the MAX_CONCURRENCY_LEVEL low helps improve execution speed, even for complex queries, as Snowflake allocates more resources.

Use Result Caching

Every time you execute a query, it caches, so Snowflake doesn’t need to spend time retrieving the same results from cloud storage in the future.

One way to retrieve results directly from the cache is by RESULT_SCAN.

Fox example:

select * from table(result_scan(last_query_id()))

The LAST_QUERY_ID is the previously executed query. RESULT_SCAN brings the results directly from the cache.

Tackle Disk Spilling

When data spills to your local machine, your operations must use a small warehouse. Spilling to remote storage is even slower.

To tackle this issue, move to a more extensive warehouse with enough memory for code execution.

alter warehouse mywarehouse

warehouse_size = XXLARGE

auto_suspend = 300

auto_resume = TRUE;

This code snippet enables you to scale up your warehouse and suspend query execution automatically after 300 seconds. If another query is in line for execution, this warehouse resumes automatically after resizing is complete.

Restrict the result display data. Choose the columns you want to display and avoid the columns you don’t need.

select last_name

from employee_table

where employee_id = 101;

select first_name, last_name, country_code, telephone_number, user_id from

employee_table

where employee_type like "%junior%";

The first query above is specific as it retrieves the last name of a particular employee. The second query retrieves all the rows for the employee_type of junior, with multiple other columns.

Rectify Row Explosion

Row explosion happens when a JOIN query retrieves many more rows than expected. This can occur when your join accidentally creates a cartesian product of all rows retrieved from all tables in your query.

Use the Distinct Clause

One way to reduce row explosion is by using the DISTINCT clause that neglects duplicates.

For example:

SELECT DISTINCT a.FirstName, a.LastName, v.District

FROM records a

INNER JOIN resources v

ON a.LastName = v.LastName

ORDER BY a.FirstName;

In this snippet, Snowflake only retrieves the distinct values that satisfy the condition.

Use Temporary Tables

Another option to reduce row explosion is by using temporary tables.

This example shows how to create a temporary table for an existing table:

CREATE TEMPORARY TABLE tempList AS

SELECT a,b,c,d FROM table1

INNER JOIN table2 USING (c);

SELECT a,b FROM tempList

INNER JOIN table3 USING (d);

Temporary tables exist until the session ends. After that, the user cannot retrieve the results.

Check Your Join Order

Another option to fix row explosion is by checking your join order. Inner joins may not be an issue, but the table access order impacts the output for outer joins.

Snippet one:

orders LEFT JOIN products

ON products.id = products.id

LEFT JOIN entries

ON entries.id = orders.id

AND entries.id = products.id

Snippet two:

orders LEFT JOIN entries

ON entries.id = orders.id

LEFT JOIN products

ON products.id = orders.id

AND products.id = entries.id

In theory, outer joins are neither associative nor commutative. Thus, snippet one and snippet two do not return the same results. Be aware of the join type you use and their order to save time, retrieve the expected results, and avoid row explosion issues.

Fix Inadequate Pruning

While running a query, Snowflake prunes micro-partitions, then the remaining partitions’ columns. This makes scanning easy because Snowflake now does not have to go through all the partitions.

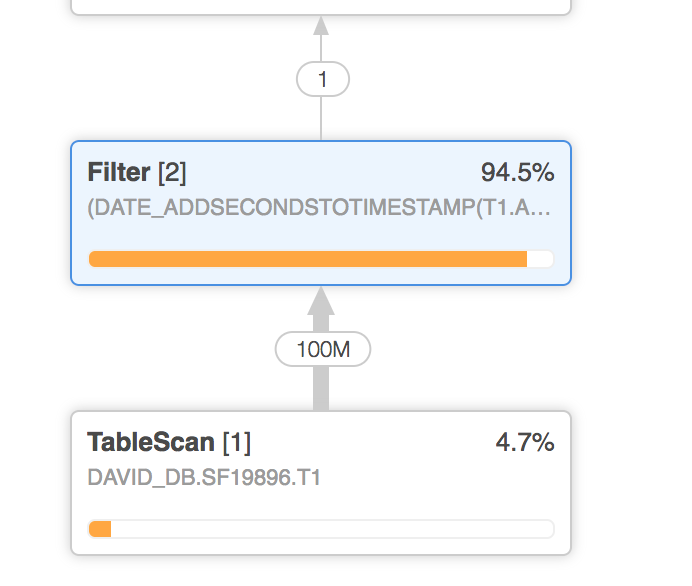

However, pruning does not happen perfectly all the time. Here is an example:

When executing the query, the filter removes about 94 percent of the rows. Snowflake prunes the remaining partitions. That means the query scanned only a portion of the four percent of the rows retrieved.

Data clustering can significantly improve this. You can cluster a table when you create it or when you alter an existing table.

CREATE TABLE recordsTable (C1 INT, C2 INT) CLUSTER BY (C1, C2);

ALTER TABLE recordsTable CLUSTER BY (C1, C2);

Data clustering has limitations. Tables must have a large number of records and shouldn’t change frequently. The right time to cluster is when you know the query is slow, and you know that you can enhance it.

In 2020, Snowflake deprecated the manual re-clustering feature, so that is not an option anymore.

Wrapping Up Snowflake Performance Issues

We explained how to use queuing parameters, efficiently use Snowflake’s cache, and fix disk spilling and exploding rows. It’s easy to implement all these methods to help improve your Snowflake query performance.

Another Strategy for Improving Query Performance: Indexing

Snowflake can be a good solution for business intelligence, but it’s not always the optimum choice for every use case, for example, scaling real-time analytics, which requires speed. For that, consider supplementing Snowflake with a database like Rockset.

High-performance real-time queries and low latency are Rockset's core features. Rockset provides less than one second of data latency on large data sets, making new data ready to query quickly. Rockset excels at data indexing, which Snowflake doesn’t do, and it indexes all the fields, making it faster for your application to scan through and provide real-time analytics. Rockset is far more compute-efficient than Snowflake, delivering queries that are both fast and economical.

Rockset is an excellent complement to your Snowflake data warehouse. Sign up for your free Rockset trial to see how we can help drive your real-time analytics.

Rockset is the real-time analytics database in the cloud for modern data teams. Get faster analytics on fresher data, at lower costs, by exploiting indexing over brute-force scanning.