Why Real-Time Analytics Requires Both the Flexibility of NoSQL and Strict Schemas of SQL Systems

July 6, 2022

This is the fifth post in a series by Rockset's CTO and Co-founder Dhruba Borthakur on Designing the Next Generation of Data Systems for Real-Time Analytics. We'll be publishing more posts in the series in the near future, so subscribe to our blog so you don't miss them!

Posts published so far in the series:

- Why Mutability Is Essential for Real-Time Data Analytics

- Handling Out-of-Order Data in Real-Time Analytics Applications

- Handling Bursty Traffic in Real-Time Analytics Applications

- SQL and Complex Queries Are Needed for Real-Time Analytics

- Why Real-Time Analytics Requires Both the Flexibility of NoSQL and Strict Schemas of SQL Systems

The hardest substance on earth, diamonds, have surprisingly limited uses: saw blades, drilling bits, wedding rings and other industrial applications.

By contrast, one of the softer metals in nature, iron, can be transformed for an endless list of applications: the sharpest blades, the tallest skyscrapers, the heaviest ships, and soon, if Elon Musk is right, the most cost-effective EV car batteries.

In other words, iron’s incredible usefulness is because it is both rigid and flexible.

Similarly, databases are only useful for today’s real-time analytics if they can be both strict and flexible.

Traditional databases, with their wholly-inflexible structures, are brittle. So are schemaless NoSQL databases, which capably ingest firehoses of data but are poor at extracting complex insights from that data.

Customer personalization, autonomic inventory management, operational intelligence and other real-time use cases require databases that stricly enforce schemas and possess the flexibility to automatically redefine those schemas based on the data itself. This satisfies the three key requirements of modern analytics:

- Support both scale and speed for ingesting data

- Support flexible schemas that can instantly adapt to the diversity of streaming data

- Support fast, complex SQL queries that require a strict structure or schema

Yesterday’s Schemas: Hard but Fragile

The classic schema is the relational database table: rows of entities, e.g. people, and columns of different attributes (age or gender) of those entities. Typically stored in SQL statements, the schema also defines all the tables in the database and their relationship to each other.

Traditionally, schemas are strictly enforced. Incoming data that does not match the predefined attributes or data types is automatically rejected by the database, with a null value stored in its place or the entire record skipped completely. Changing schemas was difficult and rarely done. Companies carefully engineered their ETL data pipelines to align with their schemas (not vice-versa).

There were good reasons back in the day for pre-creating and strictly enforcing schemas. SQL queries were easier to write. They also ran a lot faster. Most importantly, rigid schemas prevented query errors created by bad or mismatched data.

However, strict, unchanging schemas have huge disadvantages today. First, there are many more sources and types of data than there were in the 90s. Many of them cannot easily fit into the same schema structure. Most notable are real-time event streams. Streaming and time-series data usually arrives in semi-structured formats that change frequently. As those formats change, so must the schemas.

Second, as business conditions change, companies continually need to analyze new data sources, run different types of analytics – or simply update their data types or labels.

Here’s an example. Back when I was on the data infrastructure team at Facebook, we were involved in an ambitious initiative called Project Nectar. Facebook’s user base was exploding. Nectar was an attempt to log every user action with a standard set of attributes. Standardizing this schema worldwide would enable us to analyze trends and spot anomalies on a global level. After much internal debate, our team agreed to store every user event in Hadoop using a timestamp in a column named time_spent that had a resolution of a second.

After debuting Project Nectar, we presented it to a new set of application developers. The first question they asked: “Can you change the column time-spent from seconds to milliseconds?” In other words, they casually asked us to rebuild a fundamental aspect of Nectar’s schema post-launch!

ETL pipelines can make all your data sources fit under the same proverbial roof (that’s what the T, which stands for data transformation, is all about). However, ETL pipelines are time-consuming and expensive to set up, operate, and manually update as your data sources and types evolve.

Attempts at Flexibility

Strict, unchanging schemas destroy agility, which all companies need today. Some database makers responded to this problem by making it easier for users to manually modify their schemas. There were heavy tradeoffs, though.

Changing schemas using the SQL ALTER-TABLE command takes a lot of time and processing power, leaving your database offline for an extended time. And once the schema is updated, there is a high risk of inadvertently corrupting your data and crippling your data pipeline.

Take PostgreSQL, the popular transactional database that many companies have also used for simple analytics. To properly ingest today’s fast-changing event streams, PostgreSQL must change its schema through a manual ALTER-TABLE command in SQL. This locks the database table and freezes all queries and transactions for as long as ALTER-TABLE takes to finish. According to many commentators, ALTER-TABLE takes a long time, whatever the size of your PostgreSQL table. It also requires a lot of CPU, and creates the risk of data errors and broken downstream applications.

The same problems face the NewSQL database, CockroachDB. CockroachDB promises online schema changes with zero downtime. However, Cockroach warns against doing more than one schema change at a time. It also strongly cautions against changing schemas during a transaction. And just like PostgreSQL, all schema changes in CockroachDB must be performed manually by the user. So CockroachDB’s schemas are far less flexible than they first appear. And the same risk of data errors and data downtime also exists.

NoSQL Comes to the Rescue ... Not

Other makers released NoSQL databases that greatly relaxed schemas or abandoned them altogether.

This radical design choice made NoSQL databases — document databases, key-value stores, column-oriented databases and graph databases — great at storing huge amounts of data of varying kinds together, whether it is structured, semi-structured or polymorphic.

Data lakes built on NoSQL databases such as Hadoop are the best example of scaled-out data repositories of mixed types. NoSQL databases are also fast at retrieving large amounts of data and running simple queries.

However, there are real disadvantages to lightweight/no-weight schema databases.

While lookups and simple queries can be fast and easy, queries that are complex. nested and must return precise answers tend to run slowly and be difficult to create. That’s due to the lack of SQL support, and their tendency to poorly support indexes and other query optimizations. Complex queries are even more likely to time out without returning results due to NoSQL’s overly-relaxed data consistency model. Fixing and rerunning the queries is a time-wasting hassle. And when it comes to the cloud and developers, that means wasted money.

Take the Hive analytics database that is part of the Hadoop stack. Hive does support flexible schemas, but crudely. When it encounters semi-structured data that does not fit neatly into its existing tables and databases, it simply stores the data as a JSON-like blob. This keeps the data intact. However, at query time, the blobs need to be deserialized first, a slow and inefficient process.

Or take Amazon DynamoDB, which uses a schemaless key-value store. DynamoDB is ultra-fast at reading specific records. Multi-record queries tend to be much slower, though building secondary indexes can help. The bigger issue is that DynamoDB does not support any JOINs or any other complex queries.

The Right Way to Strict and Flexible Schemas

There is a winning database formula, however, that blends the flexible scalability of NoSQL with the accuracy and reliability of SQL, while adding a dash of the low-ops simplicity of cloud-native infrastructure.

Rockset is a real-time analytics platform built on top of the RocksDB key-value store. Like other NoSQL databases, Rockset is highly scalable, flexible and fast at writing data. But like SQL relational databases, Rockset has the advantages of strict schemas: strong (but dynamic) data types and high data consistency, which, along with our automatic and efficient Converged Indexing™, combine to ensure your complex SQL queries are fast.

Rockset automatically generates schemas by inspecting data for fields and data types as it is stored. And Rockset can handle any type of data thrown at it, including:

- JSON data with deeply-nested arrays and objects, as well as mixed data types and sparse fields

- Real-time event streams that constantly add new fields over time

- New data types from new data sources

Supporting schemaless ingest along with Converged Indexing enables Rockset to reduce data latency by removing the need for upstream data transformations.

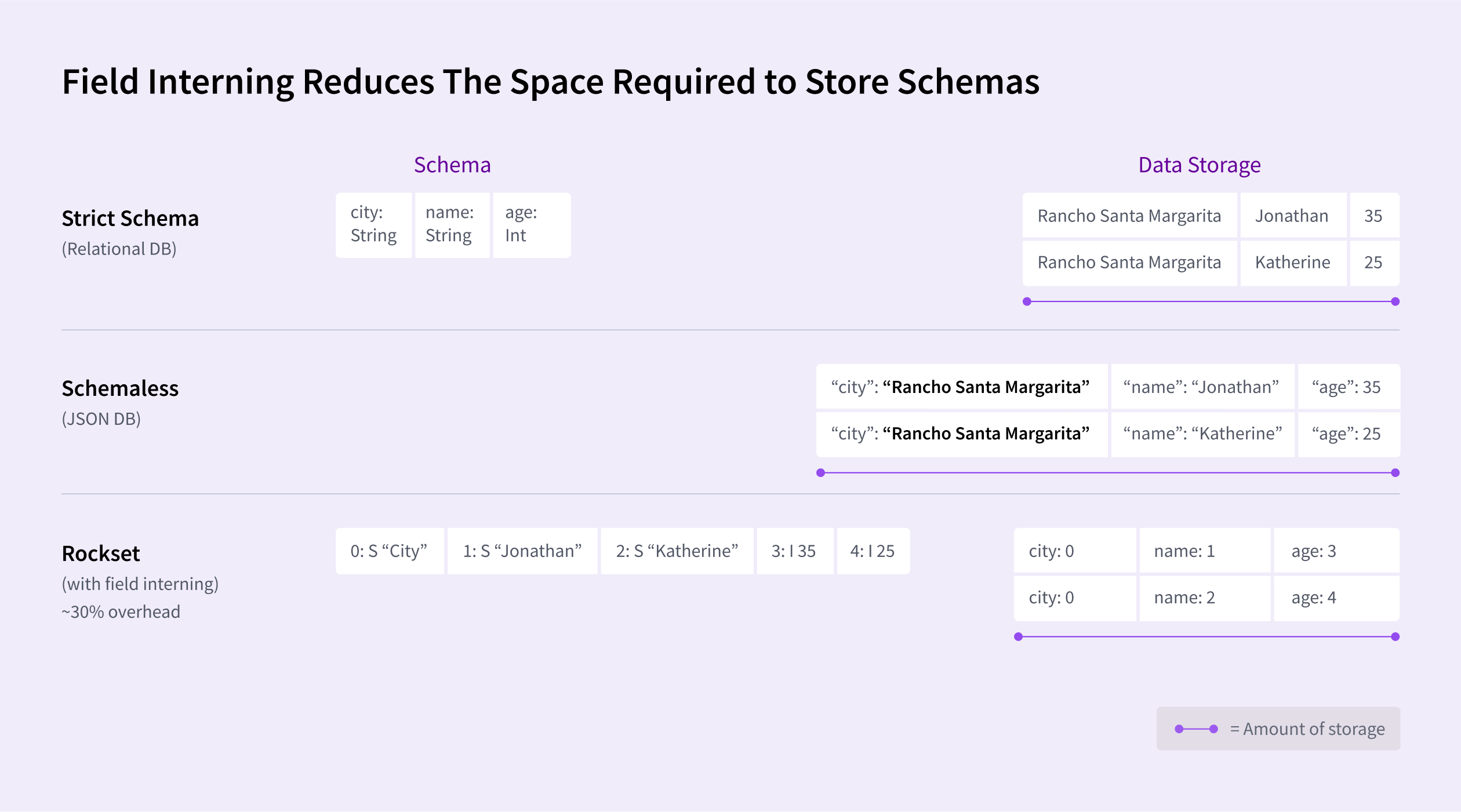

Rockset has other optimization features to reduce storage costs and accelerate queries. For every field of every record, Rockset stores the data type. This maximizes query performance and minimizes errors. And we do this efficiently through a feature called field interning that reduces the required storage by up to 30 percent compared to a schemaless JSON-based document database, for example.

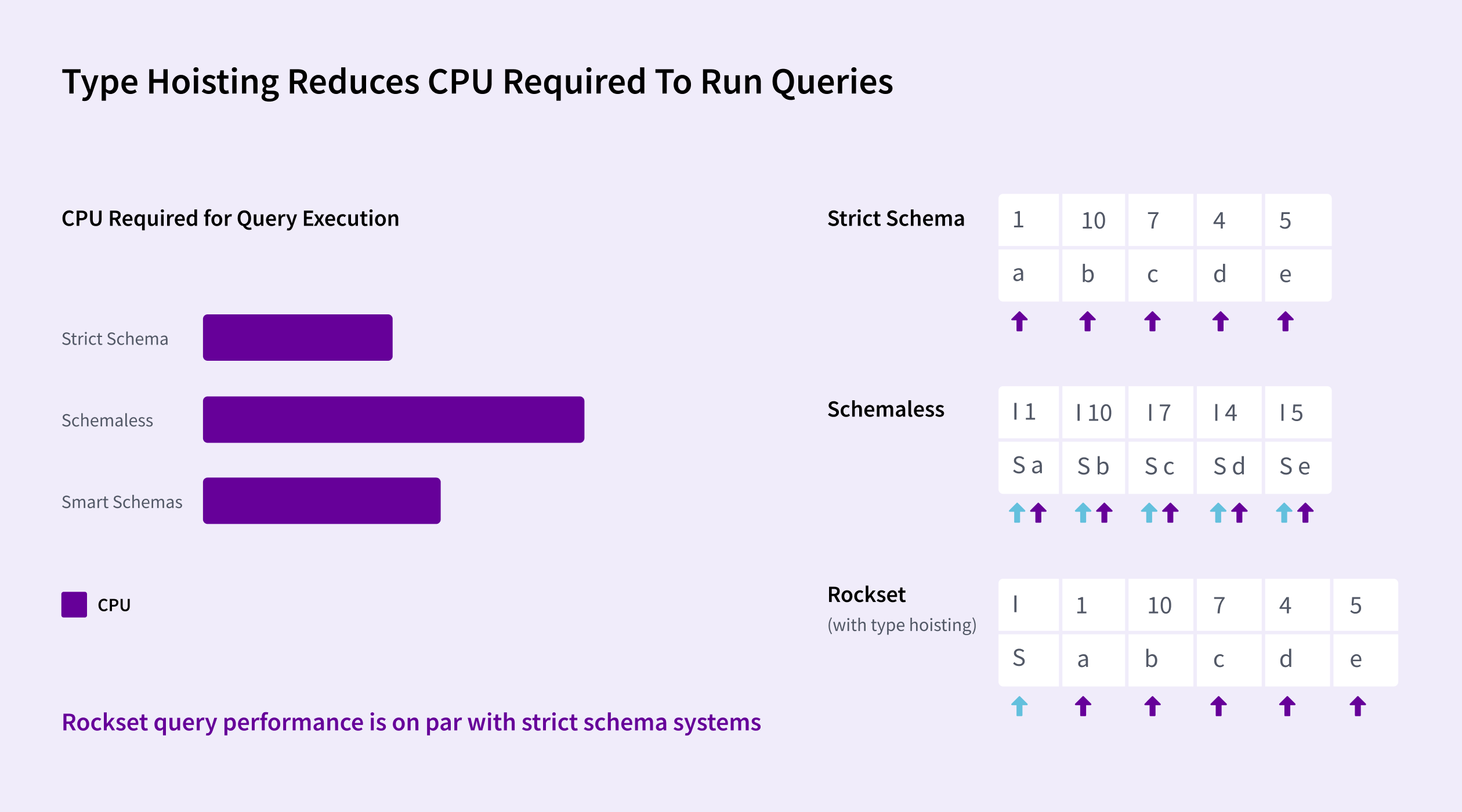

Rockset uses something called type hoisting that reduces processing time for queries. Adjacent items that have the same type can hoist their type information to apply to the entire set of items rather than storing with every individual item in the list. This enables vectorized CPU instructions to process the entire set of items quickly. This implementation – along with our Converged Index™ – enables Rockset queries to run as fast as databases with rigid schemas without incurring additional compute.

Some NoSQL database makers claim only they can support flexible schemas well. It's not true and is just one of many outdated data myths that modern offerings such as Rockset are busting.

I invite you to learn more about how Rockset’s architecture offers the best of traditional and modern — SQL and NoSQL — schemaless data ingestion with automatic schematization. This architecture fully empowers complex queries and will satisfy the requirements of the most demanding real-time data applications with surprising efficiency.