Handling Bursty Traffic in Real-Time Analytics Applications

May 12, 2022

This is the third post in a series by Rockset's CTO Dhruba Borthakur on Designing the Next Generation of Data Systems for Real-Time Analytics. We'll be publishing more posts in the series in the near future, so subscribe to our blog so you don't miss them!

Posts published so far in the series:

- Why Mutability Is Essential for Real-Time Data Analytics

- Handling Out-of-Order Data in Real-Time Analytics Applications

- Handling Bursty Traffic in Real-Time Analytics Applications

- SQL and Complex Queries Are Needed for Real-Time Analytics

- Why Real-Time Analytics Requires Both the Flexibility of NoSQL and Strict Schemas of SQL Systems

Developers, data engineers and site reliability engineers may disagree on many things, but one thing they can agree on is that bursty data traffic is almost unavoidable.

It’s well documented that web retail traffic can spike 10x during Black Friday. There are many other occasions where data traffic balloons suddenly. Halloween causes consumer social media apps to be inundated with photos. Major news events can set the markets afire with electronic trades. A meme can suddenly go viral among teenagers.

In the old days of batch analytics, bursts of data traffic were easier to manage. Executives didn’t expect reports more than once a week, nor did they expect dashboards to show up-to-the-minute data. Though some data sources like event streams were starting to arrive in real time, neither data nor queries were time sensitive. Databases could just buffer, ingest and query data on a regular schedule.

Moreover, analytical systems and pipelines were complementary, not mission-critical. Analytics wasn’t embedded into applications or used for day-to-day operations as it is today. Finally, you could always plan ahead for bursty traffic and overprovision your database clusters and pipelines. It was expensive, but it was safe.

Why Bursty Data Traffic Is an Issue Today

These conditions have completely flipped. Companies are rapidly transforming into digital enterprises in order to emulate disruptors such as Uber, Airbnb, Meta and others. Real-time analytics now drive their operations and bottom line, whether it is through a customer recommendation engine, an automated personalization system or an internal business observability platform. There’s no time to buffer data for leisurely ingestion. And because of the massive amounts of data involved today, overprovisioning can be financially ruinous for companies.

Many databases claim to deliver scalability on demand so that you can avoid expensive overprovisioning and keep your data-driven operations humming. Look more closely, and you’ll see these databases usually employ one of these two poor man’s solutions:

- Manual reconfigurations. Many systems require system administrators to manually deploy new configuration files to scale up databases. Scale-up cannot be triggered automatically through a rule or API call. That creates bottlenecks and delays that are unacceptable for real-time workloads.

- Offloading complex analytics onto data applications. Other databases claim their design provides immunity to bursty data traffic. Key-value and document databases are two good examples. Both are extremely fast at the simple tasks they are designed for (retrieving individual values or whole documents), and that speed is largely unaffected by bursts of data. However, these databases tend to sacrifice support for complex SQL queries at any scale. Instead, these database makers have offloaded complex analytics onto application code and the developers who write it, who have neither the skills nor the time to constantly rewrite and retune queries as data sets evolve. That kind of query optimization is something SQL databases excel at and do automatically.

Bursty data traffic also afflicts the many databases that are by default deployed in a balanced configuration or were not designed to segregate the tasks of compute and storage. Not separating ingest from queries means that each directly affects the other. Writing a large amount of data slows down your reads, and vice-versa.
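To make the contention concrete, here is a deliberately simplified model in Python. The function names and the "compute unit" numbers are invented for illustration; real systems contend over CPU, memory and I/O in far messier ways.

```python
def query_capacity_shared(total_units: int, ingest_demand: int) -> int:
    """Units left for queries when ingest and queries share one compute pool."""
    return max(total_units - ingest_demand, 0)


def query_capacity_separate(query_units: int, ingest_demand: int) -> int:
    """With a dedicated query pool, ingest demand leaves query capacity untouched."""
    return query_units


# Hypothetical numbers: a 100-unit shared cluster vs. a dedicated 50-unit query pool.
normal_ingest, burst_ingest = 20, 90

print(query_capacity_shared(100, normal_ingest))   # 80
print(query_capacity_shared(100, burst_ingest))    # 10
print(query_capacity_separate(50, burst_ingest))   # 50
```

In the shared pool, a write burst leaves queries with a fraction of their usual capacity. With separate pools, the same burst has no effect on the query side.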

This problem — potential slowdowns caused by contention between ingest and query compute — is common to many Apache Druid and Elasticsearch systems. It’s less of an issue with Snowflake, which avoids contention by scaling up both sides of the system. That’s an effective, albeit expensive, overprovisioning strategy.

Database makers have experimented with different designs to scale for bursts of data traffic without sacrificing speed, features or cost. It turns out there is a cost-effective and performant way and a costly, inefficient way.

Lambda Architecture: Too Many Compromises

A decade ago, a multitiered database architecture called Lambda began to emerge. Lambda systems try to accommodate the needs of both big data-focused data scientists and streaming-focused developers by separating data ingestion into two layers. One layer processes batches of historic data. Hadoop was initially used but has since been replaced by Snowflake, Redshift and other databases.

There is also a speed layer typically built around a stream-processing technology such as Amazon Kinesis or Spark. It provides instant views of the real-time data. The serving layer — often MongoDB, Elasticsearch or Cassandra — then delivers those results to both dashboards and users’ ad hoc queries.

When systems are created out of compromise, so are their features. Maintaining two data processing paths creates extra work for developers who must write and maintain two versions of code, as well as greater risk of data errors. Developers and data scientists also have little control over the streaming and batch data pipelines.

Finally, most of the data processing in Lambda happens as new data is written to the system. The serving layer is a simpler key-value or document lookup that does not handle complex transformations or queries. Instead, data-application developers must handle all the work of applying new transformations and modifying queries. Not very agile. With these problems and more, it’s no wonder that the calls to “kill Lambda” keep increasing year over year.
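The duplication described above can be sketched in a few lines of Python. This is a toy, in-memory illustration and not any vendor's implementation; the event shape and the function names (`batch_view`, `speed_view`, `serve`) are assumptions made for the example.

```python
from collections import Counter


def batch_view(historic_events):
    """Batch layer: recompute page-view counts over all historic events."""
    return Counter(event["page"] for event in historic_events)


def speed_view(recent_events):
    """Speed layer: the same counting logic, written and maintained a second time."""
    counts = Counter()
    for event in recent_events:
        counts[event["page"]] += 1
    return counts


def serve(batch, speed, page):
    """Serving layer: a plain lookup that merges the two precomputed views."""
    return batch.get(page, 0) + speed.get(page, 0)


historic = [{"page": "home"}, {"page": "home"}, {"page": "cart"}]
recent = [{"page": "home"}]

print(serve(batch_view(historic), speed_view(recent), "home"))  # 3
```

Any change to the metric, such as counting unique visitors instead of page views, has to be made in both `batch_view` and `speed_view`, and the serving layer can only answer the questions those views were built for.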

ALT: The Best Architecture for Bursty Traffic

There is an elegant solution to the problem of bursty data traffic.

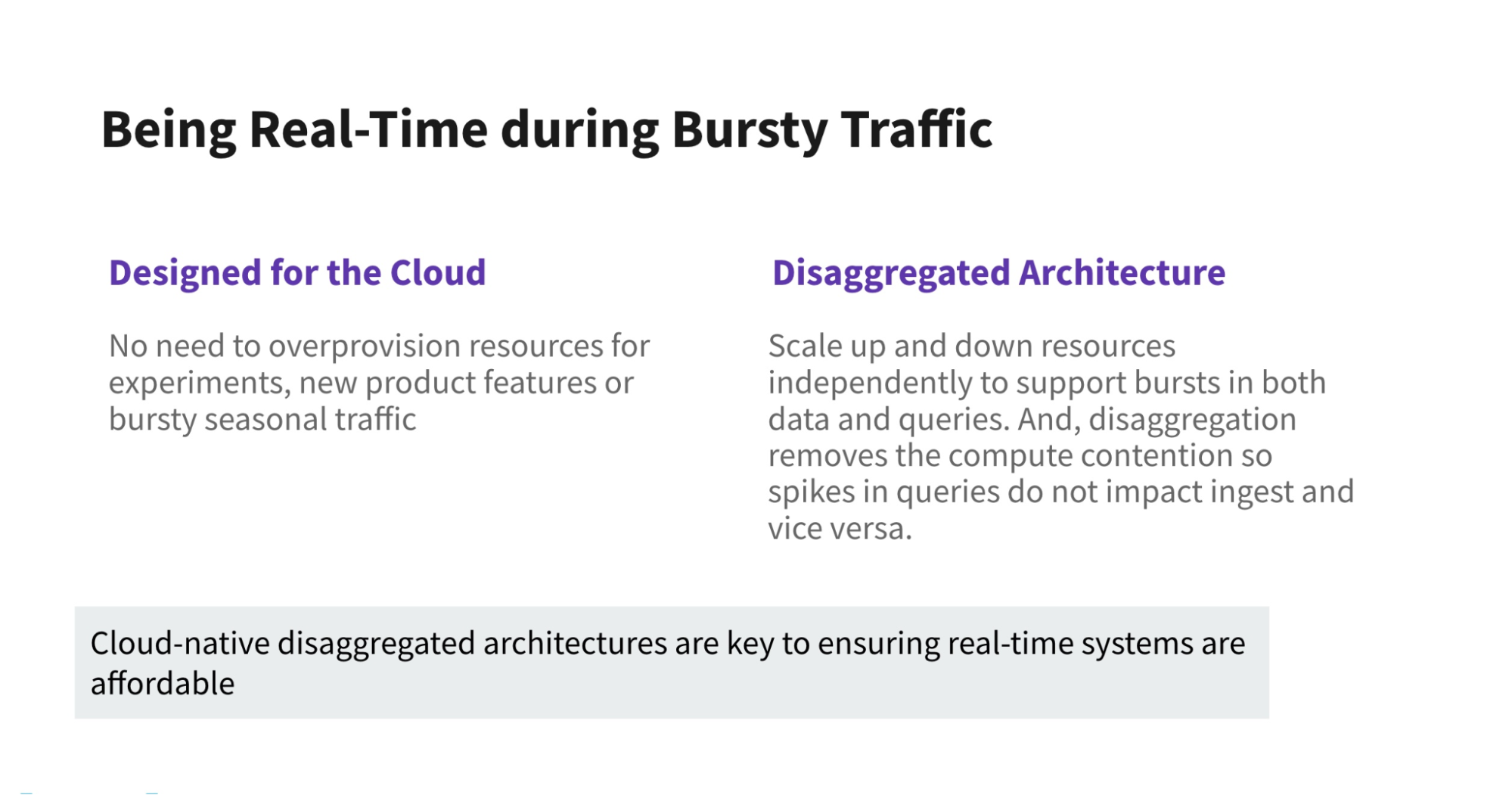

To efficiently scale to handle bursty traffic in real time, a database should separate the functions of storing and analyzing data. Such a disaggregated architecture enables ingestion or queries to scale up and down as needed. This design also removes the bottlenecks created by compute contention, so spikes in queries don’t slow down data writes, and vice-versa. Finally, the database must be cloud native, so all scaling is automatic and hidden from developers and users. No need to overprovision in advance.

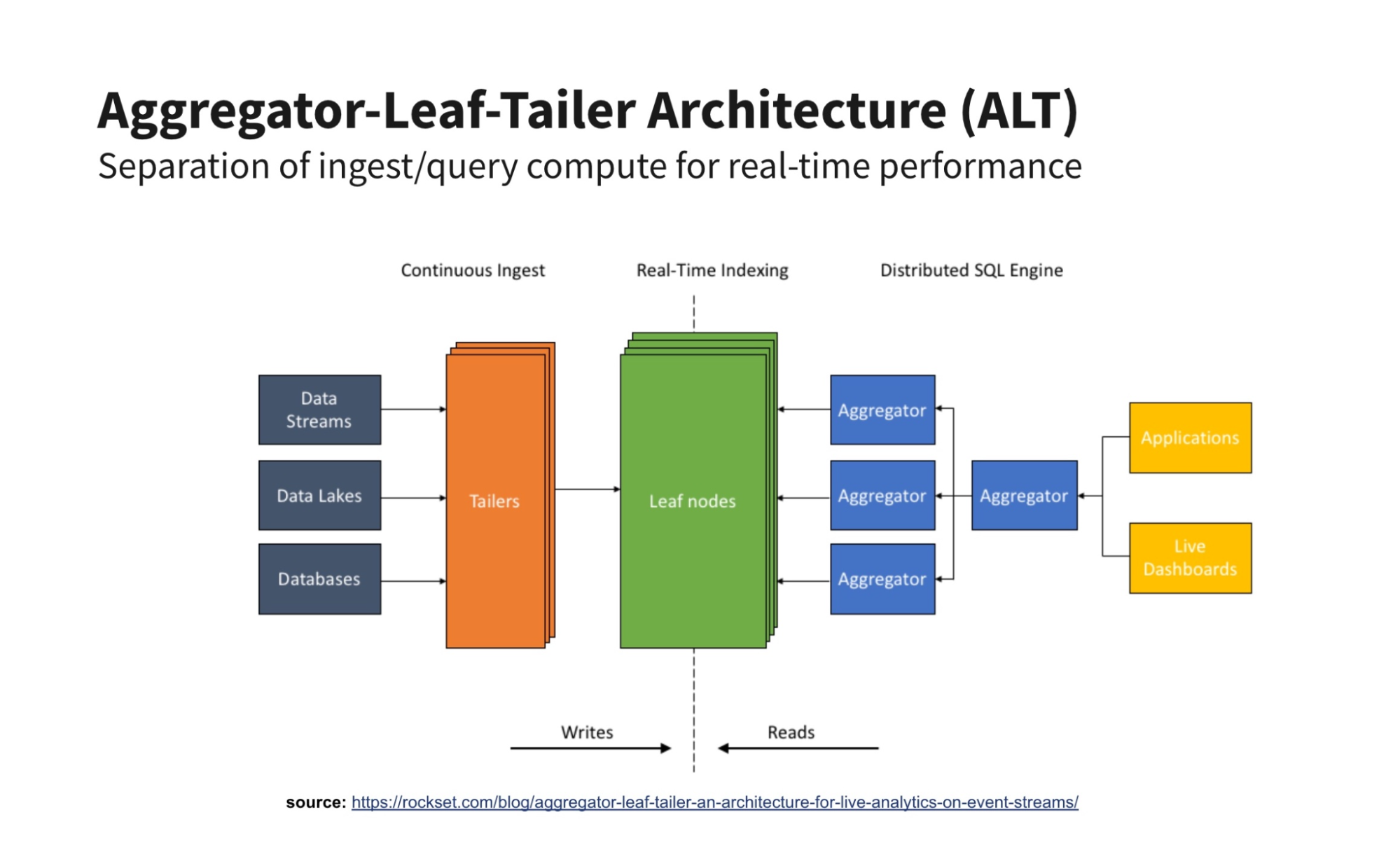

Such a serverless real-time architecture exists and it’s called Aggregator-Leaf-Tailer (ALT) for the way it separates the jobs of fetching, indexing and querying data.
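As a rough mental model of the three roles, here is a minimal in-memory sketch in Python. It is not Rockset's implementation: the class and method names, the record shape and the hash-based routing are all assumptions chosen to keep the example short.

```python
import zlib
from collections import defaultdict


class Leaf:
    """Stores documents and indexes them (here: a simple index on 'user')."""

    def __init__(self):
        self.docs = []
        self.index = defaultdict(list)

    def add(self, doc):
        self.index[doc["user"]].append(len(self.docs))
        self.docs.append(doc)

    def lookup(self, user):
        return [self.docs[i] for i in self.index.get(user, [])]


class Tailer:
    """Fetches new records from a source and routes each one to a leaf."""

    def __init__(self, leaves):
        self.leaves = leaves

    def ingest(self, records):
        for record in records:
            shard = zlib.crc32(record["user"].encode()) % len(self.leaves)
            self.leaves[shard].add(record)


class Aggregator:
    """Answers queries by fanning out to the leaves and combining results."""

    def __init__(self, leaves):
        self.leaves = leaves

    def total_spend(self, user):
        return sum(d["amount"] for leaf in self.leaves for d in leaf.lookup(user))


leaves = [Leaf(), Leaf()]
Tailer(leaves).ingest([
    {"user": "ana", "amount": 5},
    {"user": "bo", "amount": 7},
    {"user": "ana", "amount": 3},
])
print(Aggregator(leaves).total_spend("ana"))  # 8
```

Because the tailer, leaf and aggregator are separate components, each tier could in principle be given more or fewer workers without touching the other two.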

Like cruise control on a car, an ALT architecture can easily maintain ingest speeds if queries suddenly spike, and vice-versa. And like cruise control, those ingest and query speeds can scale upward independently based on application rules, not manual server reconfigurations. With both of those features, there’s no potential for contention-caused slowdowns, nor any need to overprovision your system in advance. ALT architectures provide the best price performance for real-time analytics.
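A rule-based scaling policy of this kind might look like the sketch below. The function name, the per-node capacities and the node limits are hypothetical and do not reflect any particular product's API. The point is only that the same rule is evaluated separately for the ingest tier and the query tier.

```python
import math


def desired_nodes(load, per_node_capacity, min_nodes=1, max_nodes=64):
    """Nodes needed to serve `load`, clamped to the range [min_nodes, max_nodes]."""
    needed = math.ceil(load / per_node_capacity)
    return max(min_nodes, min(needed, max_nodes))


# Hypothetical capacities: 5,000 writes/s per ingest node, 200 queries/s per query node.
ingest_nodes = desired_nodes(load=100_000, per_node_capacity=5_000)  # write burst
query_nodes = desired_nodes(load=400, per_node_capacity=200)         # steady queries

print(ingest_nodes, query_nodes)  # 20 2
```

Here a write burst grows the ingest tier to 20 nodes while the query tier stays at 2, so the system pays only for the side that is actually under load.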

I witnessed the power of ALT firsthand at Facebook (now Meta) when I was on the team that brought the News Feed (now renamed Feed) — the updates from all of your friends — from an hourly update schedule into real time. Similarly, when LinkedIn upgraded its real-time FollowFeed to an ALT data architecture, it boosted query speeds and data retention while slashing the number of servers needed by half. Google and other web-scale companies also use ALT. For more details, read my blog post on ALT and why it beats the Lambda architecture for real-time analytics.

Companies don’t need to be overstaffed with data engineers like the ones above to deploy ALT. Rockset provides a real-time analytics database in the cloud built around the ALT architecture. Our database lets companies easily handle bursty data traffic for their real-time analytical workloads, as well as solve other key real-time issues such as mutable and out-of-order data, low-latency queries, flexible schemas and more.

If you are picking a system for serving data in real time for applications, evaluate whether it implements the ALT architecture so that it can handle bursty traffic wherever it comes from.

Dhruba Borthakur is CTO and co-founder of Rockset and is responsible for the company's technical direction. He was an engineer on the database team at Facebook, where he was the founding engineer of the RocksDB data store. Earlier at Yahoo, he was one of the founding engineers of the Hadoop Distributed File System. He was also a contributor to the open source Apache HBase project.

Rockset is the leading real-time analytics platform built for the cloud, delivering fast analytics on real-time data with surprising efficiency. Learn more at rockset.com.