Handling Slow Queries in MongoDB - Part 2: Solutions

August 25, 2020

In Part One, we discussed how to first identify slow queries on MongoDB using the database profiler, and then investigated what the strategies the database took doing during the execution of those queries to understand why our queries were taking the time and resources that they were taking. In this blog post, we’ll discuss several other targeted strategies that we can use to speed up those problematic queries when the right circumstances are present.

Avoiding Collection Scans using User-Defined Read Indexes

When operating at scale, most primary production databases cannot afford any collection scans at all unless the QPS is very low or the collection size itself is small. If you found during your investigation in Part One that your queries are being slowed down by unnecessary collection scans, you may want to consider using user-defined indexes in MongoDB.

Just like relational databases, NoSQL databases like MongoDB also utilize indexes to speed up queries. Indexes store a small portion of each collection’s data set into separate traversable data structures. These indexes then enable your queries to perform at faster speeds by minimizing the number of disk accesses required with each request.

When you know the queries ahead of time that you’re looking to speed up, you can create indexes from within MongoDB on the fields which you need faster access to. With just a few simple commands, MongoDB will automatically sort these fields into separate entries to optimize your query lookups.

To create an index in MongoDB, simply use the following syntax:

db.collection.createIndex( <key and index type specification>, <options> )

For instance, the following command would create a single field index on the field color:

db.collection.createIndex( { color: -1 } )

MongoDB offers several index types optimized for various query lookups and data types:

- Single Field Indexes are used to a index single field in a document

- Compound Field Indexes are used to index multiple fields in a document

- Multikey Indexes are used to index the content stored in arrays

- Geospatial Indexes are used to efficiently index geospatial coordinate data

- Text Indexes are used to efficiently index string content in a collection

- Hashed Indexes are used to index the hash values of specific fields to support hash-based sharding

While indexes can speed up with certain queries tremendously, they also come with tradeoffs. Indexes use memory, and adding too many will cause the working set to no longer fit inside memory, which will actually tank the performance of the cluster. Thus, you always want to ensure you’re indexing just enough, but not too much.

For more details, be sure to check out our other blog post on Indexing on MongoDB using Rockset!

Avoiding Document Scans Entirely using Covered Queries

If you found during your investigation that your queries are scanning an unusually high number of documents, you may want to look into whether or not a query can be satisfied without scanning any documents at all using index-only scan(s). When this occurs, we say that the index has “covered” this query since we no longer need to do any more work to complete this query. Such queries are known as covered queries, and are only possible if and only if all of these two requirements are satisfied:

- Every field the query needs to access is part of an index

- Every field returned by this query is in the same index

Furthermore, MongoDB has the following restrictions which prevent indexes from fully covering queries:

- No field in the covering index is an array

- No field in the covering index is a sub-document

- The _id field cannot be returned by this query

For instance, let’s say we have a collection rocks which has a multikey index on two fields, color and type:

db.rocks.createIndex({ color: 1, type: 1 })

Then, if try to find the types of rocks for a particular color, that query would be “covered” by the above index:

db.users.find({ color: "black" }, { type: 1, _id: 0 })

Let’s take a deeper look at what the database is doing using the EXPLAIN method we learned about during the investigation phase.

Using a basic query without a covering index with a single document, the following executionStats are returned:

"executionStats" : {

"executionSuccess" : true,

"nReturned" : 1,

"executionTimeMillis" : 0,

"totalKeysExamined" : 1,

"totalDocsExamined" : 1

}

Using our covered query, however, the following executionStats are returned:

"executionStats" : {

"executionSuccess" : true,

"nReturned" : 1,

"executionTimeMillis" : 0,

"totalKeysExamined" : 1,

"totalDocsExamined" : 0

}

Note that the number of documents scanned changed to 0 in the covered query – this performance improvement was made possible due to the index we created earlier which contained all the data we needed (thereby “covering” the query). Thus, MongoDB did not need to scan any collection documents at all. Tweaking your indexes and queries to allow for such cases can significantly improve query performance.

Avoiding Application-Level JOINs using Denormalization

NoSQL databases like MongoDB are often structured without a schema to make writes convenient, and it’s a key part what also makes them so unique and popular. However, the lack of a schema can dramatically slows down reads, causing problems with query performance as your application scales.

For instance, one of the most commonly well-known drawbacks of using a NoSQL database like MongoDB is the lack of support for database-level JOINs. If any of your queries are joining data across multiple collections in MongoDB, you’re likely doing it at the application level. This, however, is tremendously costly since you have to transfer all the data from the tables involved into your application before you can perform the operation.

Increasing Read Performance by Denormalizing Your Data

When you are storing relational data in multiple collections in MongoDB which requires multiple queries to retrieve the data you need, you can denormalize it to increase read performance. Denormalization is the process by which we trade write performance for read performance by embedding data from one collection into another, either by making a copy of certain fields or by moving it entirely.

For instance, let’s say you have the following two collections for employees and companies:

{

"email" : "john@example.com",

"name" : "John Smith",

"company" : "Google"

},

{

"email" : "mary@example.com",

"name" : "Mary Adams",

"company" : "Microsoft"

},

...

{

"name" : "Google",

"stock" : "GOOGL",

"location" : "Mountain View, CA"

},

{

"name" : "Microsoft",

"stock" : "MSFT",

"location" : "Redmond, WA"

},

...

Instead of trying to query the data from both collections using an application-level JOIN, we can instead embed the companies collection inside the employees collection:

{

"email" : "john@example.com",

"name" : "John Smith",

"company" : {

"name": "Google",

"stock" : "GOOGL",

"location" : "Mountain View, CA"

}

},

{

"email" : "mary@example.com",

"name" : "Mary Adams",

"company" : {

"name" : "Microsoft",

"stock" : "MSFT",

"location" : "Redmond, WA"

}

},

...

Now that all of our data is already stored in one place, we can simply query the employees collection a single time to retrieve everything we need, avoiding the need to do any JOINs entirely.

As we noted earlier, while denormalizing your data does increase read performance, it does not come without its drawbacks either. An immediate drawback would be that we are potentially increasing storage costs significantly by having to keep a redundant copies of the data. In our previous example, every single employee would now have the full company data embedded inside its document, causing an exponential increase in storage size. Furthermore, our write performance would be severely affected – for instance, if we wanted to change the location field of a company that moved its headquarters, we’d now have to go through every single document in our employees collection to update its company’s location.

What about MongoDB’s $lookup operator?

To help tackle its lack of support for JOINs, MongoDB added a new operator called $lookup in the release for MongoDB 3.2. The $lookup operator is an aggregation pipeline operator which performs a left outer join to an unsharded collection in the same database to filter in documents from the “joined” collection for processing. The syntax is as follows:

{

$lookup:

{

from: <collection to join>,

localField: <field from the input documents>,

foreignField: <field from the documents of the "from" collection>,

as: <output array field>

}

}

For instance, let’s take a look at our previous example again for the two collections employees and companies:

{

"email" : "john@example.com",

"name" : "John Smith",

"company" : "Google"

},

{

"email" : "mary@example.com",

"name" : "Mary Adams",

"company" : "Microsoft"

},

...

{

"name" : "Google",

"stock" : "GOOGL",

"location" : "Mountain View, CA"

},

{

"name" : "Microsoft",

"stock" : "MSFT",

"location" : "Redmond, WA"

},

...

You could then run the following command to join the tables together:

db.employees.aggregate([{

$lookup: {

from: "companies",

localField: "company",

foreignField: "name",

as: "employer"

}

}])

The query would return the following:

{

"email" : "john@example.com",

"name" : "John Smith",

"company" : "Google"

"employer": {

"name" : "Microsoft",

"stock" : "GOOGL",

"location" : "Mountain View, CA"

}

},

{

"email" : "mary@example.com",

"name" : "Mary Adams",

"company" : "Microsoft"

"employer": {

"name" : "Microsoft",

"stock" : "MSFT",

"location" : "Redmond, WA"

}

},

...

While this helps to alleviate some of the pain of performing JOINs on MongoDB collections, it is far from a complete solution with some notoriously well-known drawbacks. Most notably, its performance is significantly worse than JOINs in SQL databases like Postgres, and almost always requires an index to support each JOIN. In addition, even minor changes in your data or aggregation requirements can cause you to have to heavily rewrite the application logic.

Finally, even at peak performance, the functionality is simply very limited – the $lookup operator only allows you to perform left outer joins, and cannot be used on sharded collections. It also cannot work directly with arrays, meaning that you would have to a separate operator in the aggregation pipeline to first unnest any nested fields. As MongoDB’s CTO Eliot Horowitz wrote during its release, “we’re still concerned that $lookup can be misused to treat MongoDB like a relational database.” At the end of the day, MongoDB is still a document-based NoSQL database, and is not optimized for relational data at scale.

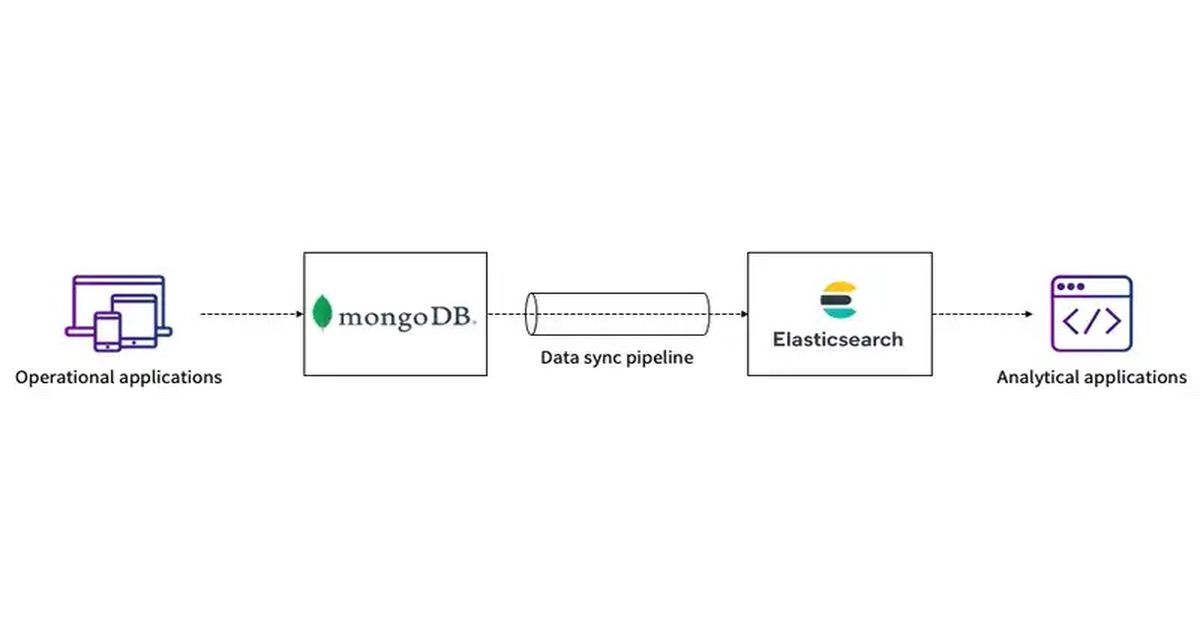

Speed Up Queries and Perform Fast JOINs using External Indexes

If you’ve tried all the internal optimizations you can think of within MongoDB and your queries are still too slow, it may be time for an external index. Using an external index, your data can be indexes and queried from an entirely separate database with a completely different set of strengths and limitations. External indexes are tremendously helpful for not only decreasing load on your primary OLTP databases, but also to perform certain complex queries that are not ideal on a NoSQL database like MongoDB (such as aggregation pipelines using $lookup and $unwind operators), but may be ideal when executed in the chosen external index.

Exceed Performance Limitations using Rockset as an External Index

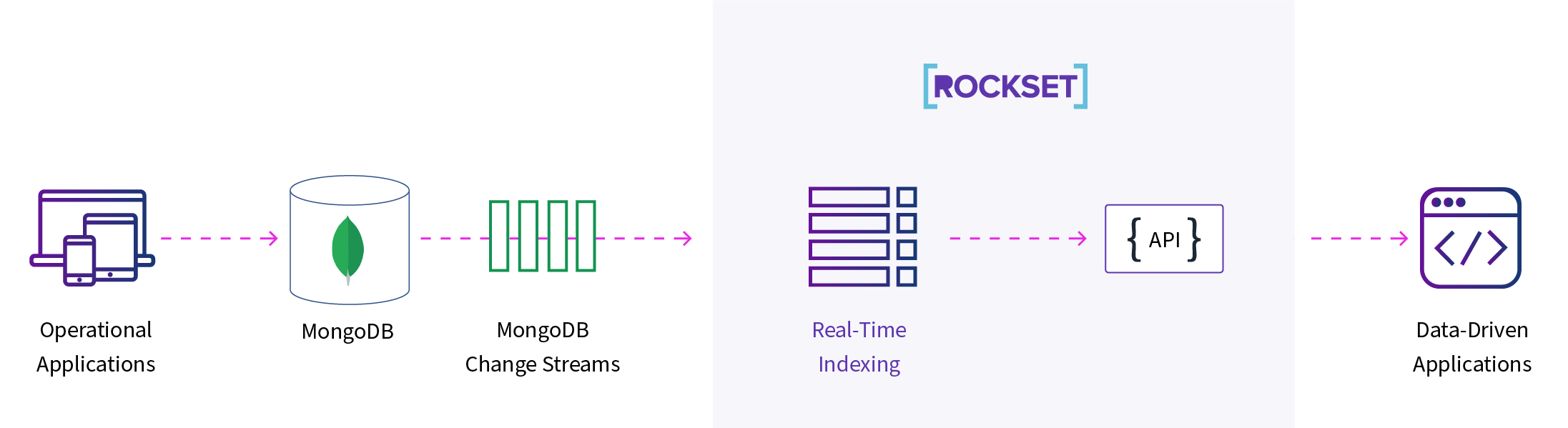

Here at Rockset, we’ve partnered with MongoDB and built a fully managed connector with our real-time indexing technology that enables you to perform fast JOINs and aggregations at scale. Rockset is a real-time serverless database which can be used as a speed layer on top of MongoDB Atlas, allowing you to perform SQL aggregations and JOINs in real-time.

Using our MongoDB integration, you can get set up in minutes – simply click and connect Rockset with your MongoDB collections by enabling proper read permissions, and the rest is automatically done for you. Rockset will then sync your data into our real-time database using our schemaless ingest technology, and then automatically create indexes for you on every single field in your collection, including nested fields. Furthermore, Rockset will also automatically stay up-to-date with your MongoDB collections by syncing within seconds anytime you update your data.

Once your data is in Rockset, you will have access to Rockset’s Converged Index™ technology and query optimizer. This means that Rockset enables full SQL support including fast search, aggregations, and JOIN queries at scale. Rockset is purpose-built for complex aggregations and JOINs on nested data, with no restrictions on covering indexes. Furthermore, you will also get faster queries using Rockset’s disaggregated Aggregator-Leaf-Tailer Architecture enabling real-time performance for both ingesting and querying.

Enable Full SQL Support for Aggregations and JOINs on MongoDB

Let’s re-examine our example earlier where we used the $lookup aggregation pipeline operator in MongoDB to simulate a SQL LEFT OUTER JOIN. We used this command to perform the join:

db.employees.aggregate([{

$lookup: {

from: "companies",

localField: "company",

foreignField: "name",

as: "employer"

}

}])

With full SQL support in Rockset, you can simply use your familiar SQL syntax to perform the same join:

SELECT

e.email,

e.name,

e.company AS employer,

e.stock,

e.location

FROM

employees e

LEFT JOIN

companies c

ON e.company = c.name;

Let’s look at another example aggregation in MongoDB where we GROUP by two fields, COUNT the total number of relevant rows, and then SORT the results:

db.rocks.aggregate([{

"$group": {

_id: {

color: "$color",

type: "$type"

},

count: { $sum: 1 }

}}, {

$sort: { "_id.type": 1 }

}])

The same command can be performed in Rockset using the following SQL syntax:

SELECT

color,

type,

COUNT(*)

FROM

rocks

GROUP BY

color,

type

ORDER BY

type;

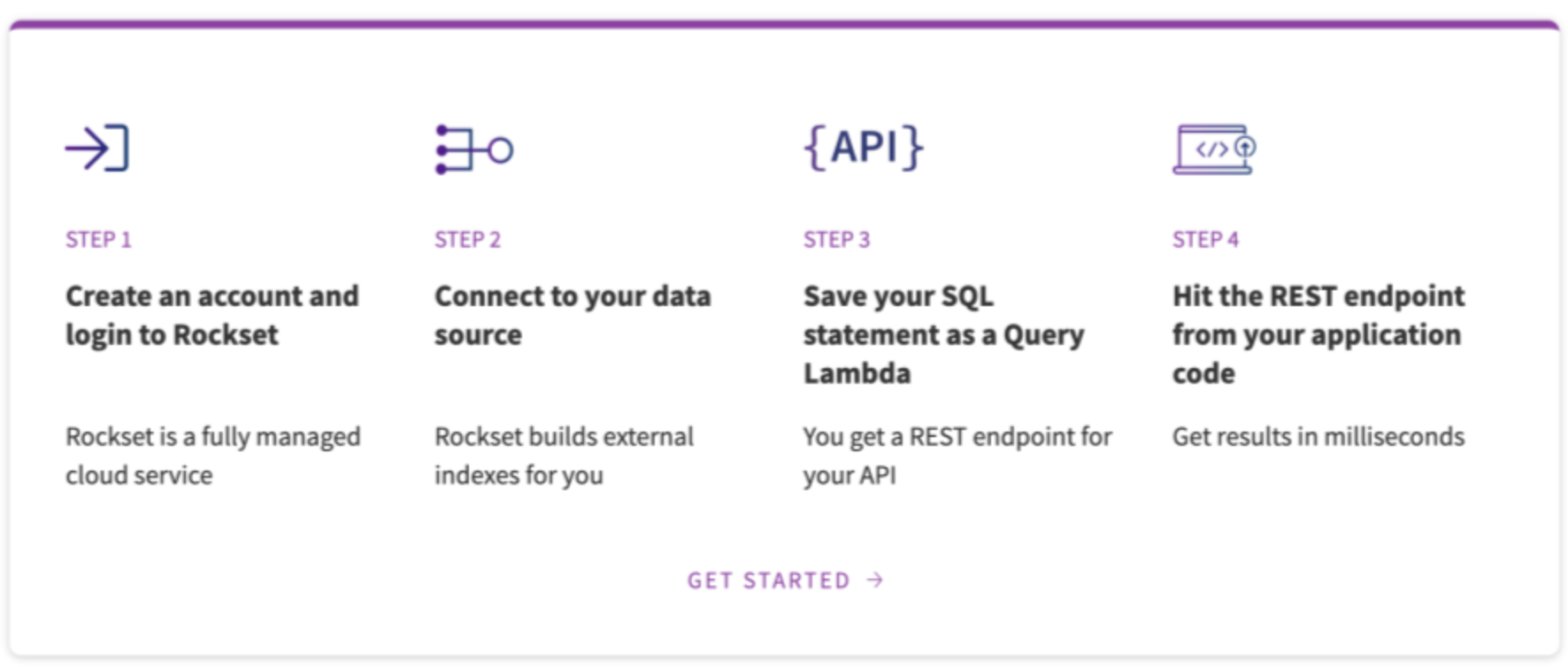

Getting Started with Rockset on MongoDB

Decrease load on your primary MongoDB instance by offloading expensive operations to Rockset, while also enabling significantly faster queries. On top of this, you can even integrate Rockset with data sources outside of MongoDB (including data lakes like S3/GCS and data streams like Kafka/Kinesis) to join your data together from multiple external sources and query them at once.

If you’re interested in learning more, be sure to check out our full MongoDB.live session where we go into exactly how Rockset continuously indexes your data from MongoDB. You can also view our tech talk on Scaling MongoDB to hear about additional strategies for maintaining performance at scale. And whenever you’re ready to try it out yourself, watch our step-by-step walkthrough and then create your Rockset account!

| Learn more about using MongoDB with Rockset | ||

|

|

|

| Using Elasticsearch to Offload Real-Time Analytics from MongoDB | Real-Time Analytics on MongoDB: The Ultimate Guide | Handling Slow Queries in MongoDB - Part 1: Investigation |