Rockset Enhances Kafka Integration to Simplify Real-Time Analytics on Streaming Data

September 15, 2021

We’re introducing a new Rockset Integration for Apache Kafka that offers native support for Confluent Cloud and Apache Kafka, making it simpler and faster to ingest streaming data for real-time analytics. This new integration comes on the heels of several new product features that make Rockset more affordable and accessible for real-time analytics, including SQL-based rollups and transformations.

With the Kafka Integration, users no longer need to build, deploy or operate any infrastructure component on the Kafka side. Here’s how Rockset is making it easier to ingest event data from Kafka with this new integration:

- It is managed entirely by Rockset and can be set up with just a few clicks, in keeping with our philosophy of making real-time analytics accessible.

- The integration is continuous so any new data in the Kafka topic will get indexed in Rockset, delivering an end-to-end data latency of 2 seconds.

- The integration is pull-based, ensuring that data can be reliably ingested even in the face of bursty writes, and it requires no tuning on the Kafka side.

- There is no need to pre-create a schema to run real-time analytics on event streams from Kafka. Rockset indexes the entire data stream so when new fields are added, they are immediately exposed and made queryable using SQL.

- We’ve also enabled the ingest of both historical and real-time streams so that customers can access a 360-degree view of their data, a common real-time analytics use case.

In this blog, we explain how the Kafka Integration, with native support for Confluent Cloud and Apache Kafka, works and walk through how to run real-time analytics on event streams from Kafka.

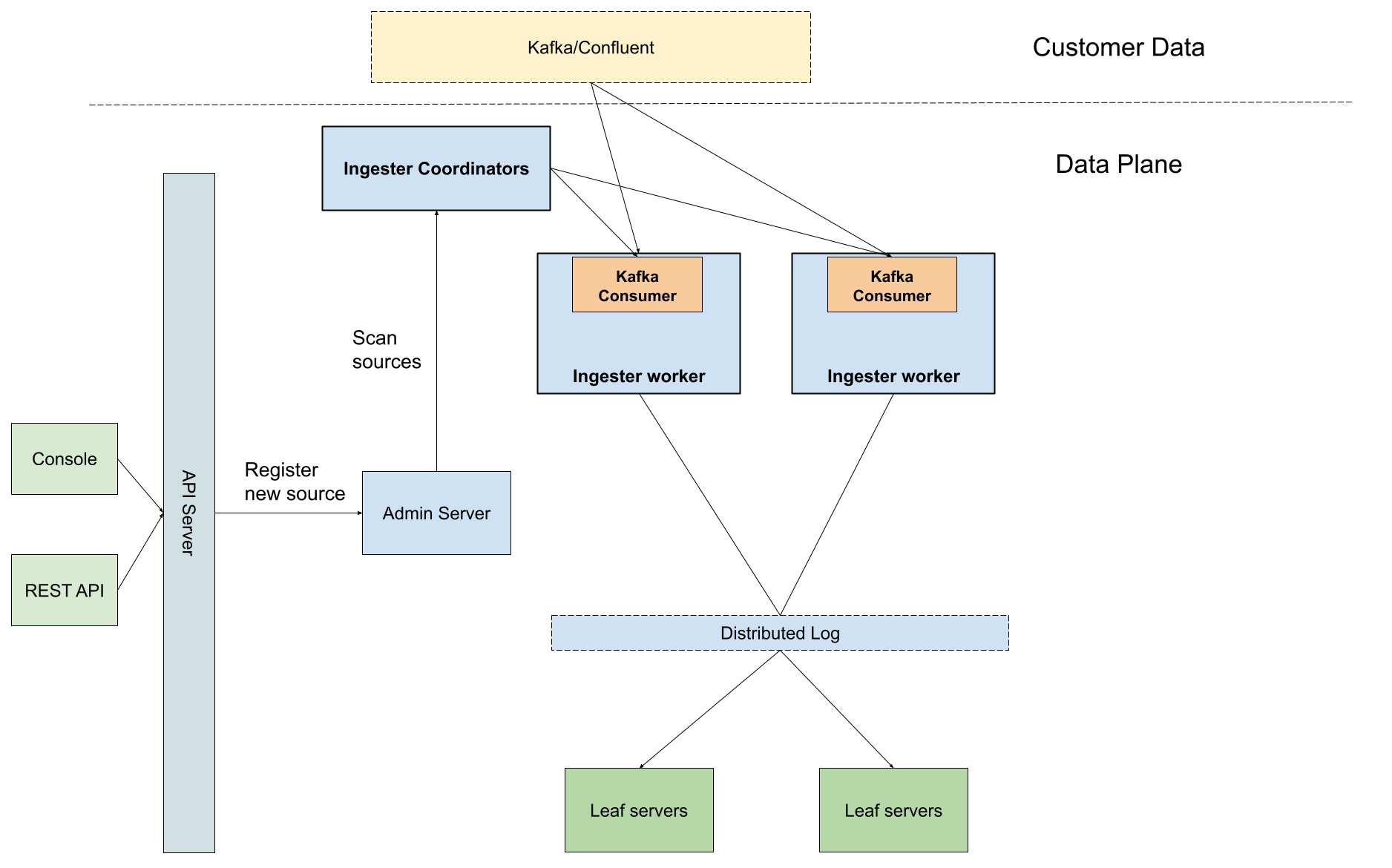

A Quick Dip Under the Hood

The new Kafka Integration adopts the Kafka Consumer API, which is a low-level, vanilla Java library that can be easily embedded into applications to tail data from a Kafka topic in real time.

There are two Kafka consumer modes:

- subscription mode, where a group of consumers collaborate in tailing a common set of Kafka topics in a dynamic way, relying on Kafka brokers to provide rebalancing, checkpointing, failure recovery, etc.

- assign mode, where each individual consumer specifies assigned topic partitions and manages the progress checkpointing manually

Rockset adopts the assign mode because we have already built a general-purpose tailer framework based on the Aggregator Leaf Tailer Architecture (ALT) to handle the heavy lifting, such as progress checkpointing and common failure cases. The consumption offsets are completely managed by Rockset, without saving any information inside the user's cluster. During initialization, each ingestion worker receives its own topic partition assignment and last processed offsets from the ingestion coordinator, and then uses the embedded consumer to fetch Kafka topic data.
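To make the assign-mode flow more concrete, here is a minimal Python sketch of the idea. It is a toy model and not Rockset's implementation or the Kafka client API: the in-memory `TOPIC`, the `Coordinator` and `Worker` classes, and the batch size are all hypothetical names invented for illustration. It only shows the shape of the design, where the coordinator owns the checkpoints and a worker reads from explicitly assigned partitions.

```python
from dataclasses import dataclass, field

# Toy in-memory stand-in for a Kafka topic: partition id -> ordered records.
TOPIC = {
    0: ["a0", "a1", "a2"],
    1: ["b0", "b1"],
}


@dataclass
class Coordinator:
    """Hypothetical coordinator that stores checkpoints outside of Kafka."""
    checkpoints: dict = field(default_factory=dict)  # partition -> next offset

    def assignment_for(self, partitions):
        # Hand a worker its partitions plus the offset to resume from.
        return {p: self.checkpoints.get(p, 0) for p in partitions}

    def commit(self, partition, next_offset):
        self.checkpoints[partition] = next_offset


class Worker:
    """Hypothetical worker that reads assigned partitions (no group subscription)."""

    def __init__(self, coordinator, partitions):
        self.coordinator = coordinator
        self.positions = coordinator.assignment_for(partitions)
        self.indexed = []

    def poll_once(self, max_records=2):
        for partition, offset in list(self.positions.items()):
            batch = TOPIC[partition][offset:offset + max_records]
            self.indexed.extend(batch)  # stand-in for indexing the records
            next_offset = offset + len(batch)
            self.positions[partition] = next_offset
            self.coordinator.commit(partition, next_offset)


coordinator = Coordinator()
worker = Worker(coordinator, partitions=[0, 1])
worker.poll_once()

# Simulate a worker failure: a replacement resumes from the stored checkpoints.
replacement = Worker(coordinator, partitions=[0, 1])
replacement.poll_once()

print(worker.indexed)           # ['a0', 'a1', 'b0', 'b1']
print(replacement.indexed)      # ['a2']
print(coordinator.checkpoints)  # {0: 3, 1: 2}
```

Because the checkpoints live with the coordinator in this sketch, the replacement worker picks up exactly where the first one stopped and nothing is written back to the Kafka cluster.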

The above diagram shows how the Kafka consumer is embedded into the Rockset tailer framework. A customer creates a new Kafka collection through the API server endpoint and Rockset stores the collection metadata inside the admin server. Rockset’s ingestion coordinator is notified of new sources. When a new Kafka source is spotted, the coordinator spawns a reasonable number of worker tasks equipped with Kafka consumers to start fetching data from the customer's Kafka topic.

Kafka and Rockset for Real-Time Analytics

As soon as event data lands in Kafka, Rockset automatically indexes it for sub-second SQL queries. You can search, aggregate and join data across Kafka topics and other data sources including data in S3, MongoDB, DynamoDB, Postgres, and more. Next, simply turn the SQL query into an API to serve data in your application.

A sample architecture for real-time analytics on streaming data from Apache Kafka

Let’s walk through a step-by-step example of analyzing real-time order data using a mock dataset from Confluent Cloud’s datagen. In this example, we’ll assume that you already have a Kafka cluster and topic set up.

An Easy 5 Minutes to Get Set Up

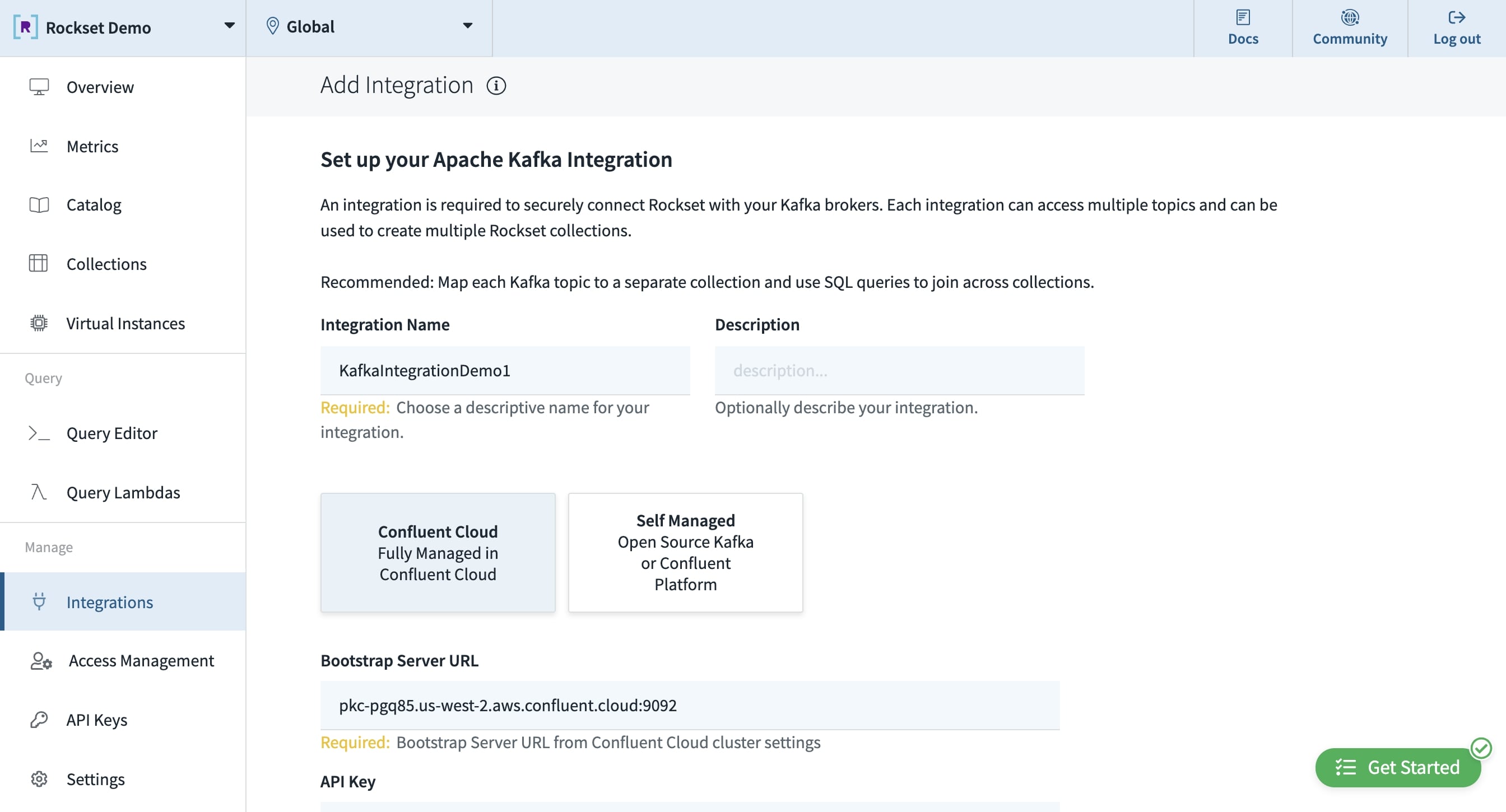

Set Up the Kafka Integration

To set up Rockset’s Kafka Integration, first choose either Apache Kafka or Confluent Cloud as the Kafka source. Then enter the configuration information, including the Kafka-provided endpoint to connect to and, if you’re using the Confluent platform, the API key and secret. For the first version of this release, we are only supporting JSON data (stay tuned for Avro!).

The Rockset console where the Apache Kafka Integration is set up.

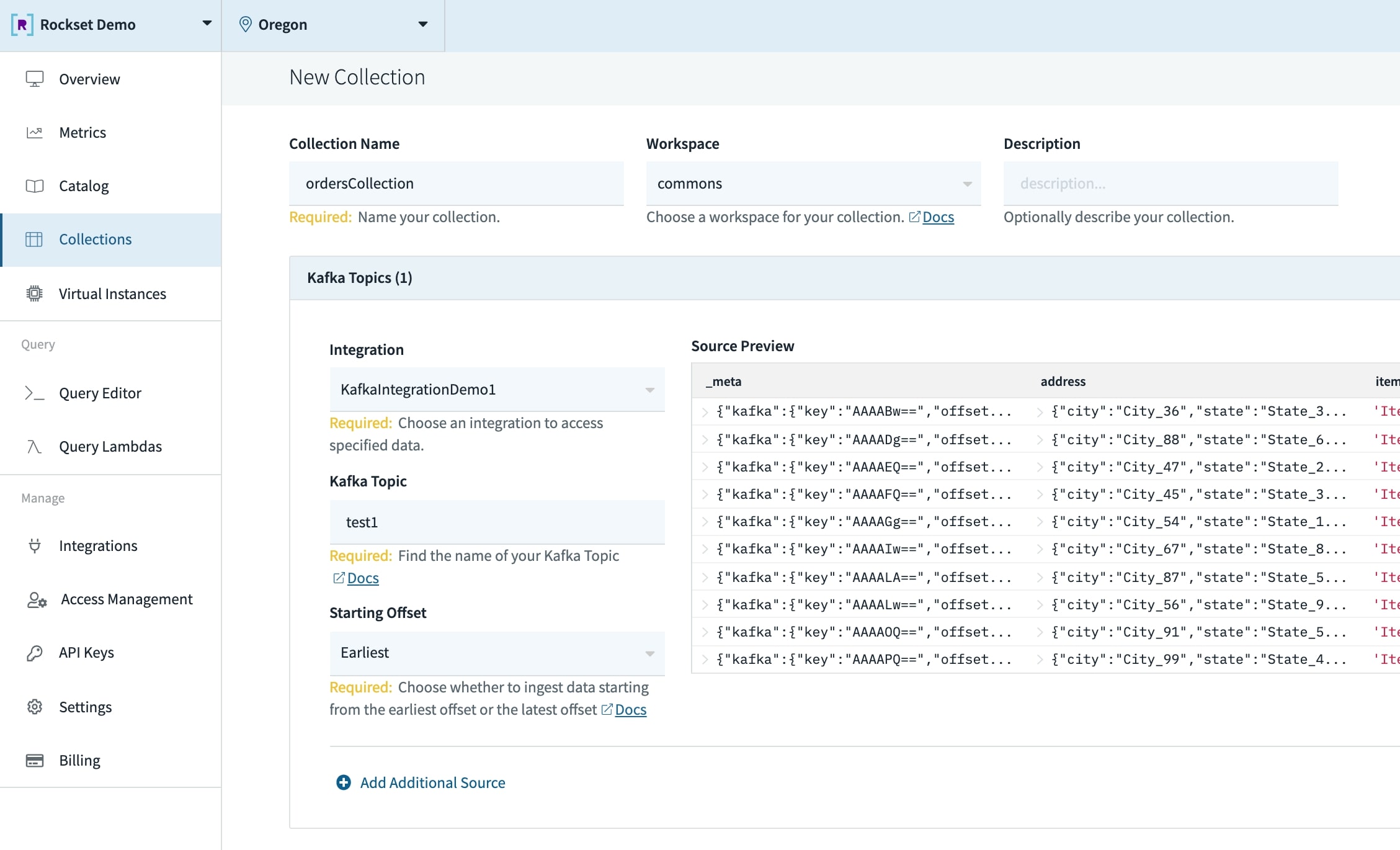

Create a Collection

A collection in Rockset is similar to a table in the SQL world. To create a collection, simply add in details including the name, description, integration and Kafka topic. The starting offset enables you to backfill historical data as well as capture the latest streams.
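The effect of the starting offset can be sketched in a few lines of Python. This is only an illustration of the concept: the function name and the `"earliest"` and `"latest"` labels are assumptions made for this example, not confirmed Rockset option names.

```python
def records_to_ingest(log, starting_offset):
    """Return the records a new collection would read from one partition.

    The "earliest" and "latest" labels are illustrative assumptions.
    """
    if starting_offset == "earliest":
        start = 0          # backfill everything still retained in the topic
    elif starting_offset == "latest":
        start = len(log)   # skip history and wait for new records only
    else:
        raise ValueError(f"unknown starting offset: {starting_offset!r}")
    return log[start:]


log = ["order-1", "order-2", "order-3"]
backfill = records_to_ingest(log, "earliest")
tail_only = records_to_ingest(log, "latest")

print(backfill)   # ['order-1', 'order-2', 'order-3']
print(tail_only)  # []
```

In both cases, records appended to the topic after the collection is created would continue to be ingested; the choice only affects how much history is read first.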

A Rockset collection that is pulling data from Apache Kafka.

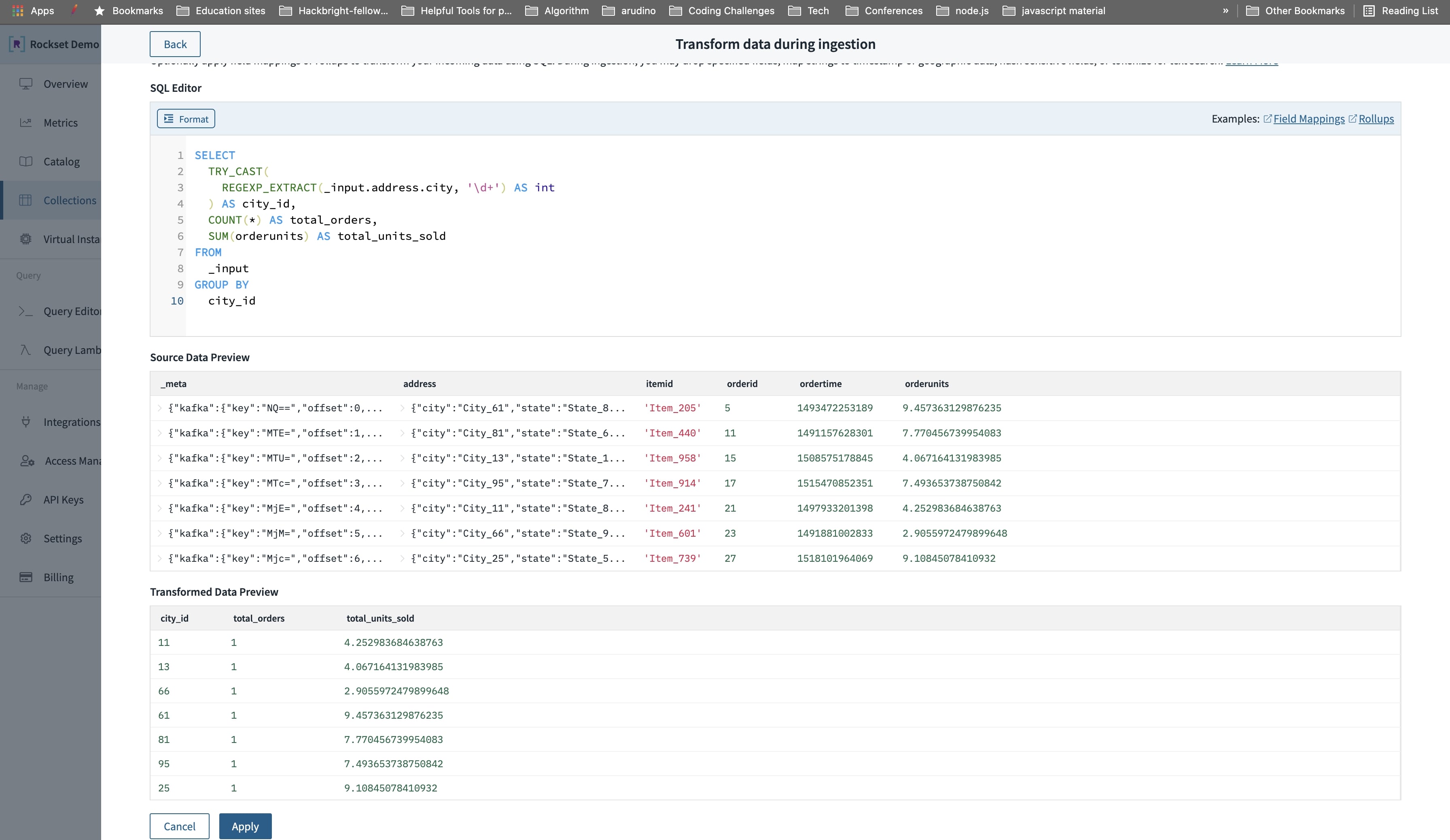

Transform and Rollup Data

At ingest time, you can also transform and roll up event data using SQL to reduce the storage size in Rockset. Rockset rollups support complex SQL expressions and roll up data correctly even when it arrives out of order.

In this example, we’ll do a rollup to aggregate the total units sold (SUM(orderunits)) and total orders made (COUNT(*)) in a particular city.
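To show what this aggregation computes, here is a small Python sketch that runs the same kind of query with the standard-library `sqlite3` module. SQLite is only a stand-in here and this is not Rockset's rollup syntax; the table name `raw_orders`, the flattened `city` column, and the sample rows are assumptions made for the example.

```python
import sqlite3

# Hypothetical raw order events (field names and values are assumed).
events = [
    {"orderid": 1, "city": "City_1", "orderunits": 2.0},
    {"orderid": 2, "city": "City_2", "orderunits": 5.0},
    {"orderid": 3, "city": "City_1", "orderunits": 3.0},
    {"orderid": 4, "city": "City_1", "orderunits": 1.5},
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE raw_orders (orderid INTEGER, city TEXT, orderunits REAL)"
)
conn.executemany(
    "INSERT INTO raw_orders VALUES (:orderid, :city, :orderunits)", events
)

# One output row per city: total units sold and total orders made.
rollup = conn.execute(
    """
    SELECT city,
           SUM(orderunits) AS total_units,
           COUNT(*)        AS total_orders
    FROM raw_orders
    GROUP BY city
    ORDER BY city
    """
).fetchall()

print(rollup)  # [('City_1', 6.5, 3), ('City_2', 5.0, 1)]
```

Four raw events collapse into two aggregated rows, which is where the storage savings of an ingest-time rollup come from.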

A SQL-based rollup at ingest time in the Rockset console.

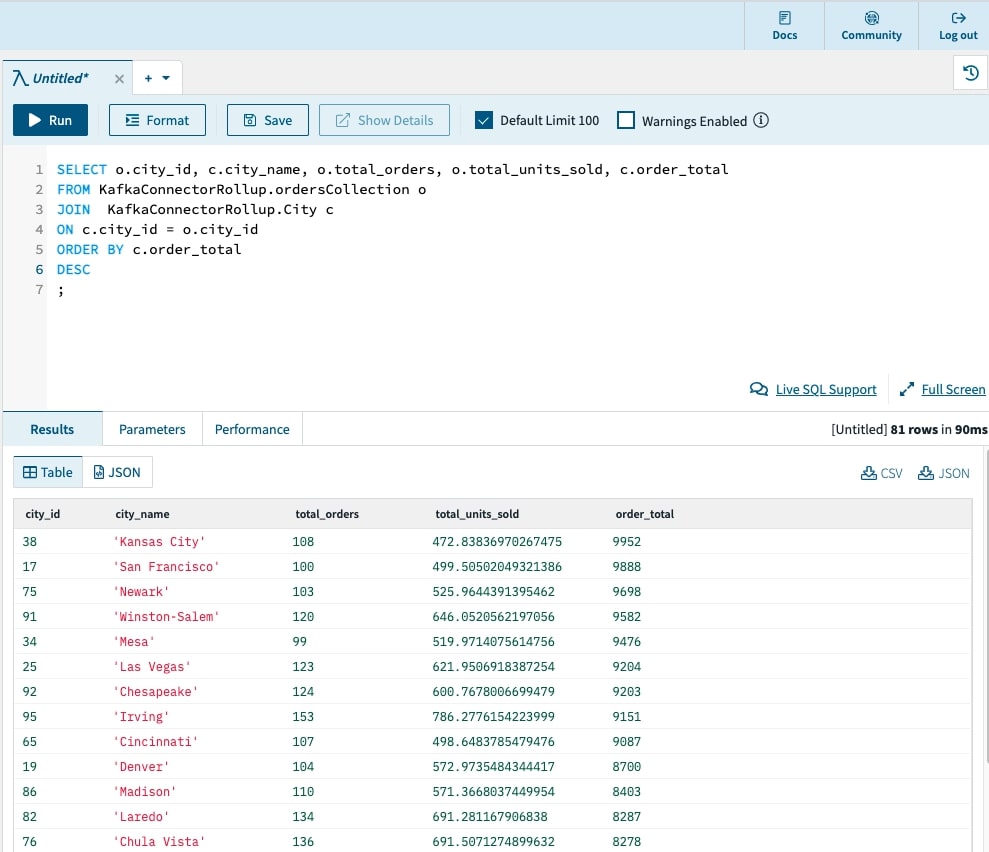

Query Event Data Using SQL

As soon as the data is ingested, Rockset will index the data in a Converged Index for fast analytics at scale. This means you can query semi-structured, deeply nested data using SQL without needing to do any data preparation or performance tuning.

In this example, we’ll write a SQL query to find the city with the highest order volume. We’ll also join the Kafka data with a CSV in S3 of the city IDs and their corresponding names.
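A query of this shape can be sketched with Python's standard-library `sqlite3` module. SQLite stands in for Rockset here, and the table names (`orders_rollup`, `city_names`), column names, and sample rows are all hypothetical. The sketch also assumes "order volume" means the number of orders; ordering by total units instead would be a one-word change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Stand-in for the rolled-up Kafka collection (assumed columns).
    CREATE TABLE orders_rollup (city TEXT, total_units REAL, total_orders INTEGER);
    -- Stand-in for the CSV of city IDs and names loaded from S3.
    CREATE TABLE city_names (city_id TEXT, city_name TEXT);

    INSERT INTO orders_rollup VALUES ('City_1', 6.5, 3), ('City_2', 5.0, 1);
    INSERT INTO city_names VALUES ('City_1', 'Springfield'), ('City_2', 'Shelbyville');
    """
)

# Join the streaming aggregate with the lookup table and keep the top city.
top_city = conn.execute(
    """
    SELECT n.city_name, r.total_orders
    FROM orders_rollup AS r
    JOIN city_names AS n ON n.city_id = r.city
    ORDER BY r.total_orders DESC
    LIMIT 1
    """
).fetchone()

print(top_city)  # ('Springfield', 3)
```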

🙌 The SQL query on streaming data returned in 91 milliseconds!

We’ve been able to go from raw event streams to a fast SQL query in 5 minutes 💥. We also recorded an end-to-end demonstration video so you can better visualize this process.

Embedded content: https://youtu.be/jBGyyVs8UkY

Unlock Streaming Data for Real-Time Analytics

We’re excited to continue to make it easy for developers and data teams to analyze streaming data in real time. If you’ve wanted to make the move from batch to real-time analytics, it’s easier now than ever before. And, you can make that move today. Contact us to join the beta for the new Kafka Integration.

About Boyang Chen - Boyang is a staff software engineer at Rockset and an Apache Kafka Committer. Prior to Rockset, Boyang spent two years at Confluent on various technical initiatives, including Kafka Streams, exactly-once semantics, Apache ZooKeeper removal, and more. He also co-authored the paper Consistency and Completeness: Rethinking Distributed Stream Processing in Apache Kafka. Boyang has also worked on the ads infrastructure team at Pinterest to rebuild the whole budgeting and pacing pipeline. Boyang has bachelor's and master's degrees in computer science from the University of Illinois at Urbana-Champaign.