Office Hours Recap: Optimize Cost and Query Latency With SQL Transformations and Real-Time Rollups

May 25, 2022

Visit our Rockset Community to review previous Office Hours or to see what's coming up.

During our Office Hours a few weeks ago, Tyler and I went over what are SQL transformations and real-time rollups, how to apply them, and how they affect your query performance and index storage size. Below, we’ll cover some of the highlights.

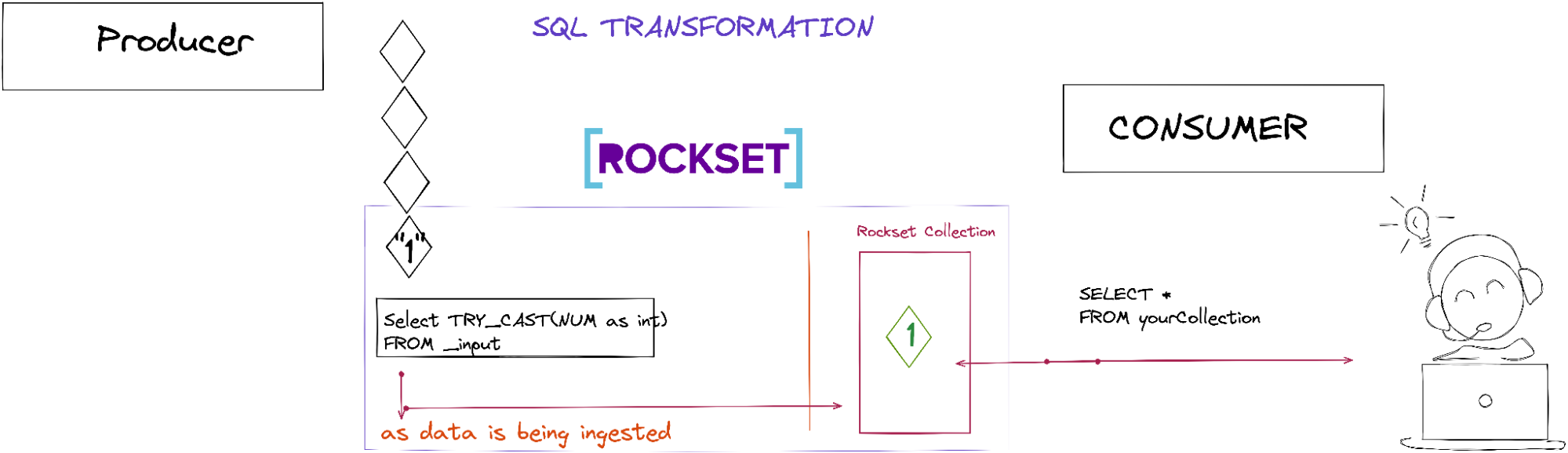

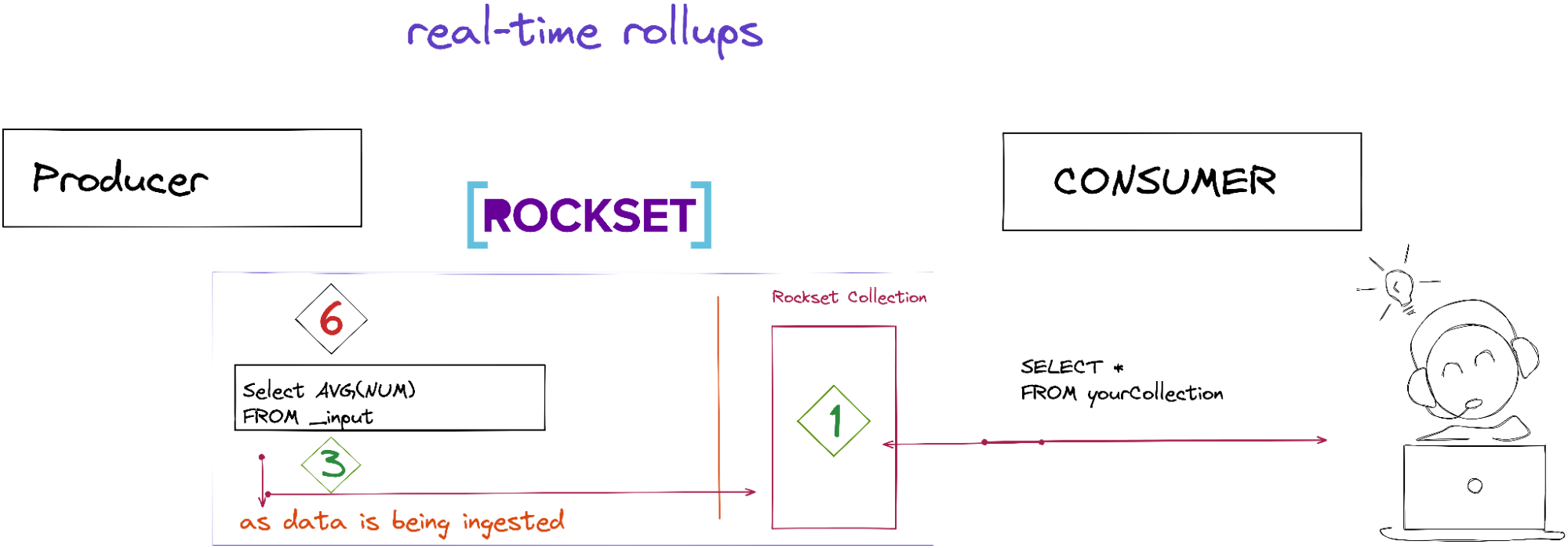

SQL transformations and real-time rollups occur at ingestion time before the Rockset collection is populated with data. Here’s the diagram I did during Rockset Office Hours.

Tyler demonstrated how query performance and storage are impacted when you use SQL transformations and real-time rollups with three different queries. Below, I’ll describe how we built the collection and what we’re doing in the queries.

Initial Query With no SQL Transformations or Rollups Applied

In this query, we’re building a time-series object that grabs the most active tweeters within the last day. There are no SQL transformations or rollups, so the collection contains just the raw data.

-- Initial query against the plain collection 1day: 12sec

with _data as (

SELECT

count(*) tweets,

cast(DATE_TRUNC('HOUR',PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', t.created_at)) as string) as event_date_hour,

t.user.id,

arbitrary(t.user.name) name

FROM

officehours."twitter-firehose" t hint(access_path=column_scan)

where

t.user.id is not null

and t.user.id is not undefined

and PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', t.created_at) > CURRENT_TIMESTAMP() - DAYS(1)

group by

t.user.id,

event_date_hour

order by

event_date_hour desc

),

_intermediate as (

select

array_agg(event_date_hour) _keys,

array_agg(tweets) _values,

id,

arbitrary(name) name

from

_data

group by

_data.id

)

select

object(_keys, _values) as timeseries,

id,

name

from

_intermediate

order by length(_keys) desc

limit 100

Source: GitHub gist

- On line 4 we are counting the total tweets

- On line 7 we are pulling the ARBITRARY for

t.user.name— you can read more about ARBITRARY - On lines 15 and 16 we’re doing aggregations on

t.user.idandevent_date_hour - On line 5 we create the

event_date_hourby doing a CAST - On line 11-12 we filter user.id that is not null or undefined

- On line 13 we get the latest tweeters from the last day

- On lines 14-16 we do a GROUP BY with

t.user.idandevent_date_hour - On lines 20-37 we build our time series object

- On line 38 we return the top 100 tweeters

This inefficient contrived query was run on live data with a medium VI and took about 7 seconds to execute.

Second Query With SQL Transformation Applied Only

In the second query, we applied SQL transformations when we created the collection.

SELECT

*

, cast(DATE_TRUNC('HOUR', PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', i.created_at)) as string) as event_date_hour

, PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', i.created_at) as _event_time

, cast(i.id as string) as id

FROM

_input i

where

i.user.id is not null

and i.user.id is not undefined

Source: GitHub gist

- On line 3, we create an

event_date_hour - On line 4, we create an

event_time - On line 5, we create an id as a string

- On lines 9 and 10, we select

user.idthat is not null or undefined

After we apply the transformations, our SQL query looks more simplified than the initial query:

with _data as (

SELECT

count(*) tweets,

event_date_hour,

t.user.id,

arbitrary(t.user.name) name

FROM

officehours."twitter-firehose_sqlTransformation" t hint(access_path=column_scan)

where

_event_time > CURRENT_TIMESTAMP() - DAYS(1)

group by

t.user.id,

event_date_hour

order by

event_date_hour desc

),

_intermediate as (

select

array_agg(event_date_hour) _keys,

array_agg(tweets) _values,

id,

arbitrary(name) name

from

_data

group by

_data.id

)

select

object(_keys, _values) as timeseries,

id,

name

from

_intermediate

order by length(_keys) desc

limit 100

Source: GitHub gist

- On line 3, we are counting the total tweets

- On line 6 we are pulling the ARBITRARY for

t.user.name - On line 10, the filter is now on the timestamp

- On lines 11-13 we still do a GROUP BY with

t.user.idandevent_date_hour - On lines 17-34 we still create our time-series object

Basically, we excluded whatever we applied during SQL transformations in the query itself. When we run the query, the storage index size doesn’t change too much, but the query performance goes from seven seconds to three seconds or so. By doing SQL transformations, we save on compute, and it shows — the query performs much faster.

Third Query With SQL Transformation and Rollups Applied

In the third query we performed SQL transformations and rollups when we created the collection.

SELECT

count(*) tweets,

cast(DATE_TRUNC('HOUR', PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', i.created_at)) as string) as event_date_hour_str,

DATE_TRUNC('HOUR', PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', i.created_at)) as event_date_hour,

cast(i.user.id as string) id,

arbitrary(i.user.name) name

FROM

_input i

where

i.user.id is not null

and i.user.id is not undefined

group by

i.user.id,

event_date_hour_str,

event_date_hour

Source: GitHub gist

In addition to what we did applied earlier for the SQL transformations, we’re now applying rollups as well.

- On line 2, we are counting all the tweets

- On line 6 we are pulling the ARBITRARY

- On lines 12-15 we are applying the GROUP_BY

So now, our final SQL query looks like this:

with _data as (

SELECT

tweets,

event_date_hour_str,

event_date_hour,

id,

name

FROM

officehours."twitter-firehose-rollup" t hint(access_path=column_scan)

where

t.event_date_hour > CURRENT_TIMESTAMP() - DAYS(1)

order by

event_date_hour desc

),

_intermediate as (

select

array_agg(event_date_hour_str) _keys,

array_agg(tweets) _values,

id,

arbitrary(name) name

from

_data

group by

_data.id

)

select

object(_keys, _values) as timeseries,

id,

name

from

_intermediate

order by length(_keys) desc

Limit 100

Source: GitHub gist

When we apply the SQL transformations with the rollups, our query goes from a womping seven seconds to two seconds. Also, our storage index size goes from 250 GiB to 11 GiB now!

Advantages/Considerations for SQL Transformations and Real-Time Rollups

SQL Transformations

Advantages:

- Improves query performance

- Can drop and mask fields at ingestion time

- Improve compute cost

Consideration:

- Need to know what your data looks like

Real-Time Rollups

Advantages:

- Improves query performance and storage index size

- Data is updated within the second

- Don’t need to worry about out-of-order arrivals

- Exactly-once semantics

- Improve compute cost

Considerations:

- Data resolution — You’ll lose the raw data resolution. If you need a copy of the raw data, create another collection without rollups. If you want to avoid double storage, you can set a retention policy when you create a collection.

Rockset’s SQL-based transformations and rollups allow you to perform data transformation that improves query performance and reduces storage index size. The final data transformation is what is persisted in the Rockset collection. It’s important to note that real-time rollups will continuously run on incoming data. In terms of out-of-order arrivals, Rockset will process them and update the required data exactly as though those events actually arrived in-order and on-time. Finally, Rockset guarantees exactly-once semantics for streaming sources, like Kafka and Kinesis.

You can catch the replay of Tyler’s Office Hours session on the Rockset Community. If you have more questions, please find Tyler and Nadine in the Rockset Community.

Embedded content: https://youtu.be/dUrHqoVKC34

Resources:

Rockset is the leading real-time analytics platform built for the cloud, delivering fast analytics on real-time data with surprising efficiency. Learn more at rockset.com.