Using DynamoDB Single-Table Design with Rockset

February 9, 2023

Background

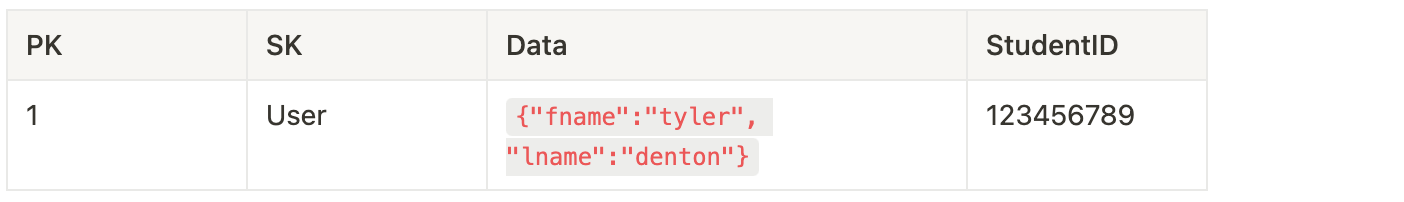

The single table design for DynamoDB simplifies the architecture required for storing data in DynamoDB. Instead of having multiple tables for each record type you can combine the different types of data into a single table. This works because DynamoDB is able to store very wide tables with varying schema. DynamoDB also supports nested objects. This allows users to combine PK as the partition key, SK as the sort key with the combination of the two becoming a composite primary key. Common columns can be used across record types like a results column or data column that stores nested JSON. Or the different record types can have totally different columns. DynamoDB supports both models, or even a mix of shared columns and disparate columns. Oftentimes users following the single table model will use the PK as a primary key within an SK which works as a namespace. An example of this:

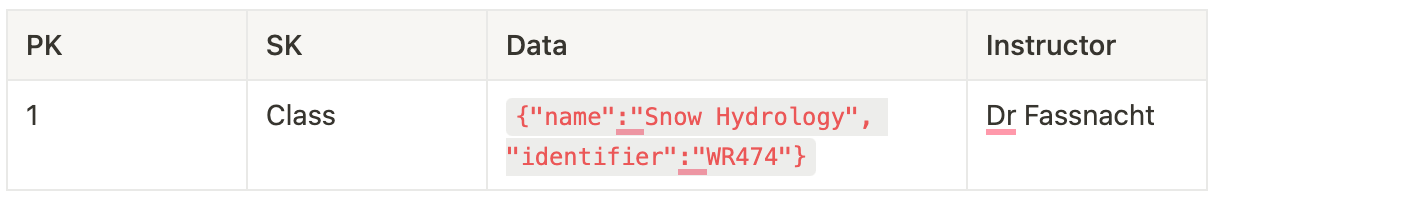

Notice that the PK is the same for both records, but the SK is different. You could imagine a two table model like the following:

and

While neither of these data models is actually a good example of proper data modeling, the example still represents the idea. The single table model uses PK as a primary Key within the namespace of an SK.

How to use the single table model in Rockset

Rockset is a real-time analytics database that is often used in conjunction with DynamoDB. It syncs with data in DynamoDB to offer an easy way to perform queries for which DynamoDB is less suited. Learn more in Alex DeBrie's blog on DynamoDB Filtering and Aggregation Queries Using SQL on Rockset.

Rockset has 2 ways of creating integrations with DynamoDB. The first is to use RCUs to scan the DynamoDB table, and once the initial scan is complete Rockset tails DynamoDB streams. The other method utilizes DynamoDB export to S3 to first export the DynamoDB table to S3, perform a bulk ingestion from S3 and then, after export, Rockset will start tailing the DynamoDB streams. The first method is used for when tables are very small, < 5GB, and the second is much more performant and works for larger DynamoDB tables. Either method is appropriate for the single table method.

Reminder: Rollups cannot be used on DDB.

Once the integration is set up you have a few options to consider when configuring the Rockset collections.

Method 1: Collection and Views

The first and simplest is to ingest all of the table into a single collection and implement views on top of Rockset. So in the above example you would have a SQL transformation that looks like:

-- new_collection

select i.* from _input i

And you would build two views on top of the collection.

-- user view

Select c.* from new_collection c where c.SK = 'User';

and

--class view

select c.* from new_collection c where c.SK='Class';

This is the simplest approach and requires the least amount of knowledge about the tables, table schema, sizes, access patterns, etc. Typically for smaller tables, we start here. Reminder: views are syntactic sugar and will not materialize data, so they must be processed like they are part of the query for every execution of the query.

Method 2: Clustered Collection and Views

This method is very similar to the first method, except that we will implement clustering when making the collection. Without this, when a query that uses Rockset's column index is run, the entire collection must be scanned because there is no actual separation of data in the column index. Clustering will have no impact on the inverted index.

The SQL transformation will look like:

-- clustered_collection

select i.* from _input i cluster by i.SK

The caveat here is that clustering does consume more resources for ingestion, so CPU utilization will be higher for clustered collections vs non-clustered collections. The advantage is queries can be much faster.

The views will look the same as before:

-- user view

Select c.* from new_collection c where c.SK = 'User';

and

--class view

select c.* from new_collection c where c.SK='Class';

Method 3: Separate Collections

Another method to consider when building collections in Rockset from a DynamoDB single table model is to create multiple collections. This method requires more setup upfront than the previous two methods but gives considerable performance benefits. Here we will use the where clause of our SQL transformation to separate the SKs from DynamoDB into separate collections. This allows us to run queries without implementing clustering, or implement clustering inside an individual SK.

-- User collection

Select i.* from _input i where i.SK='User';

and

-- Class collection

Select i.* from _input i where i.SK='Class';

This method does not require views because the data is materialized into individual collections. This is really helpful when splitting out very large tables where queries will use mixes of Rockset's inverted index and column index. The limitation here is that we are going to have to do a separate export and stream from DynamoDB for each collection you want to create.

Method 4: Mix of Separate Collections and Clustering

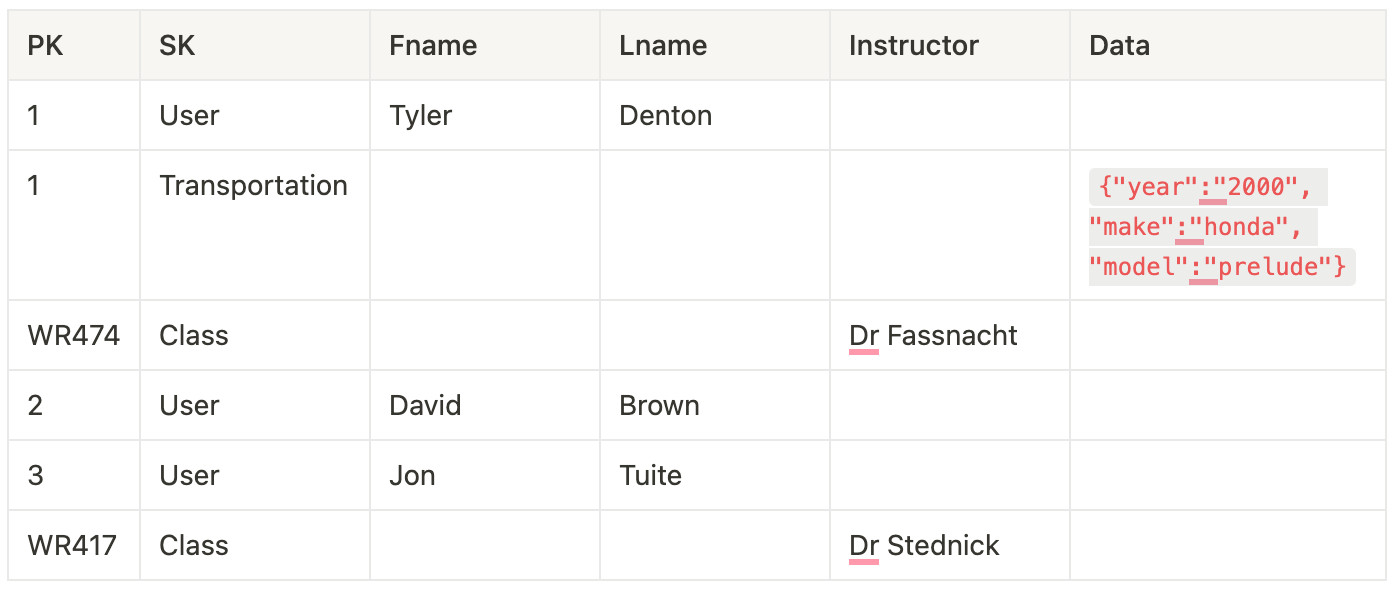

The last method to discuss is the combination of the previous methods. Here you would break out large SKs into separate collections and use clustering and a combined table with views for the smaller SKs.

Take this dataset:

You can build two collections here:

-- user_collection

select i.* from _input i where i.SK='User';

and

-- combined_collection

select i.* from _input i where i.SK != 'User' Cluster By SK;

And then 2 views on top of combined_collection:

-- class_view

select * from combined_collection where SK='Class';

and

-- transportation_view

select * from combined_collection where SK='Transportation';

This gives you the benefits of separating out the large collections from the small collections, while keeping your collection size smaller, allowing other smaller SKs to be added to the DynamoDB table without having to recreate and re-ingest the collections. It also allows the most flexibility for query performance. This option does come with the most operational overhead to setup, monitor, and maintain.

Conclusion

Single table design is a popular data modeling technique in DynamoDB. Having supported numerous DynamoDB users through the development and productionization of their real-time analytics applications, we've detailed several methods for organizing your DynamoDB single table model in Rockset, so you can select the design that works best for your specific use case.