Elasticsearch or Rockset for Real-Time Analytics: Real-Time Ingestion and Indexing

March 15, 2021

When working with a real-time analytics system, you need your database to meet very specific requirements. These include making data available for query as soon as it is ingested and creating proper indexes on the data so that query latency stays very low.

Before data can be ingested, it usually passes through a pipeline that transforms it. You want this pipeline to take as little time as possible, because stale data doesn’t provide any value in a real-time analytics system.

While there’s typically some amount of data engineering required here, there are ways to minimize it. For example, instead of denormalizing the data, you could use a query engine that supports joins. This will avoid unnecessary processing during data ingestion and reduce the storage bloat due to redundant data.

The Demands of Real-Time Analytics

Real-time analytics applications have specific demands (e.g., low data latency and efficient indexing), and your solution can only provide valuable real-time analytics if it meets them. Whether it can meet them depends entirely on how the solution is built. Let’s look at some examples.

Data Latency

Data latency is the time it takes from when data is produced to when it is available to be queried. Logically then, latency has to be as low as possible for real-time analytics.

In most analytics systems today, data is being ingested in massive quantities as the number of data sources continually increases. It is important that real-time analytics solutions be able to handle high write rates in order to make the data queryable as quickly as possible. Elasticsearch and Rockset approach this requirement differently.

Because constantly performing write operations on the storage layer negatively impacts performance, Elasticsearch uses the memory of the system as a caching layer. All incoming data is cached in memory for a certain amount of time, after which Elasticsearch writes the cached data to storage in bulk.

This improves the write performance, but it also increases latency. This is because the data is not available to query until it is written to the disk. While the cache duration is configurable and you can reduce the duration to improve the latency, this means you are writing to the disk more frequently, which in turn reduces the write performance.
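The trade-off described above can be sketched with a toy model. This is not Elasticsearch code: the function name `simulate_buffered_writes` and the rule that a write becomes queryable at the next multiple of the refresh interval are assumptions made purely for illustration.

```python
def simulate_buffered_writes(write_times_ms, refresh_interval_ms):
    """Return (worst_latency_ms, flush_count) for a toy buffered writer.

    Toy assumption: buffered writes become queryable only at the next
    multiple of refresh_interval_ms, when the buffer is flushed in bulk.
    """
    visible_at = [
        -(-t // refresh_interval_ms) * refresh_interval_ms  # round up to next flush
        for t in write_times_ms
    ]
    worst_latency = max(v - t for v, t in zip(visible_at, write_times_ms))
    flush_count = len(set(visible_at))  # flushes that actually carried data
    return worst_latency, flush_count


# 100 writes spread over 10 seconds, one every 100 ms (times in milliseconds).
writes = list(range(50, 10_000, 100))

for interval in (5_000, 1_000, 100):
    worst, flushes = simulate_buffered_writes(writes, interval)
    print(f"interval={interval} ms  worst latency={worst} ms  flushes={flushes}")
```

In this model, shrinking the interval from 5,000 ms to 100 ms lowers the worst-case data latency from 4,950 ms to 50 ms, but raises the number of flushes from 2 to 100. That is the tension between freshness and write throughput in miniature.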

Rockset approaches this problem differently.

Rockset uses a log-structured merge-tree (LSM tree), the storage structure provided by the open-source database RocksDB. Whenever Rockset receives data, it too caches the data in memory, in a structure called the memtable. The difference between this approach and Elasticsearch’s is that Rockset makes this memtable available for queries.

Thus queries can access data in the memory itself and don’t have to wait until it is written to the disk. This almost completely eliminates write latency and allows even existing queries to see new data in memtables. This is how Rockset is able to provide less than a second of data latency even when write operations reach a billion writes a day.

Indexing Efficiency

Indexing data is another crucial requirement for real-time analytics applications. An index can cut query latency dramatically, in some cases by minutes, compared with querying unindexed data. However, depending on how the system is built, creating indexes during data ingestion can be inefficient.

For example, Elasticsearch’s primary node processes an incoming write operation and then forwards the operation to all the replica nodes. The replica nodes in turn perform the same operation locally. This means that Elasticsearch indexes the same data again on every replica node, consuming CPU resources each time.

Rockset takes a different approach here, too. Because Rockset is a primary-less system, write operations are handled by a distributed log. Using RocksDB’s remote compaction feature, only one replica performs indexing and compaction operations remotely in cloud storage. Once the indexes are created, all other replicas just copy the new data and replace the data they have locally. This reduces the CPU usage required to process new data by avoiding having to redo the same indexing operations locally at every replica.
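The difference in indexing work between the two approaches can be sketched as follows. This is a simplified illustration, not either product's implementation: `build_index`, `index_on_every_replica`, and `index_once_then_copy` are hypothetical names, and a small inverted index stands in for the real indexing and compaction work.

```python
import copy


def build_index(docs):
    """Build a tiny inverted index: term -> sorted list of document ids."""
    index = {}
    for doc_id, text in docs.items():
        for term in set(text.lower().split()):
            index.setdefault(term, []).append(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}


def index_on_every_replica(docs, replica_count):
    """Every replica repeats the same indexing work locally."""
    replicas = [build_index(docs) for _ in range(replica_count)]
    return replicas, replica_count  # (replica indexes, number of index builds)


def index_once_then_copy(docs, replica_count):
    """One worker builds the index; replicas only copy the finished result."""
    built = build_index(docs)
    replicas = [copy.deepcopy(built) for _ in range(replica_count)]
    return replicas, 1


docs = {1: "real time analytics", 2: "real time ingestion"}
per_replica, builds_a = index_on_every_replica(docs, replica_count=3)
copied, builds_b = index_once_then_copy(docs, replica_count=3)
print(builds_a, builds_b, per_replica == copied)
```

Both strategies leave every replica with an identical index, but the first performs one index build per replica while the second performs a single build regardless of the replica count.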

Frequently Updated Data

Elasticsearch is primarily designed for full-text search and log analytics use cases. In these cases, once a document is written to Elasticsearch, there is a low probability that it will be updated again.

The way Elasticsearch handles updates is not ideal for real-time analytics, which often involves frequently updated data. Suppose you have a JSON object stored in Elasticsearch and you want to update a key-value pair in that JSON object. When you run the update query, Elasticsearch first queries for the document, loads that document into memory, changes the key-value pair in memory, deletes the document from the disk, and finally creates a new document with the updated data.

Even though only one field of a document needs to be updated, the complete document is deleted and indexed again, which makes the update process inefficient. You could scale up your hardware to increase the speed of reindexing, but that adds to the hardware cost.
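The update sequence described above can be modeled in a few lines. This sketch does not call Elasticsearch: `WholeDocumentStore` and its `fields_indexed` counter are hypothetical, and counting indexed fields is only a rough stand-in for CPU cost.

```python
class WholeDocumentStore:
    """Toy store in which any update re-indexes the entire document."""

    def __init__(self):
        self.docs = {}
        self.fields_indexed = 0  # rough proxy for indexing work performed

    def index(self, doc_id, doc):
        self.docs[doc_id] = dict(doc)
        self.fields_indexed += len(doc)  # every field is indexed

    def update(self, doc_id, changes):
        current = dict(self.docs[doc_id])  # 1. fetch the document into memory
        current.update(changes)            # 2. change the key-value pair in memory
        del self.docs[doc_id]              # 3. delete the old document
        self.index(doc_id, current)        # 4. index the new document in full


store = WholeDocumentStore()
store.index("order-1", {
    "customer": "Ada", "status": "pending", "total": 40,
    "currency": "USD", "items": 3,
})
before = store.fields_indexed
store.update("order-1", {"status": "shipped"})
print(store.fields_indexed - before)  # fields re-indexed for a one-field change
```

In this model, changing a single field of a five-field document costs five field-indexing operations, and the cost grows with document size rather than with the size of the change.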

In contrast, real-time analytics often involves data coming from an operational database, like MongoDB or DynamoDB, which is updated frequently. Rockset was designed to handle these situations efficiently.

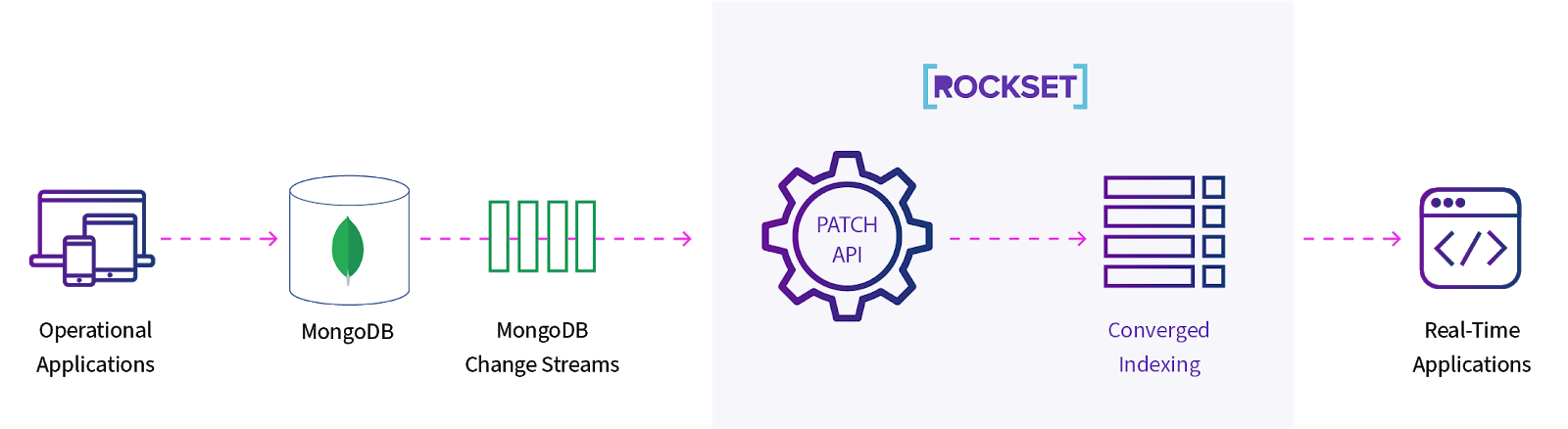

Using a Converged Index, Rockset breaks the data down into individual key-value pairs. Each such pair is stored in three different ways, and all are individually addressable. Thus when the data needs to be updated, only that field will be updated. And only that field will be reindexed. Rockset offers a Patch API that supports this incremental indexing approach.

Figure 1: Use of Rockset’s Patch API to reindex only updated portions of documents

Because only parts of the documents are reindexed, Rockset is very CPU efficient and thus cost efficient. This single-field mutability is especially important for real-time analytics applications where individual fields are frequently updated.
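A minimal sketch of field-level mutability follows. It is not Rockset's Converged Index or its Patch API: `FieldLevelStore` is a hypothetical class, and the three layouts used here (row, column, and inverted) are assumed for illustration of what storing each pair "three different ways" could look like.

```python
from collections import defaultdict


class FieldLevelStore:
    """Toy store that keeps each (document, field) pair in three layouts."""

    def __init__(self):
        self.row = {}                     # (doc_id, field) -> value
        self.column = defaultdict(dict)   # field -> {doc_id: value}
        self.inverted = defaultdict(set)  # (field, value) -> {doc_id, ...}
        self.fields_indexed = 0           # rough proxy for indexing work

    def _set_field(self, doc_id, field, value):
        key = (doc_id, field)
        if key in self.row:
            # Remove the stale inverted entry for the old value only.
            self.inverted[(field, self.row[key])].discard(doc_id)
        self.row[key] = value
        self.column[field][doc_id] = value
        self.inverted[(field, value)].add(doc_id)
        self.fields_indexed += 1

    def insert(self, doc_id, doc):
        for field, value in doc.items():
            self._set_field(doc_id, field, value)

    def patch(self, doc_id, changes):
        """Touch only the fields named in `changes`."""
        for field, value in changes.items():
            self._set_field(doc_id, field, value)


store = FieldLevelStore()
store.insert("order-1", {
    "customer": "Ada", "status": "pending", "total": 40,
    "currency": "USD", "items": 3,
})
before = store.fields_indexed
store.patch("order-1", {"status": "shipped"})
print(store.fields_indexed - before)  # fields re-indexed for a one-field change
```

Here a one-field patch performs one field-indexing operation, no matter how many other fields the document has, because every pair is addressable on its own.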

Joining Tables

Most analytics applications need to join data from two or more tables. Yet Elasticsearch has no native join support. As a result, you might have to denormalize your data so that it is stored in a form that doesn’t require joins for your analytics. Because the data has to be denormalized before it is written, preparing it takes additional time, and that time adds to the delay before the data can be queried.

Conversely, because Rockset provides standard SQL query language support and parallelizes join queries across multiple nodes for efficient execution, it is very easy to join tables for complex analytical queries without having to denormalize the data upon ingest.
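As an illustration of joining at query time instead of denormalizing at ingest, the sketch below uses Python's built-in `sqlite3` module as a stand-in for any SQL engine with join support. It does not use Rockset, and the `customers` and `orders` tables and their contents are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Ada', 'EU'), (2, 'Grace', 'US');
INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 15.0), (12, 2, 40.0);
""")

REVENUE_BY_REGION = """
SELECT c.region, SUM(o.amount) AS revenue
FROM orders AS o
JOIN customers AS c ON c.id = o.customer_id
GROUP BY c.region
ORDER BY c.region
"""

# The tables are ingested as-is; the join happens when the query runs.
rows_before = conn.execute(REVENUE_BY_REGION).fetchall()

# A customer attribute changes in one place; no order rows are rewritten.
conn.execute("UPDATE customers SET region = 'UK' WHERE id = 1")
rows_after = conn.execute(REVENUE_BY_REGION).fetchall()

print(rows_before)
print(rows_after)
```

With denormalized data, the region would be copied into every order record, so the same change would require rewriting each of that customer's orders. With a join, a single row is updated and the next query picks up the new value.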

Interoperability with Sources of Real-Time Data

When you are working on a real-time analytics system, it is a given that you’ll be working with external data sources. The ease of integration is important for a reliable, stable production system.

Elasticsearch offers tools like Beats and Logstash, and other providers and the community offer many more, which allow you to connect data sources such as Amazon S3, Apache Kafka, and MongoDB to your system. For each of these integrations, you have to configure the tool, deploy it, and maintain it. You also have to make sure that the configuration is tested properly and actively monitored, because these integrations are not managed by Elasticsearch.

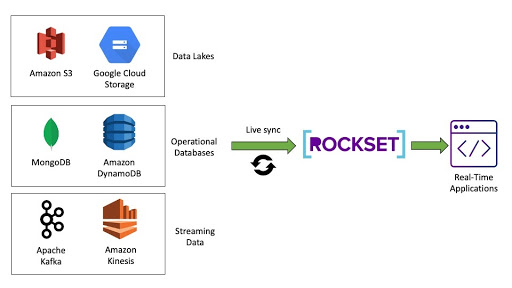

Rockset, on the other hand, provides a much easier click-and-connect solution using built-in connectors. For each commonly used data source (for example S3, Kafka, MongoDB, DynamoDB, etc.), Rockset provides a different connector.

Figure 2: Built-in connectors to common data sources make it easy to ingest data quickly and reliably

You simply point to your data source and your Rockset destination, and obtain a Rockset-managed connection to your source. The connector will continuously monitor the data source for the arrival of new data, and as soon as new data is detected it will be automatically synced to Rockset.
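The monitor-and-sync behavior can be pictured with a small sketch. This is not how Rockset's connectors are implemented: `ToyConnector` is a hypothetical class, Python lists stand in for the external source and the destination collection, and a simple position cursor stands in for whatever change tracking a real connector uses.

```python
class ToyConnector:
    """Toy connector that copies only records it has not seen before."""

    def __init__(self, source, destination):
        self.source = source            # list standing in for an external source
        self.destination = destination  # list standing in for the destination
        self.cursor = 0                 # position of the next unseen record

    def poll(self):
        """Sync any records added since the last poll; return how many."""
        new_records = self.source[self.cursor:]
        self.destination.extend(new_records)
        self.cursor += len(new_records)
        return len(new_records)


source, destination = [{"id": 1}, {"id": 2}], []
connector = ToyConnector(source, destination)

first = connector.poll()   # picks up the two existing records
source.append({"id": 3})   # new data arrives at the source
second = connector.poll()  # picks up only the new record
third = connector.poll()   # nothing new, nothing copied
print(first, second, third, destination)
```

A managed connector runs a loop like this on your behalf, which is the work you would otherwise configure, deploy, and monitor yourself.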

Summary

In previous blogs in this series, we examined the operational factors and query flexibility behind real-time analytics solutions, specifically Elasticsearch and Rockset. While data ingestion may not always be top of mind, it is nevertheless important for development teams to consider the performance, efficiency and ease with which data can be ingested into the system, particularly in a real-time analytics scenario.

When selecting the right real-time analytics solution for your needs, you may need to ask questions to establish how quickly data can be available for querying, taking into account any latency introduced by data pipelines, how costly it would be to index frequently updated data, and how much development and operations effort it would take to connect to your data sources. Rockset was built precisely with the ingestion requirements for real-time analytics in mind.

You can read the Elasticsearch vs Rockset white paper to learn more about the architectural differences between the systems and the migration guide to explore moving workloads to Rockset.

Other blogs in this Elasticsearch or Rockset for Real-Time Analytics series:

- Part 1: Managing Clusters vs Going Serverless

- Part 2: How Much Query Flexibility Do You Have?

- Part 3: Real-Time Ingestion and Indexing