Running Fast SQL on DynamoDB Tables

January 23, 2019

Have you ever wanted to run SQL queries on Amazon DynamoDB tables without impacting your production workloads? Wouldn't it be great to do so without needing to set up an ETL job and then having to manually monitor that job?

In this blog, I will discuss how Rockset integrates with DynamoDB and continuously updates a collection automatically as new objects are added to a DynamoDB table. I will walk through steps on how to set up a live integration between Rockset and a DynamoDB table and run millisecond-latency SQL on it.

DynamoDB Integration

Amazon DynamoDB is a key-value and document database where the key is specified at the time of table creation. DynamoDB supports scan operations over one or more items and also captures table activity using DynamoDB Streams. Using these features, Rockset continuously ingests data from DynamoDB in two steps:

- The first time a user creates a DynamoDB-sourced collection, Rockset does an entire scan of the DynamoDB table.

- After the scan finishes, Rockset continuously processes DynamoDB Streams to account for new or modified records.

To ensure Rockset does not lose any new data which is recorded in the DynamoDB table when the scan is happening, Rockset enables strongly consistent scans in the Rockset-DynamoDB connector, and also creates DynamoDB Streams (if not already present) and records the sequence numbers of existing shards. The continuous processing step (step 2 above) processes DynamoDB Streams starting from the sequence number recorded before the scan.

Primary key values from the DynamoDB table are used to construct the _id field in Rockset to uniquely identify a document in a Rockset collection. This ensures that updates to an existing item in the DynamoDB table are applied to the corresponding document in Rockset.

Connecting DynamoDB to Rockset

For this example, I have created a DynamoDB table programmatically using a Hacker News data set. The data set consists of data about each post and comment on the website. Each field in the dataset is described here. I have included a sample of this data set in our recipes repository.

The table was created using the id field as the partition key for DynamoDB. Also, I had to massage the data set as DynamoDB doesn't accept empty string values. With Rockset, as you will see in the next few steps, you don't need to perform such ETL operations or provide schema definitions to create a collection and make it immediately queryable via SQL.

Creating a Rockset Collection

I will use the Rockset Python Client to create a collection backed by a DynamoDB table. To try this in your environment, you will need to create an Integration (an object that represents your AWS credentials) and set up relevant permissions on the DynamoDB table, which allows Rockset to perform certain read operations on that table.

from rockset import Client

rs=Client(api_key=...)

aws_integration=rs.Integration.retrieve("aws-rockset")

sources=[

rs.Source.dynamo(

table_name="rockset-demo",

integration=aws_integration)]

rockset_dynamodb_demo=rs.Collection.create("rockset-dynamodb-demo", sources=sources)

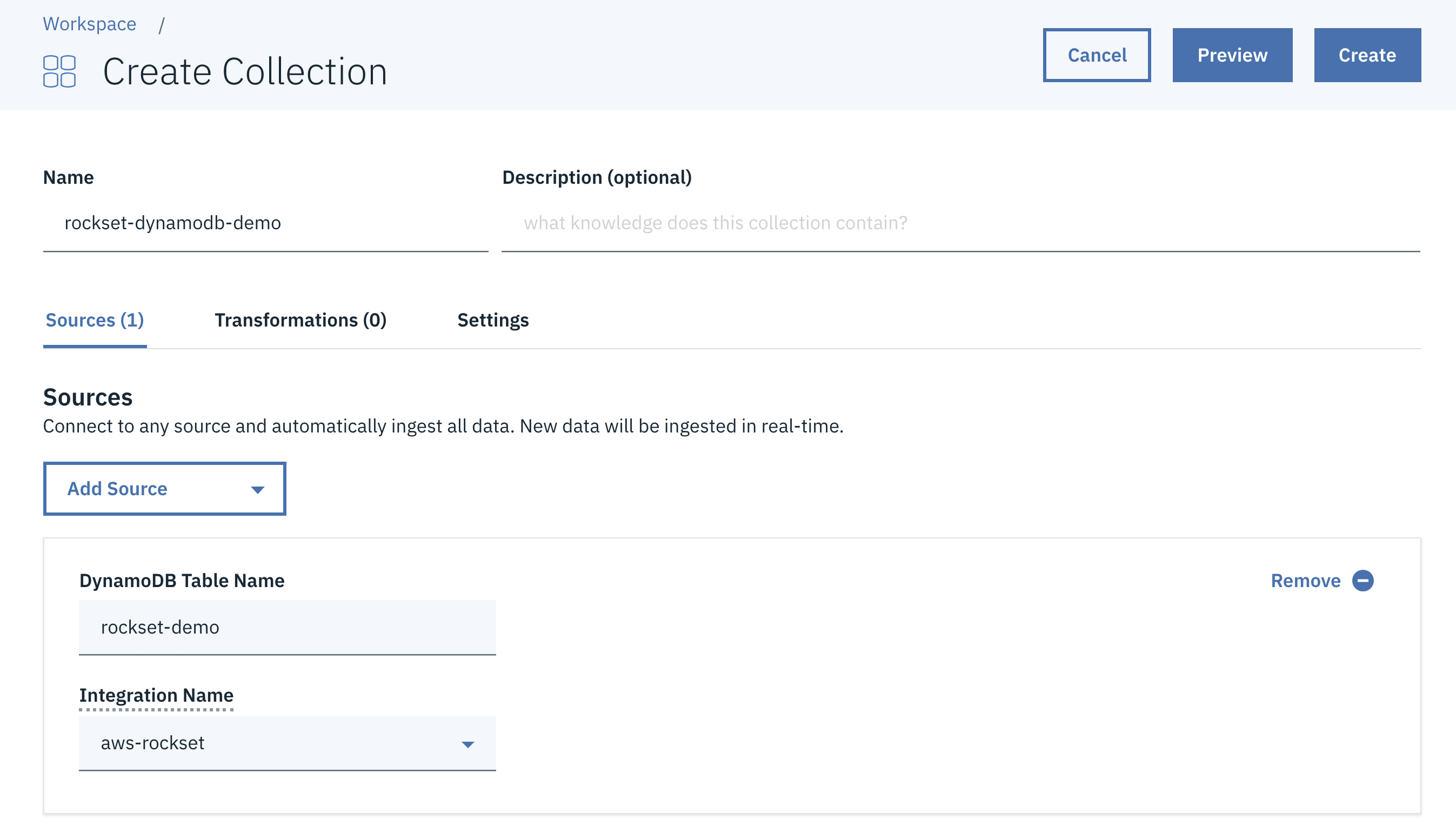

Alternatively, DynamoDB-sourced collections can also be created from the Rockset console, as shown below.

Running SQL on DynamoDB Data

Each document in Rockset corresponds to one row in the DynamoDB table. Rockset automatically infers the schema, as shown below.

rockset> describe "rockset-dynamodb-demo";

+---------------------------------+---------------+----------+-----------+

| field | occurrences | total | type |

|---------------------------------+---------------+----------+-----------|

| ['_event_time'] | 18926775 | 18926775 | timestamp |

| ['_id'] | 18926775 | 18926775 | string |

| ['_meta'] | 18926775 | 18926775 | object |

| ['_meta', 'dynamodb'] | 18926775 | 18926775 | object |

| ['_meta', 'dynamodb', 'table'] | 18926775 | 18926775 | string |

| ['by'] | 18926775 | 18926775 | string |

| ['dead'] | 890827 | 18926775 | bool |

| ['deleted'] | 562904 | 18926775 | bool |

| ['descendants'] | 2660205 | 18926775 | string |

| ['id'] | 18926775 | 18926775 | string |

| ['parent'] | 15716204 | 18926775 | string |

| ['score'] | 3045941 | 18926775 | string |

| ['text'] | 18926775 | 18926775 | string |

| ['time'] | 18899951 | 18926775 | string |

| ['title'] | 18926775 | 18926775 | string |

| ['type'] | 18926775 | 18926775 | string |

| ['url'] | 18926775 | 18926775 | string |

+---------------------------------+---------------+----------+-----------+

Now we are ready to run fast SQL on data from our DynamoDB table. Let's write a few queries to get some insights from this data set.

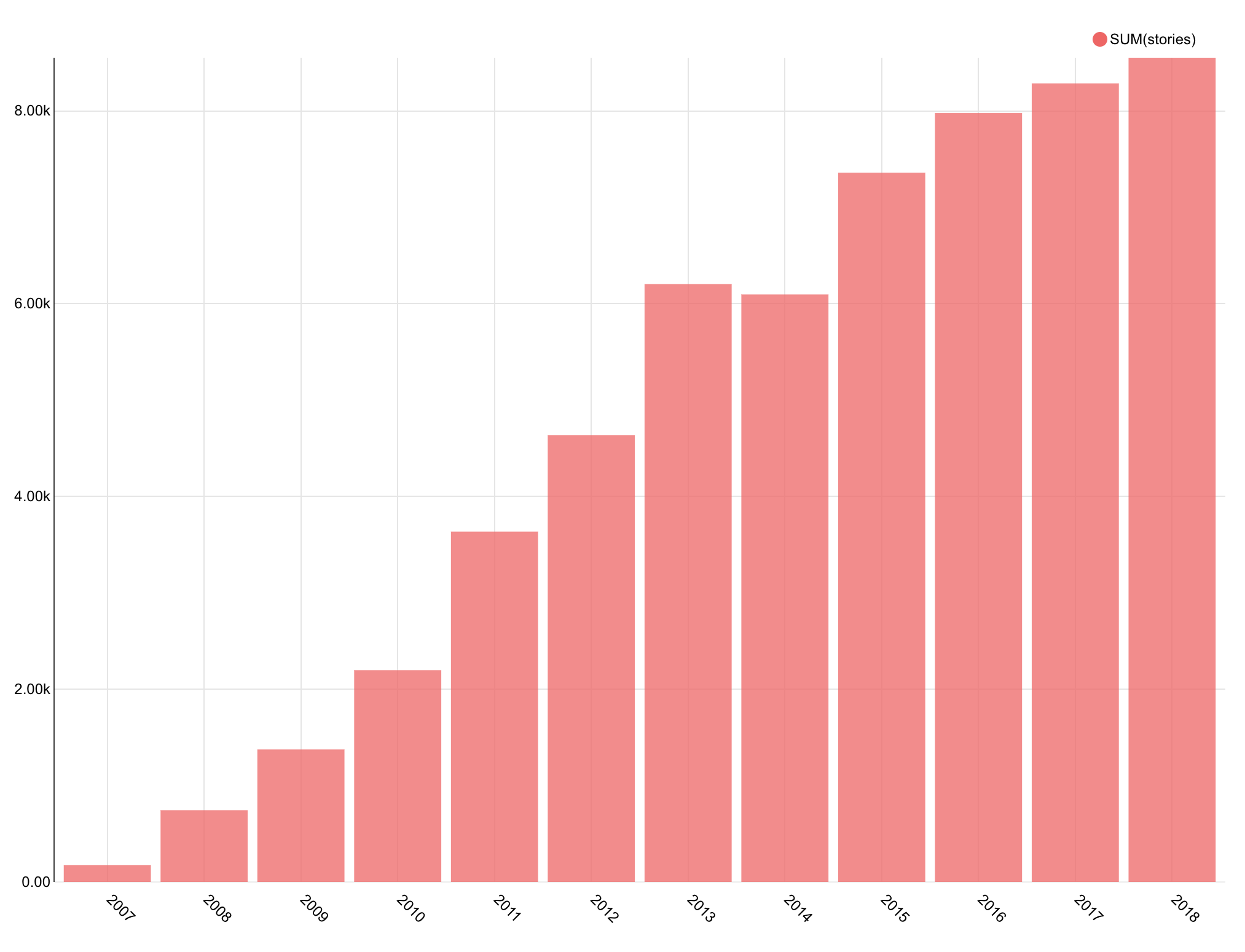

Since we are clearly interested in the topic of data, let's see how frequently people have discussed or shared about "data" on Hacker News over the years. In this query, I am tokenizing the title, extracting the year from the the time field, and returning the number of occurrences of "data" in the tokens, grouped by year.

with title_tokens as (

select rows.tokens as token, subq.year as year

from (

select tokenize(title) as title_tokens,

EXTRACT(YEAR from DATETIME(TIMESTAMP_SECONDS(time::int))) as year

from "rockset-dynamodb-demo"

) subq, unnest(title_tokens as tokens) as rows

)

select year, count(token) as stories

from title_tokens

where lower(token) = 'data'

group by year order by year

+-----------+------+

| stories | year |

|-----------+------|

| 176 | 2007 |

| 744 | 2008 |

| 1371 | 2009 |

| 2192 | 2010 |

| 3624 | 2011 |

| 4621 | 2012 |

| 6164 | 2013 |

| 6020 | 2014 |

| 7224 | 2015 |

| 7878 | 2016 |

| 8159 | 2017 |

| 8438 | 2018 |

+-----------+------+

Using Apache Superset integration with Rockset, I plotted a graph with the results. (It is possible to use data visualization tools like Tableau, Redash, and Grafana as well.)

The number of stories concerning data has clearly been increasing over time.

Next, let's mine the Hacker News data set for observations on one of the most talked-about technologies of the past two years, blockchain. Let's first check how user engagement around blockchain and cryptocurrencies has been trending.

with title_tokens as (

select rows.tokens as token, subq.year as year

from (

select tokenize(title) as title_tokens,

EXTRACT(YEAR from DATETIME(TIMESTAMP_SECONDS(time::int))) as year

from "rockset-dynamodb-demo"

) subq, unnest(title_tokens as tokens) as rows

)

select year, count(token) as count

from title_tokens

where lower(token) = 'crypto' or lower(token) = 'blockchain'

group by year order by year

+---------------+--------+

| count | year |

|---------------+--------|

| 6 | 2008 |

| 26 | 2009 |

| 35 | 2010 |

| 43 | 2011 |

| 75 | 2012 |

| 278 | 2013 |

| 431 | 2014 |

| 750 | 2015 |

| 1383 | 2016 |

| 2928 | 2017 |

| 5550 | 2018 |

+-----------+------------+

As you can see, interest in blockchain went up immensely in 2017 and 2018. The results are also aligned with this study, which estimated that the number of crypto users doubled in 2018.

Also, along with blockchain, hundreds of cryptocurrencies emerged. Let's find the most popular coins in our data set.

with crypto_text_tokens as (

select rows.tokens as token

from (

select tokenize(text) as text_tokens

from (

select text as text

from "rockset-dynamodb-demo"

where text like '%crypto%' or text like '%blockchain%'

) subq

) subq, unnest(text_tokens as tokens) as rows

)

select token, count(token) as count

from crypto_text_tokens

where token like '_%coin'

group by token

order by count desc

limit 10;

+---------+------------+

| count | token |

|---------+------------|

| 29197 | bitcoin |

| 512 | litecoin |

| 454 | dogecoin |

| 433 | cryptocoin |

| 362 | namecoin |

| 239 | altcoin |

| 219 | filecoin |

| 122 | zerocoin |

| 81 | stablecoin |

| 69 | peercoin |

+---------+------------+

Bitcoin, as one would have guessed, seems to be the most popular cryptocurrency in our Hacker News data.

Summary

In this entire process, I simply created a Rockset collection with a DynamoDB source, without any data transformation and schema modeling, and immediately ran SQL queries over it. Using Rockset, you too can join data across different DynamoDB tables or other sources to power your live applications.

Other DynamoDB resources:

- DynamoDB Filtering and Aggregation Queries Using SQL on Rockset

- Analytics on DynamoDB: Comparing Athena, Spark and Elastic

- Real-Time Analytics on DynamoDB - Using DynamoDB Streams with Lambda and ElastiCache

- Tableau Operational Dashboards and Reporting on DynamoDB - Evaluating Redshift and Athena

- Using Tableau with DynamoDB: How to Build a Real-Time SQL Dashboard on NoSQL Data