Building Data Applications Powered by Real-Time Analytics

May 11, 2021

For long-term success with real-time analytics, it is important to use the right tool for the job. Data applications are an emerging breed of applications that demand sub-second analytics on fresh data. Examples include logistics tracking, gaming leaderboards, investment decision systems, connected devices, and embedded dashboards in SaaS apps.

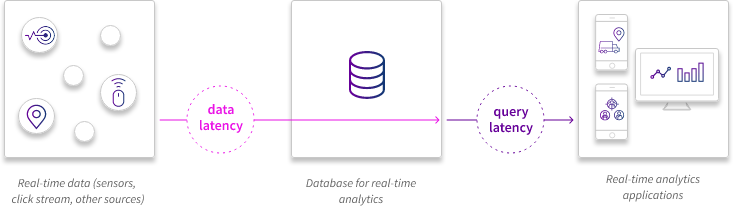

Real-time analytics is all about using data as soon as it is produced to answer questions, make predictions, understand relationships, and automate processes.

Typically, data applications require sub-second query latency because they are user-facing, but their data latency requirements may range from a few milliseconds to a few hours, depending on the use case.
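To make the two kinds of latency concrete, here is a minimal Python sketch. The `QueryTrace` type, its field names, and the timestamps are hypothetical and exist only for illustration; they are not taken from any particular platform.

```python
from dataclasses import dataclass


@dataclass
class QueryTrace:
    """Hypothetical timestamps (in seconds) for one event and one query."""
    event_created_at: float    # when the source system produced the event
    event_queryable_at: float  # when the event became visible to queries
    query_sent_at: float       # when the application issued the query
    query_answered_at: float   # when the application received the result


def data_latency(trace: QueryTrace) -> float:
    """How stale the data is: time from creation until it can be queried."""
    return trace.event_queryable_at - trace.event_created_at


def query_latency(trace: QueryTrace) -> float:
    """How long the user waits: time from sending a query to the answer."""
    return trace.query_answered_at - trace.query_sent_at


# Illustrative values only.
trace = QueryTrace(
    event_created_at=100.0,
    event_queryable_at=102.5,
    query_sent_at=110.0,
    query_answered_at=110.25,
)
print(data_latency(trace), query_latency(trace))
```

In this made-up trace the data is 2.5 seconds stale while the query itself returns in a quarter of a second. The two numbers are independent, which is why a use case can tolerate hours of data latency and still need sub-second query latency.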

To future-proof yourself as you explore your options for real-time analytics platforms, look for three key criteria that massively successful data applications share:

- Scaling performance without proportionally scaling cost - it has been said that with enough thrust, even pigs can fly. It may be tempting to throw more resources at existing systems in a bid to eke out more performance, but the question is: how can you get the real-time performance you need without sending your compute cost through the roof?

- Flexibility to adapt to changing queries - with more developers embedding real-time analytics into applications, it is important to recognize that product requirements will change constantly, so embracing flexibility as a core design principle is the key to long-term success. Some systems require you to denormalize your data and do extensive data preparation upfront, which makes it harder to adapt when queries change. When dealing with nested JSON, look for real-time analytics platforms that have built-in UNNEST capabilities to give developers and product teams the flexibility they need to move fast.

- Ability to stay in sync with any type of data source - your data may be coming from your lake, stream, or transactional database. For example, what happens when you have an event stream like Kafka but also dimension tables in a transactional database like MySQL or Postgres? Many time series databases are append-only, which means they can insert new data but cannot update or delete it, and that in turn causes performance problems down the road. Instead, look for real-time analytics platforms that are fully mutable.
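The second and third criteria can be sketched in a few lines of Python. This is an in-memory illustration only: `unnest`, `MutableTable`, and the sample records are hypothetical names and data, not the API of any real analytics platform. The first part mimics what a SQL-style UNNEST does to a nested JSON array, and the second shows why mutability matters when a stream of events is joined against a dimension table that keeps changing.

```python
from typing import Any, Dict, Iterator


def unnest(row: Dict[str, Any], array_field: str) -> Iterator[Dict[str, Any]]:
    """Yield one flat row per element of row[array_field], UNNEST-style."""
    parent = {k: v for k, v in row.items() if k != array_field}
    for element in row.get(array_field, []):
        yield {**parent, **element}


class MutableTable:
    """Toy keyed table that supports updates and deletes, not just appends."""

    def __init__(self, key: str) -> None:
        self.key = key
        self.rows: Dict[Any, Dict[str, Any]] = {}

    def upsert(self, row: Dict[str, Any]) -> None:
        # Insert a new row, or overwrite the existing row with the same key.
        self.rows[row[self.key]] = row

    def delete(self, key_value: Any) -> None:
        self.rows.pop(key_value, None)


# Nested JSON document (hypothetical order record).
order = {
    "order_id": 1,
    "customer": "acme",
    "items": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}],
}
flat_rows = list(unnest(order, "items"))

# Dimension table mirrored from a transactional database (hypothetical).
customers = MutableTable(key="customer_id")
customers.upsert({"customer_id": 7, "tier": "free"})
customers.upsert({"customer_id": 7, "tier": "paid"})  # update replaces old row

# Events from a stream are joined against the current dimension row.
events = [{"customer_id": 7, "action": "login"}]
joined = [{**event, **customers.rows[event["customer_id"]]} for event in events]
print(flat_rows)
print(joined)
```

With an append-only store, the second `upsert` would leave two rows for customer 7, and every query would have to work out which one is current. A mutable table keeps a single up-to-date row, so the join stays simple.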

This approach is based on lessons learned from successful real-time analytics implementations at cloud scale, including Facebook’s newsfeed. It allows for massive growth without increasing cost or slowing down teams.

Time to market is the most important currency for fast-moving companies building data applications. The best thing an engineering leader can do to ensure rapid success with real-time analytics is to adopt a cloud-native strategy. Serverless data stacks have proven to be the easiest to adopt, with many teams reporting that the time to successful implementation has gone down from six months to one week with a cloud-native real-time analytics platform. Real-time analytics is a prime example of a workload with a lot of variability in both the volume of data and the number of queries coming in. This type of variability is extremely expensive and difficult to architect for on-premises but scales nicely in the cloud.
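A rough back-of-the-envelope sketch shows why variable workloads are costly to provision for up front. The hourly load figures and the unit price below are made up for illustration, and the sketch assumes the same price per compute unit in both models, which will not hold exactly in practice.

```python
# Hypothetical compute units needed in each of six consecutive hours.
hourly_load = [2, 2, 3, 20, 4, 2]
unit_price = 1.0  # assumed cost of one compute unit for one hour


def fixed_capacity_cost(load, price):
    """Provision for the peak hour and pay for that capacity every hour."""
    return max(load) * len(load) * price


def elastic_cost(load, price):
    """Pay only for the compute actually used in each hour."""
    return sum(load) * price


print(fixed_capacity_cost(hourly_load, unit_price))
print(elastic_cost(hourly_load, unit_price))
```

Under these assumptions, sizing for the single spike costs 120 units against 33 for elastic capacity. The spikier the workload, the wider that gap becomes.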

When you’re building data applications, your mandate is simple:

- make it easy for your developers to build delightful products

- make sure your infra scales seamlessly with you

Data applications powered by real-time analytics are becoming the biggest competitive differentiators in a variety of industries. Just as a CMO wouldn’t be caught dead without investing in a CRM platform early, the most forward-looking CIOs and CTOs are investing in real-time analytics platforms early and enabling their teams to move faster than their competitors.