Joining Streaming and Historical Data for Real-Time Analytics: Your Options With Snowflake, Snowpipe and Rockset

June 21, 2022

We’re excited to announce that Rockset’s new connector with Snowflake is now available and can increase cost efficiencies for customers building real-time analytics applications. The two systems complement each other well, with Snowflake designed to process large volumes of historical data and Rockset built to provide millisecond-latency queries, even when tens of thousands of users are querying the data concurrently. Using Snowflake and Rockset together can meet both batch and real-time analytics requirements needed in a modern enterprise environment, such as BI and reporting, developing and serving machine learning, and even delivering customer-facing data applications to their customers.

What’s Needed for Real-Time Analytics?

These real-time, user-facing applications include personalization, gamification or in-app analytics. For example, in the case of a customer browsing an ecommerce store, the modern retailer wants to optimize the customer's experience and revenue potential while engaged on the store site, so will apply real-time data analytics to personalize and enhance the customer’s experience during the shopping session.

For these data applications, there is invariably a need to combine streaming data–often from Apache Kafka or Amazon Kinesis, or possibly a CDC stream from an operational database–with historical data in a data warehouse. As in the personalization example, the historical data could be demographic information and purchase history, while the streaming data could reflect user behavior in real time, such as a customer’s engagement with the website or ads, their location or their up-to-the-moment purchases. As the need to operate in real time increases, there will be many more instances where organizations will want to bring in real-time data streams, join them with historical data and serve sub-second analytics to power their data apps.

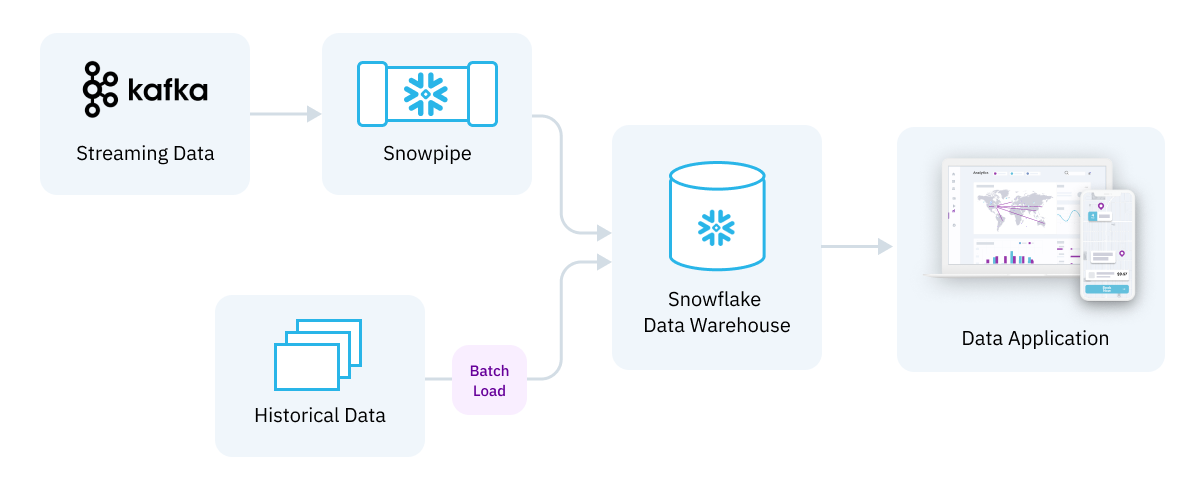

The Snowflake + Snowpipe Option

One alternative to analyze both streaming and historical data together would be to use Snowflake in conjunction with their Snowpipe ingestion service. This has the benefit of landing both streaming and historical data into a single platform and serving the data app from there. However, there are multiple limitations to this option, particularly if query optimization and ingest latency are critical for the application, as outlined below.

While Snowflake has modernized the data warehouse ecosystem and allowed enterprises to benefit from cloud economics, it is primarily a scan-based system designed to run large-scale aggregations periodically across large historical data sets, typically by an analyst running BI reports or a data scientist training an ML model. When running real-time workloads that require sub-second latency for tens of thousands of queries running concurrently, Snowflake may be too slow or expensive for the task. Snowflake can be scaled by spinning up more warehouses to attempt to meet the concurrency requirements, but that likely is going to come at a cost that will grow rapidly as data volume and query demand increase.

Snowflake is also optimized for batch loads. It stores data in immutable partitions and therefore works most efficiently when these partitions can be written in full, as opposed to writing small numbers of records as they arrive. Typically, new data could be hours or tens of minutes old before it is queryable within Snowflake. Snowflake’s Snowpipe ingestion service was introduced as a micro-batching tool that can bring that latency down to minutes. While this mitigates the issue with data freshness to some extent, it still does not sufficiently support real-time applications where actions need to be taken on data that is seconds old. Additionally, forcing the data latency down on an architecture built for batch processing necessarily means that an inordinate amount of resources will be consumed, thus making Snowflake real-time analytics cost prohibitive with this configuration.

In sum, most real-time analytics applications are going to have query and data latency requirements that are either impossible to meet using a batch-oriented data warehouse like Snowflake with Snowpipe, or attempting to do so would prove too costly.

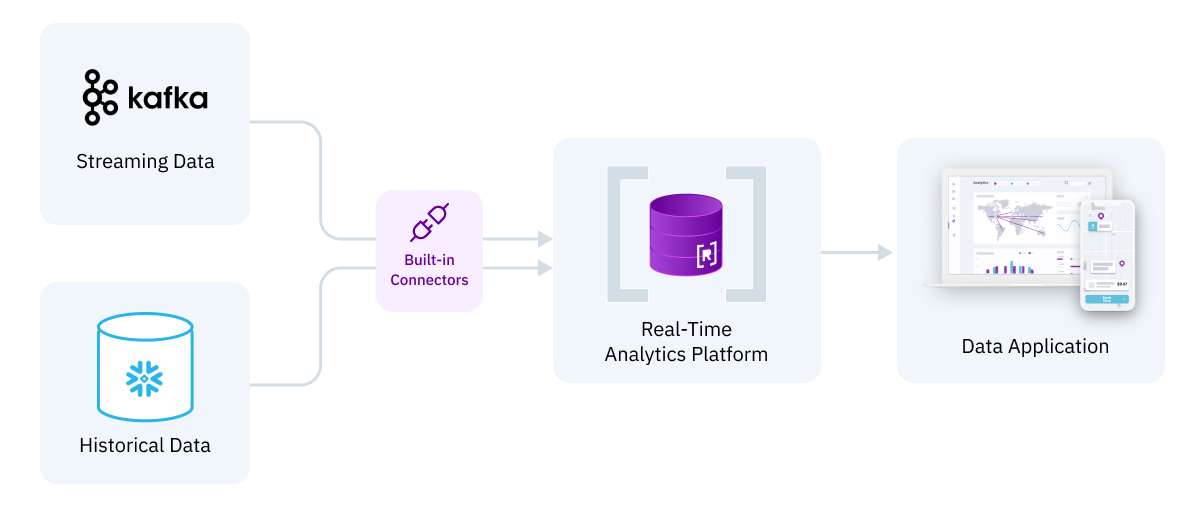

Rockset Complements Snowflake for Real-Time Analytics

The recently introduced Snowflake-Rockset connector offers another option for joining streaming and historical data for real-time analytics. In this architecture, we use Rockset as the serving layer for the application as well as the sink for the streaming data, which could come from Kafka as one possibility. The historical data would be stored in Snowflake and brought into Rockset for analysis using the connector.

The advantage of this approach is that it uses two best-of-breed data platforms–Rockset for real-time analytics and Snowflake for batch analytics–that are best suited for their respective tasks. Snowflake, as noted above, is highly optimized for batch analytics on large data sets and bulk loads. Rockset, in contrast, is a real-time analytics platform that was built to serve sub-second queries on real-time data. Rockset efficiently organizes data in a Converged Index™, which is optimized for real-time data ingestion and low-latency analytical queries. Rockset’s ingest rollups enable developers to pre-aggregate real-time data using SQL without the need for complex real-time data pipelines. As a result, customers can reduce the cost of storing and querying real-time data by 10-100x. To learn how Rockset architecture enables fast, compute-efficient analytics on real-time data, read more about Rockset Concepts, Design & Architecture.

Rockset + Snowflake for Real-Time Customer Personalization at Ritual

One company that uses the combination of Rockset and Snowflake for real-time analytics is Ritual, a company that offers subscription multivitamins for purchase online. Using a Snowflake database for ad-hoc analysis, periodic reporting and machine learning model creation, the team knew from the outset that Snowflake would not meet the sub-second latency requirements of the site at scale and looked to Rockset as a potential speed layer. Connecting Rockset with data from Snowflake, Ritual was able to start serving personalized offers from Rockset within a week at the real-time speeds they needed.

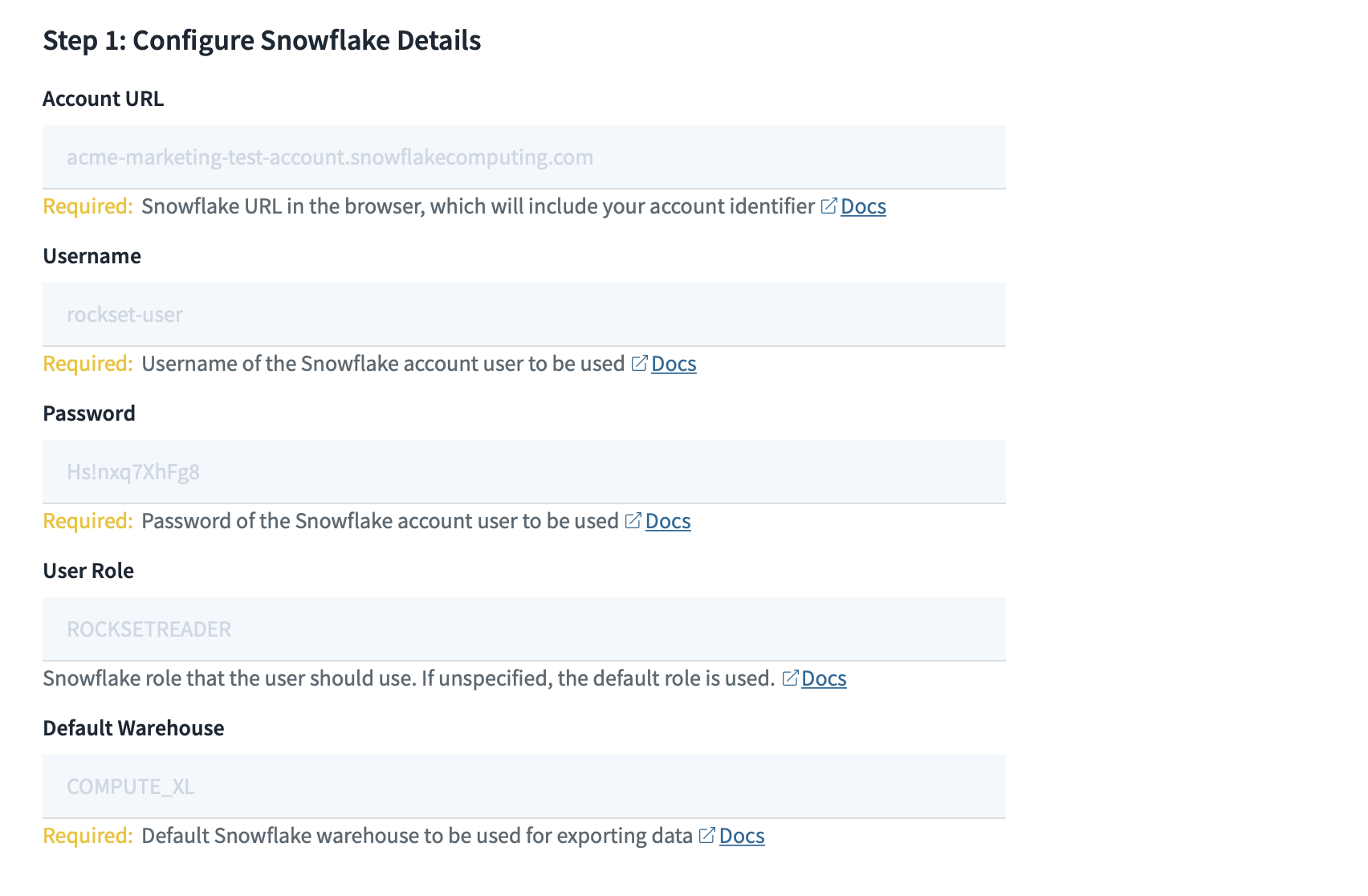

Connecting Snowflake to Rockset

It’s simple to ingest data from Snowflake into Rockset. All you need to do is provide Rockset with your Snowflake credentials and configure AWS IAM policy to ensure proper access. From there, all the data from a Snowflake table will be ingested into a Rockset collection. That’s it!

Rockset’s cloud-native ALT architecture is fully disaggregated and scales each component independently as needed. This allows Rockset to ingest TBs of data from Snowflake (or any other system) in minutes and gives customers the ability to create a real-time data pipeline between Snowflake and Rockset. Coupled with Rockset’s native integrations with Kafka and Amazon Kinesis, the Snowflake connector with Rockset can now enable customers to join both historical data stored in Snowflake and real-time data directly from streaming sources.

We invite you to start using the Snowflake connector today! For more information, please visit our Rockset-Snowflake documentation.

You can view a short demo of how this might be implemented in this video:

Embedded content: https://www.youtube.com/watch?v=GSlWAGxrX2k

Rockset is the leading real-time analytics platform built for the cloud, delivering fast analytics on real-time data with surprising efficiency. Learn more at rockset.com.